JSON prompting for LLMs, explained with examples:

What is JSON prompting?

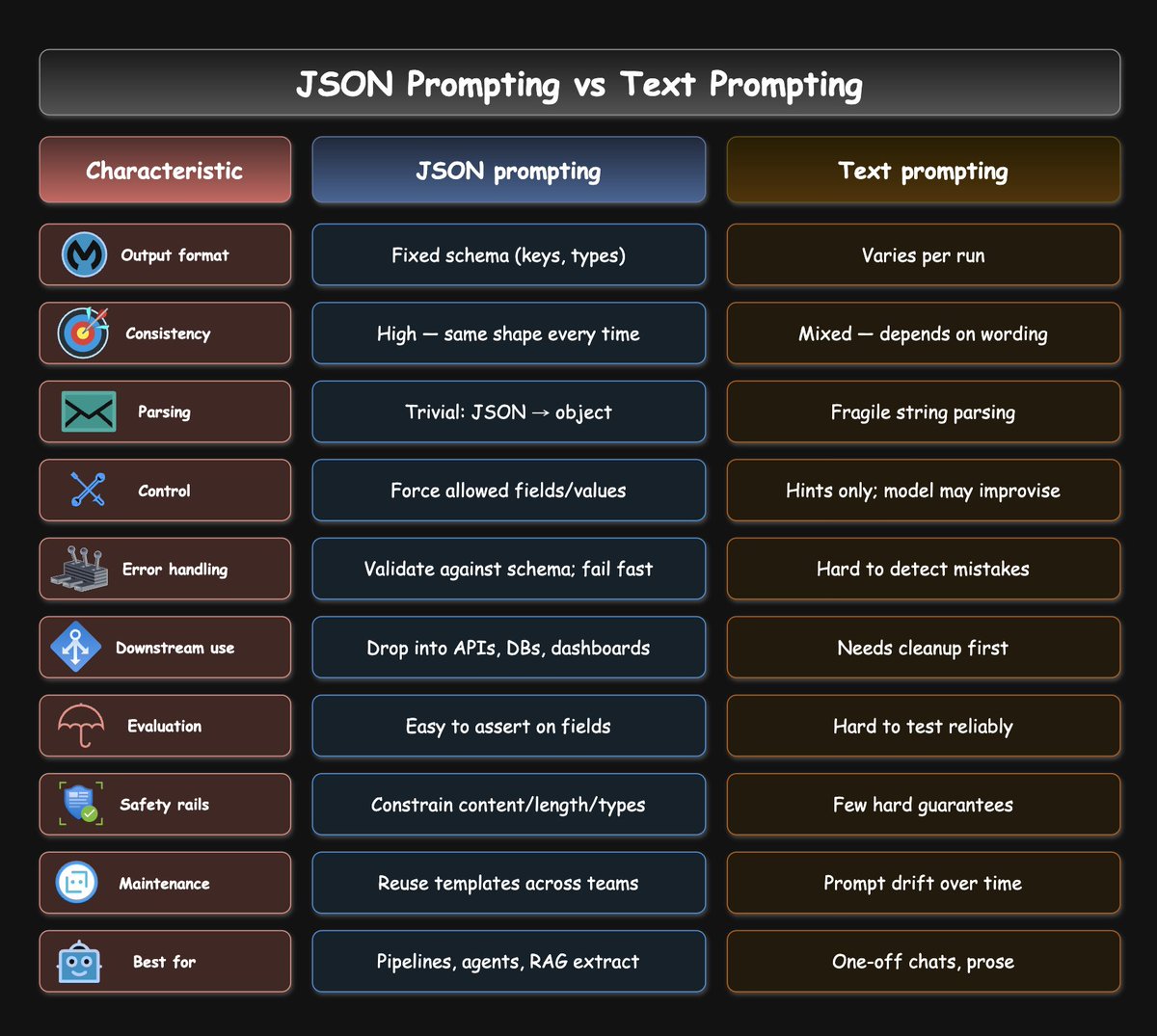

JSON prompting means writing your LLM prompt in a clear, structured format (keys and values) and asking for the response in the same structure.

Text prompts → inconsistent, messy outputs

JSON prompts → more consistent, parseable data
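
Here's a minimal Python sketch of the difference. The field names (`task`, `input`, `output_format`, `instructions`) are illustrative choices, not a standard, and the email text is made up. It only builds the prompt string; sending it to a model is left out.

```python
import json

# Free-form text prompt: the model has to guess length, format, and focus.
text_prompt = "Summarize this email and give key takeaways."

# Made-up input for the example.
email = "Hi team, the launch moves to March 3. Please update the docs by Friday."

# The same request as a JSON prompt. Field names are illustrative, not a standard.
json_prompt = {
    "task": "summarize_email",
    "input": email,
    "output_format": {
        "summary": "string, one sentence",
        "key_takeaways": ["string"],
        "action_items": [
            {"owner": "string", "task": "string", "due": "string or null"}
        ],
    },
    "instructions": "Respond with JSON only, matching output_format exactly.",
}

# Serialize to the text you would actually send to the model.
prompt_text = json.dumps(json_prompt, indent=2)
print(prompt_text)
```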

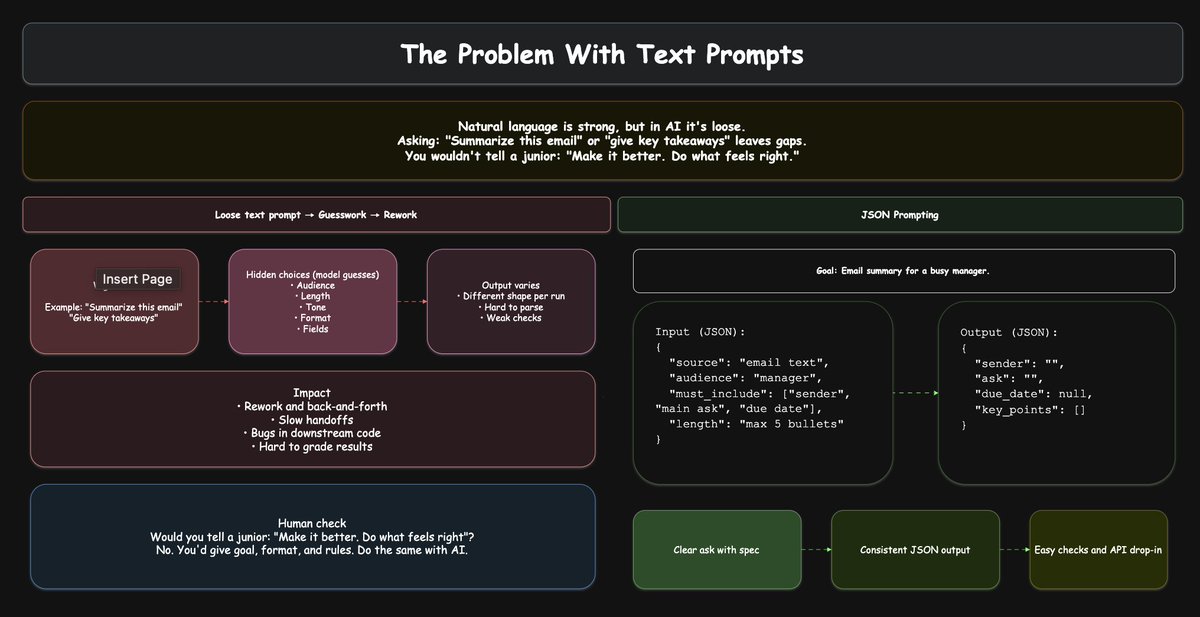

The Problem With Text Prompts

Natural language is expressive, but as an instruction to an AI it's loose.

“Summarize this email” or “give key takeaways” leaves room for guesswork.

You wouldn’t tell a junior colleague: “Make it better. Do what feels right.”

Yet we do that with AI all the time.

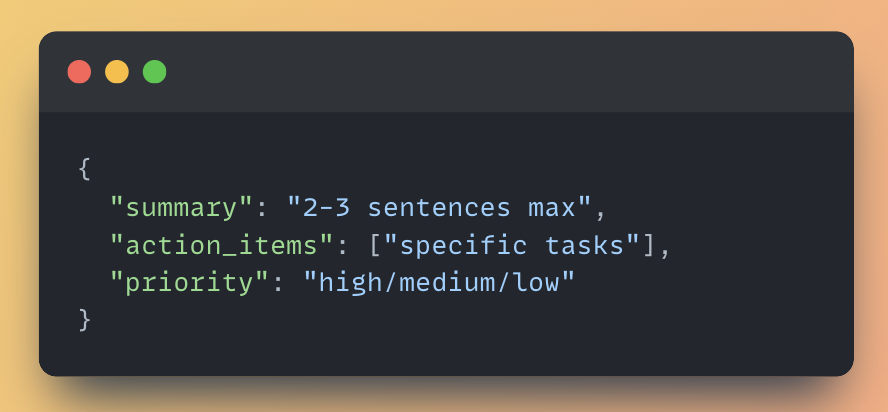

Structure Forces Precision

JSON makes you define exactly what you need instead of hoping the LLM guesses correctly. Text prompts like "analyze this data" leave room for interpretation and produce inconsistent outputs.

JSON forces you to specify fields, formats, and constraints.
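
For example, "analyze this data" could become something like the sketch below, where every output field gets a type, a format, and a constraint. The schema keys (`enum`, `format`, `constraint`, `max_items`) and the sales numbers are hypothetical; the model only sees them as instructions, nothing here enforces them.

```python
import json

# Made-up input data for the example.
sales = [
    {"month": "2024-01", "revenue": 12000},
    {"month": "2024-02", "revenue": 9500},
    {"month": "2024-03", "revenue": 14100},
]

# Each output field is pinned down instead of left to interpretation.
# Key names are illustrative, not a formal schema language.
analysis_prompt = {
    "task": "analyze_sales",
    "data": sales,
    "output_schema": {
        "trend": {"type": "string", "enum": ["up", "down", "flat"]},
        "best_month": {"type": "string", "format": "YYYY-MM"},
        "total_revenue": {"type": "number", "constraint": ">= 0"},
        "insights": {"type": "array", "items": "string", "max_items": 3},
    },
    "rules": ["Return only JSON.", "Use only the months present in data."],
}

print(json.dumps(analysis_prompt, indent=2))
```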

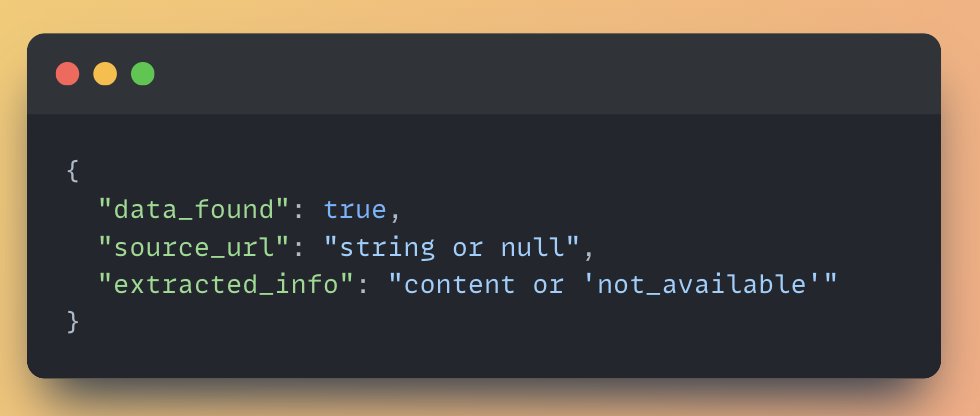

Predictable Output Enables Integration

When every response follows the same pattern, your systems become more reliable: you know exactly where each piece of data will be. No more parsing different response formats or handling unexpected structures.
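
A sketch of the consuming side in Python. `parse_llm_json` is a hypothetical helper, and the sample reply is invented. The fence-stripping step is there because models sometimes wrap JSON in Markdown code fences even when told not to; treat it as a defensive assumption, not something every model does.

```python
import json
import re

FENCE = "`" * 3  # a Markdown code fence marker
# Matches a reply wrapped in a fence, with an optional "json" language tag.
FENCED = re.compile(FENCE + r"(?:json)?\s*(.*?)\s*" + FENCE, re.DOTALL)


def parse_llm_json(raw: str) -> dict:
    """Parse a model reply that is expected to be a single JSON object."""
    text = raw.strip()
    match = FENCED.fullmatch(text)
    if match:
        text = match.group(1)  # keep only what is inside the fence
    data = json.loads(text)  # raises json.JSONDecodeError on malformed JSON
    if not isinstance(data, dict):
        raise ValueError("expected a JSON object at the top level")
    return data


# Invented reply; downstream code always reads the same keys.
reply = FENCE + 'json\n{"summary": "Launch moved to March 3.", "action_items": []}\n' + FENCE
data = parse_llm_json(reply)
print(data["summary"])  # Launch moved to March 3.
```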

Validation Becomes Automatic

With a JSON structure, you can check that the response contains all required fields before your application uses the data. A free-text response can look complete yet omit critical information, and whatever it does include may be buried in paragraphs.

JSON makes missing information easy to detect.
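
A minimal required-fields check, assuming the hypothetical email-summary schema below. It reports problems instead of raising, so your application can decide whether to retry or reject the reply.

```python
import json

# Required fields and their expected Python types (hypothetical schema).
REQUIRED_FIELDS = {"summary": str, "key_takeaways": list, "action_items": list}


def validate(data: dict) -> list[str]:
    """Return a list of problems; an empty list means the reply is usable."""
    problems = []
    for field, expected_type in REQUIRED_FIELDS.items():
        if field not in data:
            problems.append(f"missing field: {field}")
        elif not isinstance(data[field], expected_type):
            problems.append(
                f"wrong type for {field}: expected {expected_type.__name__}, "
                f"got {type(data[field]).__name__}"
            )
    return problems


# Invented reply that looks fine at a glance but is incomplete.
reply = json.loads('{"summary": "Launch moved to March 3.", "key_takeaways": "docs"}')
print(validate(reply))
# ['wrong type for key_takeaways: expected list, got str', 'missing field: action_items']
```

For anything beyond a handful of fields, a full JSON Schema checked with the `jsonschema` package's `validate` function is a more complete option than a hand-rolled loop like this.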

Templates Scale Across Teams

One well-designed JSON prompt becomes a reusable asset for your entire organization. Teams can share proven templates and get consistent results regardless of who writes the prompt.
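
One way to share such a template, sketched in Python: a reviewed base prompt that callers fill in but never edit. `SUMMARY_TEMPLATE` and `build_prompt` are hypothetical names; the point is that the output contract stays identical no matter who sends the prompt.

```python
import copy
import json

# A shared, reviewed template (hypothetical). Callers only fill in "input".
SUMMARY_TEMPLATE = {
    "task": "summarize",
    "input": None,
    "output_format": {"summary": "string, max 2 sentences", "key_points": ["string"]},
    "rules": ["Respond with JSON only."],
}


def build_prompt(template: dict, **fields) -> str:
    """Copy the template, fill in existing top-level fields, and serialize it."""
    prompt = copy.deepcopy(template)  # never mutate the shared template
    for key, value in fields.items():
        if key not in prompt:
            raise KeyError(f"template has no field {key!r}")
        prompt[key] = value
    return json.dumps(prompt, indent=2)


# Two different teams, same output contract.
p1 = build_prompt(SUMMARY_TEMPLATE, input="Q3 sales report text...")
p2 = build_prompt(SUMMARY_TEMPLATE, input="Customer support ticket text...")
```

Rejecting unknown keys keeps people from quietly forking the template by adding their own fields.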

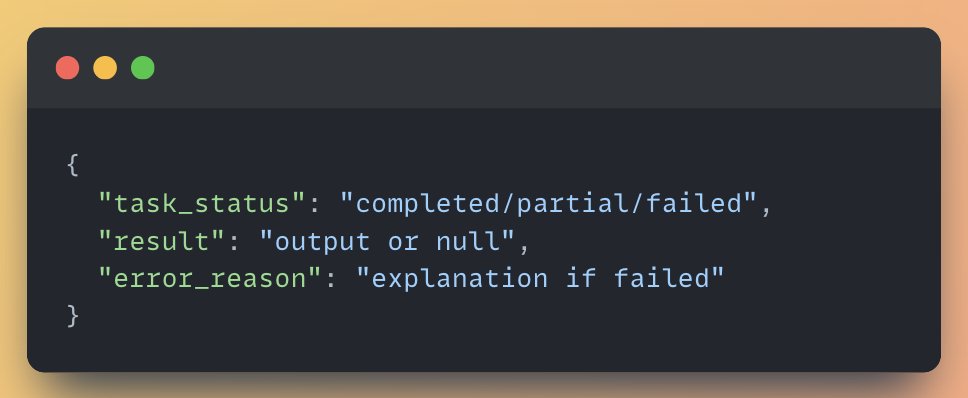

Error Handling Gets Built-In

JSON lets you design explicit failure modes instead of getting ambiguous or broken responses. Text outputs might hide errors in conversational language or incomplete sentences.

JSON makes problems visible and actionable.
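
A sketch of an explicit failure mode, assuming a hypothetical contract where the prompt asks the model to return either `{"status": "ok", ...}` or `{"status": "error", ...}` with a code from a fixed list. The handler turns every outcome, including a reply that isn't JSON at all, into something your code can act on.

```python
import json

# Hypothetical response contract you would include in the prompt.
ERROR_CONTRACT = {
    "on_success": {"status": "ok", "result": "object"},
    "on_failure": {
        "status": "error",
        "error": {
            "code": "one of: missing_input, ambiguous_request, out_of_scope",
            "message": "string",
        },
    },
}


def handle_reply(raw: str) -> dict:
    """Route a reply by its status field; surface every failure explicitly."""
    try:
        data = json.loads(raw)
    except json.JSONDecodeError as exc:
        return {"ok": False, "reason": f"invalid JSON: {exc.msg}"}
    if not isinstance(data, dict):
        return {"ok": False, "reason": "top-level value is not an object"}
    status = data.get("status")
    if status == "ok":
        return {"ok": True, "result": data.get("result")}
    if status == "error":
        err = data.get("error") or {}
        return {"ok": False, "reason": f"{err.get('code', 'unknown')}: {err.get('message', '')}"}
    return {"ok": False, "reason": f"unexpected status: {status!r}"}


print(handle_reply('{"status": "error", "error": {"code": "missing_input", "message": "No email text provided."}}'))
# {'ok': False, 'reason': 'missing_input: No email text provided.'}
print(handle_reply("Sorry, I can't help with that."))
```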

3 Limitations of JSON Prompting

• Token Overhead: JSON uses more tokens because of its structural markup (braces, quotes, repeated keys).

• Creativity Constraints: Strict formatting can stifle the flow of creative writing.

• Wrong Tool for Many Tasks: Code generation and conversation work better in their native format.
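
A quick way to see the overhead, sketched in Python with the same made-up request written three ways. Character counts are only a rough proxy here; actual token counts depend on each model's tokenizer. Dropping optional whitespace with compact separators is one cheap way to trim some of the cost.

```python
import json

# Same request as plain text and as JSON (field names are illustrative).
text_prompt = "Summarize the email in one sentence and list up to 3 key takeaways."

spec = {
    "task": "summarize_email",
    "output_format": {"summary": "one sentence", "key_takeaways": "up to 3 strings"},
}
pretty = json.dumps(spec, indent=2)
compact = json.dumps(spec, separators=(",", ":"))  # no spaces or newlines

# Characters as a rough stand-in for tokens.
for name, prompt in [("text", text_prompt), ("json pretty", pretty), ("json compact", compact)]:
    print(f"{name:>12}: {len(prompt)} chars")
```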

JSON prompting works best for data extraction, API integration, and structured workflows where consistency matters most.

But don't force it everywhere.

Try it where structure matters. Skip it where creativity does.

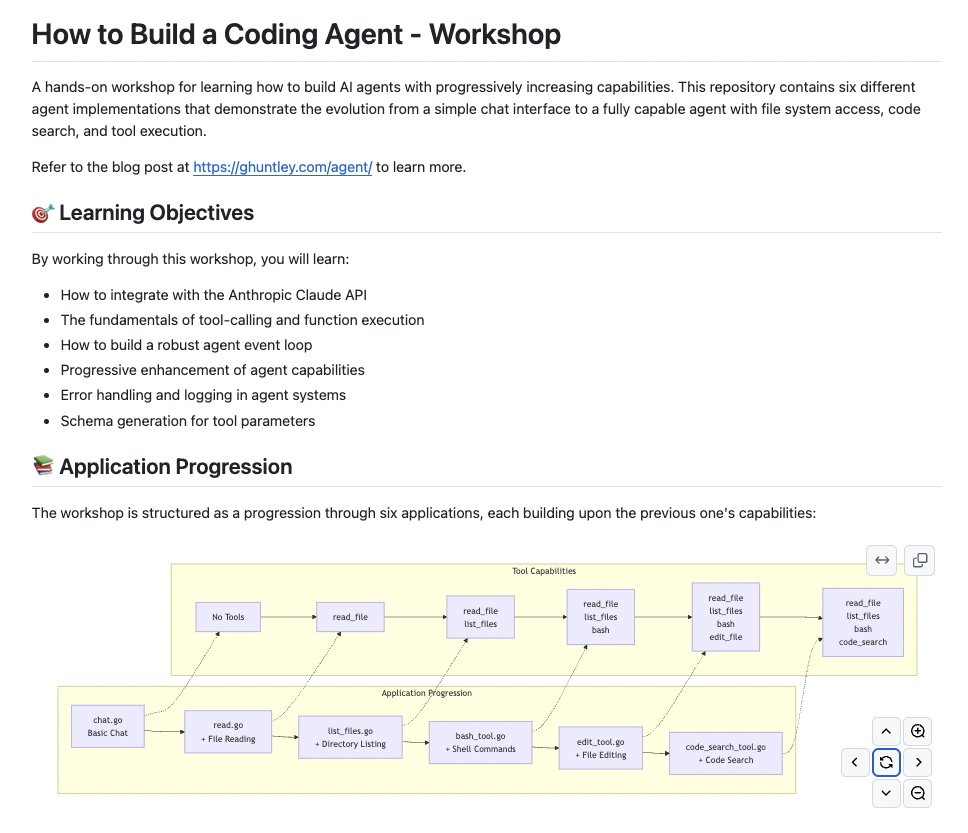

100+ free step-by-step tutorials with code covering:

🚀 AI Agents

📀 RAG Systems

🗣️ Voice AI Agents

🌐 MCP AI Agents

🤝 Multi-agent Teams

🎮 Autonomous Game Playing Agents

P.S. Don't forget to subscribe for FREE to access future tutorials.

theunwindai.com

Stay tuned for more such interesting posts → @Saboo_Shubham_

I have created 100+ AI Agents and RAG tutorials, 100% free and open source.

P.S. Don't forget to star the repo to show your support 🌟

github.com/Shubhamsaboo/a…
