This week in Replit

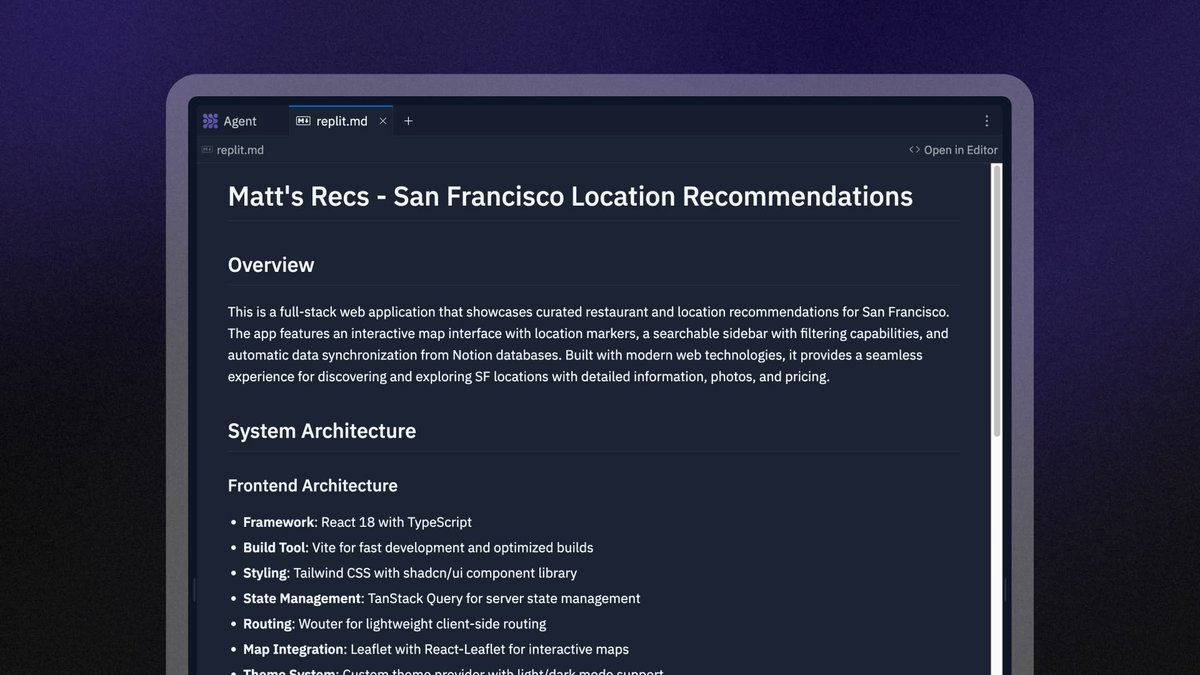

Agent 3 is here! 🤖 Our AI is now more autonomous, more reliable, and faster. It can test your app in a real browser, find bugs, and automatically fix them for you.

1/ Tackle bigger projects with longer run times. Agent 3 can now work autonomously for up to 200 minutes, with automated testing so you can track its progress.

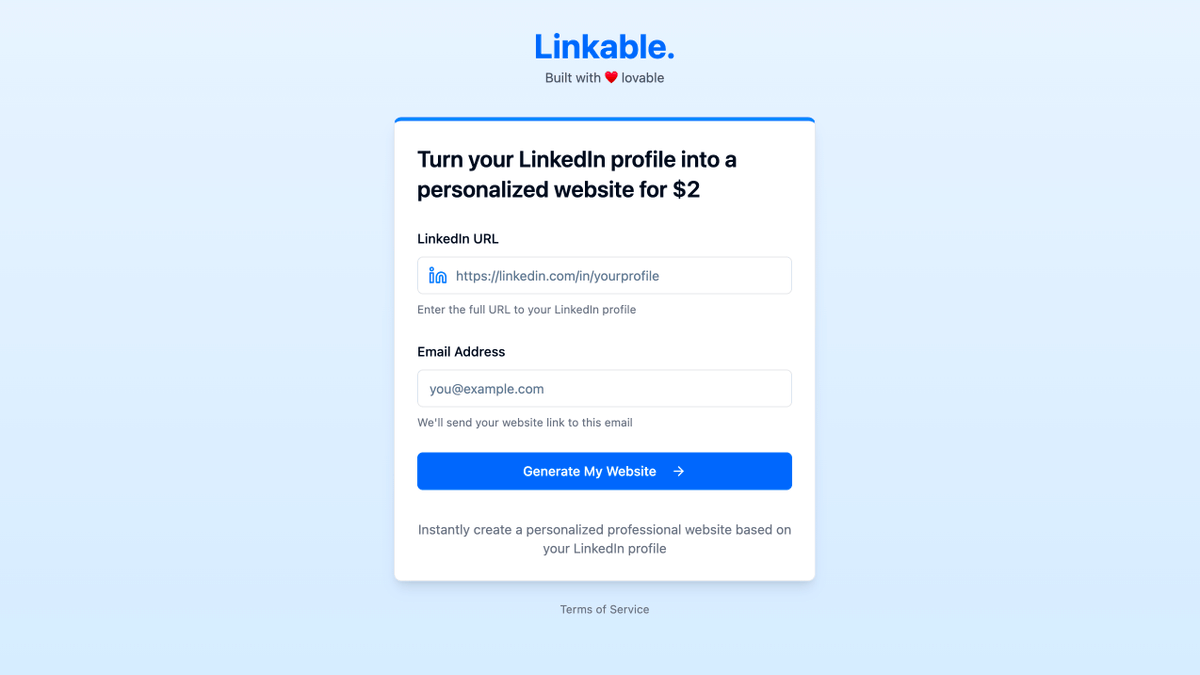

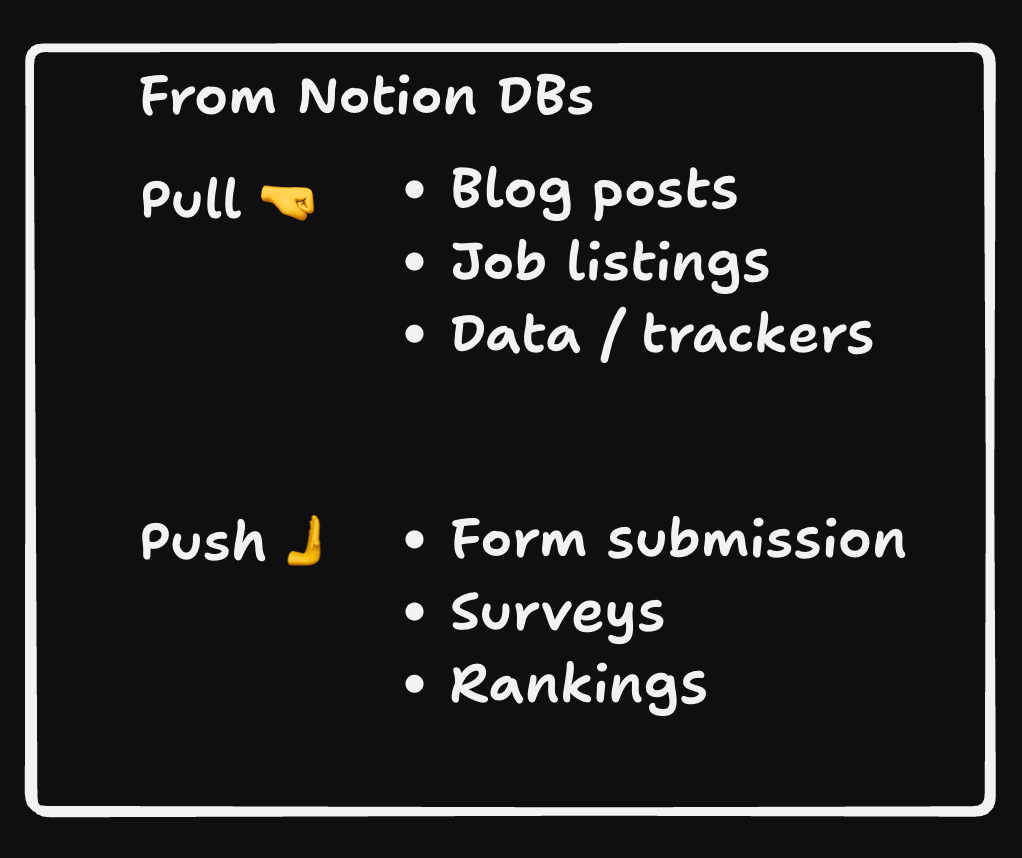

2/ Ship faster with new App Connectors & Integrations. Connect your apps to your favorite services by signing in just once, and reuse the connection across all your projects.

3/ Build intelligent bots and workflows with Agents & Automations (beta). Create custom Slackbots, Telegram bots, or run tasks on a schedule, all from your workspace.

That's it for this week. Follow along for weekly updates: docs.replit.com/updates
