We’re announcing a major advance in the study of fluid dynamics with AI 💧 in a joint paper with researchers from @BrownUniversity, @nyuniversity and @Stanford.

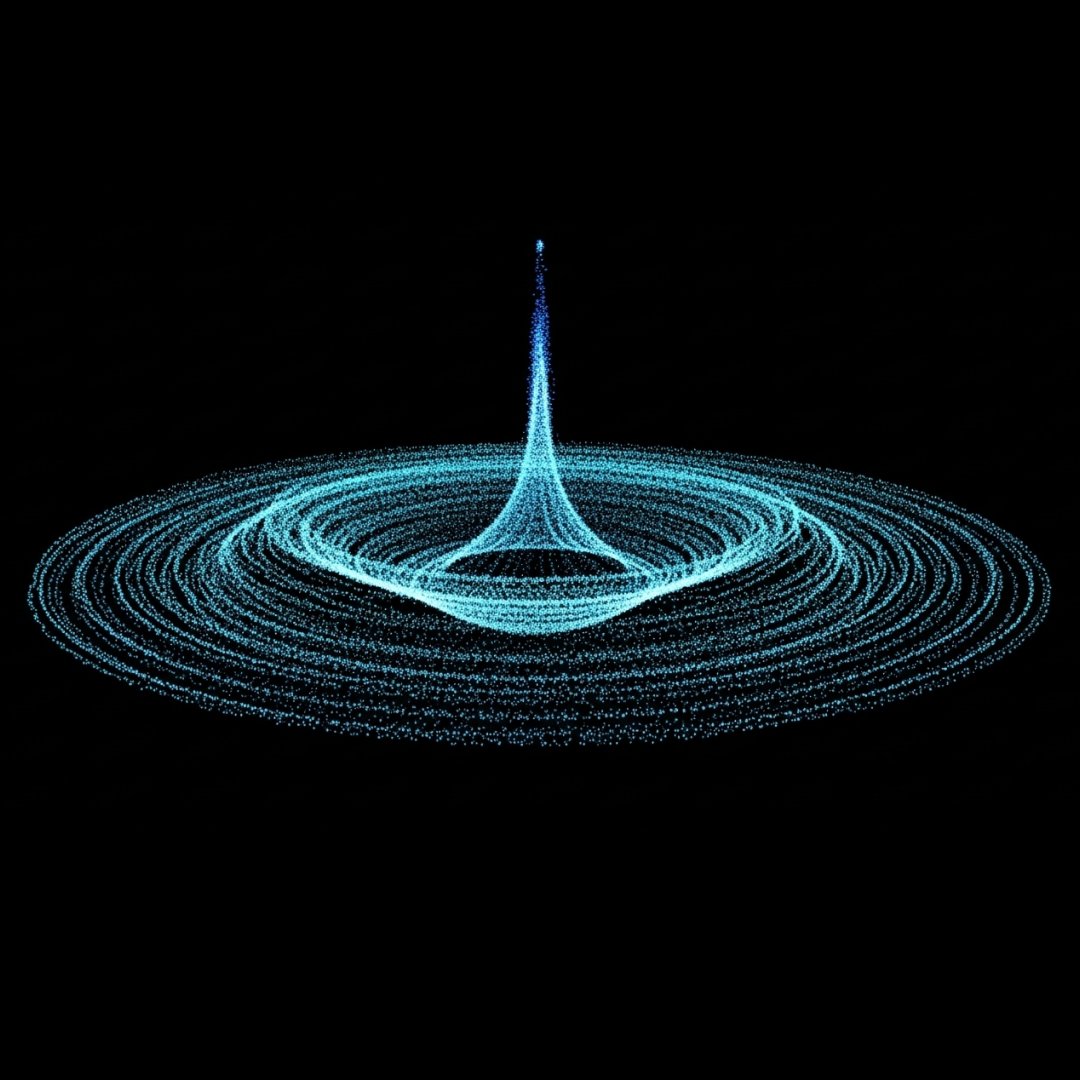

Equations that describe fluid motion, like airflow lifting an airplane wing or the swirling vortex of a hurricane, can sometimes "break," predicting impossible, infinite values.

These "singularities" are a huge mystery in mathematical physics.

We used a new AI-powered method to discover new families of unstable "singularities" across three different fluid equations.

A clear and unexpected pattern emerged: as the solutions become more unstable, one of the key properties falls very close to a straight line.

This suggests a new, underlying structure to these equations that was previously invisible.

This breakthrough represents a new way of doing mathematical research: combining deep insights with cutting-edge AI.

We’re excited for this work to help usher in a new era where long-standing challenges are tackled with computer-assisted proofs. → goo.gle/46loOuZ
