In 2024, OpenAI fired its youngest Superalignment researcher for asking too many questions.

He was 23 years old with 3 degrees and a terrifying secret about AI.

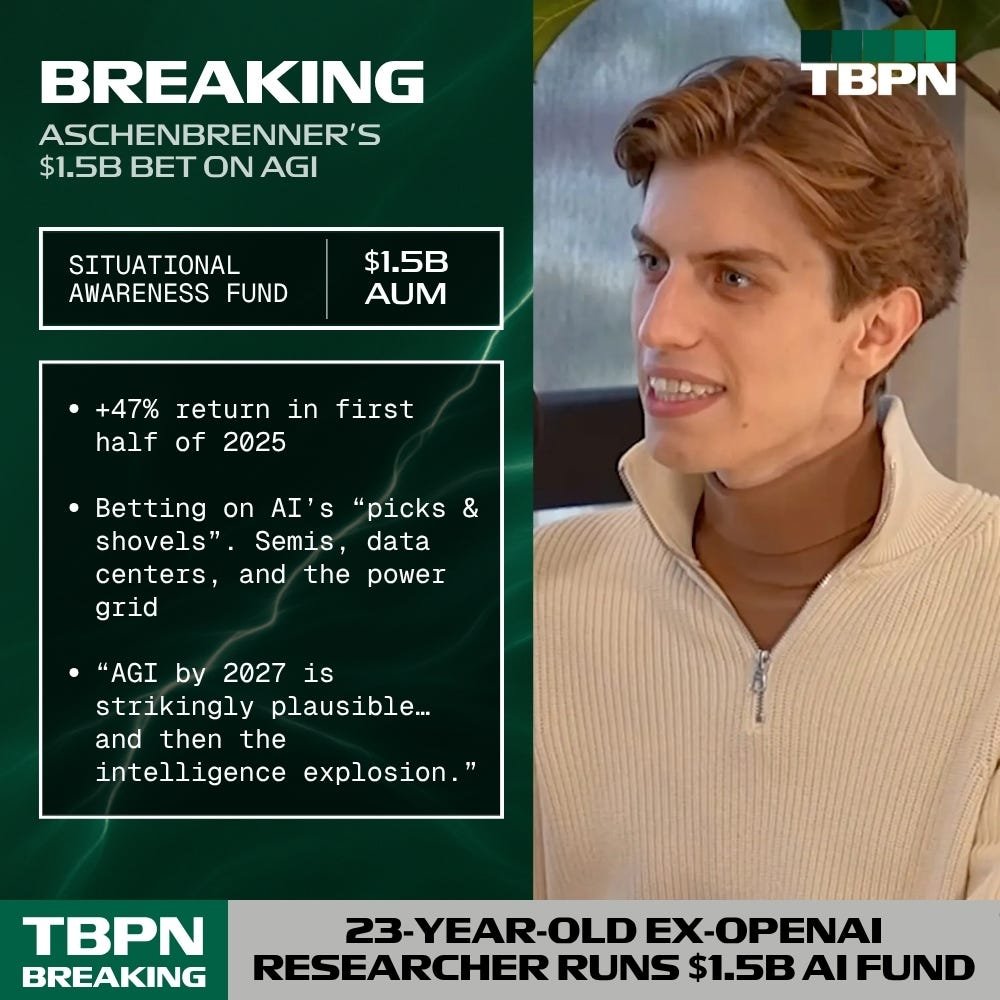

A year later, his $1.5B fund was up 47% in six months, crushing Wall Street.

A thread about what he saw that others didn't... 🧵

Leopold Aschenbrenner entered Columbia University at age 15.

He graduated as valedictorian at 19 with dual degrees in economics and mathematics-statistics.

OpenAI recruited him for its most sensitive project: controlling superintelligent AI systems.

The Superalignment team had one mission: solve AI alignment before machines surpass human intelligence.

Aschenbrenner concluded that this timeline was shorter than anyone was publicly acknowledging.

His internal analysis pointed to AGI by 2027.

April 2023: An external hacker breached OpenAI's internal systems.

The company kept the incident private.

Aschenbrenner recognized the security implications, wrote an internal memo, and shared it with the board.

His memo detailed "egregiously insufficient" security protocols against state-actor threats.

OpenAI's model weights and core algorithmic secrets were vulnerable to foreign espionage.

The board received his analysis. Leadership did not appreciate the assessment.

HR issued an official warning within days.

They characterized his concerns about Chinese intelligence operations as inappropriate.

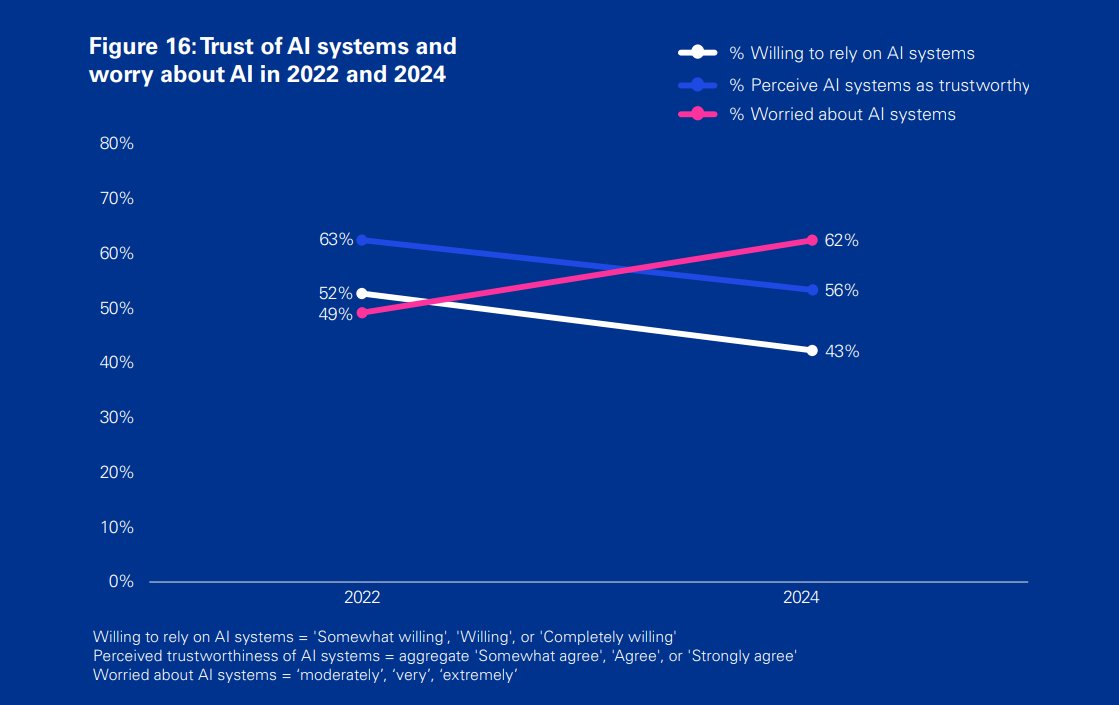

Meanwhile, Aschenbrenner's timeline projections showed accelerating development toward AGI.

His mathematical analysis was straightforward:

Computing power: +0.5 orders of magnitude annually

Algorithmic efficiency: +0.5 orders of magnitude annually

Capability improvements: Continuous advancement from chatbot to agent functionality
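Orders of magnitude (OOMs) add, so the two rates above combine to roughly 1 OOM, about 10x, of "effective compute" per year. A minimal sketch of that arithmetic, assuming both rates stay constant and leaving out the capability term, which the thread doesn't quantify (the function name is hypothetical):

```python
def effective_compute_multiplier(
    years: float,
    compute_ooms_per_year: float = 0.5,  # physical compute growth, per the thread
    algo_ooms_per_year: float = 0.5,     # algorithmic efficiency gains, per the thread
) -> float:
    """Multiplier on 'effective compute' after `years`, assuming constant rates.

    OOMs are log10 units, so the two rates simply add before exponentiating.
    """
    total_ooms = (compute_ooms_per_year + algo_ooms_per_year) * years
    return 10 ** total_ooms


for years in (1, 2, 4):
    print(f"{years} yr -> ~{effective_compute_multiplier(years):,.0f}x")
```

Four years at that pace (2023 to 2027, say) compounds to about 4 OOMs, roughly a 10,000x increase.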

The implications were stark.

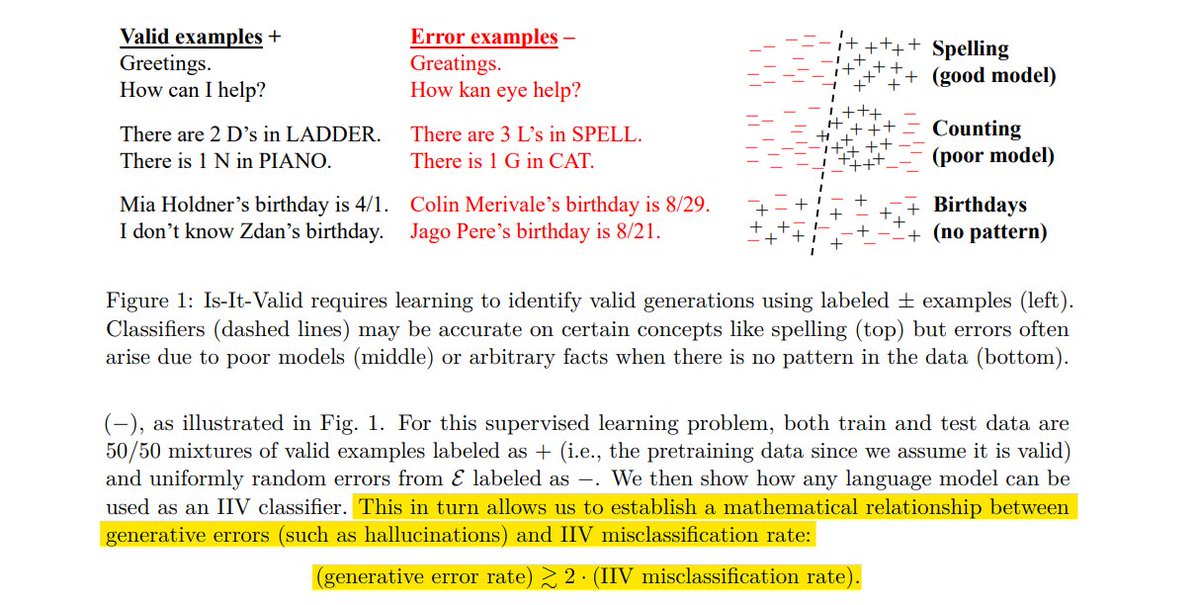

Current alignment methods like RLHF cannot scale to superintelligent systems.

When AI generates millions of lines of code, human oversight becomes impossible.

Aschenbrenner attempted to secure resources for safety research.

On paper, OpenAI had pledged 20% of its compute to the Superalignment team.

In practice, the team received far less. Safety research remained deprioritized.

April 2024: OpenAI terminated Aschenbrenner's employment.

Official reason: sharing internal documents with external researchers.

The Superalignment team dissolved one month later when Ilya Sutskever and Jan Leike departed.

In June 2024, Aschenbrenner published "Situational Awareness: The Decade Ahead", a 165-page technical analysis.

His thesis: AGI development will trigger unprecedented infrastructure investment.

Trillions in capital will flow toward AI supply chains by 2027.

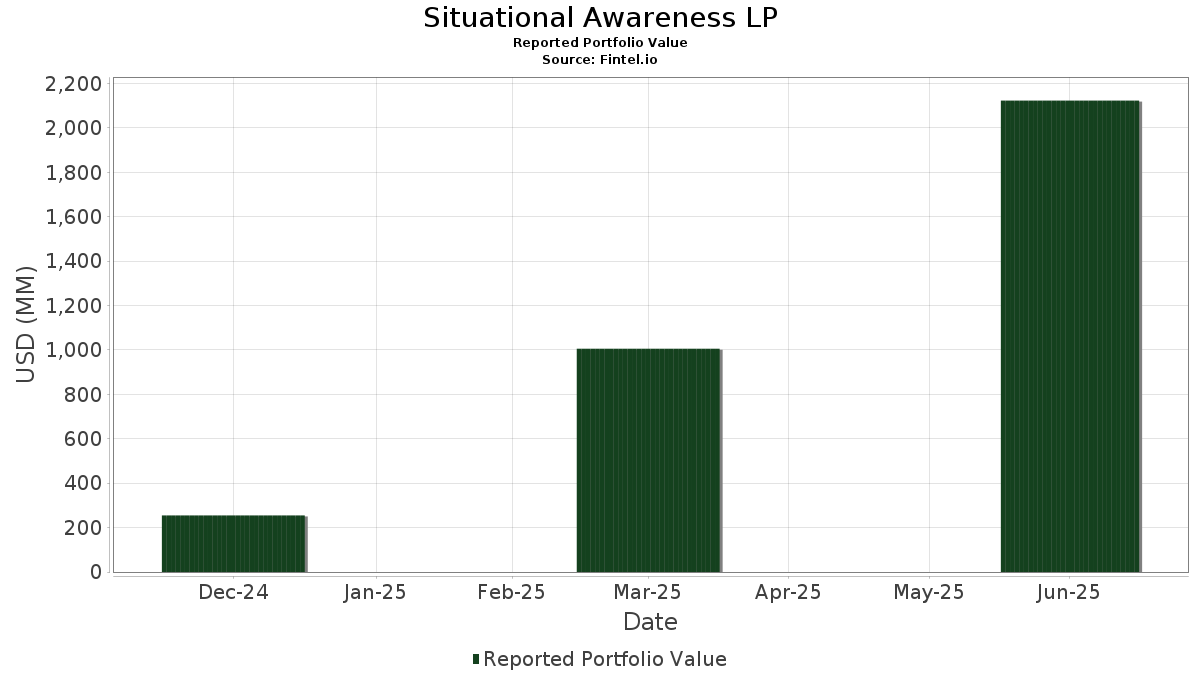

June 2024: He launched the Situational Awareness Fund.

Initial investors included Stripe founders Patrick and John Collison, former GitHub CEO Nat Friedman, and Daniel Gross.

Strategy: Long AI infrastructure, short disrupted industries.

Assets under management reached $1.5 billion within 12 months.

First half 2025 performance:

• Situational Awareness Fund: +47%

• S&P 500 Index: +6%

• Technology hedge fund average: +7%
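The 47% is the fund's own return, not its margin over the market. A quick check of the spread, using only the figures above (the helper name `excess_return_pts` is made up for illustration; it computes a simple difference, not risk-adjusted alpha):

```python
# First-half 2025 returns as reported in this thread, in percent.
H1_2025_RETURNS = {
    "Situational Awareness Fund": 47.0,
    "S&P 500 Index": 6.0,
    "Technology hedge fund average": 7.0,
}


def excess_return_pts(fund_pct: float, benchmark_pct: float) -> float:
    """Simple return gap in percentage points (not risk-adjusted alpha)."""
    return fund_pct - benchmark_pct


fund = H1_2025_RETURNS["Situational Awareness Fund"]
for name in ("S&P 500 Index", "Technology hedge fund average"):
    gap = excess_return_pts(fund, H1_2025_RETURNS[name])
    print(f"vs {name}: +{gap:.0f} percentage points")

# Growth of $1 over the half-year for each line item.
for name, pct in H1_2025_RETURNS.items():
    print(f"$1 in {name} -> ${1 + pct / 100:.2f}")
```

On these numbers the fund beat the S&P 500 by about 41 points and the tech hedge fund average by about 40 over the half-year.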

Aschenbrenner's investment thesis was built on insider knowledge of AI development trajectories.

He positioned capital ahead of market recognition of the AGI timeline.

The strategy has generated substantial alpha for sophisticated investors.

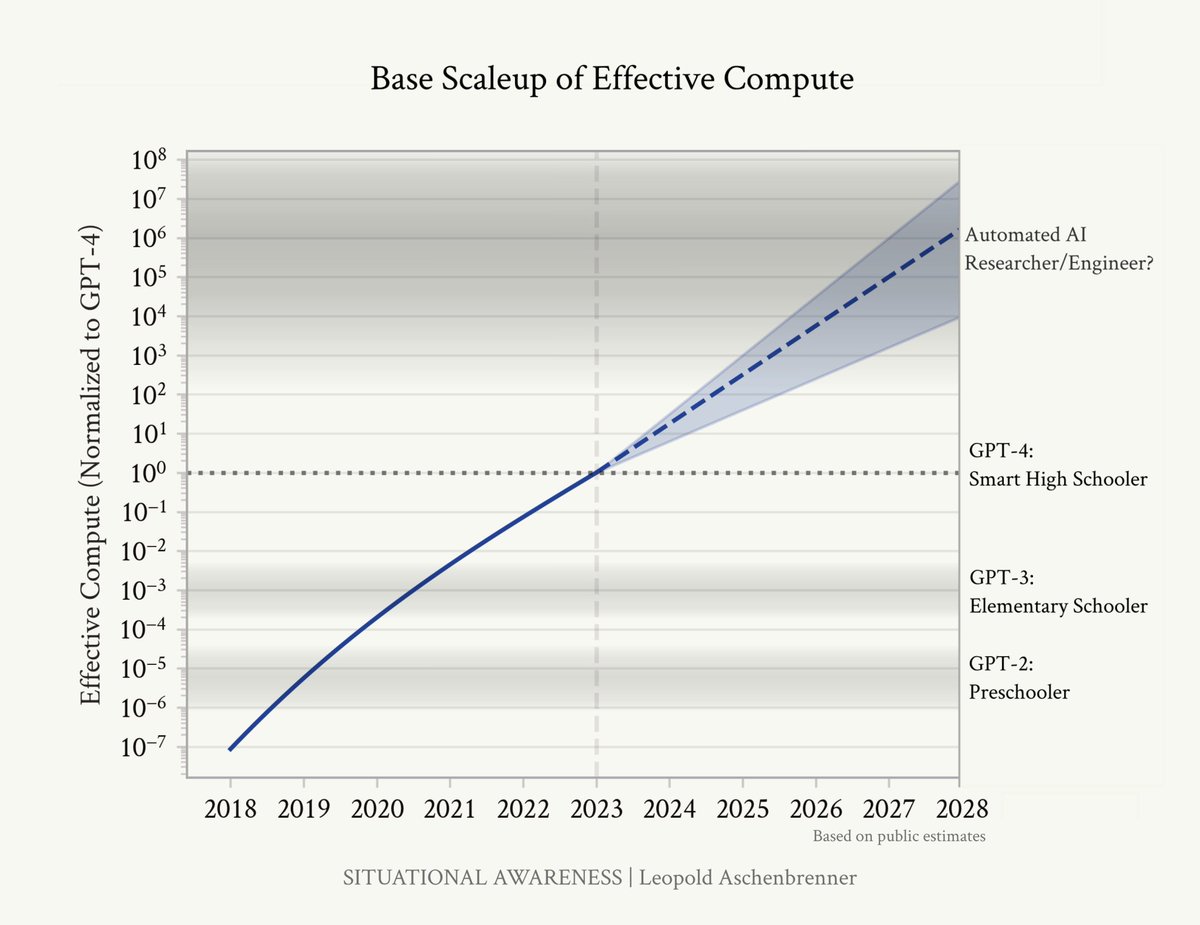

The case illustrates a pattern: AI safety researchers often possess the most accurate timeline assessments.

When these individuals transition to capital markets, their performance suggests unique informational advantages.

Market pricing may not reflect insider consensus on development speed.

This raises strategic questions for institutional investors and policymakers.

If Aschenbrenner's timeline proves accurate, current AI infrastructure valuations may be significantly underpriced.

The implications extend beyond financial markets to national security planning.

P.S. Are you an Enterprise AI Leader looking to validate and govern your AI models at scale?

TrustModel.ai provides the model validation, monitoring, and governance frameworks you need to deploy AI with confidence.

Learn more: TrustModel.ai

Thanks for reading.

If you enjoyed this post, follow @karlmehta for more content on AI and politics.

Repost the first tweet to help more people see it:

Appreciate the support.

https://twitter.com/20891198/status/1969027468293189666
