Very cool work from Meta Superintelligence Lab.

They are open-sourcing Meta Agents Research Environments (ARE), the platform they use to create and scale agent environments.

Great resource to stress-test agents in environments closer to real apps.

Read on for more:

They are open-sourcing Meta Agents Research Environments (ARE), the platform they use to create and scale agent environments.

Great resource to stress-test agents in environments closer to real apps.

Read on for more:

TL;DR

ARE + Gaia2: a research platform and benchmark for building and stress-testing agent systems in realistic, time-driven environments.

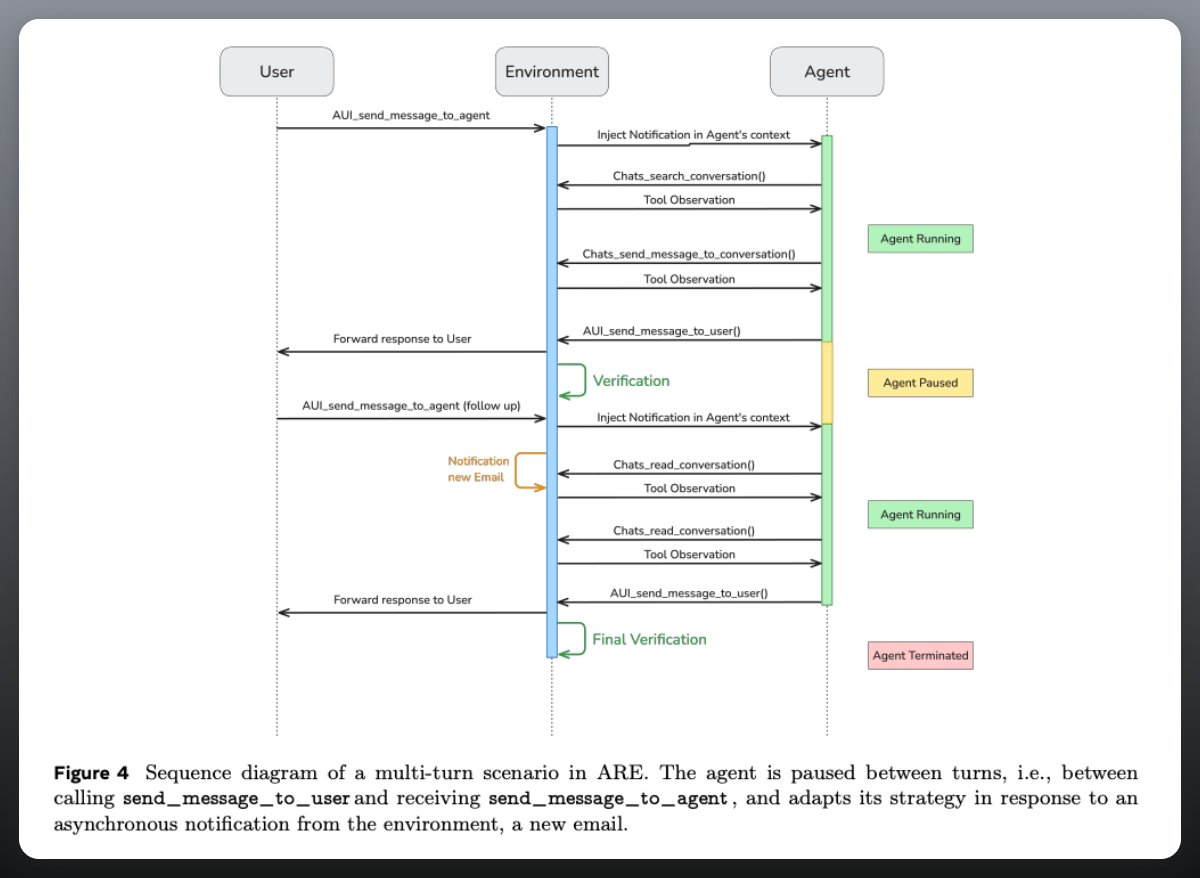

The paper introduces a modular simulator (ARE) and a mobile-style benchmark (Gaia2) that emphasize asynchronous events, verification of write actions, and multi-agent coordination in noisy, dynamic settings.

ARE + Gaia2: a research platform and benchmark for building and stress-testing agent systems in realistic, time-driven environments.

The paper introduces a modular simulator (ARE) and a mobile-style benchmark (Gaia2) that emphasize asynchronous events, verification of write actions, and multi-agent coordination in noisy, dynamic settings.

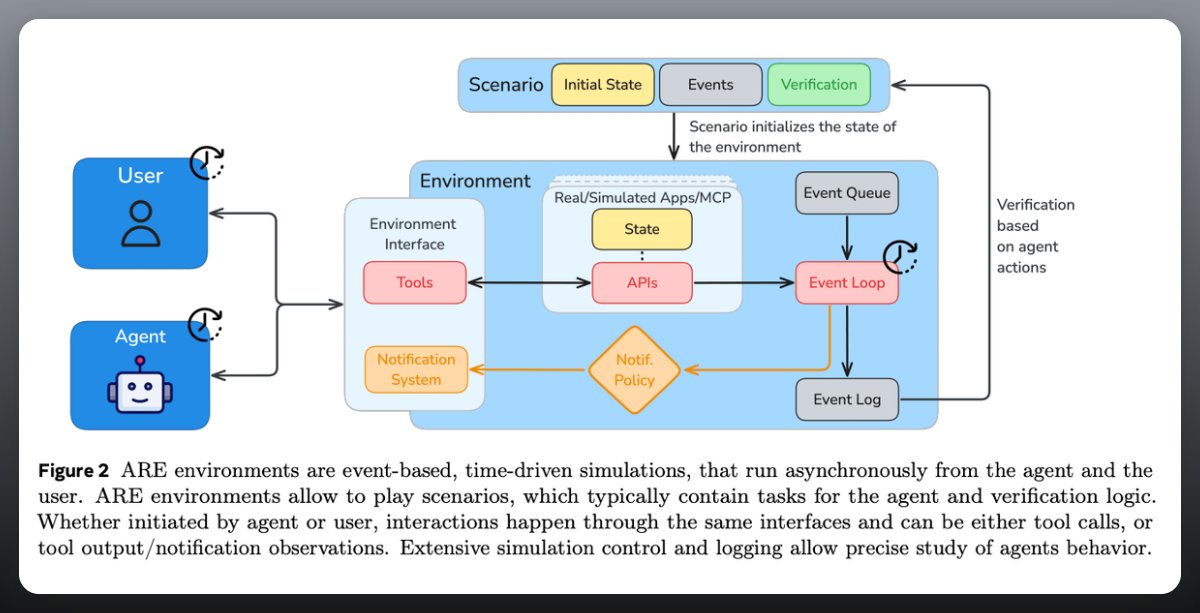

ARE: the simulator

• Everything is modeled as apps, events, notifications, and scenarios.

• Time keeps flowing even while the agent is thinking, so slow models miss deadlines.

•Agents use tools, get async notifications, and operate under rules defined by directed acyclic graphs.

• Everything is modeled as apps, events, notifications, and scenarios.

• Time keeps flowing even while the agent is thinking, so slow models miss deadlines.

•Agents use tools, get async notifications, and operate under rules defined by directed acyclic graphs.

Gaia2: the benchmark

• 1,120 scenarios in a smartphone-like world with 12 apps (Chats, Calendar, Shopping, Email, etc.).

• Six main challenge types: Search, Execution, Adaptability, Time, Ambiguity, and Agent-to-Agent collaboration (examples on pages 12–14, with event graphs shown in the GUI screenshots).

• Scenarios are verifiable: oracle write-actions are compared to the agent’s actions with hard checks (IDs, order) and soft LLM judging (content).

• 1,120 scenarios in a smartphone-like world with 12 apps (Chats, Calendar, Shopping, Email, etc.).

• Six main challenge types: Search, Execution, Adaptability, Time, Ambiguity, and Agent-to-Agent collaboration (examples on pages 12–14, with event graphs shown in the GUI screenshots).

• Scenarios are verifiable: oracle write-actions are compared to the agent’s actions with hard checks (IDs, order) and soft LLM judging (content).

Results so far

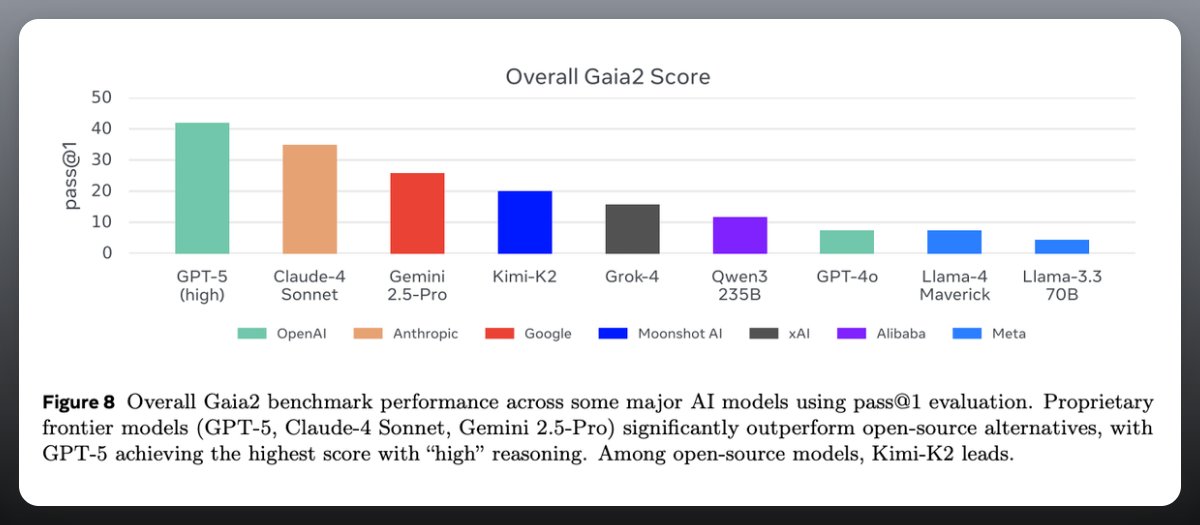

No single model dominates: GPT-5 “high” reasoning leads on tough tasks but collapses on time-critical ones.

Claude-4 Sonnet balances speed vs accuracy but at higher cost. Open-source models (like Kimi-K2) show promise in adaptability.

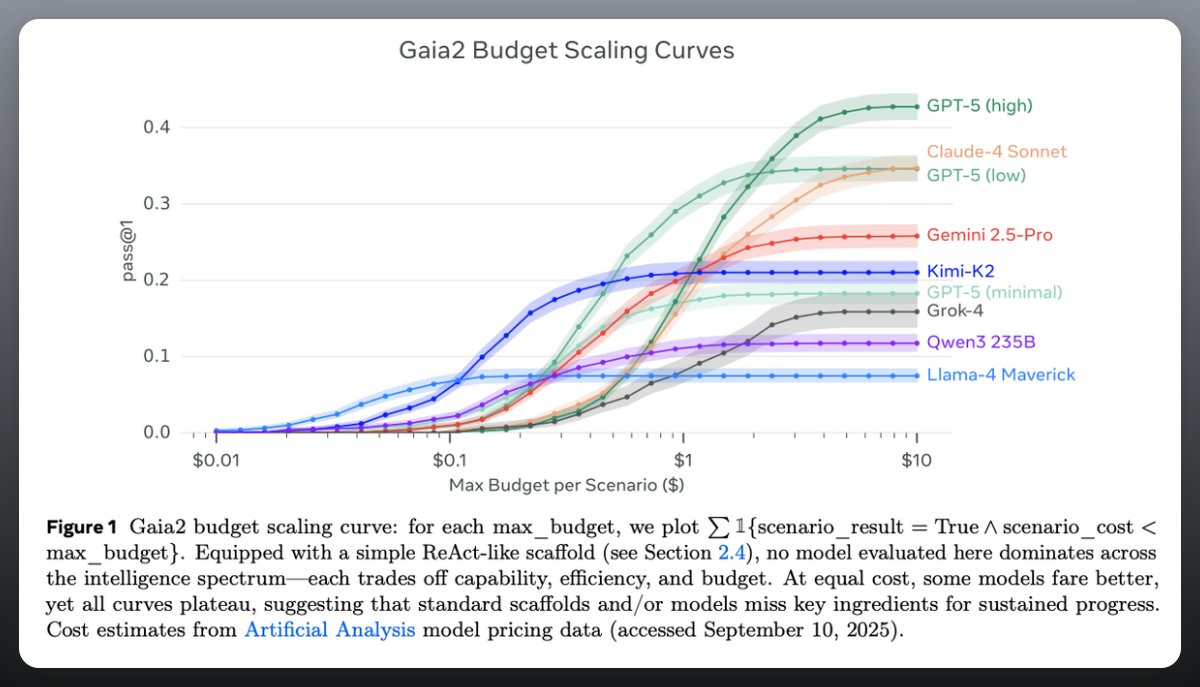

Scaling curves plateau, showing diminishing returns from throwing more compute at the same scaffold.

No single model dominates: GPT-5 “high” reasoning leads on tough tasks but collapses on time-critical ones.

Claude-4 Sonnet balances speed vs accuracy but at higher cost. Open-source models (like Kimi-K2) show promise in adaptability.

Scaling curves plateau, showing diminishing returns from throwing more compute at the same scaffold.

Key insights for devs

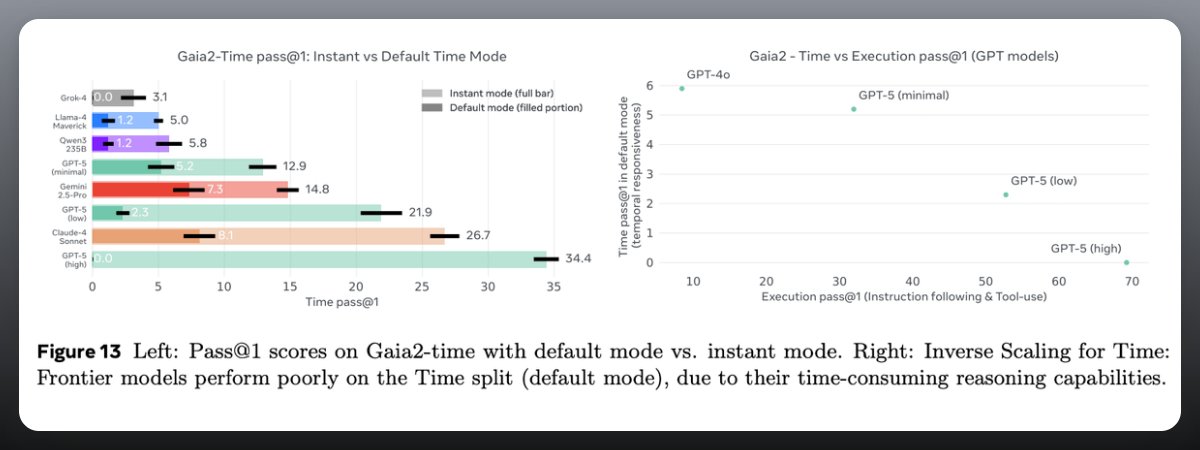

Strong reasoning models often fail at timeliness (“inverse scaling” effect).

Instant mode experiments confirm that long reasoning hurts when deadlines matter.

Multi-agent setups help weaker models coordinate better, but give mixed results for the strongest system.

Paper: ai.meta.com/research/publi…

Demo: huggingface.co/spaces/meta-ag…

Strong reasoning models often fail at timeliness (“inverse scaling” effect).

Instant mode experiments confirm that long reasoning hurts when deadlines matter.

Multi-agent setups help weaker models coordinate better, but give mixed results for the strongest system.

Paper: ai.meta.com/research/publi…

Demo: huggingface.co/spaces/meta-ag…

• • •

Missing some Tweet in this thread? You can try to

force a refresh