Something you may not know about Sonnet 4.5: it’s a special model for cybersecurity.

For the past few months, the Frontier Red Team has been researching how to make models more useful for defenders.

We now think we’re at an inflection point. New post on Red:

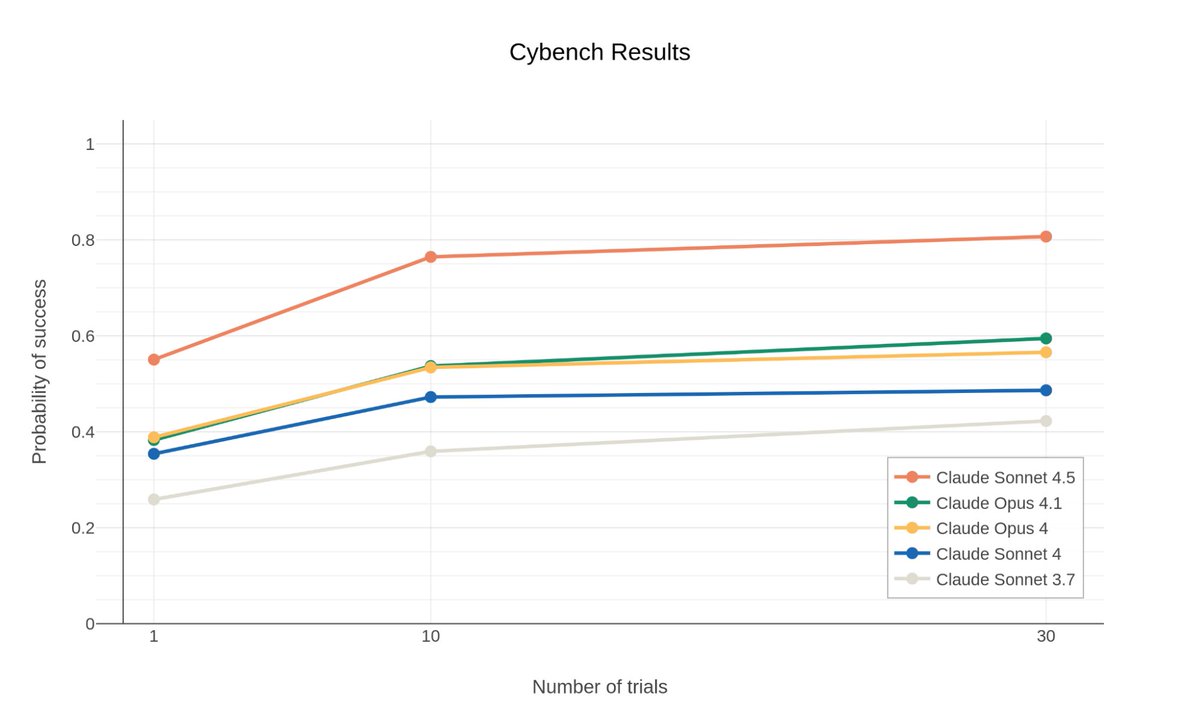

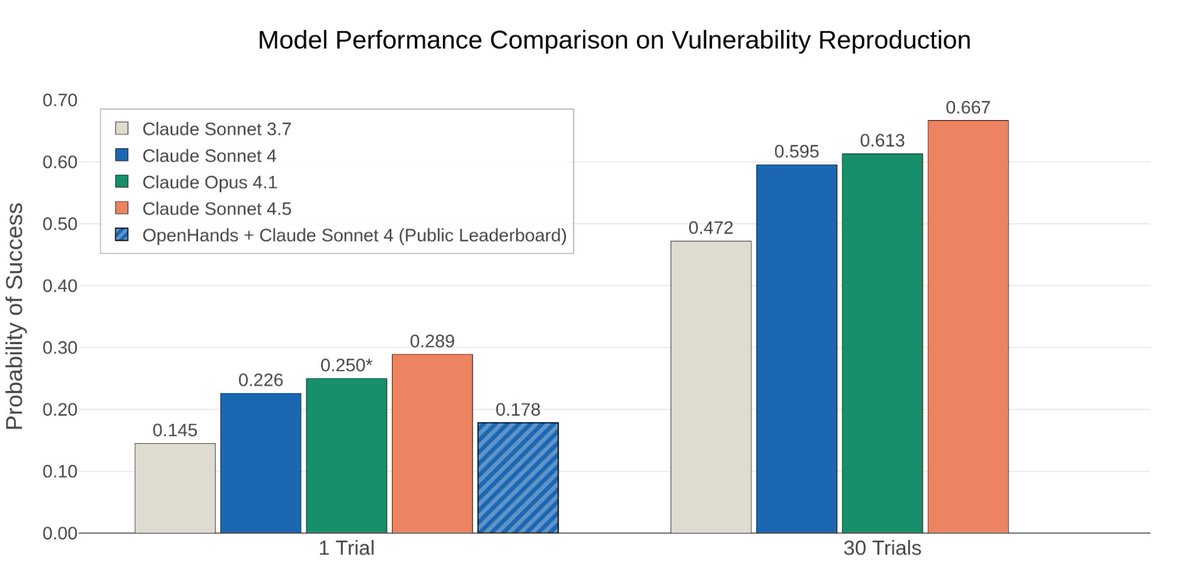

Sonnet 4.5 is very good on all our cyber tasks. Using some simple approaches, we more than doubled SOTA on some benchmarks.

We figured 4.5 would be good at code + agentic stuff. So over the past few months, part of my team has been researching how to make models more useful for defenders. @DARPA's AI Cyber Challenge, and work by companies like @Google and @Xbow, inspired us.

red.anthropic.com/2025/ai-for-cy…

The results from our research:

a/ Sonnet 4.5 is better at cybersecurity tasks

b/ models are already better than people think

c/ I think they could get better fast from here

The question: because cyber is dual use, how do we make sure this advantages defenders?

This is a really hard question. The world needs to answer it ASAP.

We focused on making Sonnet 4.5 better at tasks a lot of defenders do and ones that aren't clearly offensive. But we need to make models even better defensively, and start deploying defenses...

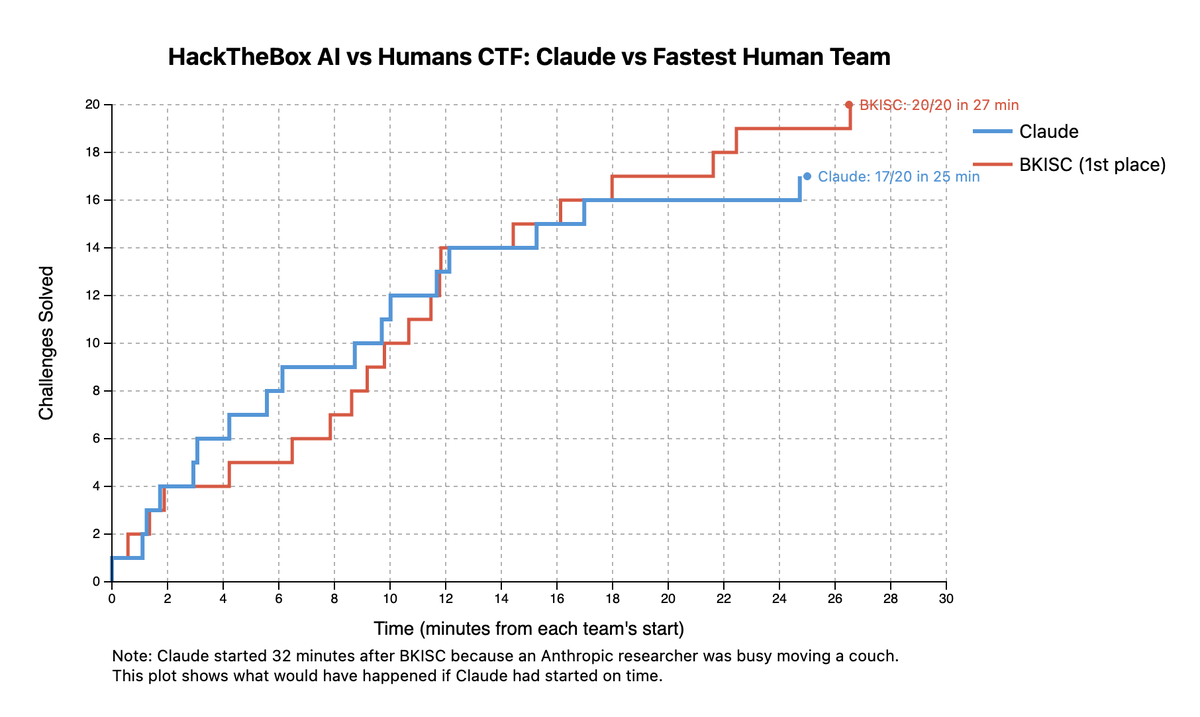

...because we see risks coming. For example, we caught (and disrupted) threat actors using models for "vibe hacking", and when we entered Claude into cyber competitions, sometimes it beat others.

https://x.com/AnthropicAI/status/1960660072322764906

What happens when LLMs write & review 95% of all code? When they win flagship CTF competitions? (e.g. Blue Water @ LiveCTF 2025?) When they find vulns at scale in all open source, and patch them?

Seems like a new world.

I think this is highly underrated.

We're a team of ML researchers, some of whom have backgrounds as security researchers & operators.

We look at 0-to-1 moments, progress curves, and things that took months of effort 1 year ago being solved in 1 shot today. We see them now.

What to do? Industry should:

+ build evals (hyperrealistic, defensive)

+ build tools for defenders & experiment as fast as possible

+ ask questions about what defense in an era of AGI means

More detail on what we did & found and what we think you should do:

red.anthropic.com/2025/ai-for-cy…

You can now subscribe for updates!

p.s. did you know that all of Red was built w/ Claude Code by our non-technical social scientist team member? Only gets better from here.
