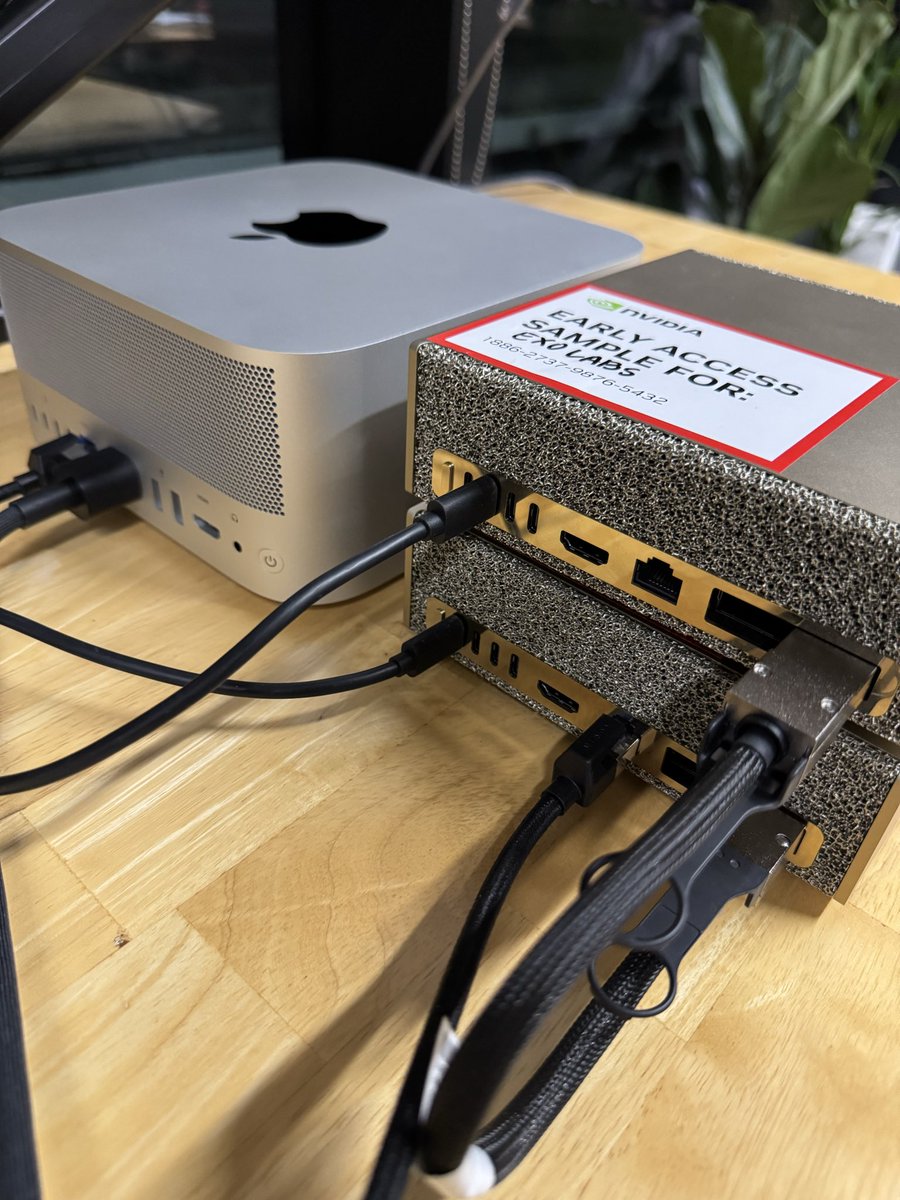

Clustering NVIDIA DGX Spark + M3 Ultra Mac Studio for 4x faster LLM inference.

DGX Spark: 128GB @ 273GB/s, 100 TFLOPS (fp16), $3,999

M3 Ultra: 256GB @ 819GB/s, 26 TFLOPS (fp16), $5,599

The DGX Spark has about one third of the M3 Ultra's memory bandwidth but roughly 4x the FLOPS.
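As a quick check of those ratios, here is a small sketch that uses only the spec numbers quoted above. The dictionary keys are illustrative names, not part of any tool.

```python
# Peak specs as quoted in the thread (fp16 TFLOPS, GB/s of memory bandwidth).
dgx_spark = {"mem_gb": 128, "bandwidth_gb_s": 273, "tflops_fp16": 100}
m3_ultra = {"mem_gb": 256, "bandwidth_gb_s": 819, "tflops_fp16": 26}

# How much more memory bandwidth the M3 Ultra has.
bandwidth_ratio = m3_ultra["bandwidth_gb_s"] / dgx_spark["bandwidth_gb_s"]

# How much more fp16 compute the DGX Spark has.
compute_ratio = dgx_spark["tflops_fp16"] / m3_ultra["tflops_fp16"]

print(f"M3 Ultra bandwidth advantage: {bandwidth_ratio:.2f}x")
print(f"DGX Spark compute advantage:  {compute_ratio:.2f}x")
```

The bandwidth ratio comes out at 3x and the compute ratio at a little under 4x, which is where the rounded figures in this thread come from.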

By running compute-bound prefill on the DGX Spark, memory-bound decode on the M3 Ultra, and streaming the KV cache between them over 10GbE, we get the best of both machines: fast prefill and fast decode.

Short explanation in this thread & link to full blog post below.

LLM inference consists of a prefill and decode stage.

Prefill processes the prompt, building a KV cache. It’s compute-bound so gets faster with more FLOPS.

Decode reads the KV cache and generates tokens one by one. It’s memory-bound so gets faster with more memory bandwidth.
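To make the compute-bound vs memory-bound split concrete, here is a rough back-of-the-envelope sketch. It assumes a hypothetical 8B-parameter fp16 model and an 8192-token prompt (neither is from the thread), approximates prefill cost as 2 FLOPs per parameter per token, and approximates decode cost as one full read of the weights per generated token. It uses peak hardware numbers, so real timings will be slower.

```python
def prefill_seconds(params, prompt_tokens, tflops):
    """Compute-bound estimate: ~2 FLOPs per parameter per prompt token."""
    flops = 2 * params * prompt_tokens
    return flops / (tflops * 1e12)


def decode_seconds_per_token(params, bytes_per_param, bandwidth_gb_s):
    """Memory-bound estimate: every weight is read once per generated token."""
    weight_bytes = params * bytes_per_param
    return weight_bytes / (bandwidth_gb_s * 1e9)


# Hypothetical workload (assumption, not from the thread).
PARAMS = 8e9
PROMPT_TOKENS = 8192
BYTES_PER_PARAM = 2  # fp16

for name, tflops, bandwidth in [("DGX Spark", 100, 273), ("M3 Ultra", 26, 819)]:
    prefill = prefill_seconds(PARAMS, PROMPT_TOKENS, tflops)
    decode = decode_seconds_per_token(PARAMS, BYTES_PER_PARAM, bandwidth)
    print(f"{name}: prefill ~{prefill:.2f} s, decode ~{decode * 1000:.1f} ms/token")
```

Under these assumptions the DGX Spark wins prefill by the FLOPS ratio and the M3 Ultra wins decode by the bandwidth ratio, whatever model size is plugged in.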

We can run these two stages on different devices:

Prefill: DGX Spark (high compute device, 4x compute)

Decode: M3 Ultra (high memory-bandwidth device, 3x memory-bandwidth)

However, now we need to transfer the KV cache over the network (10GbE). This introduces a delay.
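To get a feel for the size of that delay, here is a rough estimate of the KV cache size and its transfer time. The model shape (32 layers, 8 KV heads, head dimension 128, fp16) and the 8192-token prompt are hypothetical, and the link is treated as an ideal 10 Gbit/s with no protocol overhead.

```python
def kv_cache_bytes(num_layers, num_kv_heads, head_dim, seq_len, bytes_per_value=2):
    """Total KV cache size: one K and one V tensor per layer."""
    per_layer = 2 * num_kv_heads * head_dim * seq_len * bytes_per_value
    return num_layers * per_layer


def transfer_seconds(num_bytes, link_gbit_s=10):
    """Ideal transfer time over a link rated in gigabits per second."""
    bytes_per_second = link_gbit_s * 1e9 / 8
    return num_bytes / bytes_per_second


# Hypothetical model shape and prompt length (assumptions, not from the thread).
size = kv_cache_bytes(num_layers=32, num_kv_heads=8, head_dim=128, seq_len=8192)
delay = transfer_seconds(size)

print(f"KV cache: {size / 2**30:.2f} GiB, transfer over 10GbE: ~{delay:.2f} s")
```

With these numbers the cache is 1 GiB and takes a little under a second to send, which would be a noticeable stall if it only started after prefill finished.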

But the KV cache is created for each transformer layer. By sending each layer’s KV cache after it’s computed, we overlap communication with computation.

We stream the KV cache and hide the network delay.
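Here is a toy timing model of that overlap, not the EXO implementation. It assumes every layer takes the same compute time and the same transfer time (the values below are made up), and that the link sends one layer's cache at a time.

```python
def sequential_time(num_layers, compute_s, transfer_s):
    """Finish all of prefill first, then send the whole KV cache."""
    return num_layers * compute_s + num_layers * transfer_s


def streamed_time(num_layers, compute_s, transfer_s):
    """Send each layer's KV cache as soon as that layer has been computed."""
    compute_done = 0.0  # time at which the current layer finishes computing
    link_free = 0.0     # time at which the network link becomes idle again
    for _ in range(num_layers):
        compute_done += compute_s
        # A layer's transfer starts once it is computed and the link is free.
        link_free = max(compute_done, link_free) + transfer_s
    return link_free


# Made-up per-layer timings in seconds (assumptions, not measurements).
LAYERS, COMPUTE_S, TRANSFER_S = 32, 0.040, 0.027

print(f"sequential: {sequential_time(LAYERS, COMPUTE_S, TRANSFER_S):.3f} s")
print(f"streamed:   {streamed_time(LAYERS, COMPUTE_S, TRANSFER_S):.3f} s")
```

In this simple model, as long as one layer's transfer is no slower than one layer's compute, only the last layer's transfer is left exposed. If the transfer is slower than the compute, the link becomes the bottleneck and the delay is no longer hidden.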

We achieve a 4x speedup in prefill (vs. the M3 Ultra alone) & 3x in decode (vs. the DGX Spark alone), with the network delay hidden.

Full blog post and more details about EXO 1.0:

Thanks @NVIDIA for early access to two DGX Sparks. #SparkSomethingBig blog.exolabs.net/nvidia-dgx-spa…
