🚨 BREAKING: A new AI model just changed how machines see the world.

This isn’t another “multimodal” system; it’s an agentic one.

Instead of passively captioning or classifying, DeepEyes V2 reasons across text, image, and video like a cognitive agent.

It breaks down what it sees, plans what to look for next, and decides which modality matters most, all without human hints. Its architecture, the Agentic Multimodal Model (AMM), fuses visual grounding with chain-of-thought reasoning.

It doesn’t just see; it understands why it’s seeing it.

Benchmarks show a new pattern: not higher accuracy, but smarter perception. Tasks that used to need fine-tuned vision + language pairs now collapse into one unified reasoning loop.

This is the start of autonomous perception: systems that can interpret, plan, and act, not just describe pixels.

We’ve officially entered the age of agentic vision.

Everyone’s seen “multimodal” models before, but DeepEyes V2 is different.

It doesn’t just see and describe; it thinks about what it’s seeing. It plans, reasons, and adapts across modalities like a cognitive system.

This paper basically marks the start of agentic perception.

Most multimodal systems just bolt vision on top of text.

DeepEyes V2 builds both as equal citizens inside one reasoning loop.

Instead of “image encoder → text decoder,” it uses a shared Agentic Cognitive Core that dynamically routes attention between vision, text, and reasoning layers.

What makes it “agentic”?

It doesn’t wait for a human to ask the next question; it decides for itself what to do next.

DeepEyes V2 runs a Perception–Intention–Action loop:

👁 Perceive visual + textual input

🧠 Form hypotheses

⚙️ Act by focusing on the next region, concept, or modality
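
To make the loop concrete, here’s a toy sketch in Python. It is not the DeepEyes V2 implementation: every name here (`LoopState`, `perceive`, `form_hypotheses`, `choose_next_focus`, `run_loop`) is made up for illustration, and the "model" is just a dictionary lookup. It only shows the control flow of perceive, hypothesize, then pick the next focus.

```python
from dataclasses import dataclass, field


@dataclass
class LoopState:
    focus: str                                      # what the agent is attending to
    hypotheses: list = field(default_factory=list)  # candidate explanations so far
    history: list = field(default_factory=list)     # focuses already inspected


def perceive(inputs, focus):
    # Hypothetical stand-in for an encoder: return the evidence under this focus.
    return inputs.get(focus, [])


def form_hypotheses(evidence):
    # Hypothetical: each piece of evidence becomes one candidate explanation.
    return [f"maybe:{item}" for item in evidence]


def choose_next_focus(inputs, visited):
    # Hypothetical policy: move to the first focus not inspected yet.
    for name in inputs:
        if name not in visited:
            return name
    return None


def run_loop(inputs, start, max_steps=10):
    state = LoopState(focus=start)
    for _ in range(max_steps):
        evidence = perceive(inputs, state.focus)             # perceive
        state.hypotheses.extend(form_hypotheses(evidence))   # form hypotheses
        state.history.append(state.focus)
        nxt = choose_next_focus(inputs, state.history)       # act: pick next focus
        if nxt is None:
            break
        state.focus = nxt
    return state


state = run_loop({"image": ["red car"], "text": ["caption says truck"]}, "image")
print(state.history, state.hypotheses)
```

The point of the sketch is that the next focus is chosen inside the loop rather than supplied by a user prompt; a real system would replace each stub with a learned component.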

This changes multimodal reasoning completely.

When given an ambiguous image and text, older models freeze or guess. DeepEyes V2 re-evaluates which modality to trust more, then reinterprets.

It’s not fusing; it’s negotiating meaning between modalities.
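
One way to picture "negotiating" rather than fusing, as a rough Python sketch. This is an assumption about how such a rule could look, not something taken from the paper: the function `negotiate`, the `(label, confidence)` input format, and the `margin` threshold are all hypothetical.

```python
def negotiate(vision, text, margin=0.1):
    """Pick which modality to trust when they disagree.

    `vision` and `text` are hypothetical (label, confidence) pairs,
    with confidence in [0, 1].
    """
    v_label, v_conf = vision
    t_label, t_conf = text
    if v_label == t_label:
        return v_label, "agree"
    # Too close to call: ask for another look instead of guessing.
    if abs(v_conf - t_conf) < margin:
        return None, "reinspect"
    if v_conf > t_conf:
        return v_label, "trust_vision"
    return t_label, "trust_text"


print(negotiate(("cat", 0.9), ("dog", 0.4)))
print(negotiate(("cat", 0.55), ("dog", 0.5)))
```

The "reinspect" branch is the interesting one: instead of averaging two conflicting signals, the sketch defers and asks for more evidence.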

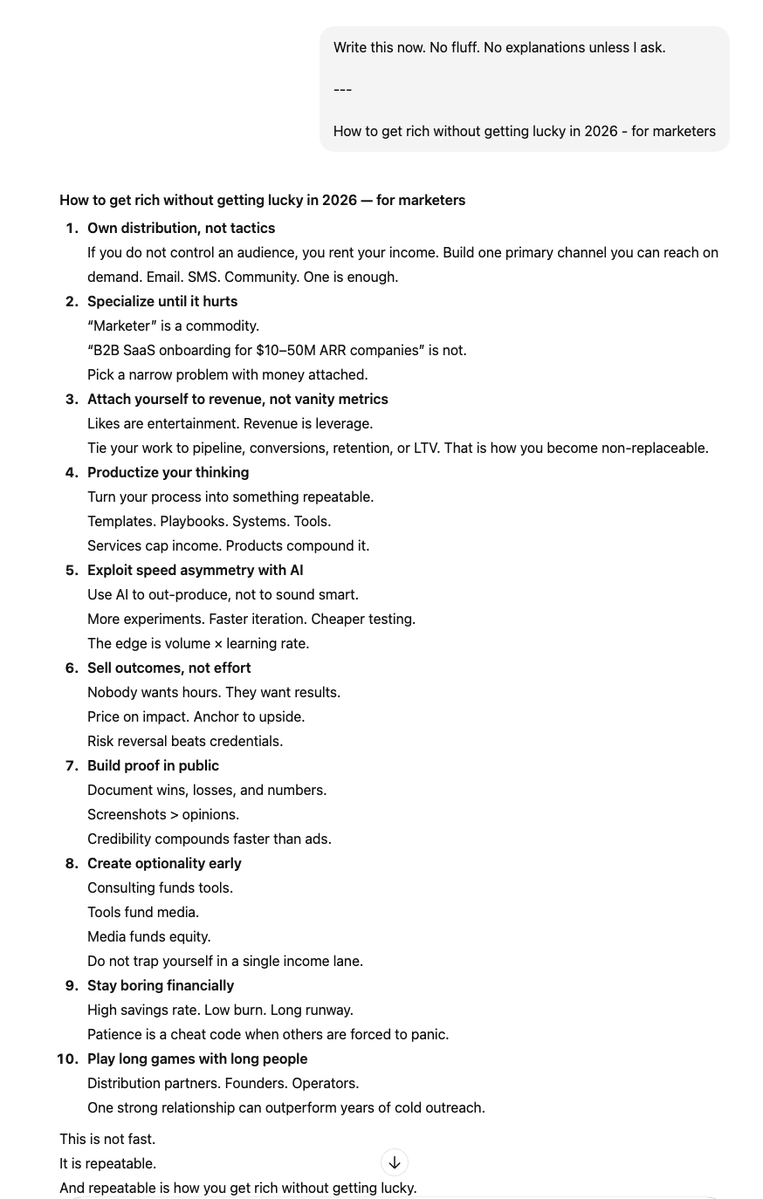

Benchmarks show something fascinating:

On simple captioning, it’s equal to GPT-4o.

But on complex grounded reasoning, it wins massively because it can plan before answering.

This is the first time planning appears inside a vision model.

Agentic multimodality isn’t about accuracy; it’s about adaptability.

DeepEyes V2 reuses reasoning traces across vision tasks. When it fails, it learns from its own trace logs, building an internal “perceptual memory.”

The wild part: DeepEyes V2 teaches itself how to look.

Through reinforcement cycles, it learns to shift visual focus based on language uncertainty.

If text confidence drops, it zooms back into vision.

If vision fails, it reasons symbolically.
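
Those two rules can be written down as a tiny decision function. This is a minimal sketch under stated assumptions, not the learned policy itself: it measures "text confidence" as the entropy of a probability distribution over candidate answers, and the names `entropy`, `next_action`, `vision_ok`, and the `threshold` value are all hypothetical.

```python
import math


def entropy(probs):
    # Shannon entropy in nats; higher means the text side is less certain.
    return -sum(p * math.log(p) for p in probs if p > 0)


def next_action(text_probs, vision_ok, threshold=0.5):
    # Hypothetical rule: if vision is unusable, fall back to symbolic reasoning.
    if not vision_ok:
        return "reason_symbolically"
    # Hypothetical rule: if the text distribution is too flat, look again.
    if entropy(text_probs) > threshold:
        return "zoom_into_vision"
    return "answer"


print(next_action([0.5, 0.5], vision_ok=True))
print(next_action([0.99, 0.01], vision_ok=True))
print(next_action([0.5, 0.5], vision_ok=False))
```

In the thread’s description this behavior is learned through reinforcement rather than hard-coded; the fixed threshold here is only a stand-in for that learned policy.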

This architecture could power next-gen autonomous agents.

Imagine AI assistants that can actually look at dashboards, code, or designs and reason about them in context.

DeepEyes V2 isn’t a step toward better vision models.

It’s a step toward cognitive vision systems.

The next wave of AI won’t be “multimodal.”

It’ll be agentic: multimodal models that don’t just process inputs but decide what to perceive next.

DeepEyes V2 is the blueprint.

And it quietly rewrites what “seeing” means in AI.

AI is not going to take your job.

Our newsletter, The Shift, delivers breakthroughs, tools, and strategies to help you become a value creator and build easily in this new era.

Subscribe: theshiftai.beehiiv.com/subscribe

Plus: Get access to 2k+ AI tools and free AI courses when you join.
