The secret behind Gemini 3?

Simple: Improving pre-training & post-training 🤯

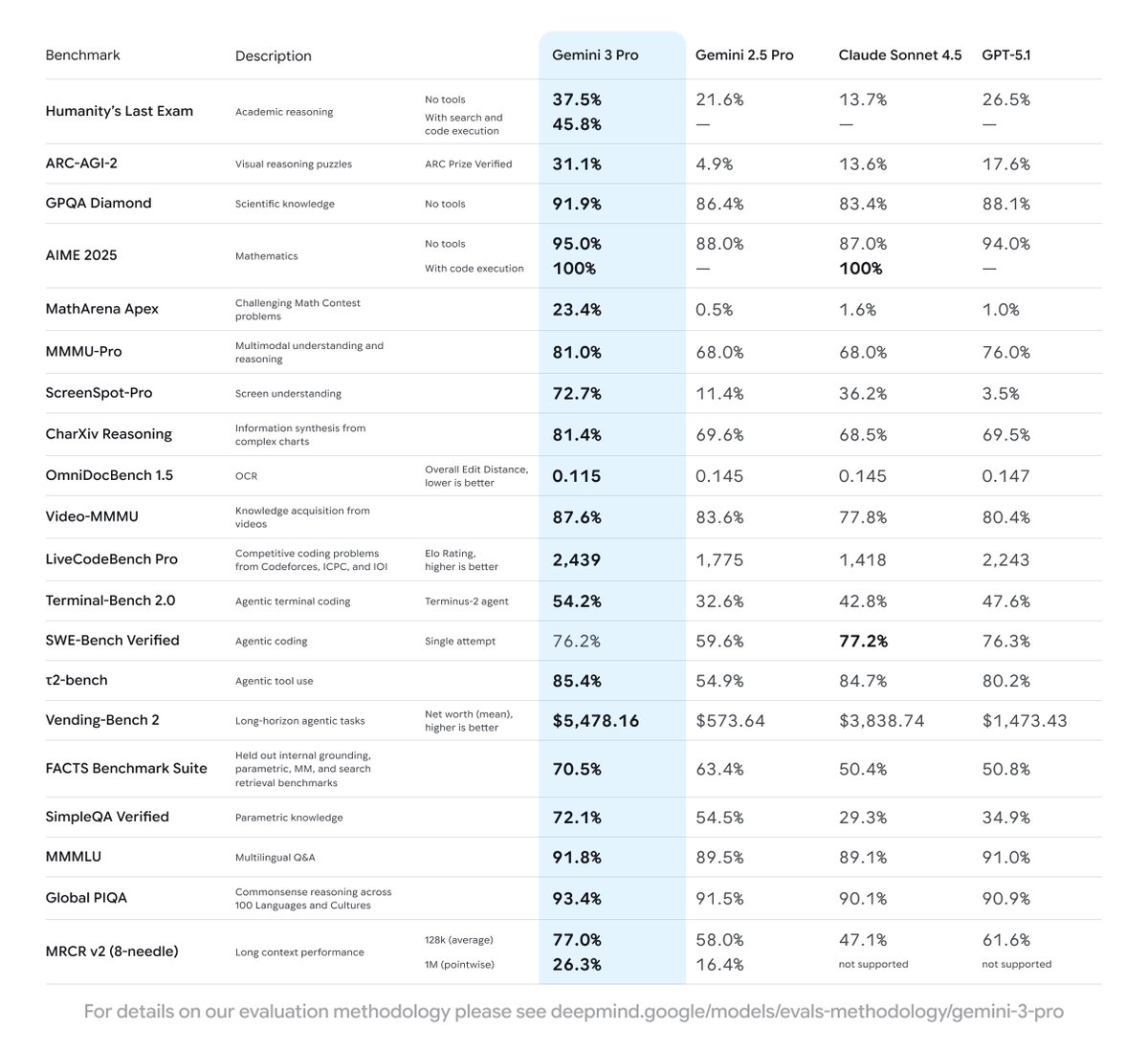

Pre-training: Contra the popular belief that scaling is over—which we discussed in our NeurIPS '25 talk with @ilyasut and @quocleix—the team delivered a drastic jump. The delta between 2.5 and 3.0 is as big as we've ever seen. No walls in sight!

Post-training: Still a total greenfield. There's lots of room for algorithmic progress and improvement, and 3.0 hasn't been an exception, thanks to our stellar team.

Congratulations to the whole team 💙💙💙

Simple: Improving pre-training & post-training 🤯

Pre-training: Contra the popular belief that scaling is over—which we discussed in our NeurIPS '25 talk with @ilyasut and @quocleix—the team delivered a drastic jump. The delta between 2.5 and 3.0 is as big as we've ever seen. No walls in sight!

Post-training: Still a total greenfield. There's lots of room for algorithmic progress and improvement, and 3.0 hasn't been an exception, thanks to our stellar team.

Congratulations to the whole team 💙💙💙

• • •

Missing some Tweet in this thread? You can try to

force a refresh