To mark the 2nd anniversary of LLM360, we are proud to release K2-V2: a 70B reasoning-centric foundation model that delivers frontier capabilities.

As part of our push for "360-open" transparency, we are releasing not only the weights but also the full recipe: data composition, training code, logs, and intermediate checkpoints.

About K2-V2:

🧠 70B params, reasoning-optimized

🧊 512K context window

🔓 "360-Open" (Data, Logs, Checkpoints)

📈 SOTA on olympiad math and complex logic puzzles

We evaluated K2 across general knowledge, STEM, coding, and agentic tool use.

The goal? To show open models need not be smaller, weaker versions of closed ones.

K2 outperforms models of similar size and performs close to larger models.

🔗 Check out the model here: huggingface.co/LLM360/K2-V2

Generously sponsored by @mbzuai.

K2 introduces three "reasoning effort" modes (Low, Medium, and High) that let you trade off cost against capability.

K2-High excels on hard math tasks like AIME 2025 (80.2%) and HMMT, while K2-Medium is the sweet spot for efficiency.

True openness means shipping the artifacts others hide. We release K2 components in three suites 📕

1. Full checkpoints (including mid-training), logs, and code.

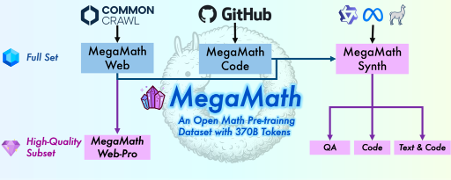

2. Exact data recipes and curation details.

3. The "TxT360-3efforts" SFT data to teach models to think.

The secret sauce is our "Mid-Training" phase.

We didn't just fine-tune: we infused reasoning early by feeding K2 billions of reasoning tokens and extending its context to 512K tokens.

This makes reasoning a native behavior, and K2-High leverages more "thinking tokens" to achieve state-of-the-art results.

Ready to dive in?

📄 Technical Report: llm360.ai/reports/K2_V2_…

🤗 Model & Data: huggingface.co/collections/LL…

📊 Analysis: wandb.ai/llm360/K2-V2

💻 Training Code: github.com/llm360/k2v2_tr…

💻 Evaluation Code: github.com/llm360/eval360

A huge thank you to the OSS ecosystem! @huggingface @wandb @github @lmsysorg @AiEleuther @allen_ai @BigCodeProject @PyTorch @nvidia @cerebras @mbzuai and many more.