CHATGPT-5.2 JUST MADE LEARNING PAYWALL-FREE

People are still buying courses, sitting through playlists, and bookmarking “learn later” links while ChatGPT-5.2 can design a personalized curriculum, teach you in real time, test your understanding, and adapt on the fly

for literally any skill if you know how to prompt it correctly.

Here’s how:

1/ BUILD YOUR “AI DEGREE” IN 30 SECONDS

Pros don’t ask “teach me X”.

They ask for the full roadmap.

Prompt to steal:

“Create a complete learning curriculum for [skill].

Break it into beginner, intermediate, and advanced modules.

Add exercises, real-world projects, weekly goals, and skill checkpoints.”

2/ THE “TEACH ME LIKE I’M LAZY” METHOD

If the roadmap feels heavy, compress it.

Prompt to steal:

“Summarize this topic in 10 key principles.

Then give me examples so I never forget the concepts.”

This is the cheat code for retention.

3/ THE RAPID SKILL DOWNLOAD

Want mastery without fluff?

Tell ChatGPT to cut the nonsense.

Prompt to steal:

“Give me the 20 percent of [topic] that drives 80 percent of results.

Be brutally practical.”

This is the Pareto move.

4/ THE PERSONAL TUTOR MODE

This is where it gets insane.

Prompt to steal:

“Ask me 10 questions to diagnose what I don’t understand about [topic].

Then reteach the weak spots with examples.”

This feels like hiring a $200-per-hour tutor.

5/ THE PROJECT MODE

Skills don’t stick unless you build.

Prompt to steal:

“Give me 5 real-world projects to practice this.

Then guide me step by step through the first one.”

You’ll learn faster than you would with any passive course.

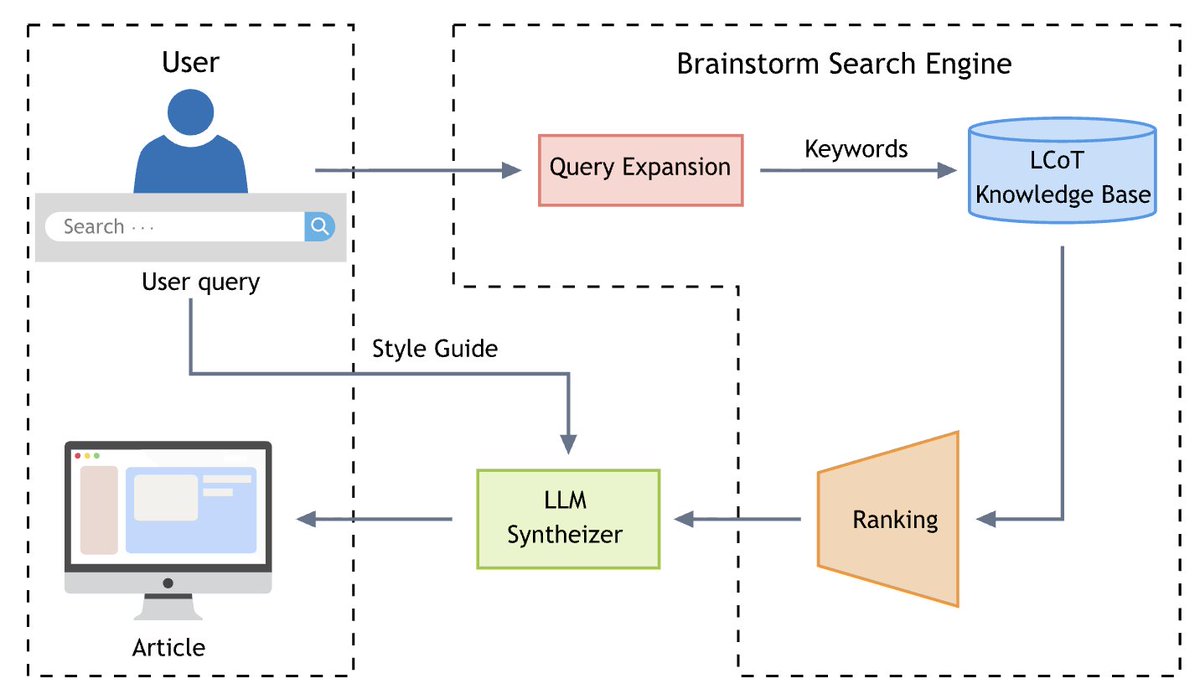

AI makes content creation faster than ever, but it also makes guessing riskier than ever.

If you want to know what your audience will react to before you post, TestFeed gives you instant feedback from AI personas that think like your real users.

It’s the missing step between ideas and impact. Join the waitlist and stop publishing blind.

testfeed.ai
