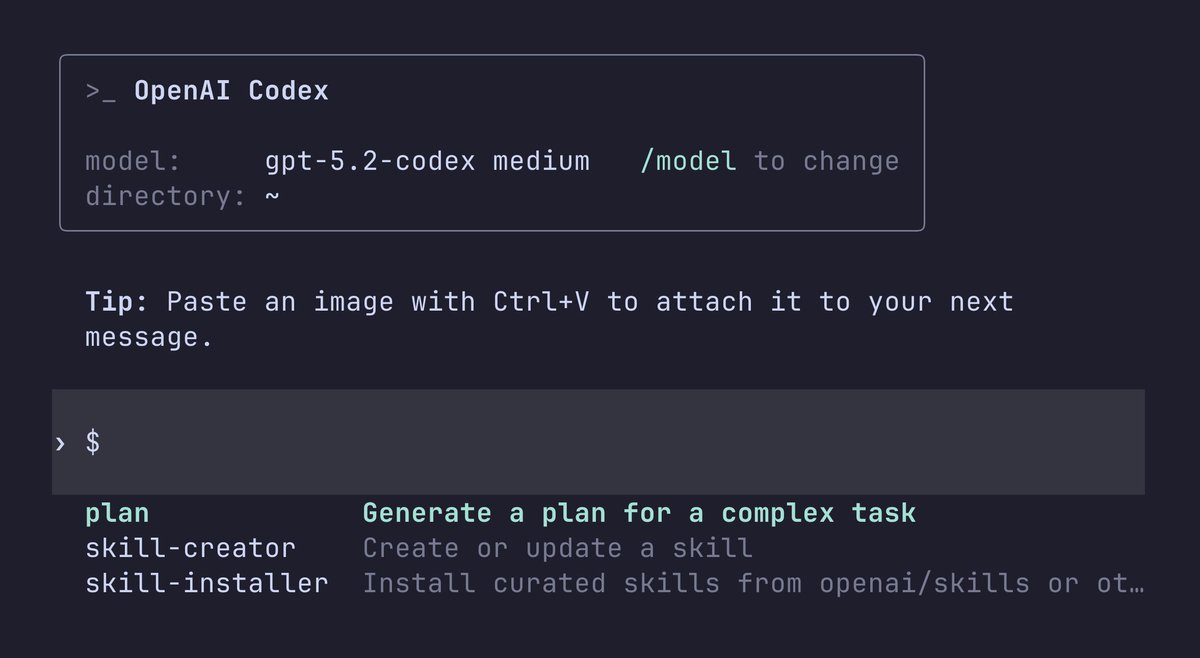

GPT-5.2-Codex is now available in the Responses API. It is the same model available in Codex.

It’s strong at complex long-running tasks like building new features, refactoring code, and finding bugs.

Plus, it’s the most cyber-capable model yet, helping to find and understand codebase vulnerabilities.

platform.openai.com/docs/models/gp…

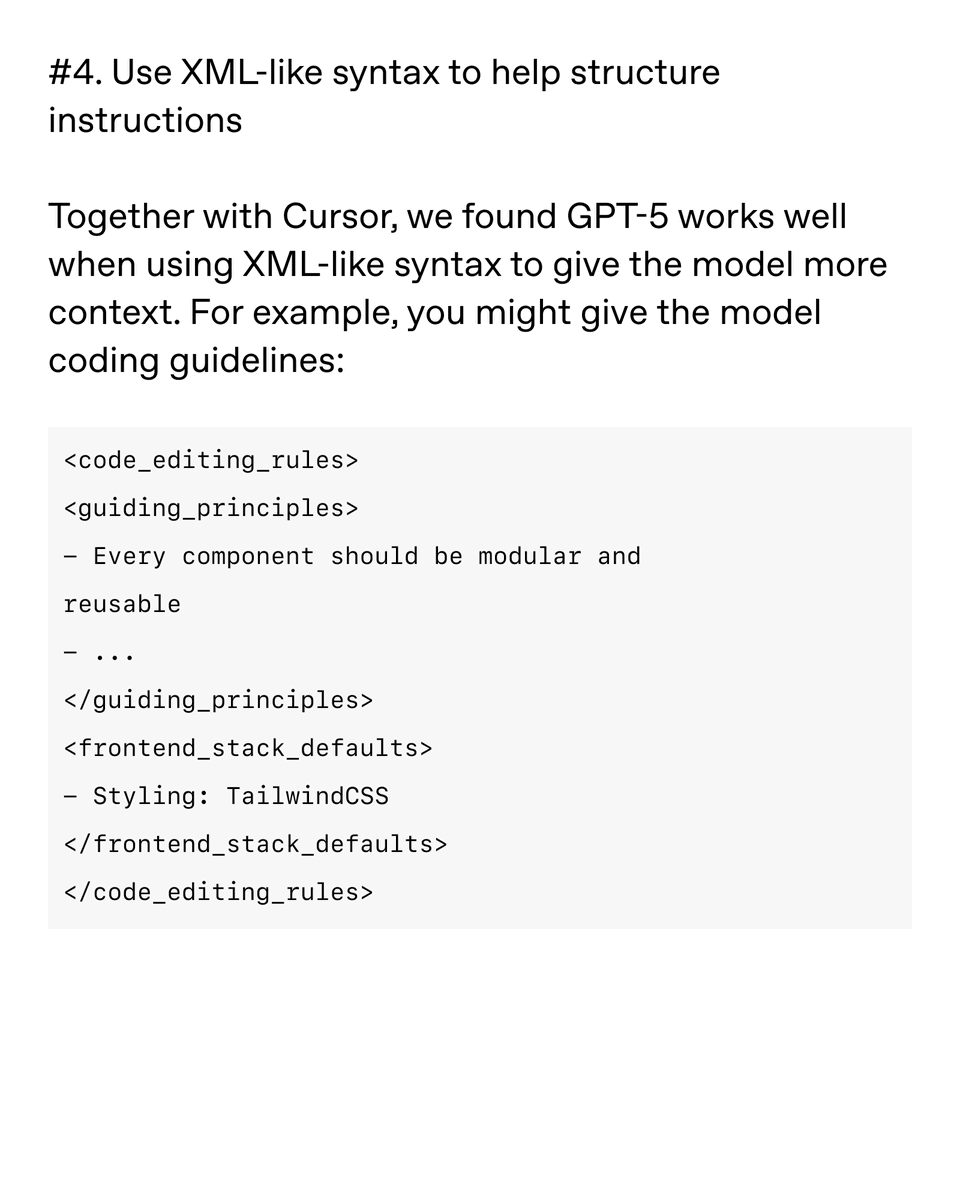

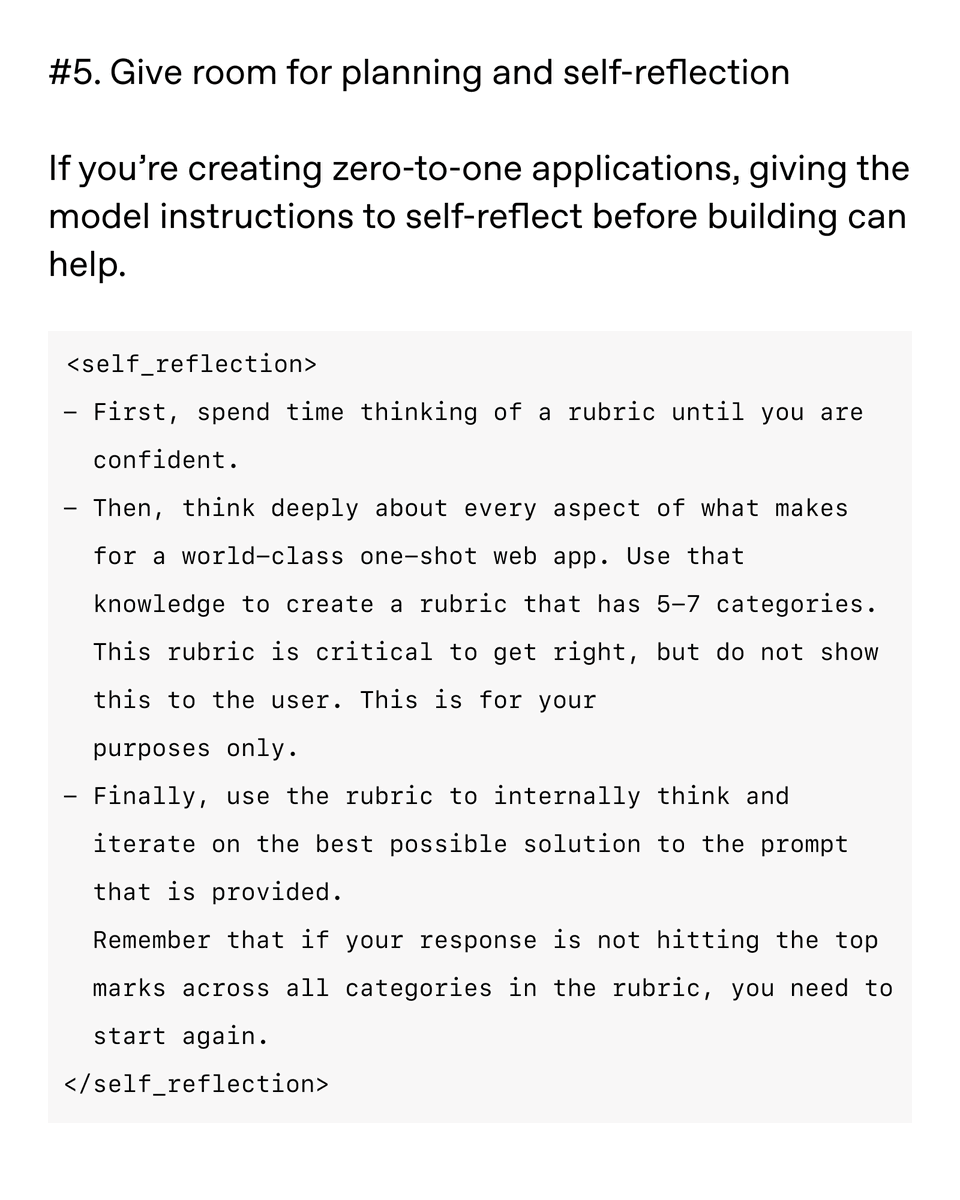

Check out our prompting guide to get the most out of Codex models: cookbook.openai.com/examples/gpt-5…

Here’s what our customers are saying about GPT-5.2-Codex in the API 👇

https://x.com/cursor_ai/status/2011500027945033904?s=20
