tl;dr

spent the weekend debugging why our scraping workers kept crashing 😅

turns out processing everything in parallel was eating up memory and causing OOM errors

switched to sequential processing, added some GC triggers, and bumped up memory limits.

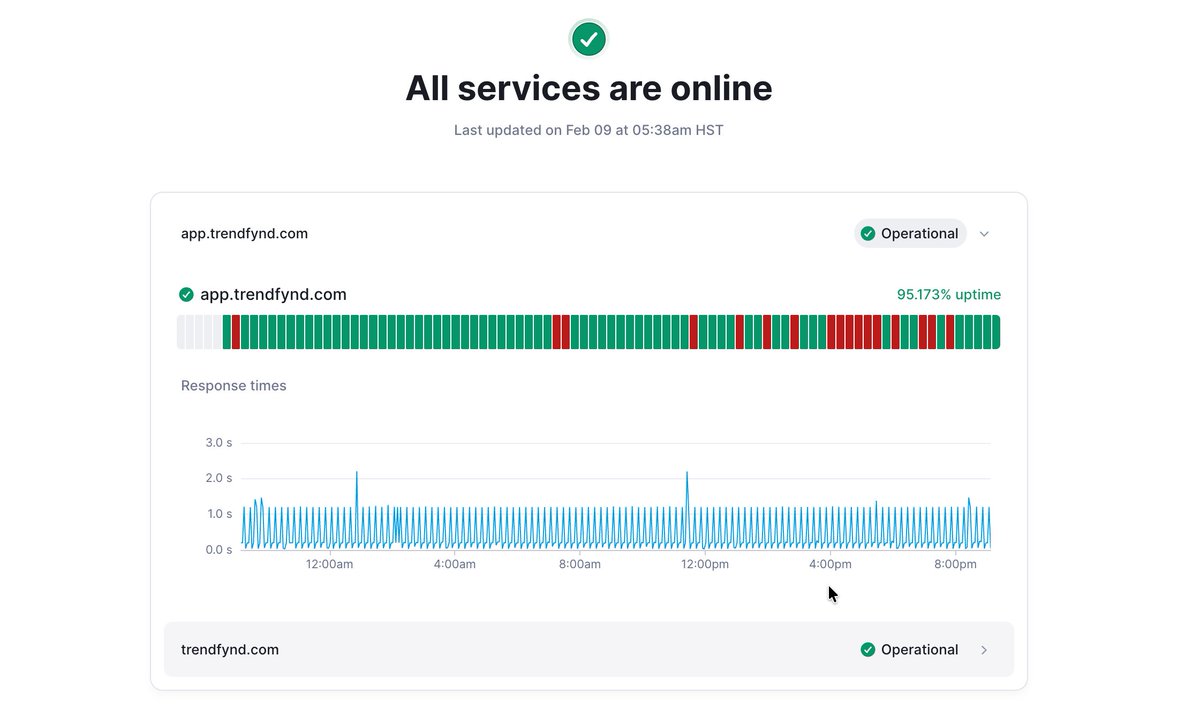

back to 95%+ uptime now 🎉

1. how it was working before:

we were scraping 7 platforms (X, reddit, youtube, tiktok, instagram, threads, pinterest) all at once using Promise.all()

worked fine initially. but as we scaled and sessions got bigger, memory started spiking...
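rough sketch of what that pattern looks like. this is not the actual code: `scrapePlatform`, `Post` and the fake data are made-up stand-ins. the point is just that every platform's results stay in memory until the slowest one finishes.

```typescript
// Hypothetical types and scraper stub; the real scrapers are not shown in the thread.
type Platform = "x" | "reddit" | "youtube" | "tiktok" | "instagram" | "threads" | "pinterest";

interface Post {
  id: string;
  platform: Platform;
}

const PLATFORMS: Platform[] = ["x", "reddit", "youtube", "tiktok", "instagram", "threads", "pinterest"];

// Stand-in for a real scraper: resolves with `count` fake posts.
async function scrapePlatform(platform: Platform, count = 3): Promise<Post[]> {
  const posts: Post[] = [];
  for (let i = 0; i < count; i++) {
    posts.push({ id: `${platform}-${i}`, platform });
  }
  return posts;
}

// All seven scrapes start at once. Promise.all only resolves when the last
// one is done, so all seven result arrays are alive at the same time.
async function scrapeAllParallel(): Promise<Post[]> {
  const results = await Promise.all(PLATFORMS.map((p) => scrapePlatform(p)));
  return ([] as Post[]).concat(...results);
}
```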

2. first attempt to fix:

thought it was just a memory limit issue. bumped from 512MB to 1GB in the dockerfile

workers still crashed 💥

checked logs: "JavaScript heap out of memory" errors everywhere

3. second attempt:

ok maybe it's the batch size? reduced SESSION_BATCH_SIZE from 5 to 2

still crashing. tried adding manual garbage collection triggers

nope. still OOM errors 😤
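for reference, a minimal sketch of a manual GC trigger in Node. `tryGc` is a made-up helper name. `global.gc` only exists when Node is started with `--expose-gc`, and a forced GC can only free objects nothing references anymore, which fits with it not helping here while all the platform data was still being held.

```typescript
// Calls V8's garbage collector if it has been exposed, and reports whether it ran.
// Assumption: the process may or may not have been started with --expose-gc.
function tryGc(): boolean {
  const maybeGc = (globalThis as unknown as { gc?: () => void }).gc;
  if (typeof maybeGc === "function") {
    maybeGc();
    return true;
  }
  // Without --expose-gc there is nothing to call, so this is a no-op.
  return false;
}
```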

4. third attempt:

maybe we're loading too much data? limited existing IDs retrieval to 500 per platform

added retry logic with exponential backoff for transient errors

workers still dying. status page showing red segments every few hours 📉
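a minimal sketch of retry with exponential backoff, in case it's useful. `withRetry`, `maxAttempts` and `baseDelayMs` are hypothetical names and defaults, not the values used in production. a real version would probably also check whether the error is actually transient before retrying.

```typescript
// Resolves after `ms` milliseconds.
function sleep(ms: number): Promise<void> {
  return new Promise((resolve) => setTimeout(resolve, ms));
}

// Runs `task`, retrying on any thrown error. The wait doubles each time:
// baseDelayMs, 2 * baseDelayMs, 4 * baseDelayMs, ...
async function withRetry<T>(
  task: () => Promise<T>,
  maxAttempts = 3,
  baseDelayMs = 500,
): Promise<T> {
  let lastError: unknown;
  for (let attempt = 0; attempt < maxAttempts; attempt++) {
    try {
      return await task();
    } catch (err) {
      lastError = err;
      // No point sleeping after the final failed attempt.
      if (attempt < maxAttempts - 1) {
        await sleep(baseDelayMs * 2 ** attempt);
      }
    }
  }
  throw lastError;
}
```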

5. the realization:

was staring at memory graphs and it hit me - we're holding ALL platform data in memory at once

X + reddit + youtube + tiktok + instagram + threads + pinterest = 💥

even with 1GB, when each platform returns hundreds of posts, it adds up fast

6. the final fix:

switched to sequential processing. one platform at a time, save to DB immediately, then move on

added explicit GC after each platform finishes

kept the 1GB limit but now it's actually enough

added retry logic so transient API errors don't kill the whole job
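the steps above can be sketched roughly like this. again not the real code: `scrapePlatform`, `saveToDb` and the in-memory "DB" are made-up stand-ins, and the GC call only does anything when Node runs with `--expose-gc`. the key difference is that only one platform's posts are in memory at any time.

```typescript
interface Post {
  id: string;
  platform: string;
}

const PLATFORMS = ["x", "reddit", "youtube", "tiktok", "instagram", "threads", "pinterest"];

// Stand-in for a real scraper: resolves with `count` fake posts.
async function scrapePlatform(platform: string, count = 3): Promise<Post[]> {
  const posts: Post[] = [];
  for (let i = 0; i < count; i++) {
    posts.push({ id: `${platform}-${i}`, platform });
  }
  return posts;
}

// Stand-in for the database: only a per-platform count is kept here.
const savedCounts = new Map<string, number>();

async function saveToDb(platform: string, posts: Post[]): Promise<number> {
  savedCounts.set(platform, posts.length);
  return posts.length;
}

// One platform at a time: scrape, save right away, let the array go out of
// scope, then optionally ask V8 to collect before the next platform starts.
async function scrapeAllSequential(): Promise<number> {
  let totalSaved = 0;
  for (const platform of PLATFORMS) {
    const posts = await scrapePlatform(platform);
    totalSaved += await saveToDb(platform, posts);

    const maybeGc = (globalThis as unknown as { gc?: () => void }).gc;
    if (typeof maybeGc === "function") {
      maybeGc();
    }
  }
  return totalSaved;
}
```

the tradeoff is wall-clock time: total runtime is now the sum of all seven scrapes instead of the slowest one.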

7. results:

went from intermittent crashes to 95%+ uptime ✨

response times stabilized (no more 2s spikes)

workers process jobs reliably now

sometimes slower is better. especially when "faster" means your service is down

8. bonus win:

was running on a 13€/month server (16GB RAM) but only using like 2-3GB

after the fix, downgraded to 5€/month (8GB) and it's still more than enough 💰
