I teach cryptography at Johns Hopkins. Mostly on BlueSky these days at https://t.co/GI4QlxYTdk.

There are many problems with these ideas, not the least of which is that we’re asking for-profit companies to collect even more identifying information on users — information that (even if you fully trust the government) could end up breached or sold.

There is malware that targets and compromises phones. There has been malware that targets the Signal application. It’s an app that processes many different media types, and that means there’s almost certainly a vulnerability to be exploited at any given moment in time.

I understand that it uses differential privacy and some fancy cryptography, but I would have loved to know what this is before it was deployed and turned on by default without my consent.

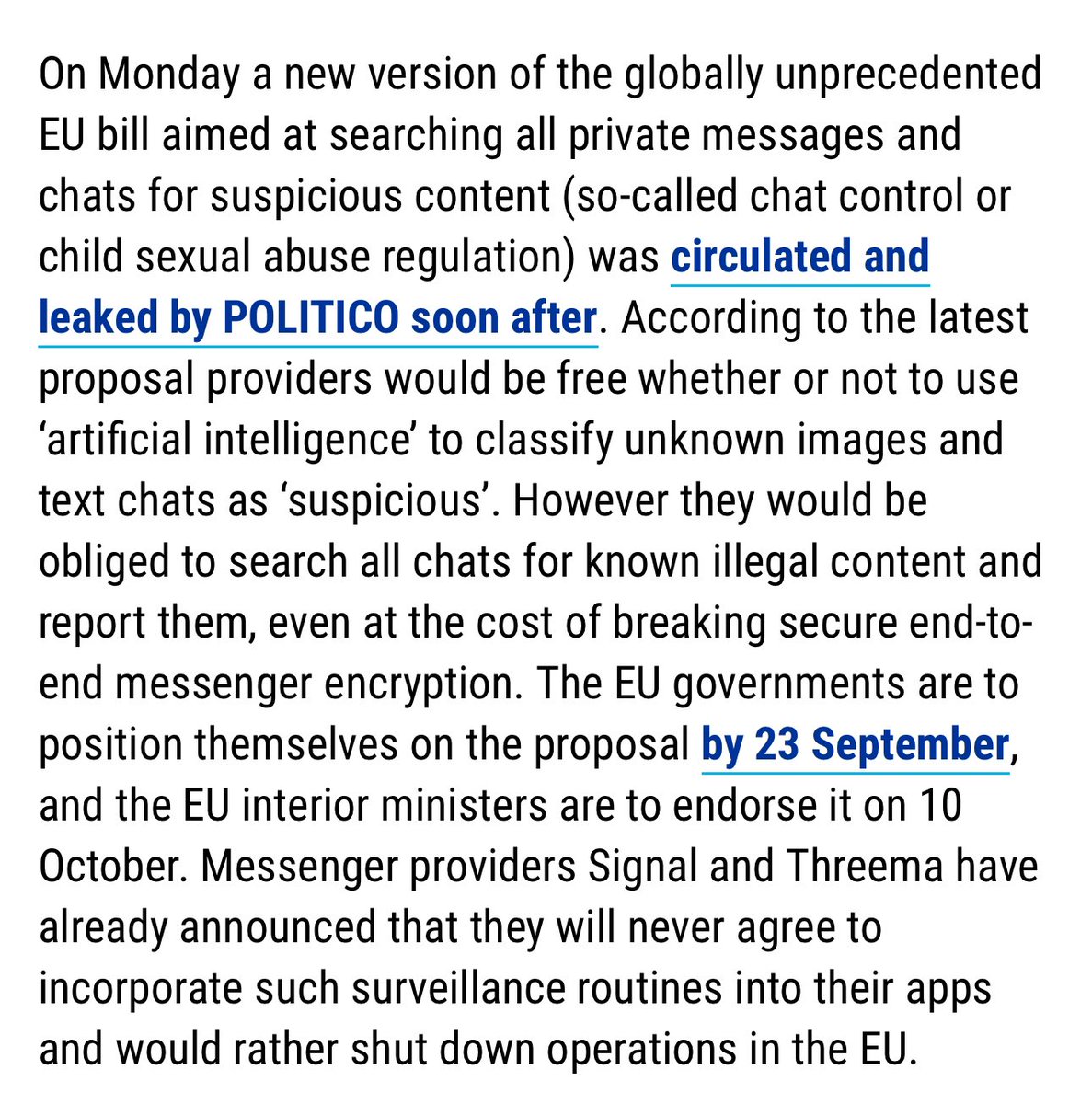

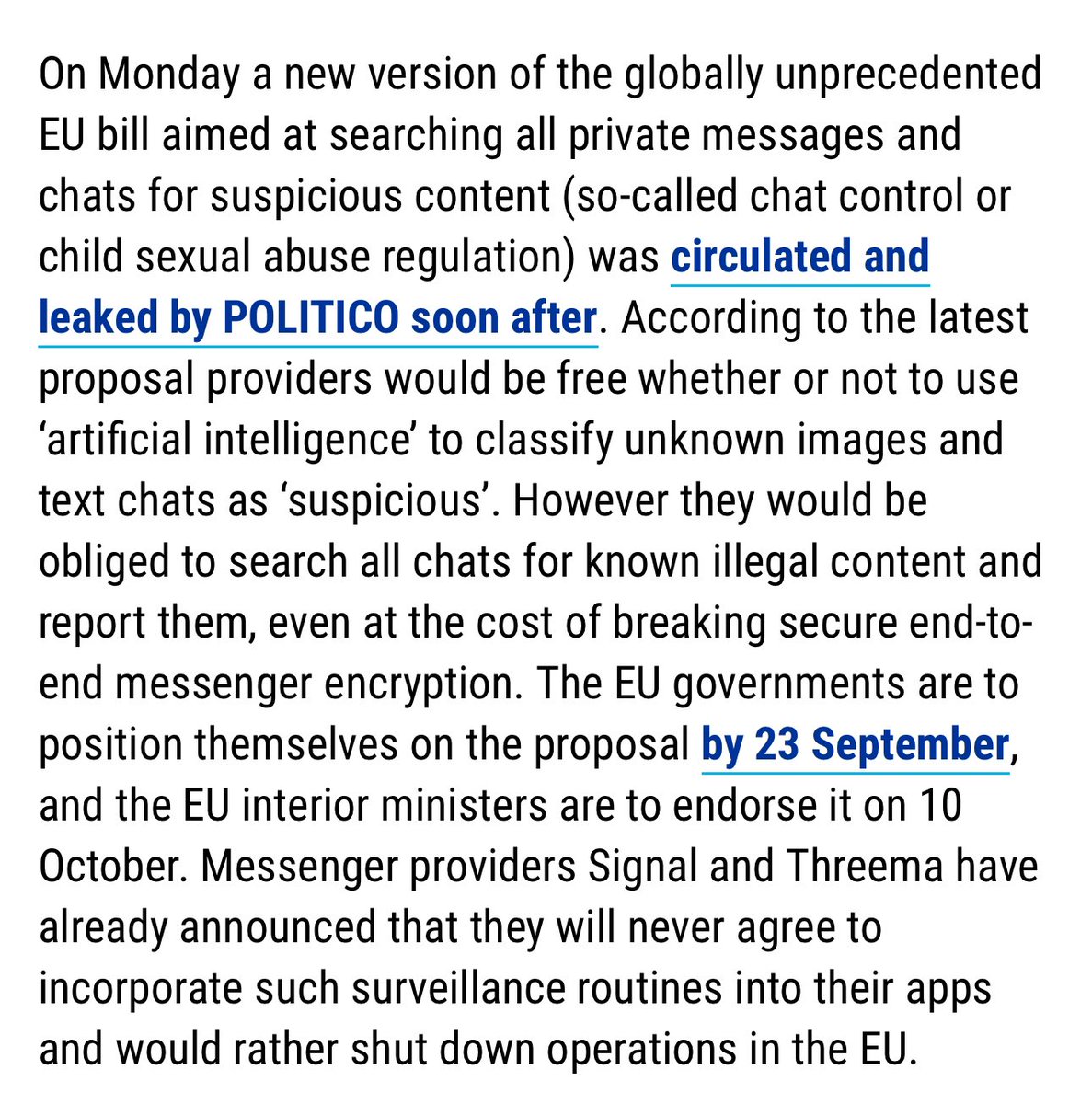

https://twitter.com/privacymatters/status/1834177749679751575

For those who haven’t been paying attention, the EU Council and Commission have been relentlessly pushing a regulation that would break encryption. It died last year, but it’s back again, this time with Hungary in the driver’s seat. And the timelines are short.

https://twitter.com/profwoodward/status/1833486476740334063

In a very short timespan it’s going to be expected that your phone can answer questions about what you did or talked about recently, what restaurants you went to. More capability is going to drive more data access, and people will grant it.

https://twitter.com/lcasdev/status/1810696257137959018

The fact that Google is doing this right in the face of US and EU anti-monopoly efforts either means they’ve made a very sophisticated calculation, or they’re headed towards a major reckoning.

https://twitter.com/mer__edith/status/1808456649054589438

If you’re thinking that some kind of privacy-preserving age verification system is the answer, that’s great! But you need to make sure your goals (easy access for adults, real privacy, no risk of credentials being stolen) actually overlap with the legislators’ goals.