In 2018 the blockchain/decentralization story fell apart. For example, a study of 43 use cases found a 0% success rate. theregister.co.uk/2018/11/30/blo…

Let's talk about some mistaken assumptions about decentralization that led to the blockchain hype, and what we can learn from them.

Let's talk about some mistaken assumptions about decentralization that led to the blockchain hype, and what we can learn from them.

(For context, I think the technology is sound, interesting and important from a CS perspective. That’s why I’ve been researching and teaching it since 2013. bitcoinbook.cs.princeton.edu But I’ve also been speaking/writing about the pitfalls of decentralization for ~10 years.)

Blockchain proponents have a vision of _society_ in which centralized entities are weakened/eliminated. But blockchain tech is a way to build _software_ without centralized servers. Why would the latter enable the former? It’s a leap of logic that’s left unexplained.

There’s a widespread belief in the blockchain world that centralization results from government regulation and/or monopolistic rent-seeking. The truth is more mundane: centralization emerges naturally in a free market due to economies of scale and other efficiencies.

Absence of government intervention doesn’t mean decentralization—often the opposite. For example, after airlines were deregulated in the US in 1978, they quickly transitioned from a point-to-point model to a hub-and-spoke model, which is more centralized. en.wikipedia.org/wiki/Airline_d…

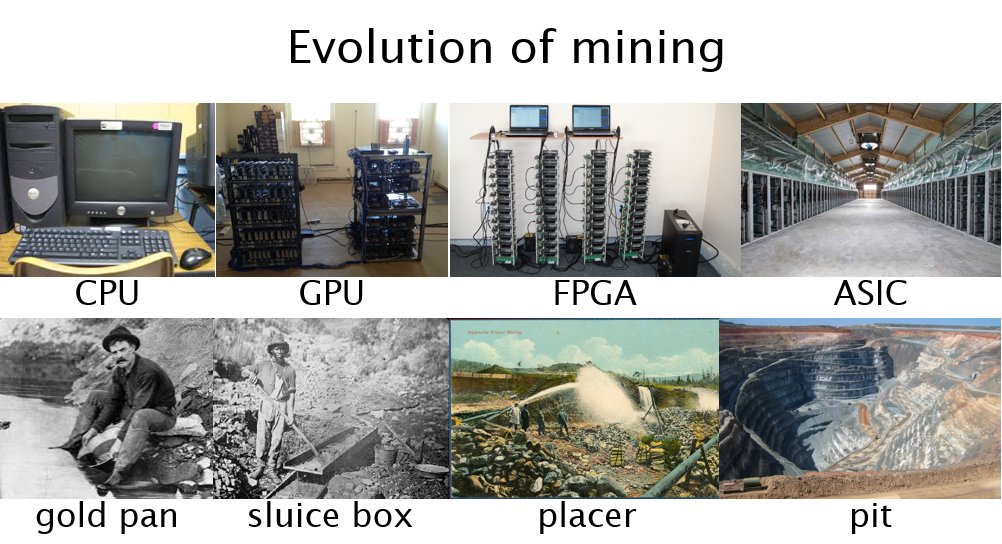

A neat illustration of how centralization arises naturally due to economies of scale is the fact that the evolution of cryptocurrency mining closely parallels gold mining more than a century ago! [By @josephboneau, from our book. bitcoinbook.cs.princeton.edu]

If there were a conspiracy, then blockchains might be a good answer. But, as Steve Jobs realized in the context of network TV, what we’ve got is what people want.

Open platforms can’t win by directly appealing to users on philosophical grounds, or even cost (see Linux on the desktop). Mainstream users have no good reason to directly interact with blockchain technology—or any piece of code—without intermediaries involved.

Openness and decentralization matter to _developers_. To succeed, decentralized platforms must attract developers and foster an ecosystem of services that build on each other and gradually improve in functionality and quality. That’s how the Internet beat Compuserve and AOL.

But this process has to happen organically and will take decades. It can't be rushed with VC/ICO money. What blockchain companies are doing today is as if Internet companies had tried to compete against print newspapers in the 80s. The supporting infrastructure just wasn’t there.

Another area where blockchain projects have faltered is in how they govern themselves. Decentralized doesn’t mean structureless — structureless groups don’t exist. jofreeman.com/joreen/tyranny…

You can reject governments but you can’t opt out of governance.

You can reject governments but you can’t opt out of governance.

Smart contracts are really cool, but they're neither smart nor contracts. freedom-to-tinker.com/2017/02/20/sma…

The fact that they are being proposed as replacements for legal contracts with a straight face is emblematic of the category errors that are rampant in the blockchain space.

The fact that they are being proposed as replacements for legal contracts with a straight face is emblematic of the category errors that are rampant in the blockchain space.

I'll leave you with pointers to two readings that I've found incredibly helpful. The first is the book Master Switch, a deep historical exploration of decades-long cycles of centralization & decentralization. amazon.com/Master-Switch-…

The second is absolutely everything written by Matt Levine on blockchains, smart contracts, and fintech. The most recent is this piece on Robinhood, and how fintech "innovation" is a great way to rediscover why regulations exist. bloomberg.com/opinion/articl…

• • •

Missing some Tweet in this thread? You can try to

force a refresh