Up and at it again, after a short lunch break. @katerowley0 on visual word recognition in deaf readers. #LingCologne

How do deaf readers connect phonology, orthography, and semantics, given that phonology is not directly available to them? #LingCologne

In a lexical identification task, deaf and hearing readers had the same reaction times, but deaf readers were more accurate. #LingCologne

In a second experiment, deaf and hearing readers alike were affected by pseudohomophones (<brane> ~ /brein/ ~ <brain>), suggesting phonological processing of orthographic forms. #LingCologne

Both deaf and hearing readers do phonological processing when reading, but only hearing readers also react to semantic distractors. For hearing readers, phonological activation is automatic; for deaf readers, it is not. #LingCologne

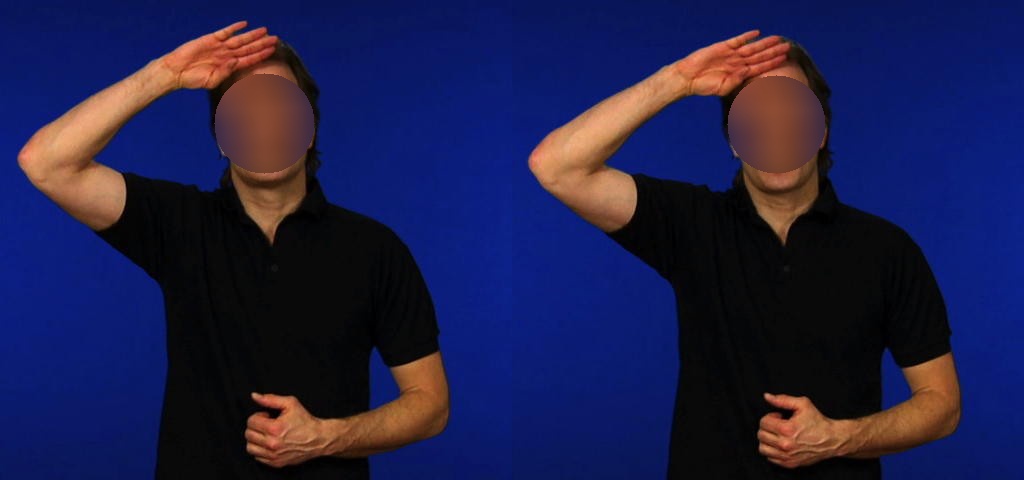

But we normally read sentences, not individual words. Another experiment looked at this, using target vs. phonological, orthographic, and unrelated distractors:

"She decided to cut her {hair, hare, hail, vest} before the wedding."

#LingCologne

Orthographic previews are beneficial for deaf and hearing readers alike, but only hearing readers are affected by phonological preview. #LingCologne

The overall conclusion is that deaf readers rely less on phonological processing. They can use it when forced to, but it is not automatic as it is in hearing readers. Lip reading may be one way deaf individuals originally gain access to phonology. #LingCologne
