Keynote 2 of day 2 at #LingCologne: @ozyurek_a (@GestureSignLab) on the integration of hand gestures in spoken language as multimodal communication.

Traditional approaches to language research have focused on language as:

— spoken/written (not visual)

— arbitrary (not iconic)

— discrete/categorical

— unichannel (not multimodal)

Luckily, more recent work has broadened the perspective wrt the above points.

#LingCologne

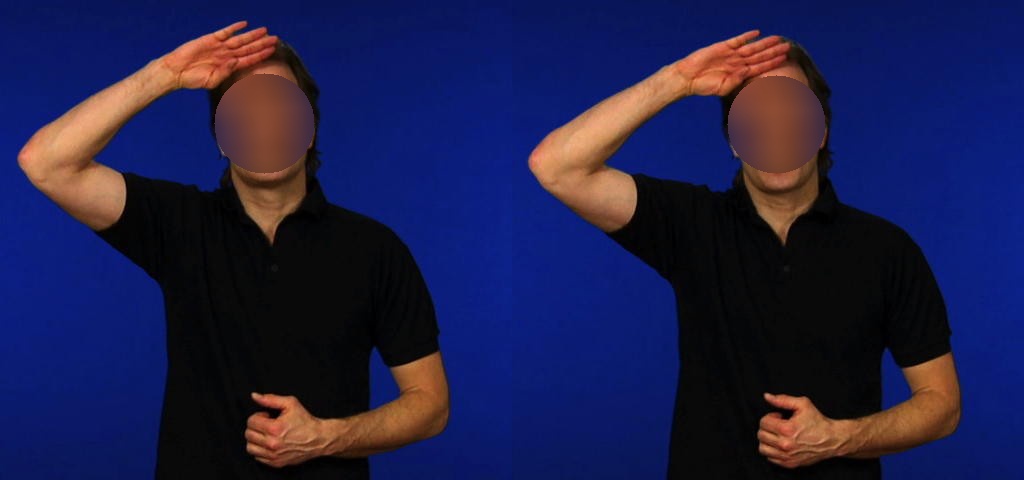

If gesture is only simulated action, gesturing should look the same regardless of one's language. However, the interface model predicts that gesture is integrated with language, such that properties of one's language will influence gesture strategies. #LingCologne

Looking at speech and gesture descriptions across languages, it was indeed found that properties of verb phrase structure influence the gesture strategies employed (including types of iconicity). Linguistic structure shapes iconicity even in blind gesturers! #LingCologne

Gestures clearly enhance communication. Differences are seen in the amount of co-speech gesture in descriptions directed toward adults versus children. #LingCologne

Also, as @lindadrijvers showed in her recent PhD work, gestures enhance communication when speech is degraded. #LingCologne

ru.nl/mlc/news-event…

And the match or mismatch between speech and gestures may produce a type of McGurk effect, affecting comprehension. #LingCologne

("Want a loo, sir?")

Concludes the talk by acknowledging all the collaborators of the @GestureSignLab at @Radboud_Uni and @MPI_NL. Definitely a lot of great work coming out of this research group!

#LingCologne
