Ardavan Guity & @jahochcam (with a pre-recorded intro) on ethics concerning language documentation of #signlanguages in #Deaf communities. #wfd2019

A very interesting story here is Ardavan being involved in language documentation as an informant at first, but becoming more and more involved, gaining experience and skills along the way (by interaction, collaboration, sharing), ending up a researcher! #wfd2019

Involving the #Deaf community and individual participants is crucial. Communication, information, consent. This enriches the collaboration between researchers and informants and gives agency and empowerment to language users! #wfd2019

Confession: When I first started researching #signlanguages, I was a terrible signer, slightly uncomfortable in signing environments, and quite bad at informing and involving Deaf participants and even colleagues...

... But I was fortunate enough to be working at @TspLingSU, a Deaf-led, Deaf-majority research and teaching group, with supportive colleagues who gave me the room and experience to improve both my signing and my research outreach...

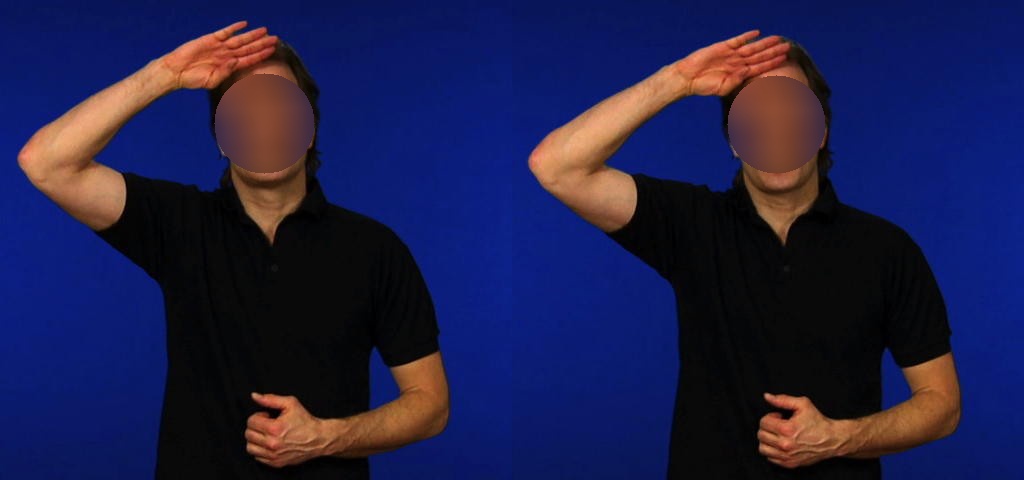

... I still have a lot to improve, which is apparent from this presentation, but I've been encouraged (and accepted) to give signed presentations of my research (in both 🇸🇪 and 🇳🇱), and have written popsci summaries of my work (in Deaf and general pubs).

I want to be an ally and do the best I can in this field, so I am grateful to my Deaf friends and colleagues for support and encouragement, but also welcome criticism (when needed). ❤️🤟
