Very excited about Hope Morgan et al.'s talk on phonological (wiggle-fingers) complexity and frequency distribution! Relevant to much of my own work! #TISLR13

Phonological complexity can be defined by 1) markedness (frequency/economy) and 2) structure (quantity).

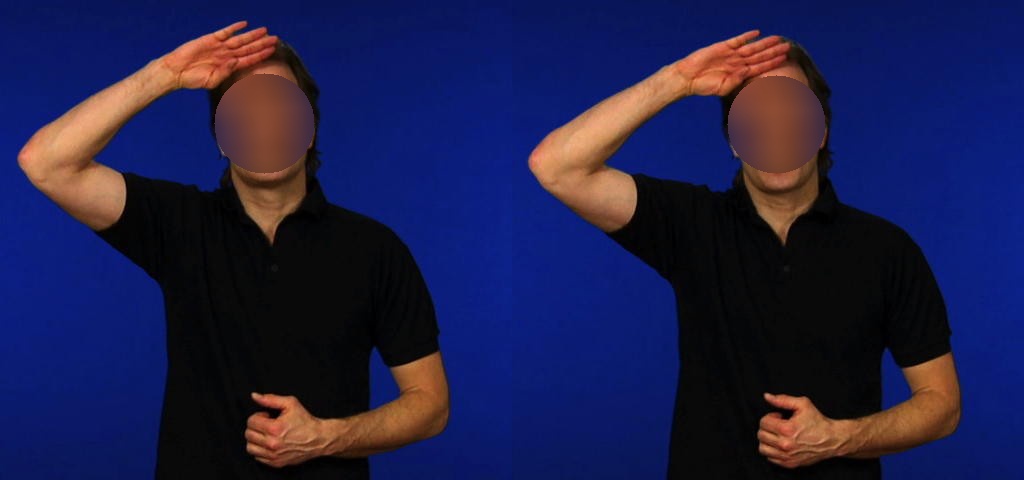

E.g.:

Some handshapes are easier (1). Two-handed signs with simultaneous movements have more structure (2).

#TISLR13

Research question: is there a maximum limit of complexity that can be packed into a sign? #TISLR13

Research question: does phonological complexity change over time (language age as a factor)? #TISLR13

Research question: how does frequency relate to phonological complexity?

We already know that lexical frequency correlates inversely with sign duration (citing Börstell et al. 2016, 2019 😍)

#TISLR13

A scoring matrix defines the phonological complexity index. #TISLR13

Results: Generally, signs are not very phonologically complex (the distribution peaks in the lower range of the scale). #TISLR13

Results: the distribution across the complexity scale correlates with the age of the language. Do #signlanguages become more phonologically complex over time?

#TISLR13

Plenty of future directions! Community size, neighborhood complexity, etc.

As for me (Calle), I'mma head over to Hope during the ☕ break and suggest a paper together. Hashtag networking hashtag I love all you TISLRs!

#TISLR13