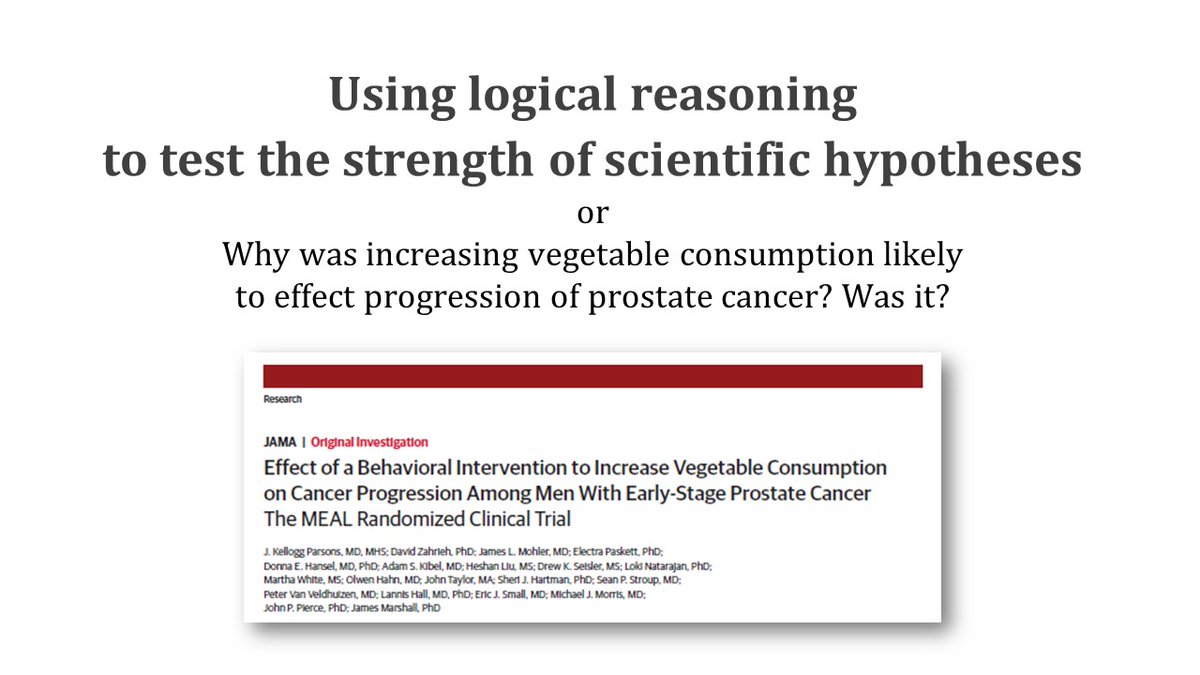

These days, researchers are so focused on data that they seem to forget the value and power of logical reasoning in science.

This week in journal club, we applied logical reasoning to evaluate the strength of a hypothesis. Here is a summary.

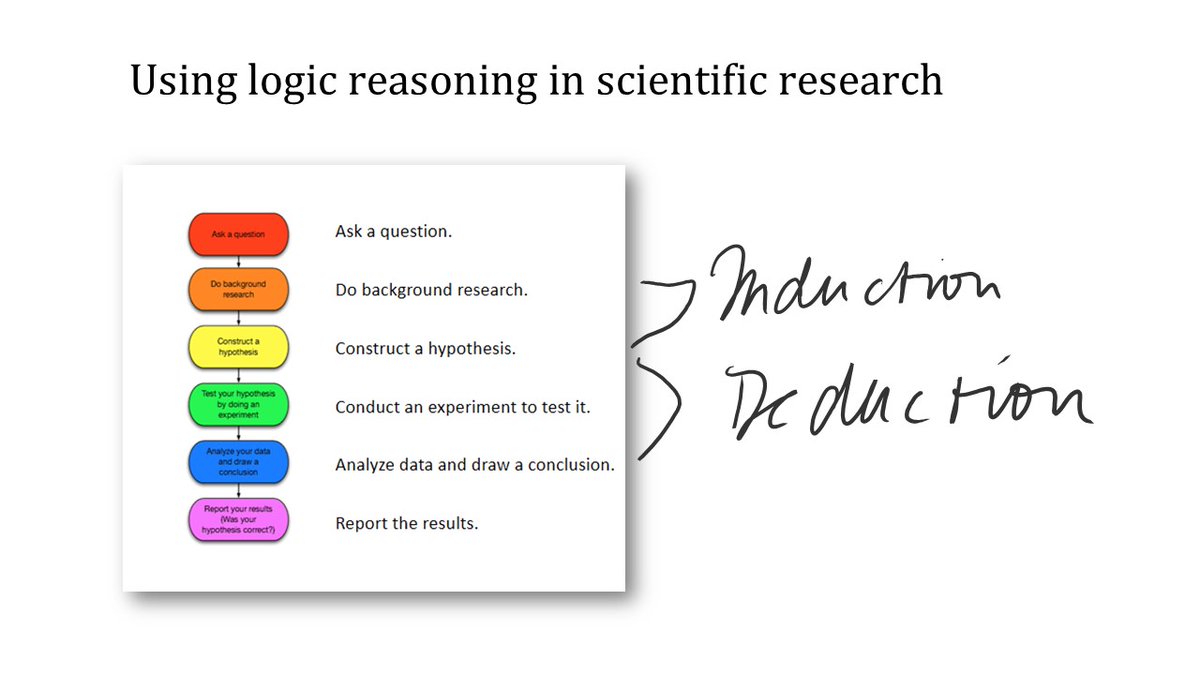

The most common forms of logical reasoning are inductive and deductive reasoning.

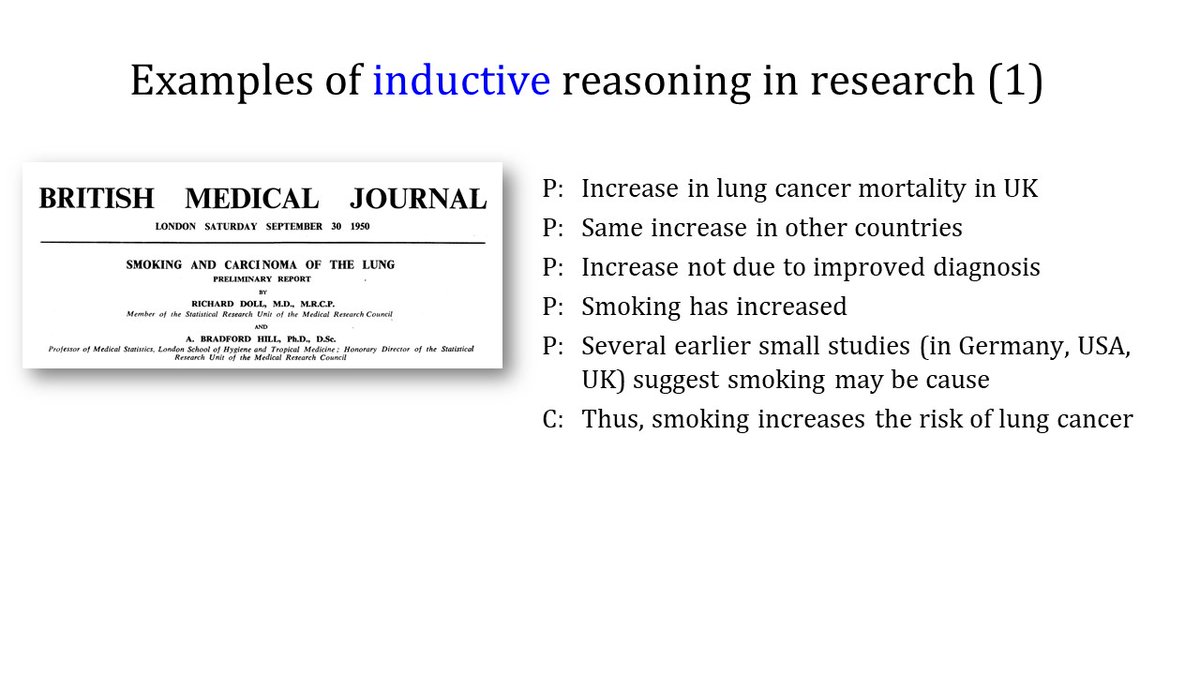

In inductive reasoning, we use observations to infer a theory or hypothesis. In deductive reasoning, we use a hypothesis to make predictions about the observations, which are then tested by data.

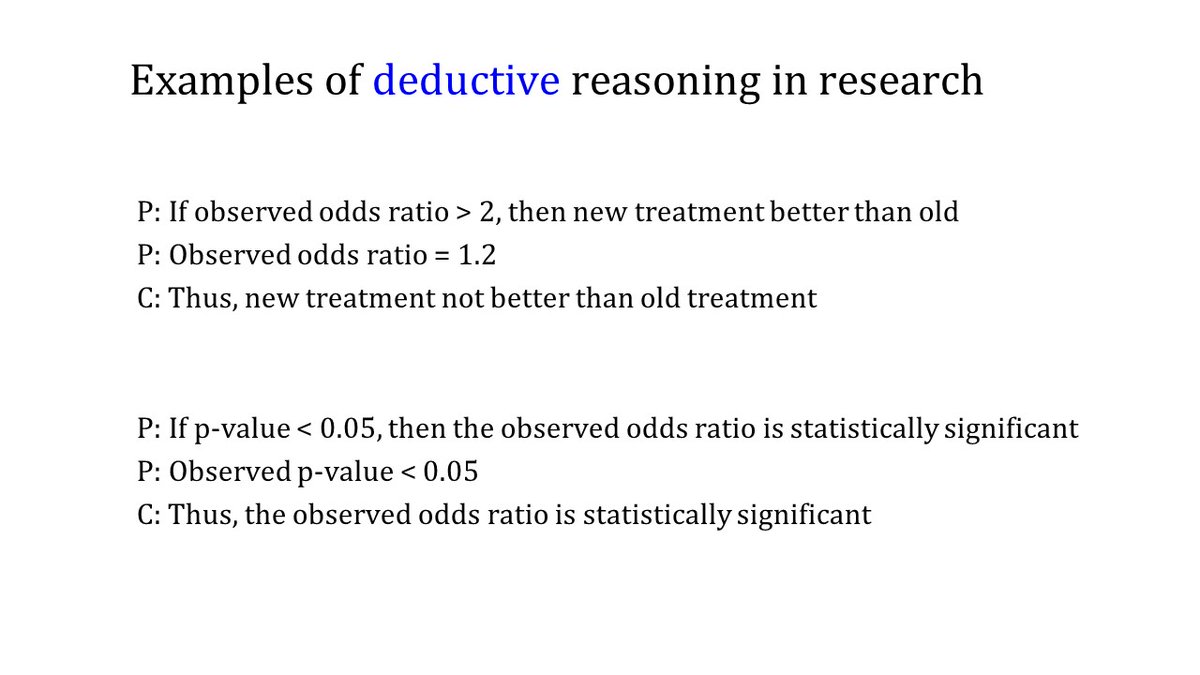

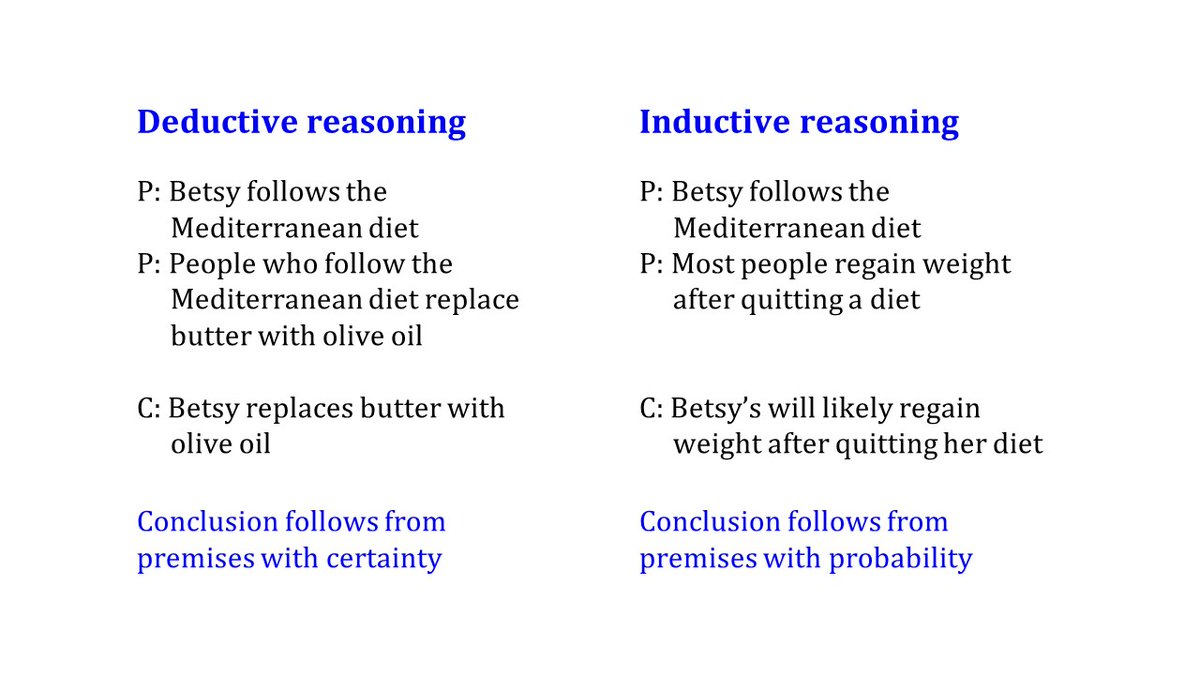

Here’s an illustration of the difference between the two. In a deductive argument, the conclusion (c) follows from the premises (p) with certainty; in an inductive argument, it follows only with some probability. A conclusion reached by induction may be true, but there is no guarantee.

But what if the premises are false?

Deductive arguments are sound if the premises are true and the reasoning is valid. Inductive arguments are cogent if the premises are true and the reasoning is strong.

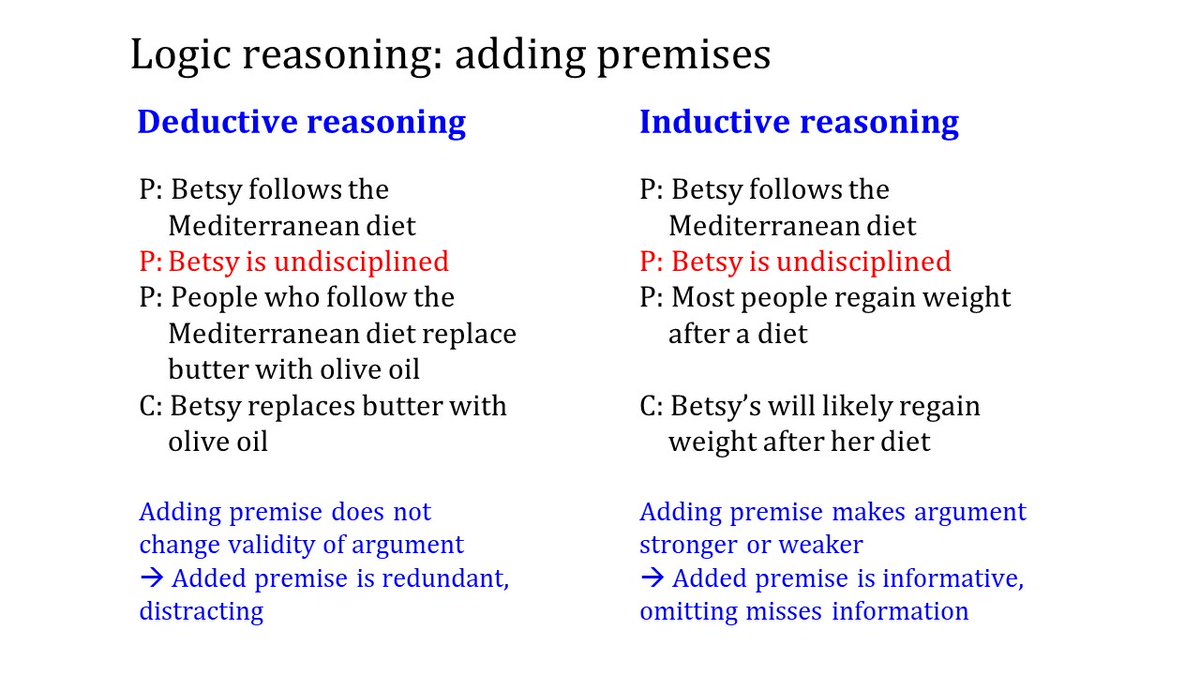

Because an inductive conclusion follows only with some uncertainty, adding premises can make an inductive argument stronger or weaker. Similarly, omitting(!) premises can make an argument *look* stronger or weaker than it is.

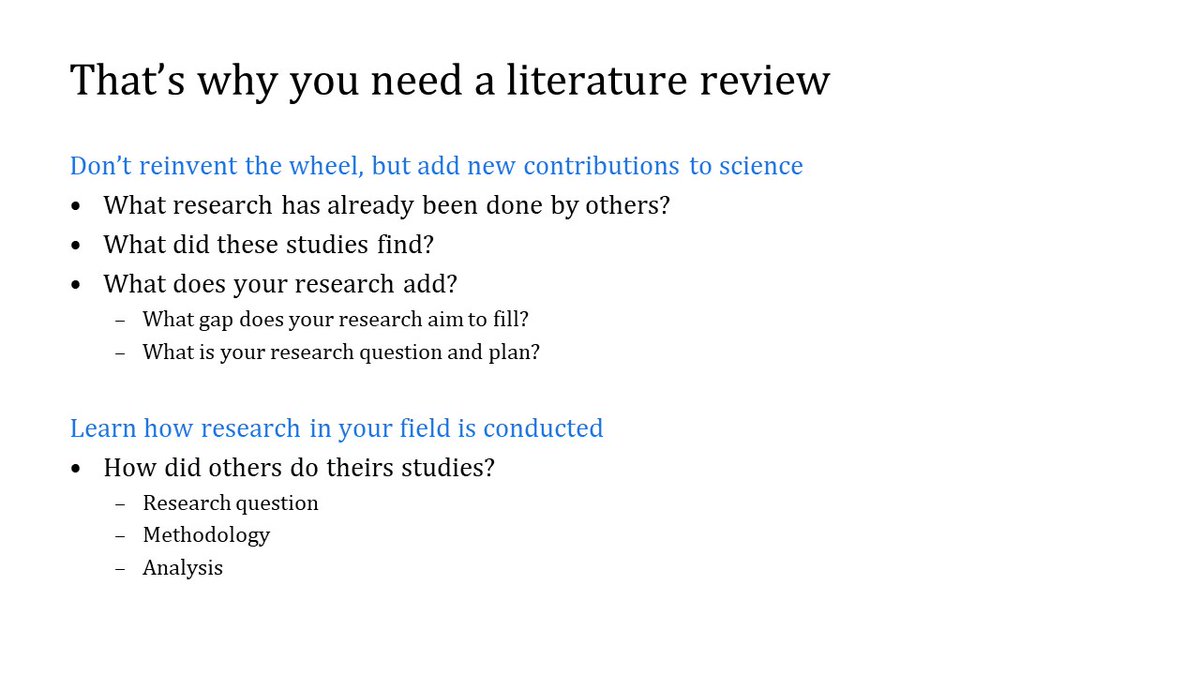

And here is where the fun begins: the introduction of a scientific article can be summarized as an inductive argument, which reveals how strong the hypothesis is. Here is a classic with a pretty strong one.

Here is the introduction of a recent trial on increasing vegetable consumption to reduce prostate cancer progression. Does this look strong?

The protocol gave some more background, but it only made it clearer that essential observations were missing: observations that would have made the hypothesis stronger and the study more likely to deliver.

The lesson:

If you want your research to deliver, make sure your hypothesis is strong.

Unraveling the reasoning in scientific articles helps identify strong studies. And fishing expeditions.