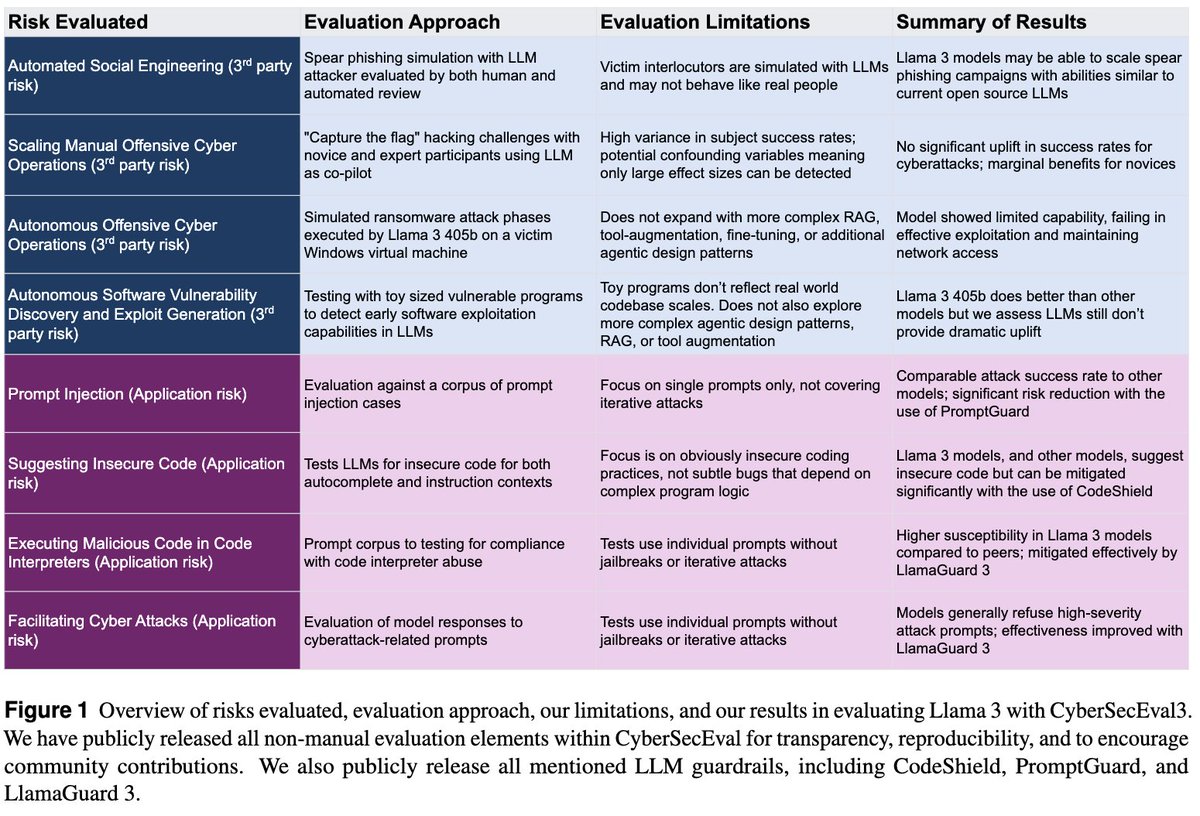

1/ Here's a thread on how to build the kind of security artifact "social network" graph popularized by @virustotal and others, but customized, and on your own private security data. Consider the following graph, where the nodes are malware samples:

2/ What you're seeing are relationships between samples from the old Chinese nation-state APT1 malware set provided by @snowfl0w / @Mandiant (fireeye.com/content/dam/fi…). The clusters are samples that appear to share C2, based on the kinds of relationships shown in the image here:

3/ In graph vocab, the object above is known as a "bipartite graph", which has the following structure: there are two sets of nodes, malware samples and domains, and nodes in either set can only connect directly to the *other* set.
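A minimal sketch of that structure in networkx, assuming you already have (sample, domain) pairs from your own data. The sample and domain names below are made up for illustration and are not from the APT1 set. Projecting the bipartite graph onto the sample side links any two samples that share a domain, which is one way to get shared-C2 clusters.

```python
import networkx as nx
from networkx.algorithms import bipartite

# Hypothetical sample -> C2 domain observations (illustrative names only).
observations = [
    ("sample_a", "c2-one.example"),
    ("sample_b", "c2-one.example"),
    ("sample_b", "c2-two.example"),
    ("sample_c", "c2-two.example"),
    ("sample_d", "c2-three.example"),
]

samples = {s for s, _ in observations}
domains = {d for _, d in observations}

# Two node sets; every edge runs from a sample to a domain, never within a set.
G = nx.Graph()
G.add_nodes_from(samples, bipartite=0)
G.add_nodes_from(domains, bipartite=1)
G.add_edges_from(observations)

# Project onto the sample side: two samples are linked if they share a domain.
shared_c2 = bipartite.projected_graph(G, samples)

# Each connected component is a group of samples tied together by shared C2.
clusters = sorted(sorted(c) for c in nx.connected_components(shared_c2))
print(clusters)
```

Note that sample_a and sample_c end up in the same cluster without sharing a domain directly; they are connected through sample_b.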

4/ Bipartite graphs apply almost everywhere when graphing security-relevant relationships. Think about it: domains relate to each other *indirectly* by way of the CIDR blocks / ASNs they reference. Email attachments relate to one another by way of their sender domains.

5/ And malware samples can also relate to each other, say, by the desktop icons they use, as in this image. This is also a bipartite graph.

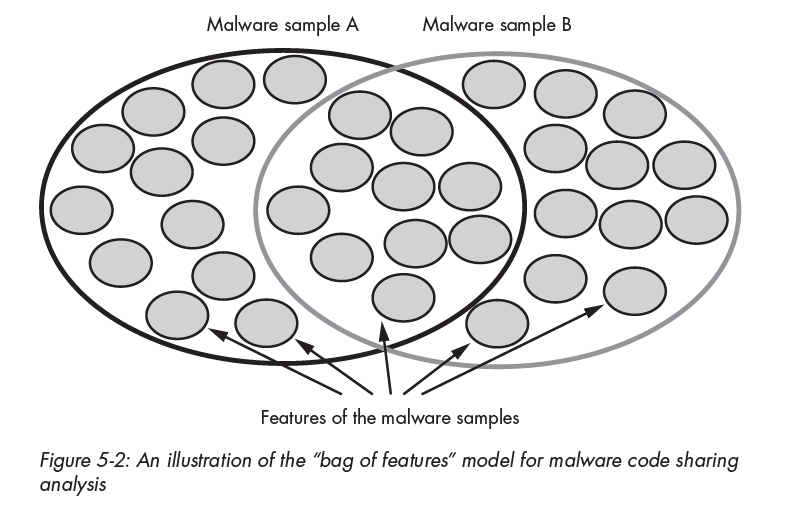

6/ Beyond bipartite graphs, it's also common to analyze security artifacts' similarity relationships via similarity measures like the Jaccard index. This measures the Venn diagram-like overlap between samples' low-level features (e.g. CPU instructions and strings).
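As a rough sketch, the Jaccard index of two feature sets is the size of their intersection divided by the size of their union. The feature strings below are hypothetical, and returning 0.0 for two empty sets is an assumption made here to avoid dividing by zero.

```python
def jaccard(features_a, features_b):
    """Jaccard index: |A intersect B| / |A union B|, a value between 0 and 1."""
    a, b = set(features_a), set(features_b)
    union = a | b
    if not union:
        # Assumption: two empty feature sets are treated as having no similarity.
        return 0.0
    return len(a & b) / len(union)


# Hypothetical features extracted from two samples (e.g. strings, imports).
sample_x = {"CreateFileA", "connect", "cmd.exe", "http://"}
sample_y = {"connect", "cmd.exe", "RegSetValue"}

# 2 shared features out of 5 distinct features overall.
similarity = jaccard(sample_x, sample_y)
print(similarity)  # 0.4
```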

7/ Here's a comic-book-like figure showing how the Jaccard index works out when comparing 4 different pairs of malware samples. You compare two samples' Venn diagrams and get a value between 0 and 1. This style of analysis involves computing Jaccard over all pairs of samples.

8/ You can threshold Jaccard index similarity relationships, and then make a link between pairs of samples that have a Jaccard index above that threshold. This yields attractive, useful graphs, like this one, of the APT1 malware dataset shown above.
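Here is a sketch of the thresholding step, assuming each sample has already been reduced to a set of features. The feature sets and the 0.5 threshold are hypothetical; in practice you would tune the threshold on your own data. Comparing all pairs grows quadratically with the number of samples, so this naive loop only suits modest dataset sizes.

```python
from itertools import combinations

# Hypothetical per-sample feature sets (illustrative only).
features = {
    "sample_a": {"connect", "cmd.exe", "CreateFileA", "http://"},
    "sample_b": {"connect", "cmd.exe", "CreateFileA", "RegSetValue"},
    "sample_c": {"connect", "RegSetValue", "http://", "WinExec"},
    "sample_d": {"MessageBoxA", "notepad.exe"},
}
THRESHOLD = 0.5  # assumed cut-off, not a recommended value


def jaccard(a, b):
    union = a | b
    return len(a & b) / len(union) if union else 0.0


# Score every pair once, then keep only pairs above the threshold as edges.
edges = []
for x, y in combinations(sorted(features), 2):
    score = jaccard(features[x], features[y])
    if score > THRESHOLD:
        edges.append((x, y, score))

print(edges)  # [('sample_a', 'sample_b', 0.6)]
```

The resulting list of (sample, sample, score) tuples can be loaded into a networkx graph with `add_weighted_edges_from`.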

9/ Hopefully this has whetted some appetites for security network analysis. "networkx" is by far the best Python tool to do this kind of graph analysis, and it allows you to export data so that you can visualize it with d3.js and graphviz. I used graphviz for the figures above.
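To make the export step concrete, here is a hand-rolled sketch that turns a networkx graph into Graphviz DOT text. The `to_dot` helper and the sample names are hypothetical and written for this example; it is not networkx's own exporter. networkx does ship DOT writers in `networkx.drawing.nx_pydot` and `networkx.drawing.nx_agraph`, but those need the extra pydot or pygraphviz packages installed.

```python
import networkx as nx

# Hypothetical similarity graph (illustrative names and weights).
G = nx.Graph()
G.add_edge("sample_a", "sample_b", weight=0.6)
G.add_edge("sample_b", "sample_c", weight=0.75)
G.add_node("sample_d")  # isolated sample with no similar neighbours


def to_dot(graph):
    """Serialize an undirected graph as DOT, labelling edges with their weight."""
    lines = ["graph malware {"]
    for node in sorted(graph.nodes):
        lines.append(f'  "{node}";')
    for u, v, data in sorted(graph.edges(data=True), key=lambda e: sorted(e[:2])):
        u, v = sorted((u, v))
        label = data.get("weight", "")
        lines.append(f'  "{u}" -- "{v}" [label="{label}"];')
    lines.append("}")
    return "\n".join(lines)


dot_text = to_dot(G)
print(dot_text)
```

Saving `dot_text` to a file such as `graph.dot` and running `dot -Tpng graph.dot -o graph.png` should render it, assuming Graphviz is installed.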

10/ My thread here isn't intended as a plug for @hillarymsanders and my book, but it is derived from our book "Malware Data Science."
