AI+cybersecurity at Meta; past lives in academic history, labor / community organizing, classical/jazz piano, hacking scene


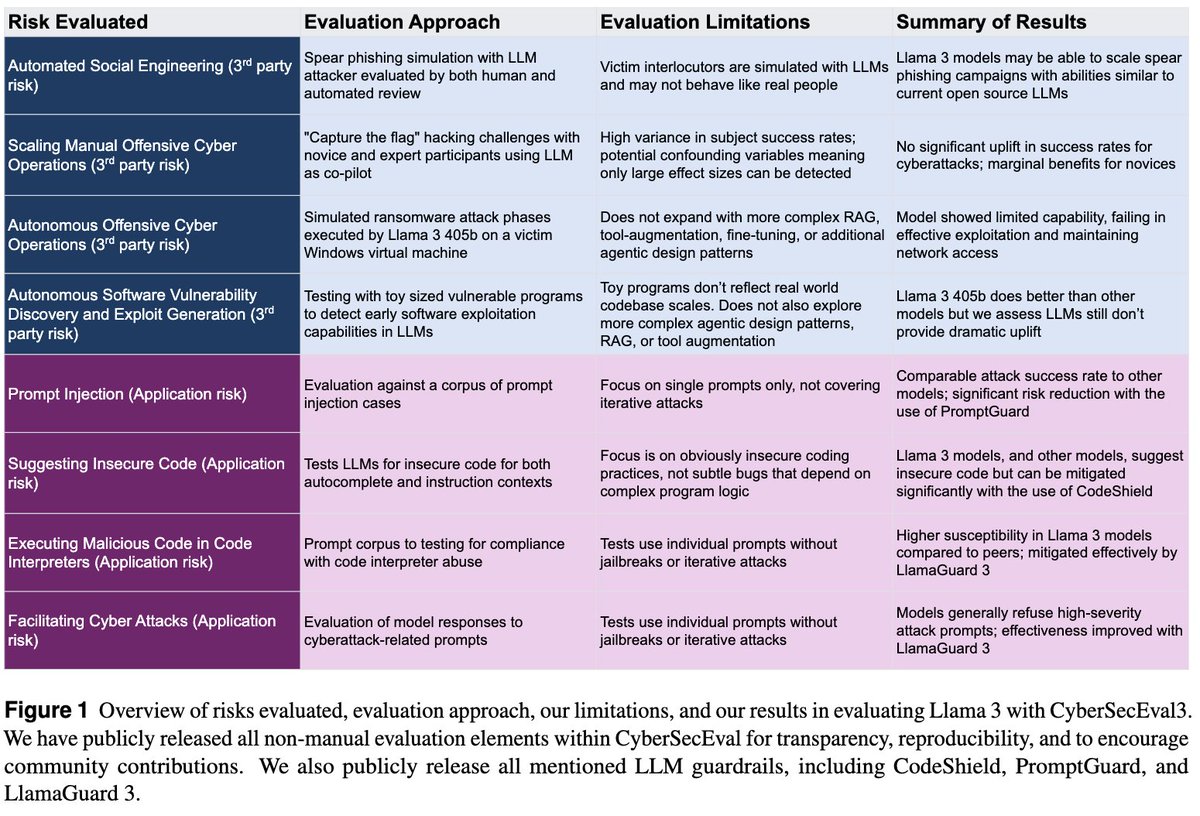

CyberSecEval 3 extends our previous work with several new test suites: a cyber attack range to measure LLM offensive capabilities, social engineering capability evaluations, and visual prompt injection tests.

And I guess it meant more: admitting that on many of these projects I could have seen the end before I started, had I been honest about the hard limits of 2010s-era machine learning.

2/ What you're seeing are relationships between samples from the old Chinese nation-state APT1 malware set provided by @snowfl0w / @Mandiant (fireeye.com/content/dam/fi…). The clusters are samples that appear to share C2 (command-and-control) infrastructure, based on the kinds of relationships shown in the image here:
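The thread doesn't say exactly how the clusters were computed. As a minimal sketch, assuming each sample has already been reduced to a set of C2 indicators (domains or IPs), you can group samples that share any indicator, directly or through a chain of other samples, with a union-find. The function names, sample IDs, and indicators below are hypothetical placeholders, not data from the APT1 set.

```python
from collections import defaultdict


class UnionFind:
    """Disjoint-set forest with path halving."""

    def __init__(self):
        self.parent = {}

    def find(self, x):
        self.parent.setdefault(x, x)  # register unseen items as their own root
        while self.parent[x] != x:
            self.parent[x] = self.parent[self.parent[x]]  # path halving
            x = self.parent[x]
        return x

    def union(self, a, b):
        ra, rb = self.find(a), self.find(b)
        if ra != rb:
            self.parent[rb] = ra


def cluster_by_shared_c2(sample_to_c2):
    """Group samples that share any C2 indicator, directly or transitively.

    sample_to_c2: hypothetical mapping of sample ID -> set of C2 indicators.
    Returns a sorted list of sorted clusters (lists of sample IDs).
    """
    uf = UnionFind()
    first_seen = {}  # C2 indicator -> first sample observed using it
    for sample, indicators in sample_to_c2.items():
        uf.find(sample)  # samples with no indicators still form a cluster
        for ind in indicators:
            if ind in first_seen:
                uf.union(first_seen[ind], sample)
            else:
                first_seen[ind] = sample
    clusters = defaultdict(set)
    for sample in sample_to_c2:
        clusters[uf.find(sample)].add(sample)
    return sorted((sorted(c) for c in clusters.values()), key=lambda c: c[0])


# Hypothetical example data (documentation-only domains and IPs).
example_samples = {
    "sample_a": {"c2.example.com"},
    "sample_b": {"c2.example.com", "198.51.100.7"},
    "sample_c": {"198.51.100.7"},
    "sample_d": {"other.example.net"},
}

if __name__ == "__main__":
    print(cluster_by_shared_c2(example_samples))
```

Here sample_a and sample_c never share an indicator directly, but both share one with sample_b, so all three land in one cluster while sample_d stays on its own. That transitive linking is the main reason to reach for union-find rather than plain pairwise matching.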
