Which should one decide first?

Choose the endpoint, then calculate the sample size

or

Calculate the sample size, then choose the endpoint

They weren't sure what to look for, so they went for something that didn't need any understanding of medicine.

Even the janitor knows that if you get home alive within 14 days, that is better than still being stuck in hospital (or dead) at 14 days.

"My mom was really ill. She was in hospital for a month."

"Ah, my uncle did better. He was home in a week."

They don't need to know any test results, decide which tests are more important, or by how much.

But you are not allowed to decide afterwards. Why?

This is not financial pressure - it is desire to avoid embarrassment.

This way, when a trial is negative, they can proclaim innocence to the company.

- embarrassment of trial being neutral

- wanting to give patients hope

- not wanting to have to sack researchers dependent on research money, in turn dependent on positive results

Which way?

The endpoint of "gone home at 14d" is not unbiased in an unblinded trial, since staff (and patients) may be happier about discharge home when they have the feeling of protection, even if that feeling comes from Francisoglimeprivir and is therefore useless.

When we don't know much about the disease, it is hard for scientists to decide what to use as the endpoint.

But scientistS (plural) have a language of discourse and need to persuade each other, and that is what makes science good.

Let's think about numbers. How many binary digits are in this number, which is the binary representation of 7:

1 1 1

"Did the patient get discharged, alive, by 14 days? (Yes/No)"

Dichotomizing produces simplicity, but at the cost of throwing away lots of stuff, which you might regret.

To store the ANSWER for a "yes/no" question, you only need ONE binary digit in total. You don't need one for Yes and one for No. It is enough to store 1 for Yes, and 0 for No.
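For anyone who wants to check the counting, here is a minimal Python sketch. The function name `encode_answer` is made up for illustration; it only shows the storage point, not the information-theory point.

```python
# How many binary digits does the number 7 need?
seven = 7
print(bin(seven))          # the binary representation of 7
print(seven.bit_length())  # the number of binary digits in it

# A Yes/No answer has only two possible values, so a single binary digit
# is enough: store 1 for Yes and 0 for No.
def encode_answer(discharged_alive_by_14d: bool) -> int:
    return 1 if discharged_alive_by_14d else 0

print(encode_answer(True), encode_answer(False))
```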

(I know this is not true in the Information Theory sense, with entropy & stuff, and I know @mshunshin and @DrJHoward will beat me up tomorrow, but you get the point.)

The @mshunshin formula for this is as follows.

What is the proportion of bad events (e.g. death-or-still-in-hospital-at-14d)?

Let's say that is 25%, or 1-in-4. We call that a RARITY factor of 4.

Suppose you prevent 20% of deaths, or 1-in-5 *of deaths*.

We call that a WEAKNESS factor of 5. (Why "weakness"? Because 100 means you only prevent one death out of 100 deaths).

Rarity 4 (since one in 4 die if untreated) and

Weakness 5 (since 1 in 5 of 25 deaths are prevented, bringing deaths down to 20)

30 * 4^2 * 5

That makes me feel a bit better. I knew the formula was a bit rough-and-ready, but I was quietly puzzling why it was so far out, when the official proper formula was giving 2922.
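The 2922 figure can be reproduced with the textbook two-proportion sample size formula. This is only a sketch under assumptions the thread does not state: two-sided alpha of 0.05, 90% power, equal arms, and the unpooled variance form. The function name `total_sample_size` is hypothetical.

```python
import math
from statistics import NormalDist

def total_sample_size(p_control, p_treated, alpha=0.05, power=0.90):
    """Total patients (both arms) to detect p_control vs p_treated.

    Assumed settings: two-sided alpha, equal allocation, unpooled variance.
    """
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)
    z_beta = NormalDist().inv_cdf(power)
    variance_sum = p_control * (1 - p_control) + p_treated * (1 - p_treated)
    per_arm = (z_alpha + z_beta) ** 2 * variance_sum / (p_control - p_treated) ** 2
    return 2 * math.ceil(per_arm)

# Bad events fall from 25% (rarity 4) to 20% (weakness 5).
formal = total_sample_size(0.25, 0.20)

# The expression exactly as written in the thread, evaluated literally.
rough_as_written = 30 * 4**2 * 5

print(formal, rough_as_written)
```

With 80% power instead of 90%, the same function gives a smaller number, so the power assumption matters when comparing against any rule of thumb.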

A. Hoping for a ludicrously large effect size.

B. Weren't thinking explicitly about the sample size. Just wanted to start something, and then update once they had more information. So started with a sample size they were sure they could fund.

This is good, because it means that a patient who is benefitted (but doesn't cross the 14-day discharge threshold) can still contribute useful information about the benefit.

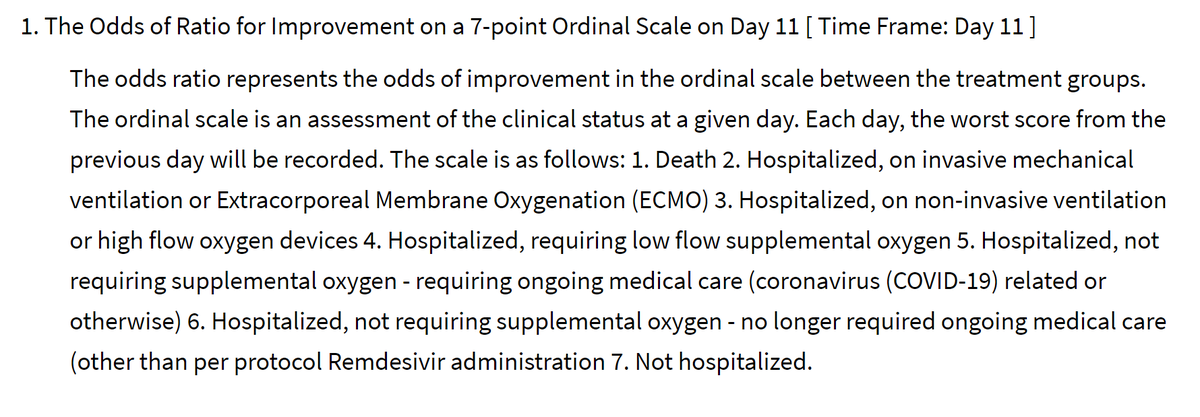

At 11 days (this is a non-round number so presumably they looked at a variety of days to pick the most informative day, i.e. where there was the greatest spread of outcomes in patients as a whole, but blinded to allocation arm), each patient is in one of these states:

Dead

Intubated/ECMO

CPAP or high flow O2

Low flow O2

Hosp, needing things other than O2

Hosp, not needing anything

Home

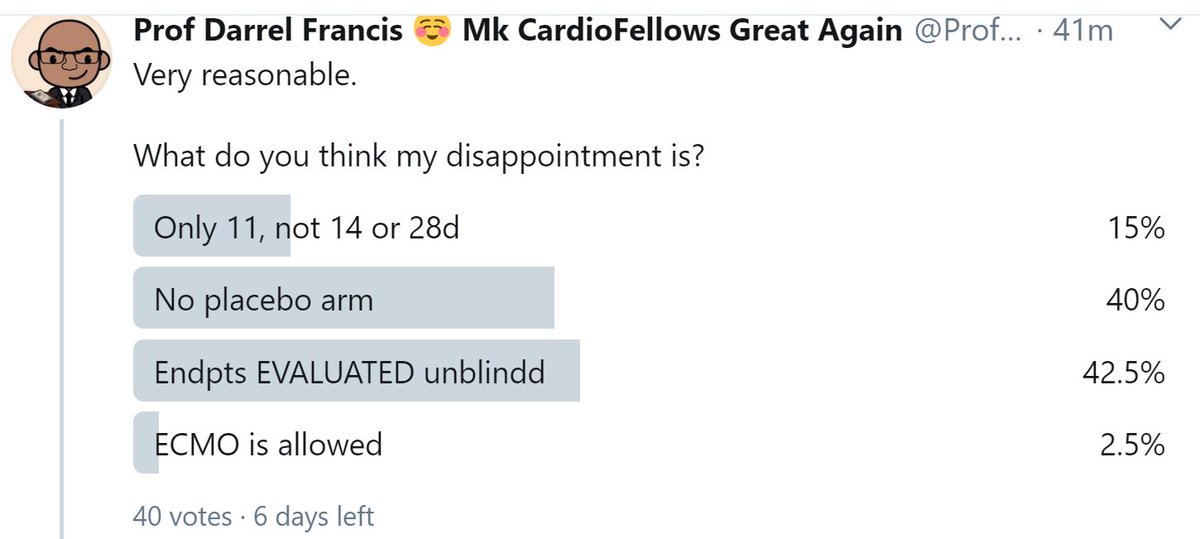

What do you think my disappointment is?

However I am unable to comment on the quote Darren has sent, because (a) I can't understand what it means.

Water is critical.

Having the same endpoint across all Covid trials is not critical. We can make plenty of progress with each trial deciding for itself and pre-specifying.

And at the end of the day,

(a) they probably contain more than one person and so more than one opinion (otherwise what is the point of having more than one person)

They review the records of the patient and rate the outcome, without knowledge of the treatment allocation.

Does this make the trial blinded?

Sadly, two funding peer reviewers, one of ORBITA and one of ORBITA-2, completely failed to understand this, despite our valiant attempts to explain.

They "killed" it because they said other PCI trials were blinded too!

But what are they looking at?

Death is death, but everything else is a measure of what their clinical staff decided to give that patient at that time, in the full knowledge of whether they were taking the drug, and likely the tacit belief that the drug is probably beneficial.

Otherwise I think the whole thing is excellent, and I wish them, and the patients, the best of luck!

What will the status of the other 80% be, along the spectrum of 6 remaining states?

With that 365-day timepoint picture in mind, what is the benefit of choosing the 7-level endpoint to evaluate that outcome, rather than a dichotomous outcome?

1000 peeps go in da hosp

At 3 months (MONTHS) [M O N T H S],

200 are dead.

Dat meens 800 still live

Where are dose 800 peeps? (At 3 months, or wun year?)

So the 7-level fanciness turns to crap.

It has a bunch of people (say 20%) in the worst tier, "dead".

And all of the rest in the best, "home".

What we should have done is picked the timepoint when people were spread out across the levels of the endpoint.

Right in the middle of the bad times.

11 days sounds good to me!
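One rough way to put a number on "spread out across the levels" is the entropy of the distribution of patients over the 7 states. This is a sketch, not the trialists' method. The late-timepoint split uses the 20% dead and 80% home figures from above; the evenly spread case is an idealised upper limit, not real data.

```python
import math

def entropy_bits(proportions):
    """Shannon entropy, in bits, of a distribution across outcome levels."""
    return -sum(p * math.log2(p) for p in proportions if p > 0)

# Order: dead, intubated/ECMO, CPAP or high flow, low flow O2,
# in hospital needing other things, in hospital needing nothing, home.
late_timepoint = [0.20, 0, 0, 0, 0, 0, 0.80]  # everyone is dead or home
evenly_spread = [1 / 7] * 7                   # idealised best case

print(round(entropy_bits(late_timepoint), 2))  # 0.72
print(round(entropy_bits(evenly_spread), 2))   # 2.81
```

At the late timepoint the 7-level endpoint carries no more than a dichotomous one, because only two of its levels are ever used.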

Remember that thing is a 45 degree triangle.

In a 45 degree triangle the short sides are Square-root-of-2 times smaller than the long side.

And you know the long side is two units: you just told me.

A short side (blue) is: 2 divided by the square root of 2, which is just the square root of 2.

Add the blue and the black heights.

(I am using "s" to mean square root of 2)

Now square that ratio. This will give you the fraction UNSHADED.

(1 + s) squared is what? You can use paper if you want

1*1 + 2*1*s + s*s

which is 1 + 2s + 2

= 3 + 2s

And (2 + s) squared is

2*2 + 2*2*s + s*s

which is 4 + 4s + 2

or 6 + 4s

So now you know the proportion UNSHADED, which is

3 + 2s

divided by

6 + 4s

i.e. exactly one half, because 6 + 4s is simply twice 3 + 2s.
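If you don't trust the algebra, the ratio can be checked numerically. A small sketch, using `s` for the square root of 2 as above; the variable names are just for illustration.

```python
import math

s = math.sqrt(2)

numerator = (1 + s) ** 2    # should match 3 + 2s
denominator = (2 + s) ** 2  # should match 6 + 4s

print(math.isclose(numerator, 3 + 2 * s))
print(math.isclose(denominator, 6 + 4 * s))
print(numerator / denominator)  # the proportion UNSHADED
```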

POSTSCRIPT 2

If that causes false-positive bias, then what happens to the p value when you make the trial bigger?

Instead, it makes the problem worse.

8-(

The p-value is telling you how easily such an extreme result could happen through simply the play of CHANCE.

then it is not saying whether the reduction of events is due to the DRUG or due to BIAS.

p-values show how biased (or fraudulent) the researchers are!
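To see why a bigger trial does not rescue you from bias, here is a sketch with made-up numbers. Assume the drug does nothing, but unblinded decisions shift the apparent event rate from 25% to 20%. The rates, the arm sizes and the function name `two_sided_p_value` are all hypothetical, and the test is a plain z test for two proportions.

```python
import math

def two_sided_p_value(risk_control, risk_treated, n_per_arm):
    """Two-sided p value from a simple z test comparing two proportions."""
    standard_error = math.sqrt(
        risk_control * (1 - risk_control) / n_per_arm
        + risk_treated * (1 - risk_treated) / n_per_arm
    )
    z = (risk_control - risk_treated) / standard_error
    # For a standard normal, 2 * (1 - Phi(|z|)) equals erfc(|z| / sqrt(2)).
    return math.erfc(abs(z) / math.sqrt(2))

# The same bias-driven 5-point difference, at three trial sizes.
p_values = {n: two_sided_p_value(0.25, 0.20, n) for n in (100, 1000, 10000)}
for n, p in p_values.items():
    print(f"{n} per arm: p = {p:.2g}")
```

The apparent difference is the same each time, yet the p value shrinks as the trial grows. The p value only rules out chance; it cannot tell the drug from the bias.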

And they've signed it and published it!

Amazing.

Anyway that's another story. bmj.com/content/bmj/34…

answer Yes

answer No

unanswered

However there are two bigger problems with my analogy to bits.

When you count deaths, as well as NUMBER (or proportion) of deaths, you also have DATE of death.

So everyone could be dead in 100 years, but if one arm lived many years longer, that is the better arm.

So my analogy is reasonable.

Although we NEED one bit of computer memory to store the answer (as long as it is Yes/No),

we are not really getting one bit of INFORMATION content.

That is a certain amount of information.

You might say yes. (My analogy was based on this being the case)

It says "Yes" again.

Now that is impressive.

Because that can't be just because the thing is generally saying "No" a lot. It has got it right in two rare cases of Gregg Stone-ness.

They have to know this stuff to train neural networks and things.

"Getting one more correct answer is far more meaningful if it is a rare YES than a common NO".

"If the YESes and NOs are roughly equal in number, each extra case is worth one binary digit of information.

If not, it is worth less than one bit."
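Both quoted statements can be put into numbers with the standard definitions of surprisal and binary entropy. This is a sketch with illustrative proportions (1% and 25% are not from any trial) and hypothetical function names.

```python
import math

def surprisal_bits(probability):
    """Information, in bits, carried by one outcome of given probability."""
    return -math.log2(probability)

def bits_per_answer(p_yes):
    """Average information, in bits, per Yes/No answer (binary entropy)."""
    if p_yes in (0.0, 1.0):
        return 0.0
    p_no = 1 - p_yes
    return p_yes * surprisal_bits(p_yes) + p_no * surprisal_bits(p_no)

# A rare YES (1% of cases) versus a common NO (99% of cases).
print(round(surprisal_bits(0.01), 2), round(surprisal_bits(0.99), 3))

# Equal YESes and NOs give one bit each; lopsided splits give less.
for p_yes in (0.5, 0.25, 0.01):
    print(p_yes, round(bits_per_answer(p_yes), 3))
```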

That should matter to the general public.

But it has NO RELEVANCE to the readers of this thread, who are scientists and doctors. All peer review means is somebody like you and me had a look and said "OK go on then."

Question 1. ClinicalTrials.Gov

First respondent said "yes". I understand why, because the authors said this, and you are a trusting lot.

chictr.org.cn/showprojen.asp…

Date of ethics approval and date of study start. Anyway, maybe that's the date they started lining up their test tubes etc, and not the date open for randomization, like we would write in the West.

Did it?

Here is the killer question.

How many arms did the trial have?

* Said it was on ClinicalTrials.Gov, but wasn't

* Published that it had 3 arms, but only reported on two

* Specifically said to be stratified only by site, but has only one site

* One primary endpoint incomprehensible

* BOTH PRIMARY ENDPOINTS DISAPPEARED

It means the people that wrote this cannot be trusted, EVEN ON THINGS THAT YOU CAN EASILY CHECK AND SEE THAT THEY ARE LYING.

Discuss.

(without mention of Donald Trump saying it's really good stuff)

If you say your name is Dr Pranev Sharma and you have a name badge saying the same, and a stethoscope etc, and then you ask to take my pulse, I will not raise any query.

The paper is in the latter group.

I do not give it the benefit of the doubt.

Because it was intended to have 300 patients.

The paper mentioned randomization "stratified by site".

But it only gave the results of one site, with 62 patients.

It is not the Poisson for zero successes in 500, when the mean is 25, but it is in that league. Well past 0.99.
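For a sense of the league being referred to, here is the Poisson comparison worked out. It is only the reference case named above (mean 25, zero events), not a calculation about the paper itself. The function name `poisson_pmf` is hypothetical.

```python
import math

def poisson_pmf(k, mean):
    """Probability of exactly k events when the expected count is `mean`."""
    return math.exp(-mean) * mean**k / math.factorial(k)

p_zero = poisson_pmf(0, 25)
print(p_zero)      # chance of seeing zero events, roughly 1.4e-11
print(1 - p_zero)  # chance of seeing at least one
```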

We can now discount all future proclamations from them because they

EITHER

Did not notice these crazy self-destruct features in the trial

(i.e. are stupid)

OR

Did not look for them

(i.e. are incompetent)

Can anyone provide links?