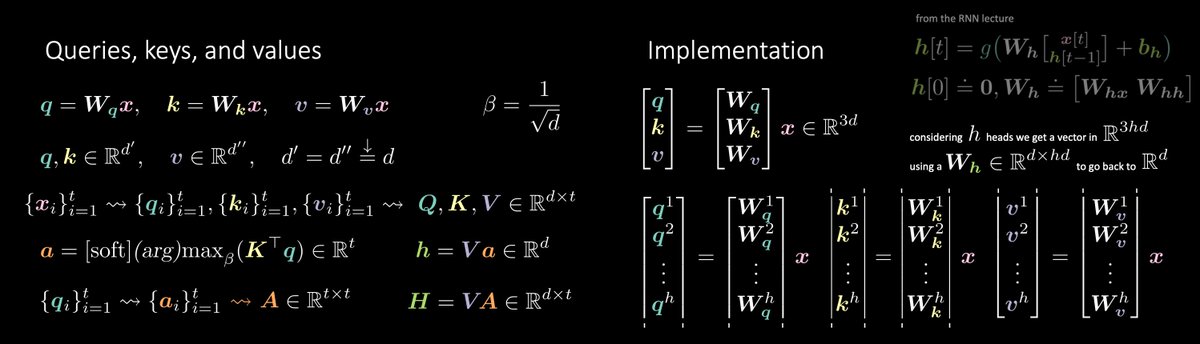

“Set to set” and “set to vector” mappings using self/cross hard/soft attention. We combined one (two) attention module(s) with one (two) k=1 1D convolution(s) to get a transformer encoder (decoder).

Slides: github.com/Atcold/pytorch…

Notebook: github.com/Atcold/pytorch…

We recalled concepts from:

• Linear Algebra (Ax as lin. comb. of A's columns weighted by x's components, or scalar products of A's rows against x)

• Autoencoders (encoder-decoder architecture)

• k=1 1D convolutions (that do not assume correlation between neighbouring features and act as a dim. adapter)

and put them into practice with @PyTorch.
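A quick sketch of two of the recalled ideas, just to make them concrete: the two readings of Ax, and a k=1 1D convolution acting as a dimension adapter. The sizes (`d_in`, `d_out`, `t`) and variable names are made up for illustration and are not taken from the notebook.

```python
import torch
from torch import nn

torch.manual_seed(0)

A = torch.randn(3, 4)
x = torch.randn(4)

# Reading 1: Ax is a linear combination of A's columns, weighted by x's components
by_columns = sum(x[j] * A[:, j] for j in range(A.shape[1]))

# Reading 2: Ax stacks the scalar products of A's rows against x
by_rows = torch.stack([A[i] @ x for i in range(A.shape[0])])

assert torch.allclose(by_columns, A @ x, atol=1e-5)
assert torch.allclose(by_rows, A @ x, atol=1e-5)

# k=1 1D convolution: the same linear map is applied to every element on its own,
# so it only changes the feature dimension (d_in -> d_out). Sizes are illustrative.
d_in, d_out, t = 4, 6, 5
conv = nn.Conv1d(d_in, d_out, kernel_size=1)
X = torch.randn(1, d_in, t)        # (batch, features, set length)
Y = conv(X)                        # (batch, d_out, set length)

# Same result from a plain matrix product with the conv's own weights
W = conv.weight.squeeze(-1)        # (d_out, d_in)
Y_linear = W @ X[0] + conv.bias[:, None]
assert torch.allclose(Y[0], Y_linear, atol=1e-5)
```

Since the kernel never looks at a neighbour, shuffling the t elements of `X` simply shuffles the columns of `Y`.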

There is no order. Therefore, computations can be massively parallelised (they are just a bunch of matrix products, after all).

Just beware of the t × t matrix A, which could blow up if t (your set length) is large.
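Here is a minimal sketch of self-attention as plain matrix products, assuming a rows-are-elements layout, random stand-ins for the learned projections (`W_q`, `W_k`, `W_v`), and the common 1/sqrt(d) scaling. None of these choices come from the slides; they are only there to show the t × t matrix and the "no order" property.

```python
import torch

torch.manual_seed(0)

t, d = 5, 8                        # set length and feature size (illustrative)
X = torch.randn(t, d)              # each row is one element of the set

# Hypothetical projections; in a trained model these would be learned
W_q, W_k, W_v = (torch.randn(d, d) for _ in range(3))
Q, K, V = X @ W_q, X @ W_k, X @ W_v

scores = Q @ K.T / d ** 0.5        # (t, t): every element scored against every other
A_soft = torch.softmax(scores, dim=-1)   # soft attention: each row sums to 1
A_hard = torch.nn.functional.one_hot(scores.argmax(dim=-1), t).float()  # hard: one-hot rows

H_soft = A_soft @ V                # (t, d) set-to-set mapping
H_hard = A_hard @ V

# No order: permuting the input rows only permutes the output rows
perm = torch.randperm(t)
Xp = X[perm]
Hp = torch.softmax((Xp @ W_q) @ (Xp @ W_k).T / d ** 0.5, dim=-1) @ (Xp @ W_v)
assert torch.allclose(Hp, H_soft[perm], atol=1e-4)


def attention_matrix_bytes(t, bytes_per_entry=4):
    """Memory taken by the t x t matrix A alone, float32 by default."""
    return t * t * bytes_per_entry
```

With float32 entries, `attention_matrix_bytes(1_000)` is 4 MB, while `attention_matrix_bytes(100_000)` is already 40 GB, and that is for a single attention matrix.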

My granny, knowing all recipes names (keys) and preparations (values) = encoder.

Me, figuring out what I want = self-attention.

Me, asking granny = cross-attention.

Dinner = yay!
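The granny analogy, sketched in code under loud assumptions: the tensor names and sizes are invented, and one set of random projections is shared between self- and cross-attention purely for brevity (a real transformer would learn separate ones for each module).

```python
import torch

torch.manual_seed(0)

d = 8
t_recipes, t_cravings = 6, 2           # hypothetical sizes

granny = torch.randn(t_recipes, d)     # encoder output: source of keys and values
me = torch.randn(t_cravings, d)        # decoder states: source of queries

# Hypothetical shared projections, random stand-ins for learned weights
W_q, W_k, W_v = (torch.randn(d, d) / d ** 0.5 for _ in range(3))


def attention(queries_from, keys_values_from):
    """Soft attention: queries come from one set, keys and values from another."""
    Q = queries_from @ W_q
    K = keys_values_from @ W_k
    V = keys_values_from @ W_v
    A = torch.softmax(Q @ K.T / d ** 0.5, dim=-1)
    return A @ V, A


what_i_want, A_self = attention(me, me)             # self-attention: me asking myself
dinner, A_cross = attention(what_i_want, granny)    # cross-attention: me asking granny
```

Note that `A_cross` is `t_cravings × t_recipes`, not square: each of my queries spreads its weight over all of granny's recipes.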

Next week: Graph Neural Nets (if it takes me less than a week to learn about them).