Loads of cool new stuff being built by the @msbotframework Team.

(mega-thread)

- Bot Framework Composer is now GA

- Bot Framework SDK v4.9

- Azure Bot Service

- Virtual Assistant 1.0 now generally available

- Speech, Language Understanding & QnA Maker

This lets you auto-generate Bot Framework Composer assets from JSON schema / definition files!

Updates to Bot Framework Composer and Adaptive look awesome. Great stuff!

Lets you automatically create robust Bot Framework Composer assets from JSON or JSON Schema that implement best practices like out-of-order slot filling, ambiguity resolution, help, cancel, correction and list manipulation.
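To make "out-of-order slot filling" concrete, here is a minimal sketch in plain Python. It is not the Composer generator or its output; the schema, the `missing_slots` and `fill_slot` helpers, and the sandwich example are all hypothetical and only illustrate the idea that a JSON Schema can drive which values a bot still needs, whatever order the user supplies them in.

```python
from typing import Any, Dict, List, Optional

# Hypothetical JSON Schema describing the "form" the bot has to fill.
SANDWICH_SCHEMA: Dict[str, Any] = {
    "type": "object",
    "properties": {
        "bread": {"type": "string", "enum": ["white", "rye", "wholewheat"]},
        "filling": {"type": "string", "enum": ["ham", "cheese", "tuna"]},
        "quantity": {"type": "integer"},
    },
    "required": ["bread", "filling", "quantity"],
}


def missing_slots(schema: Dict[str, Any], filled: Dict[str, Any]) -> List[str]:
    """Return the required properties the user has not supplied yet."""
    return [name for name in schema["required"] if name not in filled]


def fill_slot(
    schema: Dict[str, Any], filled: Dict[str, Any], name: str, value: Any
) -> Optional[str]:
    """Store a value for any slot, in any order.

    Returns None on success, or an error message the bot could use
    to re-prompt the user.
    """
    spec = schema["properties"].get(name)
    if spec is None:
        return f"Unknown property: {name}"
    if "enum" in spec and value not in spec["enum"]:
        return f"{name} must be one of {spec['enum']}"
    if spec.get("type") == "integer" and (
        isinstance(value, bool) or not isinstance(value, int)
    ):
        return f"{name} must be a whole number"
    filled[name] = value
    return None


if __name__ == "__main__":
    order: Dict[str, Any] = {}
    # The user answers "out of order": quantity first, then filling.
    fill_slot(SANDWICH_SCHEMA, order, "quantity", 2)
    fill_slot(SANDWICH_SCHEMA, order, "filling", "tuna")
    print(missing_slots(SANDWICH_SCHEMA, order))
```

The bot would keep prompting for whatever `missing_slots` returns, so the conversation never depends on a fixed question order.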

The Direct Line App Service Extension is now GA.

This lets customers have greater control over how data is stored & transmitted within their bot using Direct Line or Webchat.

Speech - more language coverage!

Language - improved labelling!

QnA - now includes RBAC and improved editing!

Now in private preview, this provides a transformer model based orchestration capability optimized for chatbots.

It helps deliver improved accuracy of Skill-based routing, which can be critical for sophisticated conversational experiences.

mybuild.microsoft.com/sessions?q=INT…

mybuild.microsoft.com/sessions?q=INT…

Find out more on @GaryPretty's blog here:

techcommunity.microsoft.com/t5/azure-ai/bu…

during #MSBuild

Questions can be submitted here:

msft.it/6010TiH6y

During @shanselman's keynote, we saw a chatbot that could receive a message in the form of C# syntax, parse and execute it, and send the response back to the human.

I will share these here to help people get a quick-start on some of the key topics.

#MSBuild

jamiemaguire.net/index.php/2020…

jamiemaguire.net/index.php/2020…

jamiemaguire.net/index.php/2020…

jamiemaguire.net/index.php/2020…

I've been using it for a while.

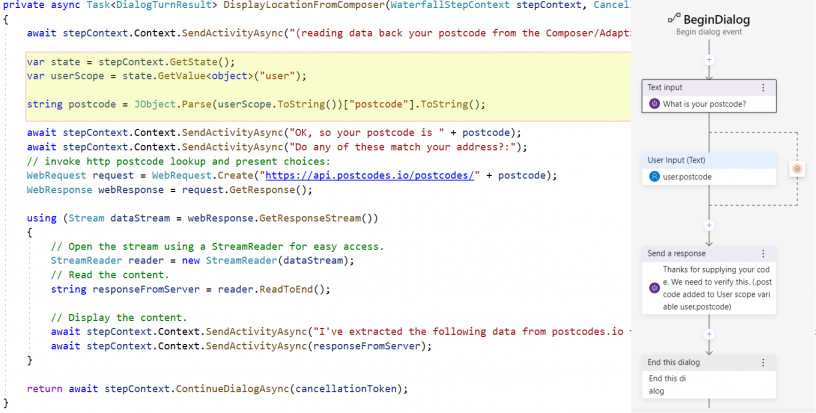

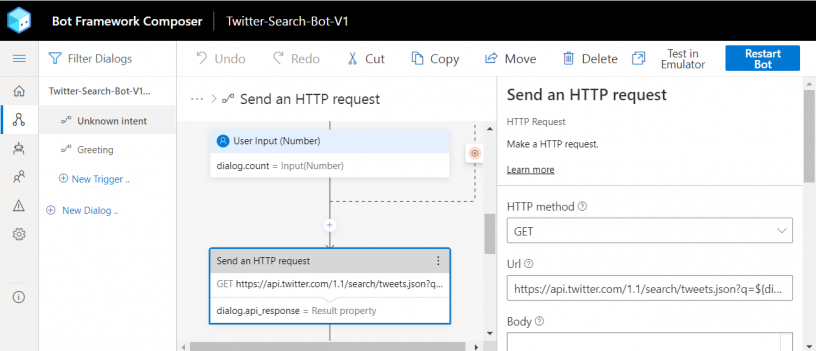

A good pattern is to encapsulate business rules in an API of your choice then consume this in Composer.

Use data from the API to steer the conversational logic of your chatbot, invoke dialogs or serve different user experiences.

Let your back-end team build the endpoints, and let the business design conversational experiences using data provided by the back-end APIs.

This gives you real power and control over the build and maintenance of your chatbots. I have some projects on @github and several blog posts that show you how to do this.
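A rough sketch of that pattern, in plain Python rather than Composer itself. Everything here is an assumption for illustration: `business_rules_api` stands in for a real back-end endpoint, and the field names (`tier`, `openTickets`) and dialog names are made up. In Composer, the equivalent would be an HTTP request step followed by branching on the response.

```python
import json
from typing import Any, Dict

# Hypothetical data the back-end team owns; the bot never sees these rules.
_CUSTOMERS: Dict[str, Dict[str, Any]] = {
    "vip-001": {"tier": "premium", "openTickets": 0},
    "cust-042": {"tier": "standard", "openTickets": 2},
}


def business_rules_api(customer_id: str) -> str:
    """Stand-in for a back-end endpoint; returns a JSON response body."""
    record = _CUSTOMERS.get(customer_id, {"tier": "standard", "openTickets": 0})
    return json.dumps({"customerId": customer_id, **record})


def choose_dialog(response_body: str) -> str:
    """Steer the conversation using only the data the API returned."""
    data = json.loads(response_body)
    if data["openTickets"] > 0:
        # Outstanding issues take priority over any greeting.
        return "TicketStatusDialog"
    if data["tier"] == "premium":
        return "PremiumWelcomeDialog"
    return "StandardWelcomeDialog"


if __name__ == "__main__":
    for customer in ("vip-001", "cust-042", "new-user"):
        print(customer, "->", choose_dialog(business_rules_api(customer)))
```

The point of the split is that the rules behind `business_rules_api` can change without touching the conversation design, and the dialog branching can change without redeploying the back end.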

- sentiment analysis

- key phrase extraction

- language detection

- named entity recognition

- 5 new categories

- 10 subcategories including Product, Event, Skill, Address

Deploy Cognitive Services anywhere from the cloud to the edge with containers. Language Understanding and Text Analytics sentiment analysis in containers are now generally available.

Learn more about creating custom machine learning models without having prior experience & without leaving the #dotNET ecosystem: msft.it/6010TiPnn

- Composer

- Orchestrator

- SSO

- QnA

Bots book:

aka.ms/GetOreillyVABo…

Blog: aka.ms/Build20-Conver…

Get started with Composer: aka.ms/composer

VA and Skills aka.ms/bfskillsbuildp…

QnA Maker:

Nice enhancements to the QnA Maker editing experience. A new Rich Text Editor has now shipped.

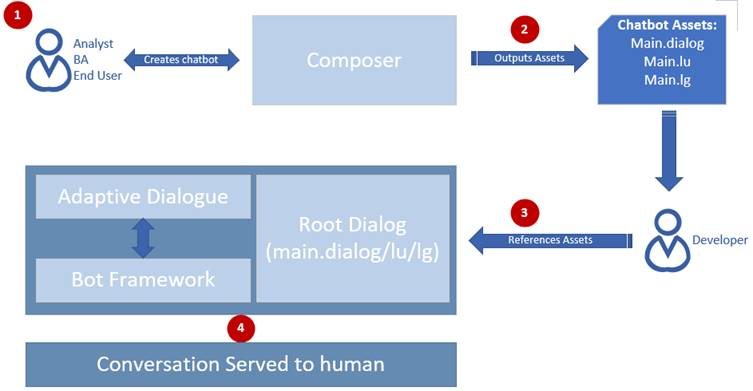

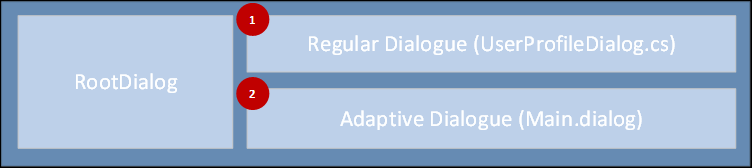

Actions are the main component of a Trigger.

They help to maintain the conversation flow & instruct bots to fulfill users' requests.

Composer provides different types of actions like Send Activities or messages.

You can now create your own CUSTOM ACTIONS.

This makes it possible for you to drop your own code widgets into the Composer designer canvas.

You can learn more about this here:

docs.microsoft.com/en-us/composer…

bisser.io/conversational…

Customers and partners have an increasing need to deliver advanced conversational assistant experiences tailored to their brand, personalized to their users, and made available across a broad range of canvases and devices.

The Virtual Assistant Solution Accelerator answers this need and, with v1.0 released at Build 2020, is now generally available!

The solution accelerator is open source in GitHub and provides you with a set of core foundational capabilities & full customization over the end user experience - including the name, voice, & personality of your VA – whilst not sacrificing privacy & data.

For common assistant requirements, such as introduction, or on-boarding & handling situations where there is a need to hand off the conversation to a human.

FAQ and Personality - allowing the bot to answer user questions, from FAQs made available from a QnA Maker knowledgebase, including taking advantage of the new multiturn feature, in addition to making use of the Chit Chat personalities

Interacting via natural language can be complex, but the VA handles common scenarios with ease, such as a user switching context or interrupting their conversation, for example to move to a different skill.

Speech-first experiences can be enabled without any custom-code, responding to the evolving change in user behavior towards multi-modal experiences on a broad range of platforms and devices.

A telemetry pipeline for Virtual Assistant, leveraging both PowerBI and Azure Application Insights. This enables you to quickly and easily understand how your assistant is being used by users and gain actionable insights

Several Skills, covering common assistant scenarios, are available to plug-in to your assistant immediately – rapidly increasing the capability of your solution without the need to expend custom development effort.

However, as with the core, Skills are fully customisable and make use of the same assets (dialogs, LU and LG files), allowing you to easily tailor them to suit your specific requirements.

Several Skills, covering common assistant scenarios, are available to plug-in to your assistant immediately:

- Calendar

- Point of Interest

- Hospitality

- IT Management

- Music

More information can be found here: techcommunity.microsoft.com/t5/azure-ai/bu…

Thank you to everyone for making this virtual event happen.

The content, engagement & community spirit have been great.