I'm still thinking through this, but my reasoning for this is:

This has never been with "design interview" reasoning; it's always been domain knowledge.

But it was still after much more time than you get in a design interview.

In one case, the solution resolved something that had been an open problem in IR for decades. That's not interview material.

1. Buy a solution from N that's known to scale up to 100x A

3. Read the relevant bits on LWN and LKML, understand how the open source implementation of M by company P works

4. Run experiments, profile, read code, etc.

The interviewers very patiently explained to me that solutions 1-4 were invalid and kindly didn't fail me for those (I think most would've), but what's the point of this?

Of course I can't prove a causal link from design interviews, but it seems plausible that design interviews train people to design real systems without understanding the problem domain.

Likely same here.

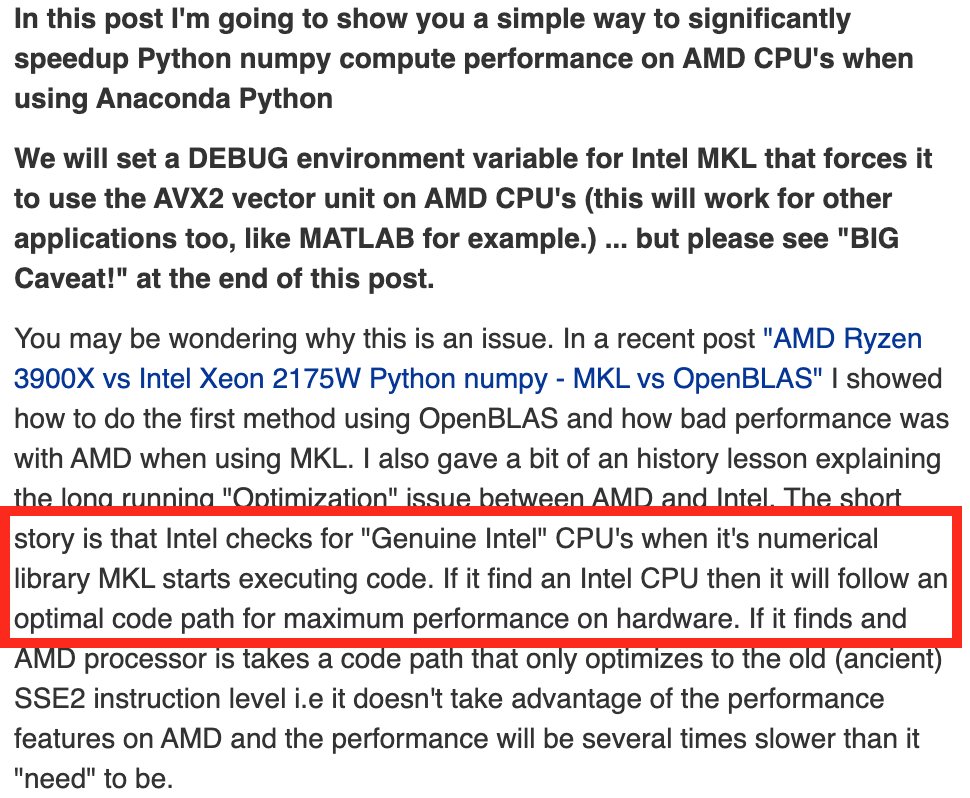

To answer a Fermi estimation question, you just need to know how to play the Fermi estimation game. Make up numbers, multiply them together, and then you pass.
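As a rough sketch of the game described above, the whole procedure fits in a few lines of Python. The helper name `fermi_estimate` and every factor in `guesses` are hypothetical and invented purely for illustration; none of the numbers are measured data.

```python
from math import prod


def fermi_estimate(factors):
    """Multiply a chain of guessed factors into a single estimate."""
    return prod(factors.values())


# Every value here is a made-up guess, not real data.
guesses = {
    "residents": 1_500_000,
    "cars_per_resident": 0.2,
    "fillups_per_car_per_week": 1,
    "stations_per_weekly_fillup": 1 / 2_000,
}

estimate = fermi_estimate(guesses)
print(f"Estimated count: {estimate:.0f}")
```

Nothing in the code checks whether any guess is right, so the output is only as good as the made-up inputs.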

Basically ditto for design interviews.

"How did you get that answer?" he asked. I looked confused.

"I counted."

Presumably he hadn't been expecting to interview someone who grew up in Manhattan, went to school in the Bronx, and spent a lot of time driving around New York with her dad.

I didn't get the job. Apparently, they were looking for people who used some process other than arithmetic to figure out how many gas stations there were in Manhattan.