I guess this will be a ramble:

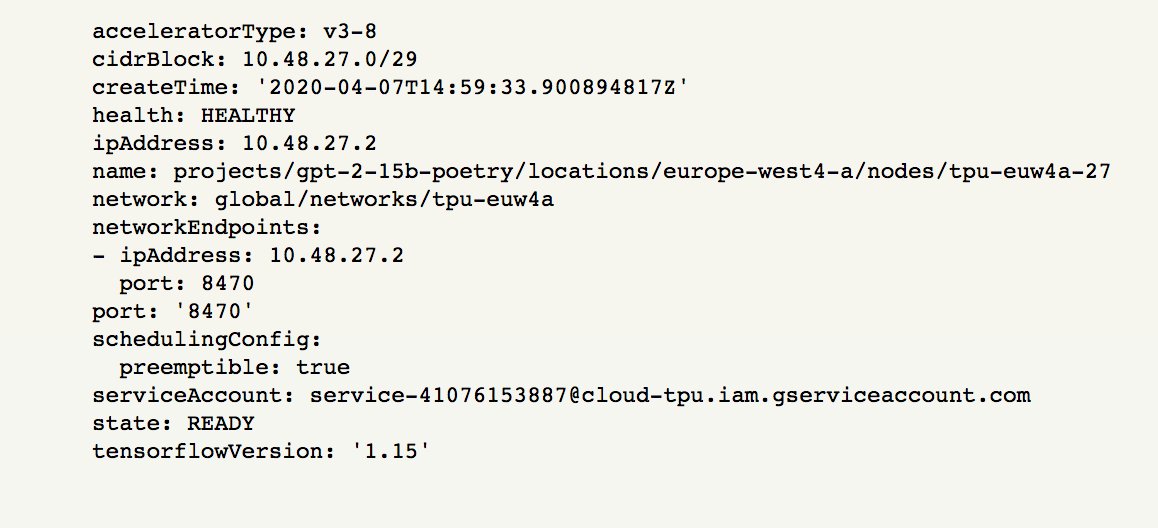

A TPU consists of 8 cores, plus a CPU. (Yes, the TPU has a CPU -- weird concept, but think of it like a big computer with 8 GPUs. Obviously, a computer with GPUs has a CPU.)

But that's a positive: it means you get some nice flexibility with the TPU's CPU.

When you're training on a GPU, the data is being streamed from your hard drive to the GPUs. Slow hard drive = slow training.
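To put rough numbers on that, here's a back-of-the-envelope sketch. Everything in it is an assumption for illustration: the function name, the throughput figures, and the simple model where training speed is just the slower of "how fast storage can feed examples" and "how fast the accelerator can consume them."

```python
def examples_per_second(storage_mb_per_s, example_kb, compute_examples_per_s):
    """Rough model: training runs at the pace of the slower of storage and compute."""
    # How many examples per second the storage can deliver (1 MB = 1000 KB here).
    storage_examples_per_s = storage_mb_per_s * 1000 / example_kb
    return min(storage_examples_per_s, compute_examples_per_s)


# Hypothetical numbers, not measurements: 100 KB examples, and an accelerator
# that could chew through 5000 examples/sec if it were fed fast enough.
slow_disk = examples_per_second(100, 100, 5000)      # 100 MB/s storage
fast_storage = examples_per_second(2000, 100, 5000)  # 2000 MB/s storage

print(slow_disk, fast_storage)
```

With the slow disk in this toy model, the accelerator sits idle most of the time waiting for data; with the fast storage, compute becomes the limit instead.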

Cloud buckets are fast, and local to TPUs.

The answer is, yes, TPUs can save the data. Remember how I said that TPUs can save training logs for TensorBoard? Those logs are literally just data.

Laughed for like 5min.