Today, I want to talk about the "debate" related to health policies, economic growth and the 1918 Spanish flu.

Everything I have to say is here (with code): pedrohcgs.github.io/posts/Spanish_…

Let's get to it!

1/n

2/n

Although today's society has a different structure from that of 100 years ago, these findings can help shape the current debate about COVID-19 policies.

3/n

4/n

As an econometrician, however, I got super curious about the methods used behind the scenes in the debate. This is where I jump in.

5/n

6/n

We also do not really understand what assumptions are being made here.

7/n

At least here, we know more about what is going on.

8/n

Sant'Anna and Zhao (2020): arxiv.org/abs/1812.01723

Callaway and Sant'Anna (2020, new version coming soon): papers.ssrn.com/sol3/papers.cf…

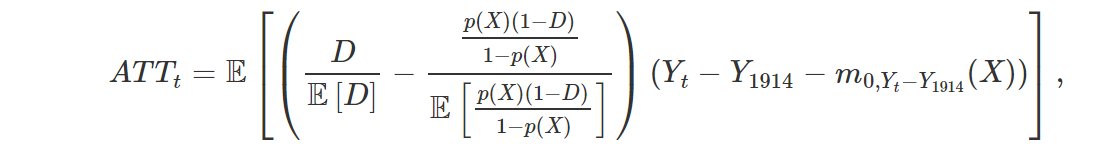

The estimand is not hard to understand: it is a simple combination of outcome regression and propensity scores!
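To make "regression plus propensity scores" concrete, here is a minimal sketch of the doubly robust DiD idea for the simplest case only: two periods, panel data, a logistic propensity score, and a linear regression of the outcome change on covariates among untreated units. The function names `fit_logit` and `dr_did_panel` are hypothetical, and this is an illustration written for this thread, not the code from the post or from any package.

```python
import numpy as np


def fit_logit(X, d, iters=25):
    """Logistic regression by Newton-Raphson; X must already include an intercept column."""
    beta = np.zeros(X.shape[1])
    for _ in range(iters):
        p = 1.0 / (1.0 + np.exp(-X @ beta))
        grad = X.T @ (d - p)                            # score
        hess = X.T @ (X * (p * (1.0 - p))[:, None])     # information matrix
        beta += np.linalg.solve(hess, grad)
    return 1.0 / (1.0 + np.exp(-X @ beta))              # fitted propensity scores


def dr_did_panel(y_pre, y_post, d, X):
    """Doubly robust DiD estimate of the ATT with two periods of panel data (sketch)."""
    n = len(d)
    Xc = np.column_stack([np.ones(n), X])   # covariates plus intercept
    dy = y_post - y_pre                     # change in the outcome
    ps = fit_logit(Xc, d)                   # propensity score model
    # Outcome regression: linear model for the change, fit on untreated units only
    ctrl = d == 0
    coef, *_ = np.linalg.lstsq(Xc[ctrl], dy[ctrl], rcond=None)
    mu0 = Xc @ coef                         # predicted untreated change for everyone
    # Normalized weights: treated units vs. propensity-reweighted untreated units
    w1 = d / d.mean()
    w0_raw = ps * (1 - d) / (1 - ps)
    w0 = w0_raw / w0_raw.mean()
    return np.mean((w1 - w0) * (dy - mu0))


if __name__ == "__main__":
    # Simulated data (purely hypothetical) with a true ATT of 2
    rng = np.random.default_rng(0)
    n = 20_000
    x = rng.normal(size=n)
    d = (rng.uniform(size=n) < 1 / (1 + np.exp(-0.5 * x))).astype(float)
    y_pre = 1 + x + rng.normal(size=n)
    y_post = y_pre + 0.5 + 0.8 * x + 2.0 * d + rng.normal(size=n)
    print(dr_did_panel(y_pre, y_post, d, x))
```

The regression part predicts how untreated units' outcomes would have changed given their covariates, and the propensity score part reweights untreated units to look like treated ones. Combining both is what makes the estimator doubly robust: it remains consistent if either the propensity score model or the outcome regression is correctly specified.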

10/n

12/n

13/n

Six cities were therefore dropped: Albany, Denver, Indianapolis, Nashville, New Orleans and Rochester.

14/n

Although the results do not find any evidence of pre-trends here, this may be because the test has little power. At the end of the day, we have only 37 observations!
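To give a feel for why 37 observations leave little power, here is a rough sketch using a generic two-sided, two-sample z-test with a common known variance. This is a swapped-in textbook test, not the pre-trend test from the SZ-CS procedure, and the group sizes and the effect size of 0.5 standard deviations are hypothetical choices for illustration only.

```python
from statistics import NormalDist


def two_sample_power(effect_size: float, n1: int, n2: int, alpha: float = 0.05) -> float:
    """Approximate power of a two-sided two-sample z-test.

    effect_size is the true difference in group means measured in standard
    deviations; both groups are assumed to share a common, known variance.
    """
    std = NormalDist()
    z_crit = std.inv_cdf(1 - alpha / 2)     # critical value, e.g. about 1.96 for alpha = 0.05
    se = (1 / n1 + 1 / n2) ** 0.5           # standard error of the difference in SD units
    shift = effect_size / se                # standardized true difference
    # Probability of rejecting in either tail when the true difference is effect_size
    return std.cdf(shift - z_crit) + std.cdf(-shift - z_crit)


if __name__ == "__main__":
    # Hypothetical split of 37 units into two groups vs. a sample ten times larger
    for n1, n2 in [(18, 19), (180, 190)]:
        print(n1 + n2, round(two_sample_power(0.5, n1, n2), 2))
```

Under these assumptions, a moderate true difference is missed most of the time with 37 units, while the same difference is detected almost surely with ten times as many, so "no evidence of pre-trends" is weak reassurance in a small sample.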

16/n

1) Zero pre-trends are neither necessary nor sufficient for identification if we use the SZ-CS procedure.

2) The "post" pre-treatment periods are 1900, 1910, 1914 and 1917. This can be odd.

17/n

18/n

19/n

But again, this is a matter of *subjective* judgment, and reasonable people can disagree here.

20/n

Hope you enjoy reading this as much as I enjoyed writing it.

Again, the entire discussion can be found here: pedrohcgs.github.io/posts/Spanish_…

Take care!

21/21

Tagging Andrew Lilley of LLR (couldn't find the others): @alil9145