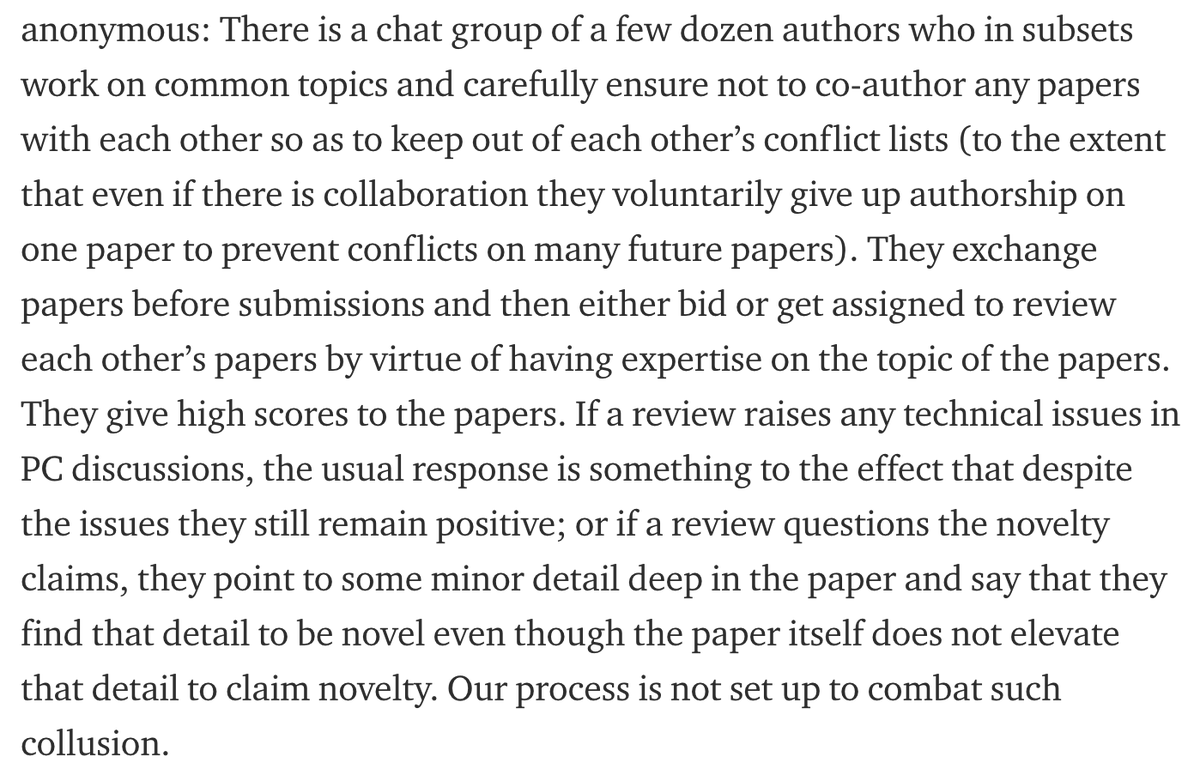

Starting with: "Our process is not set up to combat such collusion" and then meandering to academic integrity, ethics, open science, volunteer labor, and whatever else comes up. medium.com/@tnvijayk/pote…

- aren't colluding with each other to boost their reviews

- aren't asking authors to cite their papers solely to boost their own citations

- aren't tanking papers for self-interested reasons (e.g., not wanting to be scooped)

(1) incentives for good reviewing

(2) consequences for bad reviewing

(3) training/scaffolding for reviewing

Harder wish list:

(1) more open processes that don't rely on small "in groups" of reviewers

(2) more transparency for reviews

(3) everyone having integrity ❤️