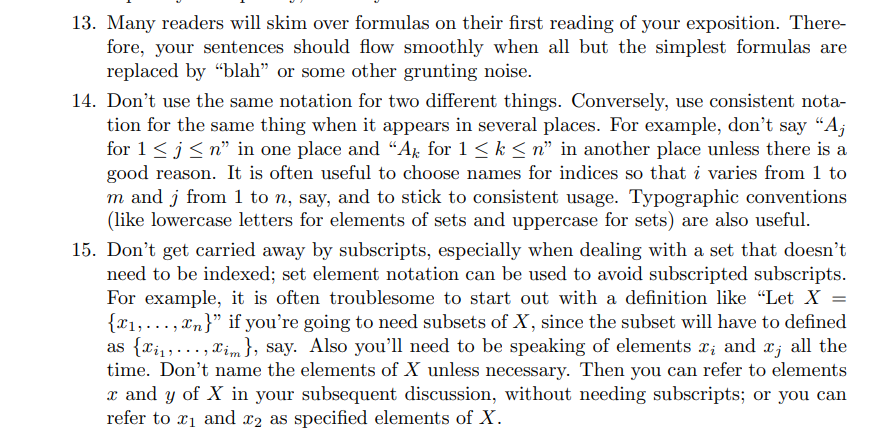

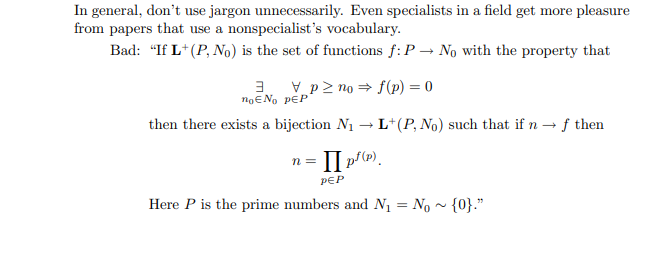

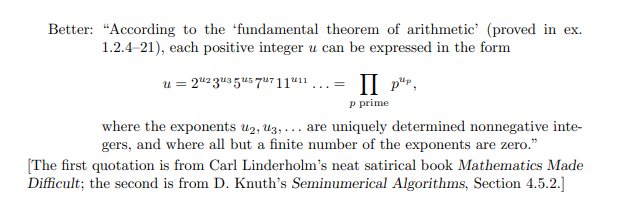

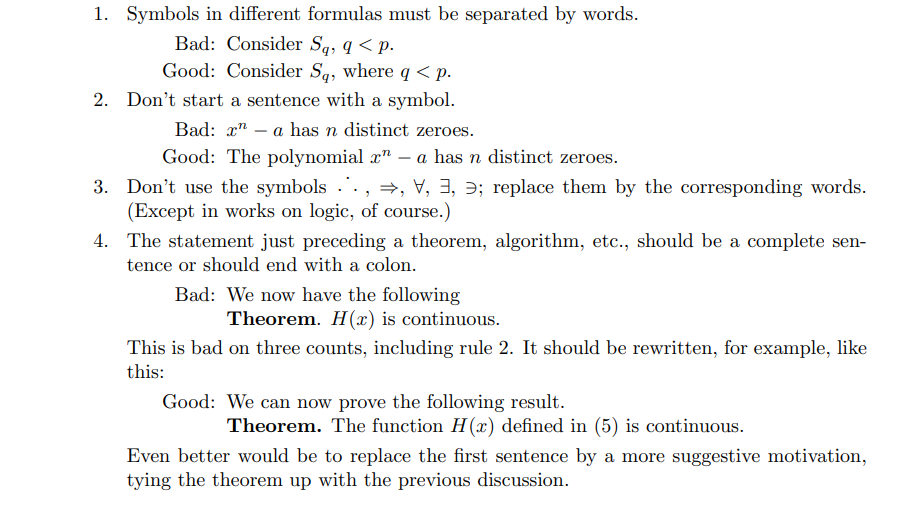

Just sent Knuth's advice on writing mathematical stuff to a student list and felt moved to retweet it more broadly.

Some favorite bits in the screenshots. 1/3

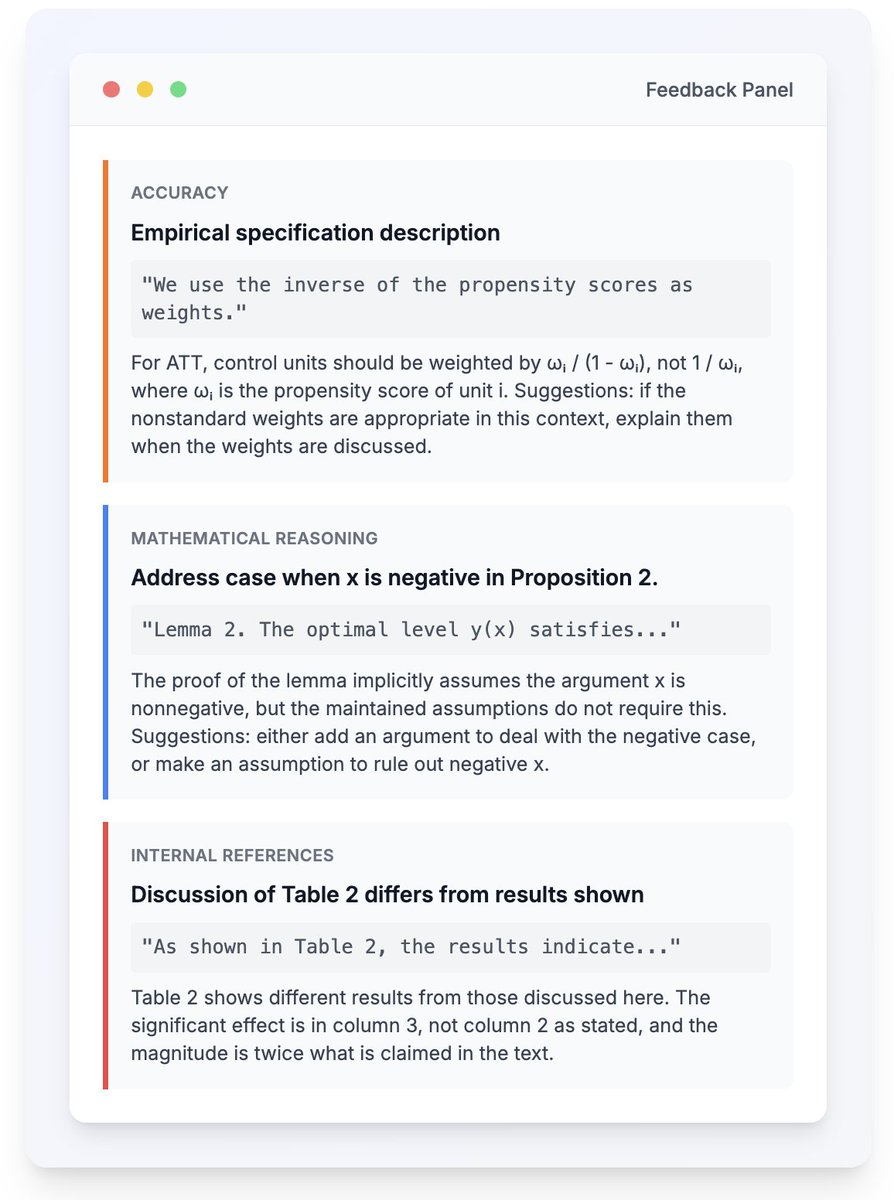

jmlr.csail.mit.edu/reviewing-pape…

Since this has been such a big part of my everyday thinking for a while, I feel a bit sheepish highlighting it. But when I read other people's writing, I'm often reminded that some of the best advice is not universally followed.
