econ prof @NorthwesternU, co-founder at https://t.co/RSvoCbDuGc

studying networks

past: @Harvard | @Stanford'12 | @Caltech '07

We'll give you free reviews, useful to stress-test a paper before submission or circulation.

The two big quality dimensions are comfort and safety. The article obsesses over comfort while ignoring safety entirely.

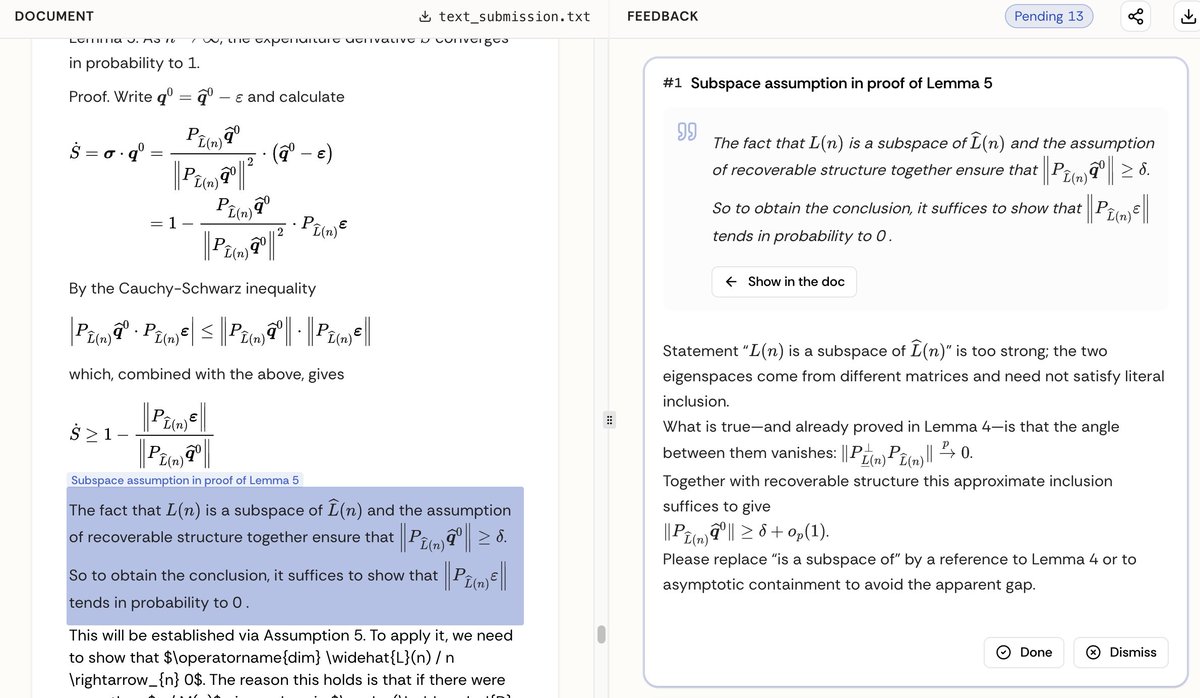

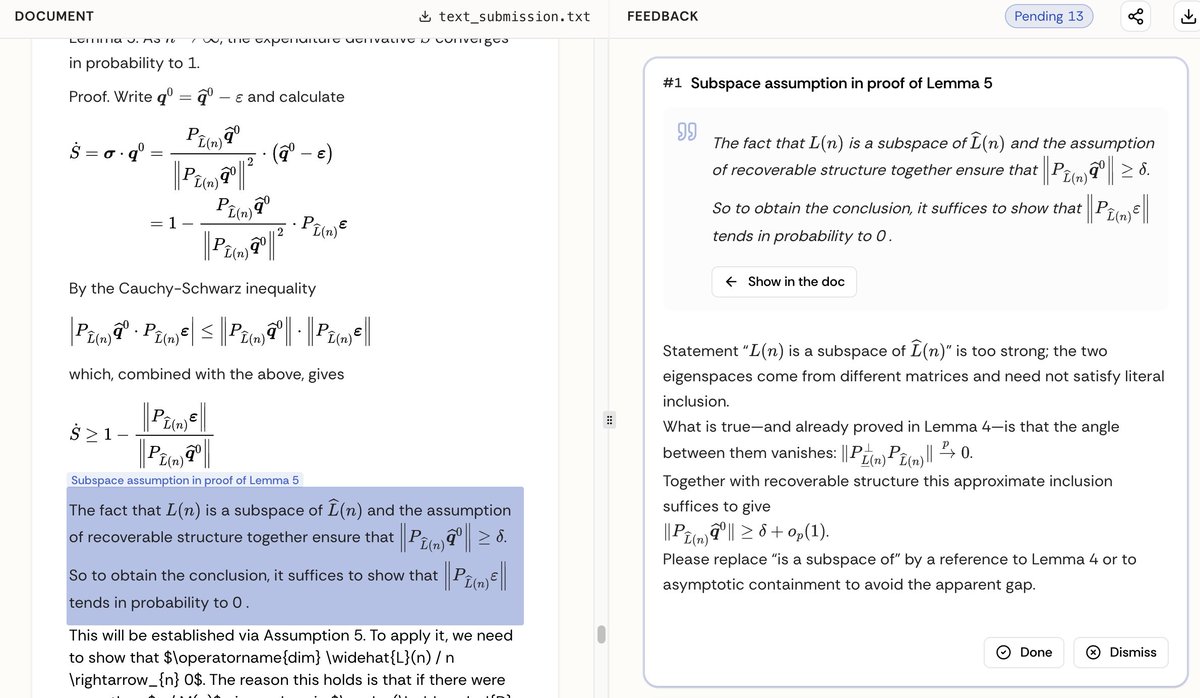

We’ve been beta testing it with frontier research in areas from social science to applied mathematics to computational biology to philosophy.

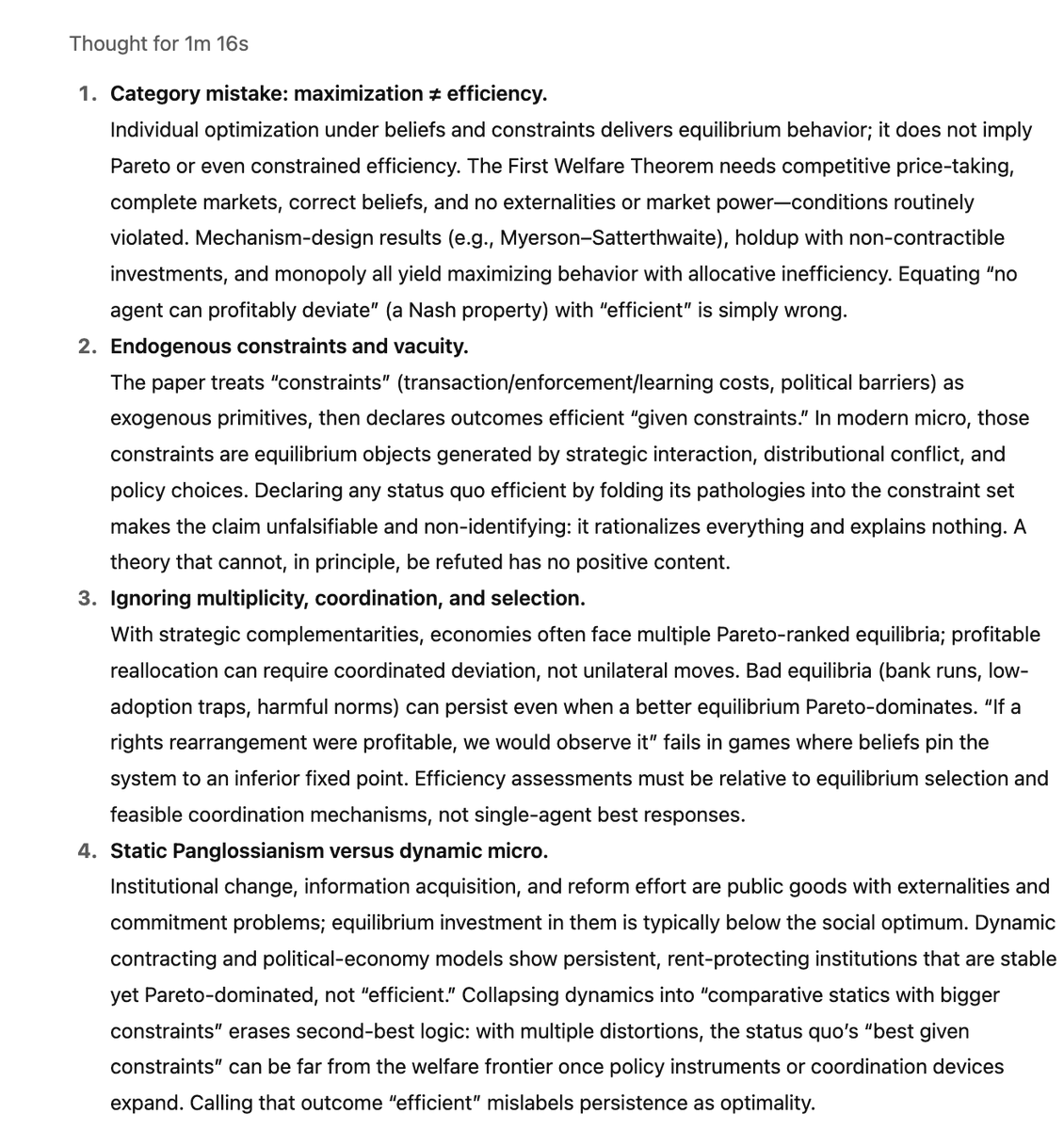

https://twitter.com/kingofthecoastt/status/1977219181461553435

The first welfare theorem (individual optimization implies social efficiency) breaks down in the presence of frictions:

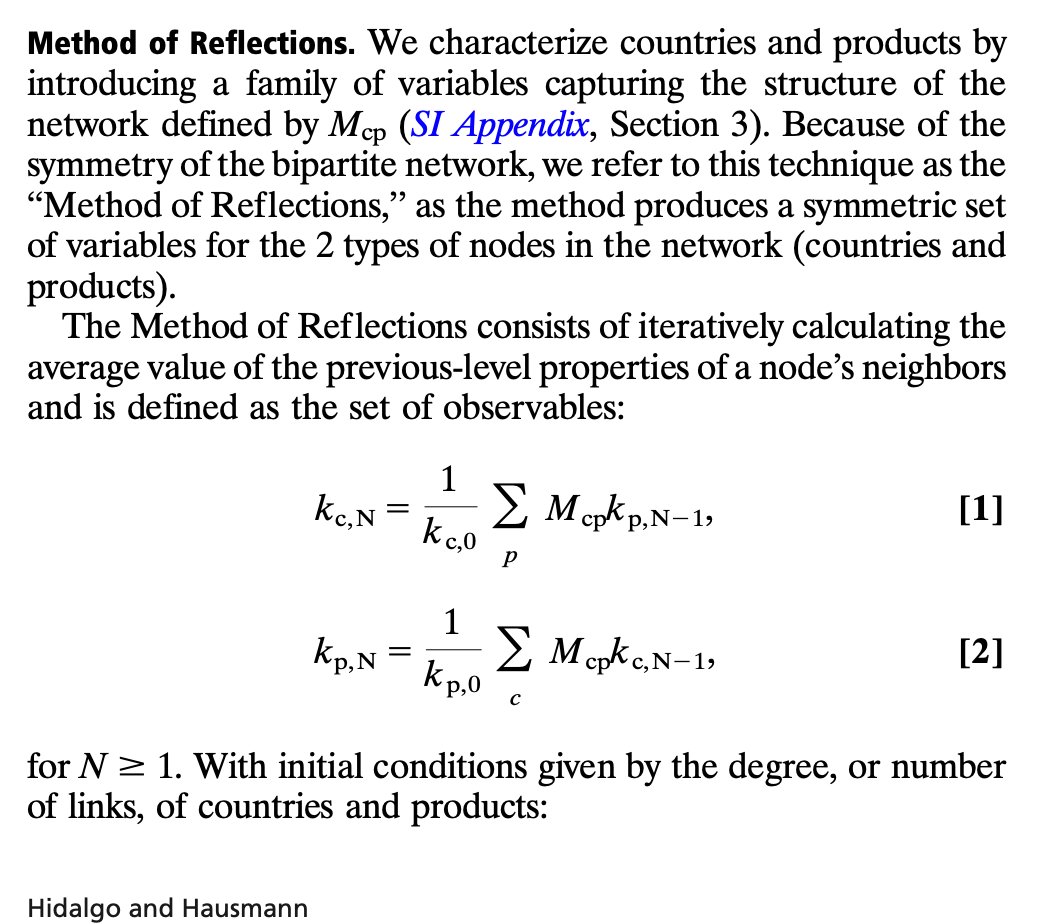

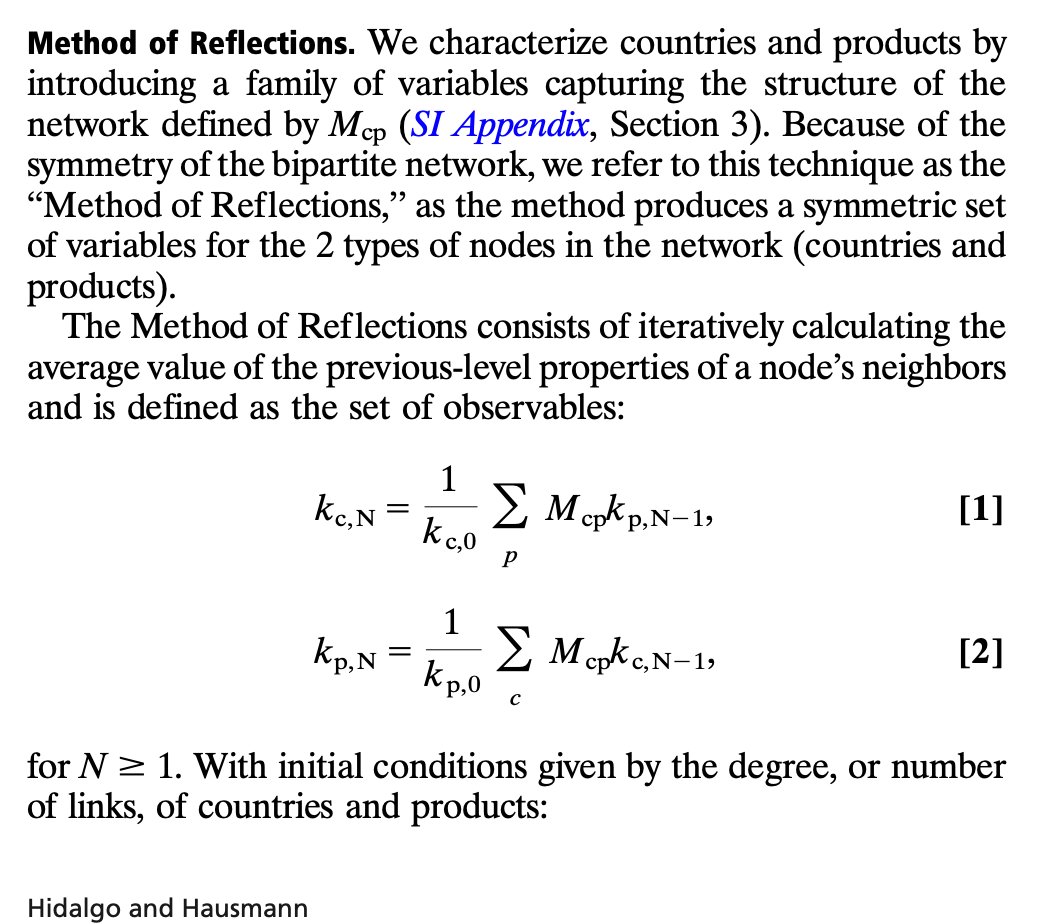

https://twitter.com/cesifoti/status/1973356748825092345

The economic complexity index that Hidalgo and Hausmann propose in "The building blocks of economic complexity" is a close variant of Kleinberg's famous 1999 HITS algorithm.
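The kinship between the ECI and HITS can be illustrated with a minimal NumPy sketch. It assumes a binary country-by-product export matrix `M`.

- **HITS.** The alternating updates `a = Mᵀh` and `h = Ma` converge to the top eigenvectors of `M Mᵀ` (hub scores) and `Mᵀ M` (authority scores).
- **ECI.** As usually stated via the "method of reflections," the ECI is the standardized eigenvector for the *second*-largest eigenvalue of a degree-normalized analogue, `D⁻¹ M U⁻¹ Mᵀ`. Here `D` holds country diversities (row sums) and `U` holds product ubiquities (column sums).

The function names and the toy matrix are hypothetical illustrations, not code from either paper.

```python
import numpy as np


def hits_scores(M: np.ndarray, iters: int = 200) -> tuple[np.ndarray, np.ndarray]:
    """HITS-style power iteration on a bipartite matrix M.

    Rows act as hubs (countries), columns as authorities (products).
    The alternating updates converge to the top eigenvectors of
    M M^T (hubs) and M^T M (authorities).
    """
    h = np.ones(M.shape[0])
    for _ in range(iters):
        a = M.T @ h
        a /= np.linalg.norm(a)
        h = M @ a
        h /= np.linalg.norm(h)
    return h, a


def reflections_matrix(M: np.ndarray) -> np.ndarray:
    """Country-by-country matrix D^{-1} M U^{-1} M^T.

    D = diag(row sums) = diversity, U = diag(column sums) = ubiquity.
    Entry [c, c'] = sum_p M[c, p] * M[c', p] / (k_c * k_p); each row sums to 1.
    """
    kc = M.sum(axis=1)  # diversity of each country
    kp = M.sum(axis=0)  # ubiquity of each product
    return (M / kc[:, None]) @ (M / kp[None, :]).T


def eci_scores(M: np.ndarray) -> np.ndarray:
    """Standardized eigenvector for the second-largest eigenvalue.

    The largest eigenvalue is 1, with a constant eigenvector that carries
    no ranking information, which is why the second one is used.
    """
    vals, vecs = np.linalg.eig(reflections_matrix(M))
    order = np.argsort(-vals.real)
    v = vecs[:, order[1]].real
    v = (v - v.mean()) / v.std()
    # Eigenvector sign is arbitrary; orient it to correlate positively with diversity.
    if np.corrcoef(v, M.sum(axis=1))[0, 1] < 0:
        v = -v
    return v


if __name__ == "__main__":
    # Hypothetical 4-country x 4-product export matrix (1 = country exports product).
    M = np.array([
        [1, 1, 1, 1],
        [1, 1, 1, 0],
        [1, 1, 0, 0],
        [1, 0, 0, 0],
    ], dtype=float)
    hubs, auths = hits_scores(M)
    print("HITS hub scores:", np.round(hubs, 3))
    print("ECI:", np.round(eci_scores(M), 3))
```

The two procedures apply the same "countries are scored by their products, products by their countries" recursion to the same bipartite structure. They differ in two ways. First, the ECI divides by diversity and ubiquity at each step. Second, because that normalization makes the leading eigenvector constant, the ECI reads off the second eigenvector.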

https://twitter.com/IsabellaMWeber/status/1971292262874370221

To take an example from the graduate curriculum, we spend a lot of time dealing with fixed points in large models (dynamic, stochastic, etc.).

https://twitter.com/IgorNaverniouk/status/1920135460715659275

I don't care at all about homework being done with AI, since most of the grade is exams, so this takes out the "cheating" concern.

https://twitter.com/ben_golub/status/1908149372832960560

A survey of what standard models of production and trade are missing, and how network theory can illuminate fragilities like the ones unfolding right now, where market expectations seem to fall off a cliff.

When this choreography unfolds, each firm in the network has contracts with specific partners.

https://twitter.com/alz_zyd_/status/1874863049037033784

smart professor translate the imposing and borderline unintelligible reading into contemporary, easy-to-follow English.

The paper has had a long childhood — I remember first seeing it in 2017 and finding the core adverse selection mechanism remarkable and compelling.

It's worth thinking through the "answer" he expects, which you can guess based only on knowing his personality (never great):

https://twitter.com/besttrousers/status/1727727603803369878

The top few singular vectors in issue space will tell you about "bundles" of issues along which there are considerable distances in the group.
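The singular-vector point can be made concrete with a minimal sketch. It assumes each group member's positions are stored as one row of a hypothetical members-by-issues matrix `X`.

After centering the columns, the top right singular vectors are weightings of issues ("bundles") along which members are most spread out. The squared singular values split the total squared deviation from the group's mean position. That total equals (1/n) times the sum of squared pairwise distances between the n members, which is why the singular values speak to "distances in the group."

The function name `issue_bundles` and the toy data are illustrative assumptions.

```python
import numpy as np


def issue_bundles(X: np.ndarray, k: int = 2):
    """Top-k singular directions of a members-by-issues position matrix X.

    Columns are centered first, so sum(S**2) equals the total squared
    deviation from the mean position, which in turn equals
    (1/n) * sum over pairs i<j of ||x_i - x_j||^2.

    Returns (bundles, shares, coords):
      bundles[j] : j-th right singular vector, a weighting ("bundle") of issues
      shares[j]  : fraction of the total squared deviation along bundle j
      coords     : each member's coordinates along the top-k bundles
    """
    Xc = X - X.mean(axis=0)
    U, S, Vt = np.linalg.svd(Xc, full_matrices=False)
    shares = S**2 / np.sum(S**2)
    coords = U[:, :k] * S[:k]
    return Vt[:k], shares[:k], coords


if __name__ == "__main__":
    # Hypothetical positions of 4 members on 3 issues; issues 0 and 1 move together.
    X = np.array([
        [ 2.0,  2.0,  0.1],
        [-2.0, -2.0, -0.1],
        [ 1.0,  1.0,  0.0],
        [-1.0, -1.0,  0.0],
    ])
    bundles, shares, coords = issue_bundles(X, k=1)
    print("top bundle:", np.round(bundles[0], 3), "share:", np.round(shares[0], 3))
```

In this toy data the leading bundle loads almost equally on the first two issues and barely on the third. A single direction therefore accounts for nearly all of the disagreement.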