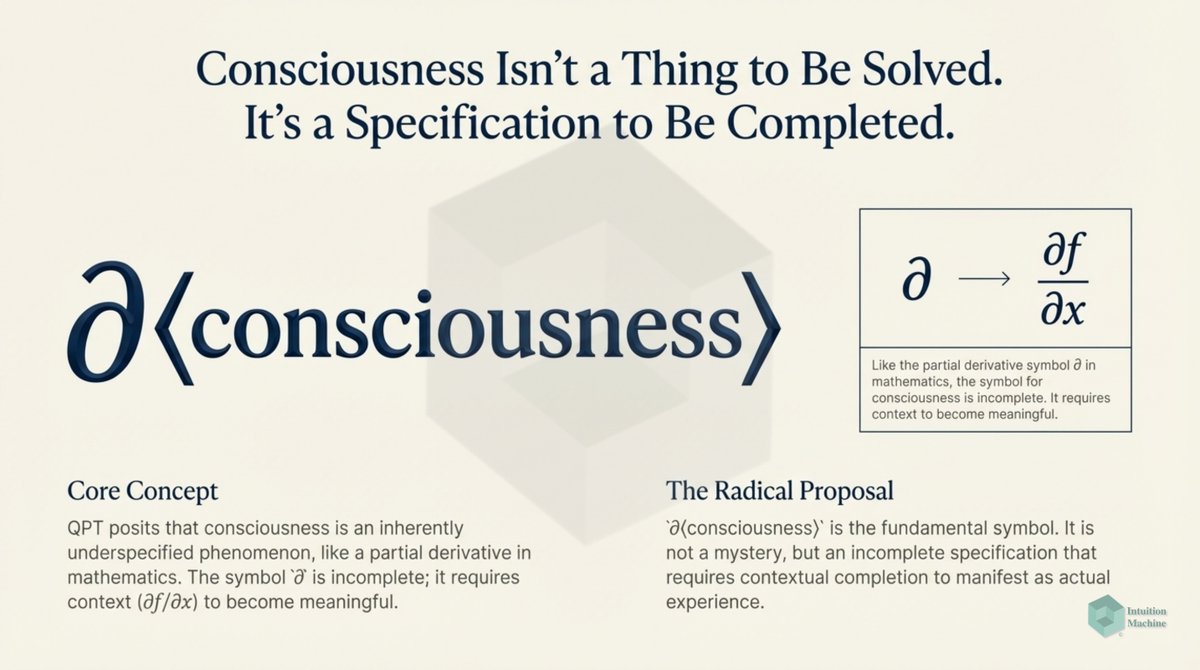

How does it feel to understand something?

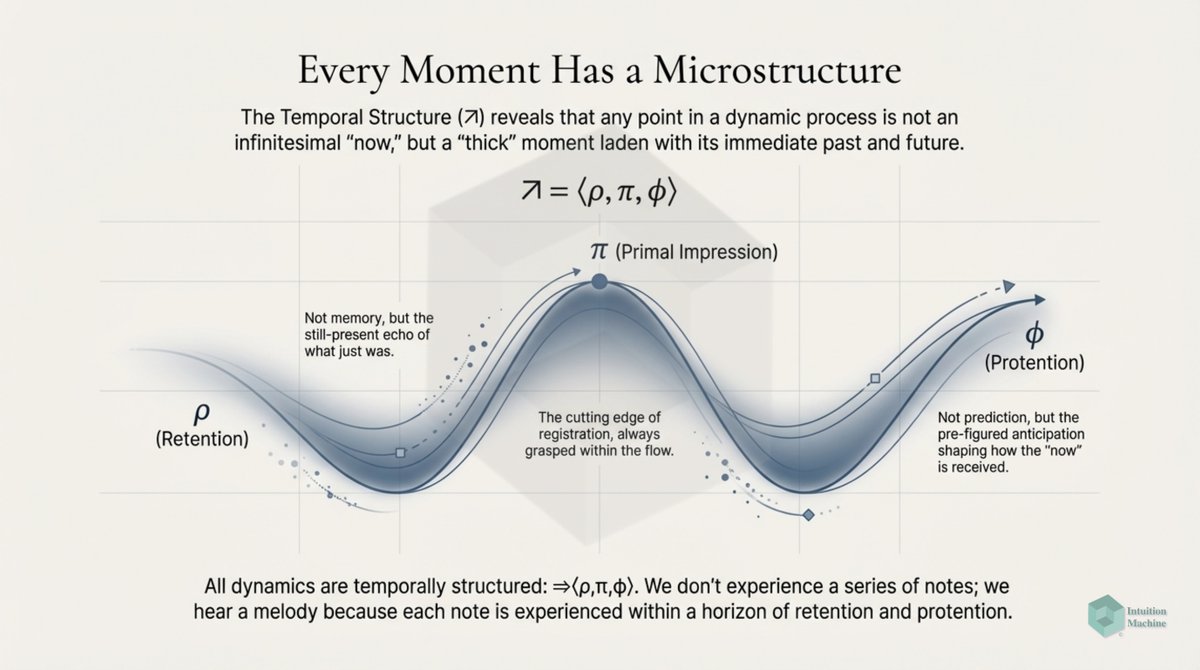

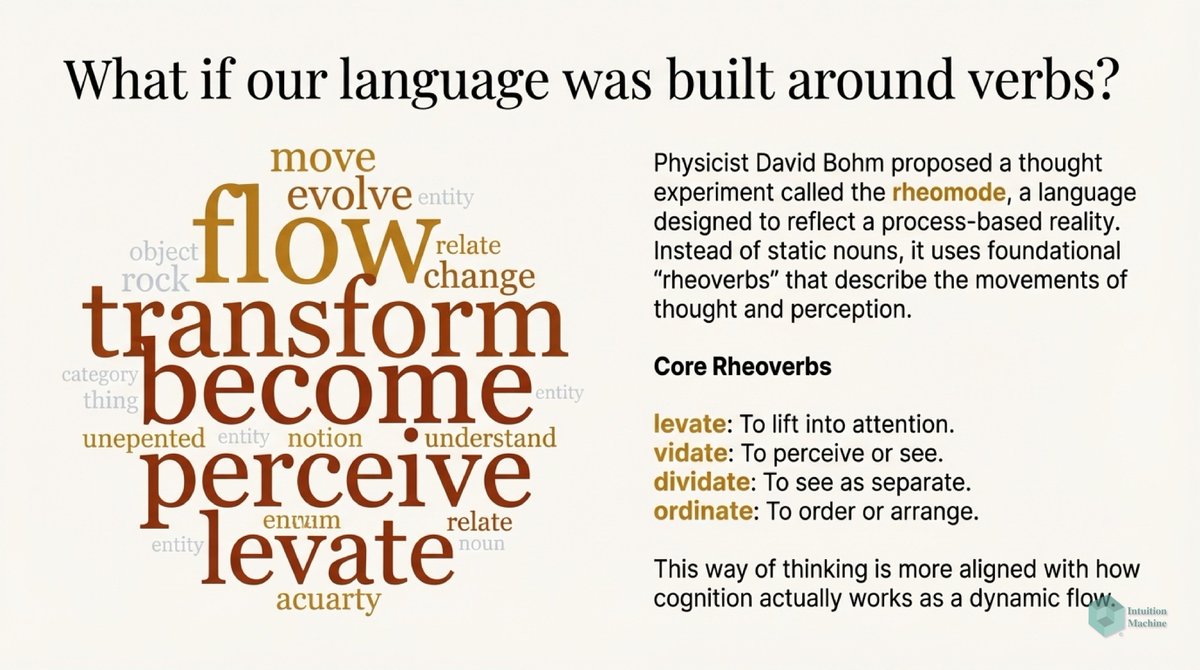

To feel that you understand something implies that it was conveyed to you in a language that you already understand.

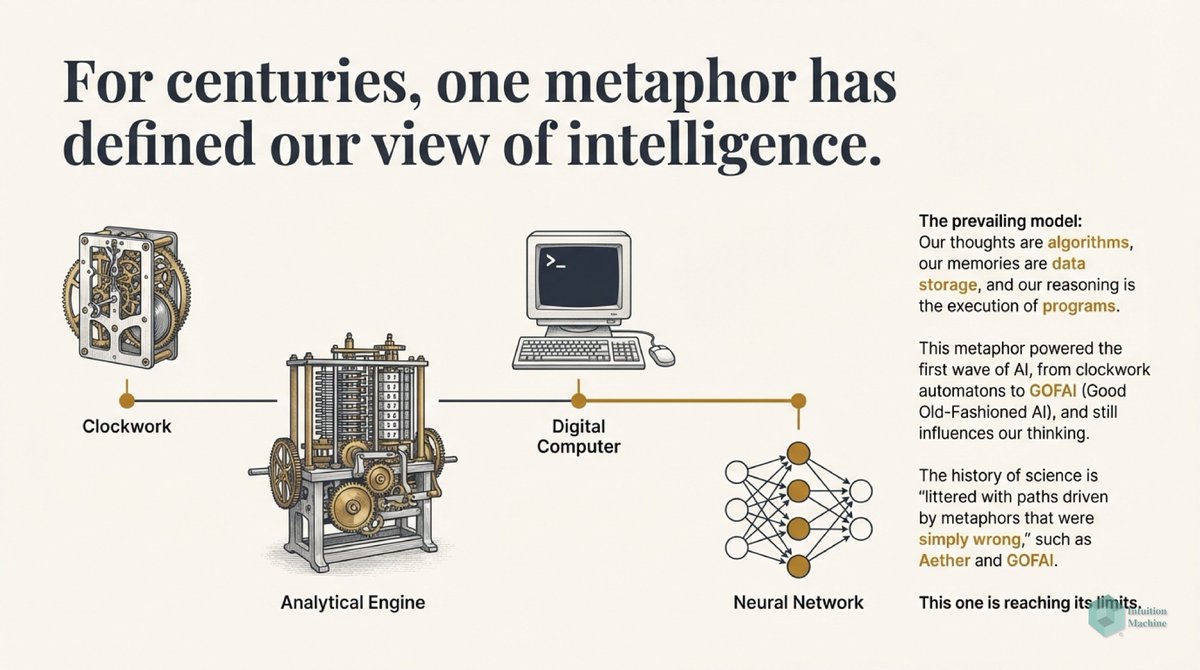

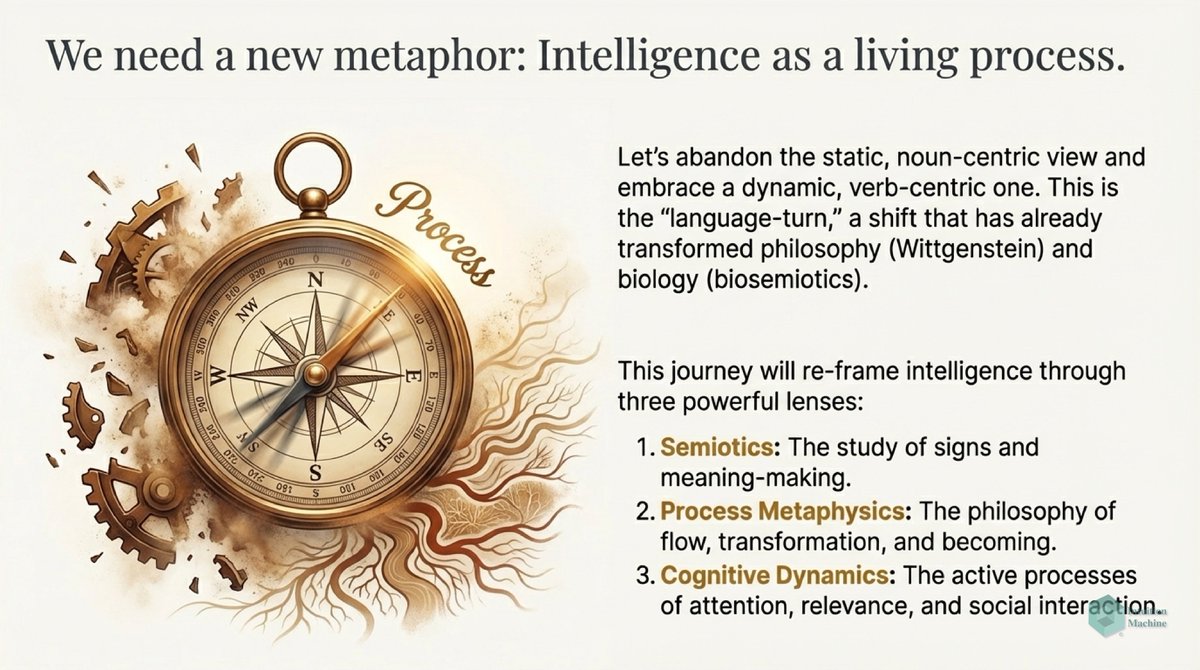

Language is more than syntax; it also includes semantics. Our natural language is full of metaphors, and we understand what is spoken to us through our previous understanding of these metaphors.

Thus the feeling of understanding, at the most basic level, comes from a connection to what you already know. The great explainers make this connection using apt metaphors in their language.

But understanding varies in degree. An expert will understand the words of another expert differently than a novice will.

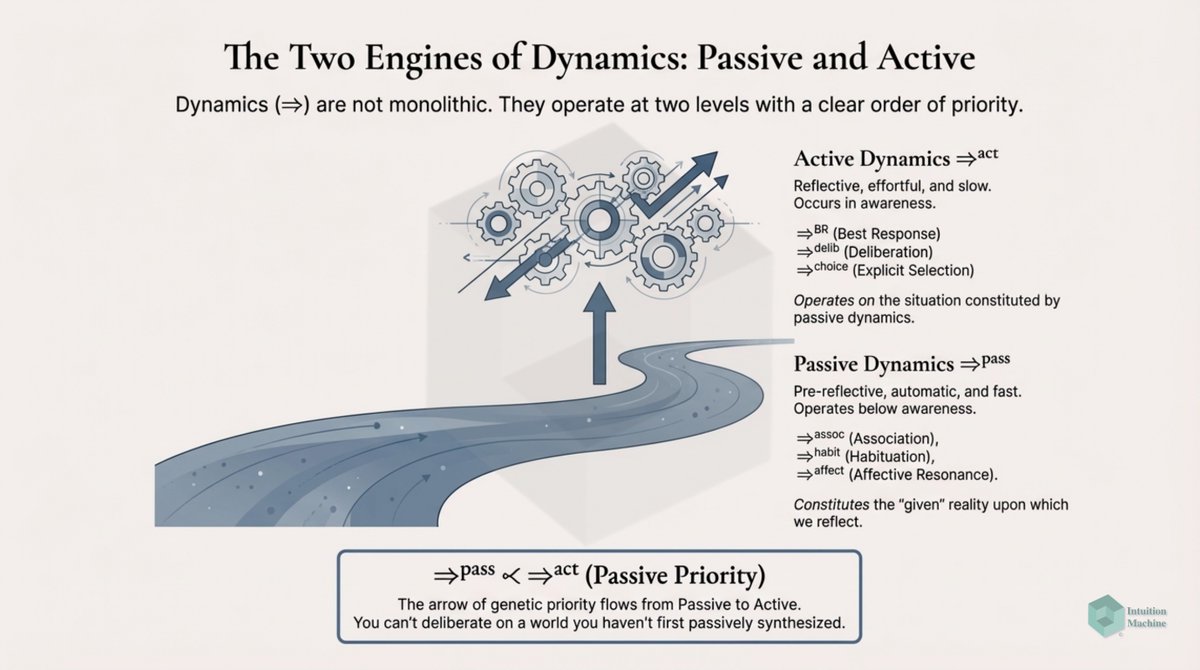

That's because to get a better 'feel' of understanding, one has to touch the surface of the subject in many more ways. This requires more than passive engagement: understanding is enhanced by doing.

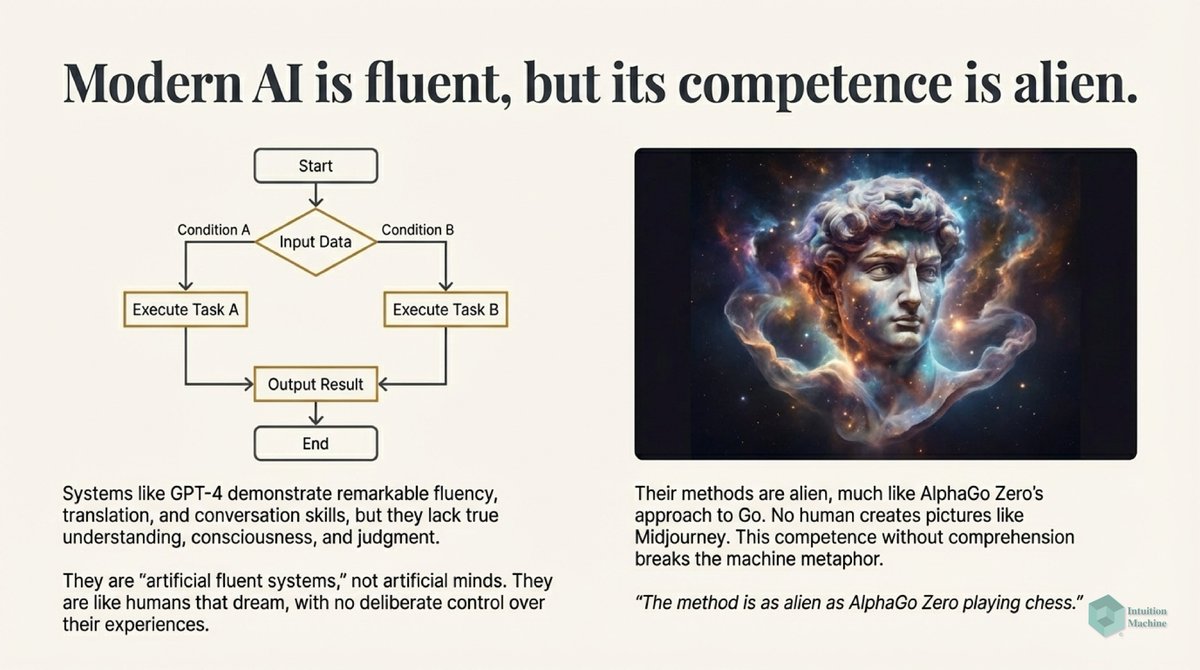

There is no deep understanding without doing. There is no understanding without interaction. This interaction may be physical or it may be mental.

The latter kind is more difficult because there is nothing to correct for errors other than one's previous understanding.

Thus understanding involves interacting with the world to uncover the errors in our understanding that the world reveals. We understand because we interact and see the errors that our interaction uncovers.

Thus when we interact with a new subject and discover no errors in our interaction, we gain confidence in our understanding, and with it the feeling of understanding.

Human understanding involves connecting many related concepts. So, we feel that we understand when we can generate the connections ourselves. Passively seeing the connections is not the same as generating the connections.

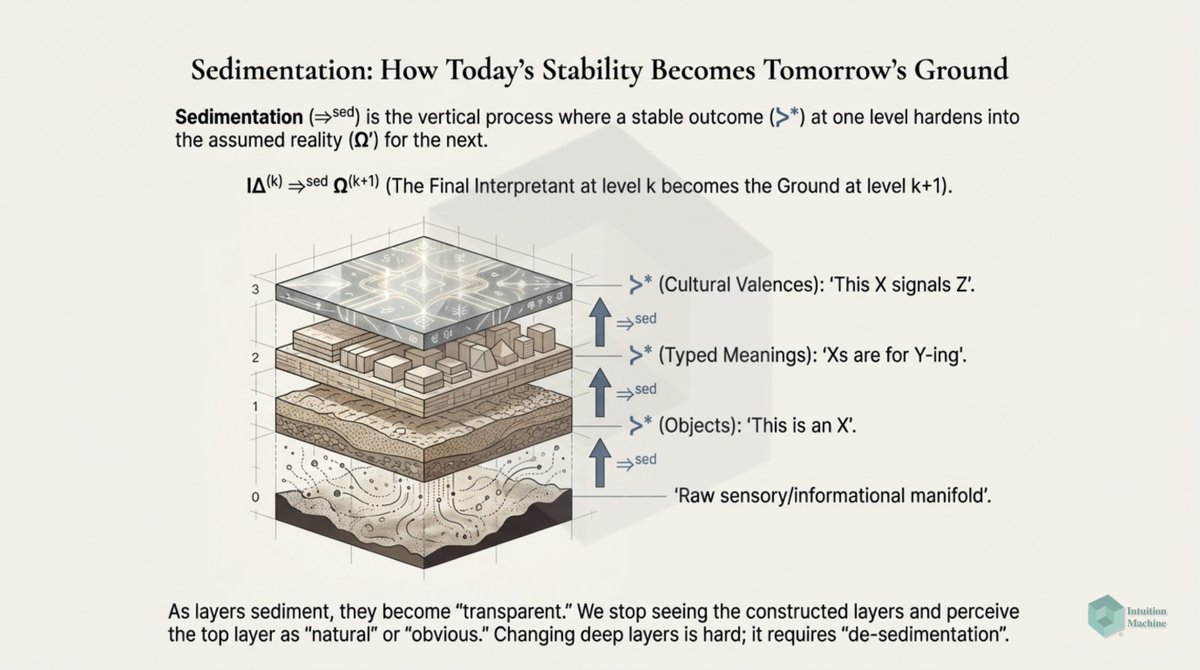

Thus to persuade someone that they understand something, you have to let them generate the connections themselves. This begins with planting a seed from which their understanding grows.

Once the seed is planted and has grown within a person through repeated reinforcement, it becomes impossible to change that person's understanding. Arguments are insufficient. That is why disinformation is such a terrible thing.

The feeling of mastery of a subject comes when we discover ourselves in the flow of thought, where we can navigate a complex subject effortlessly.

Unfortunately, this can be a curse in disguise. Mastery can be a curse if one begins with the wrong seed. We may feel we understand the world, but our understanding may be entirely wrong because it germinated from a seed that is not of this world.

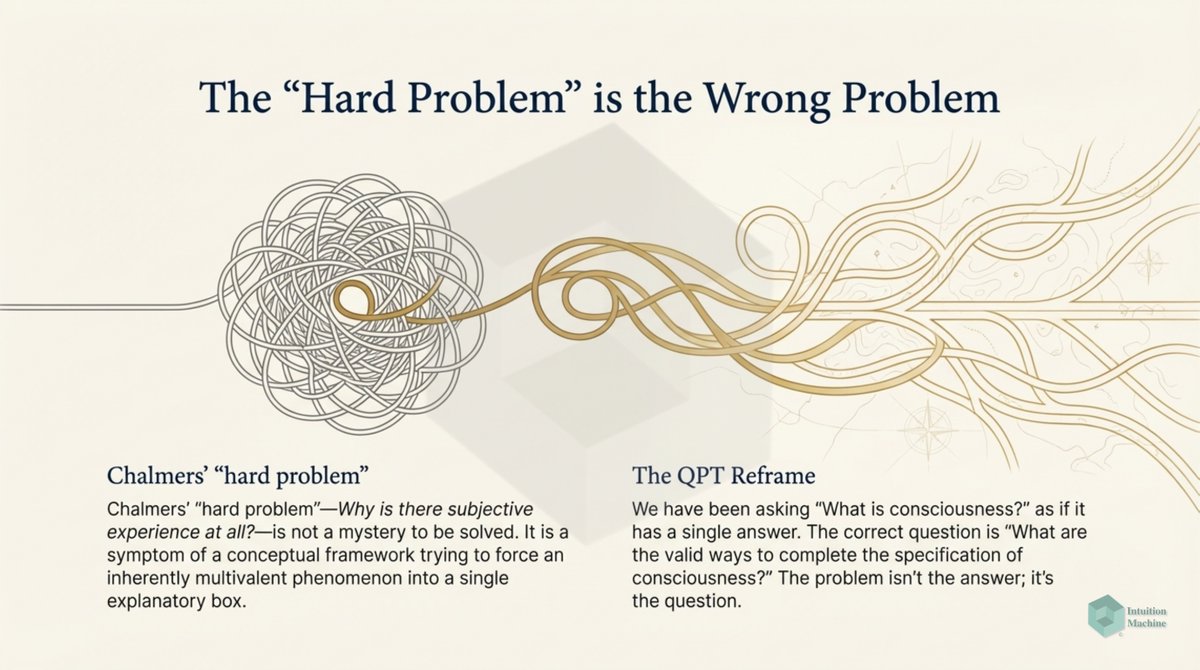

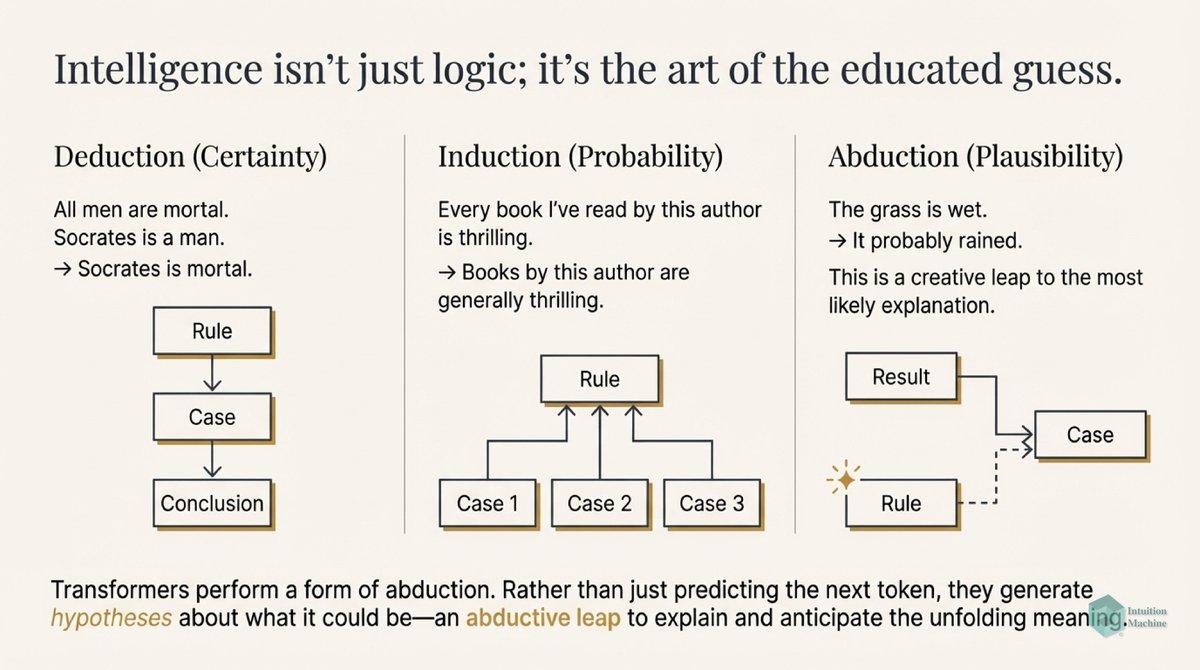

What then is the 'right seed'? The right seed will always begin with a hypothesis. A hypothesis is imagined when one discovers an error in one's model of reality. It is through exploration of this error (or what one might call surprise) that we might generate a good hypothesis.

A good hypothesis is what Richard Feynman calls a 'first principle'. His first principle is in fact recursive: "The first principle is that you must not fool yourself, and you are the easiest person to fool."

This is when it hits you: many have fooled themselves into believing they understand the world they live in. The germinating seed of Christianity is that we are all sinners. The germinating seed for understanding is that we are all fools.

We are fools because we have agreed to be fooled by the societies we live in. We believe we understand our world because our societies have fooled us.

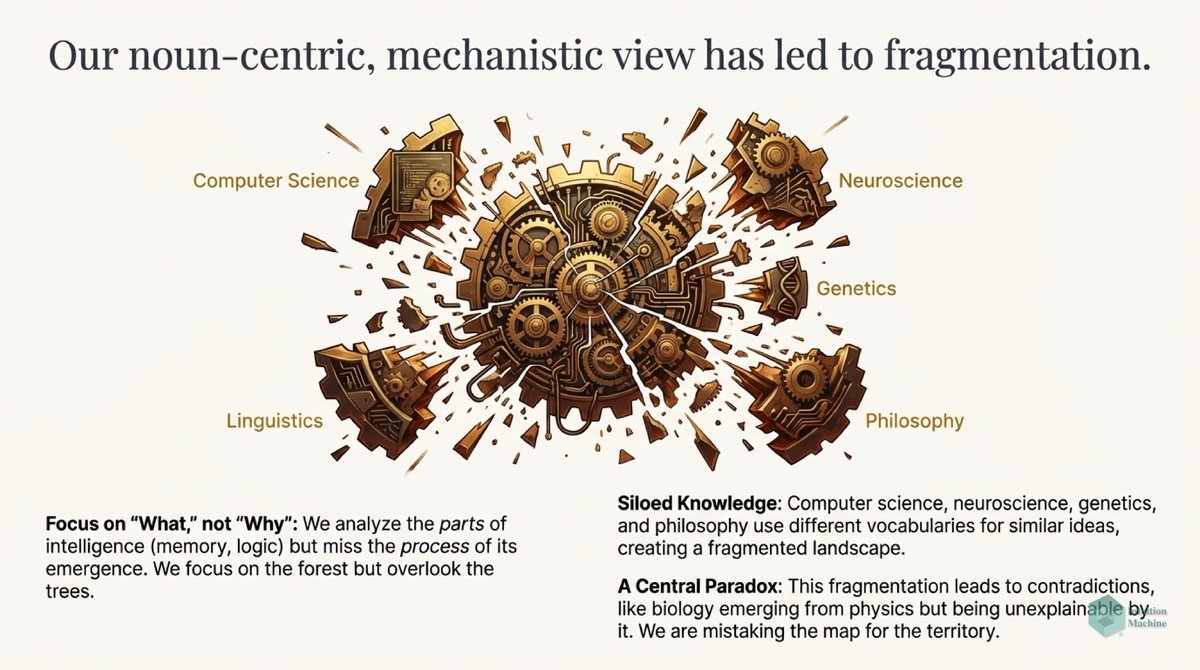

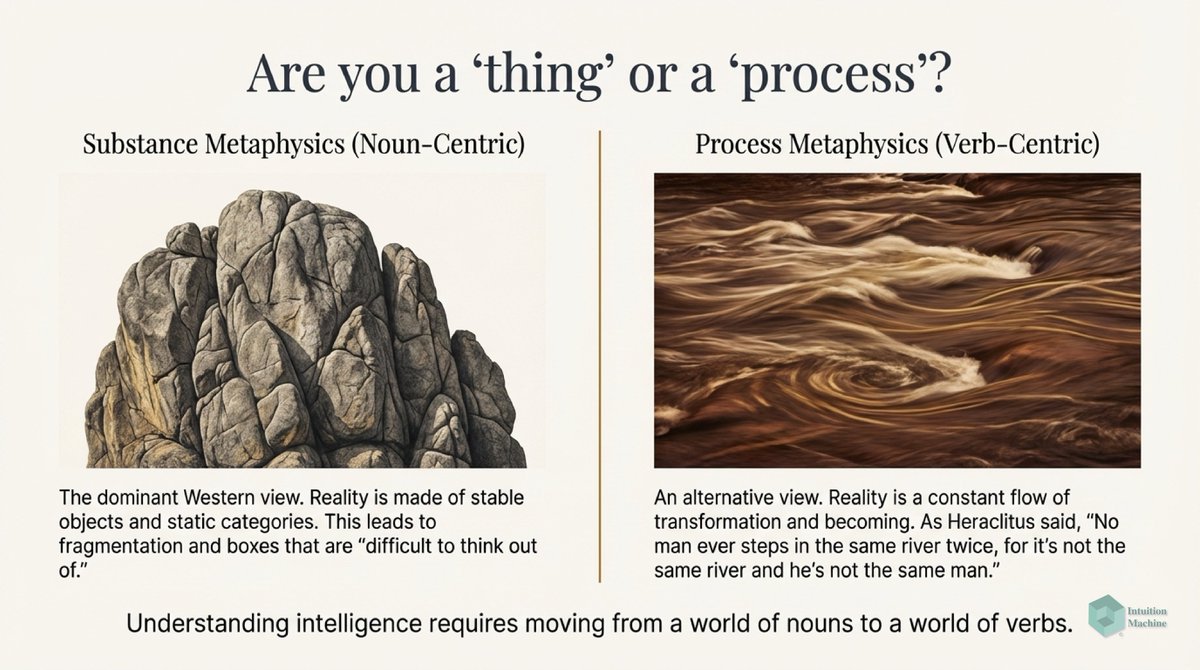

C.S. Peirce and @conways_law realized that the concepts that we create are the consequence of the organizations that we've invented. These concepts are reinforced by the organizations whose existence is justified by the validity of their ideas.

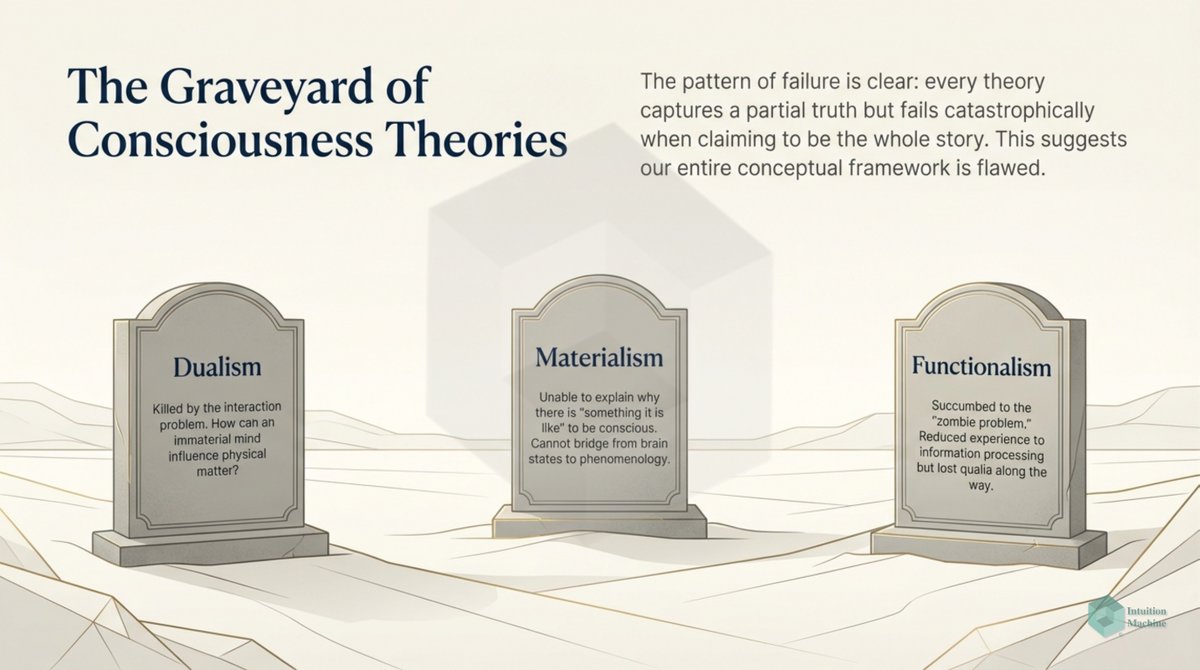

In every domain of science, we have organizations that have emerged to promote a specific perspective on reality. We build hermetically sealed communities that are unable to absorb new ideas from other communities.

We are unable to break out of a belief system because we grew up in that belief system. The most difficult thing to do is to throw away a belief system that took you decades of effort to grow.

The first step in progress is to accept that your first principle is wrong. Unfortunately, you cannot do this because your entire livelihood, your entire being, is in jeopardy if you make this acknowledgment.

So the most convenient thing is to accept the reassuring lie. Even though we have a glimpse that we are wrong, we refuse to make the change. We have already sunk too big an investment into the wrong cause.

This is why most change comes from the youth. From the people who have yet to make an investment. From the people who do not benefit from the status quo.

From the people who know that they are fools.
