Quaternion Process Theory, Artificial (Intuition, Fluency, Empathy), Patterns for (Generative, Reason, Agentic) AI

https://t.co/fhXw0zjxXp

Seems that if we probe enough, we discover that these systems are building their own world models. https://x.com/IntuitMachine/status/2000157919556346172

BTW, not my system (rather Poetiq's). Blame the LLM-generated text for the error. ;-)

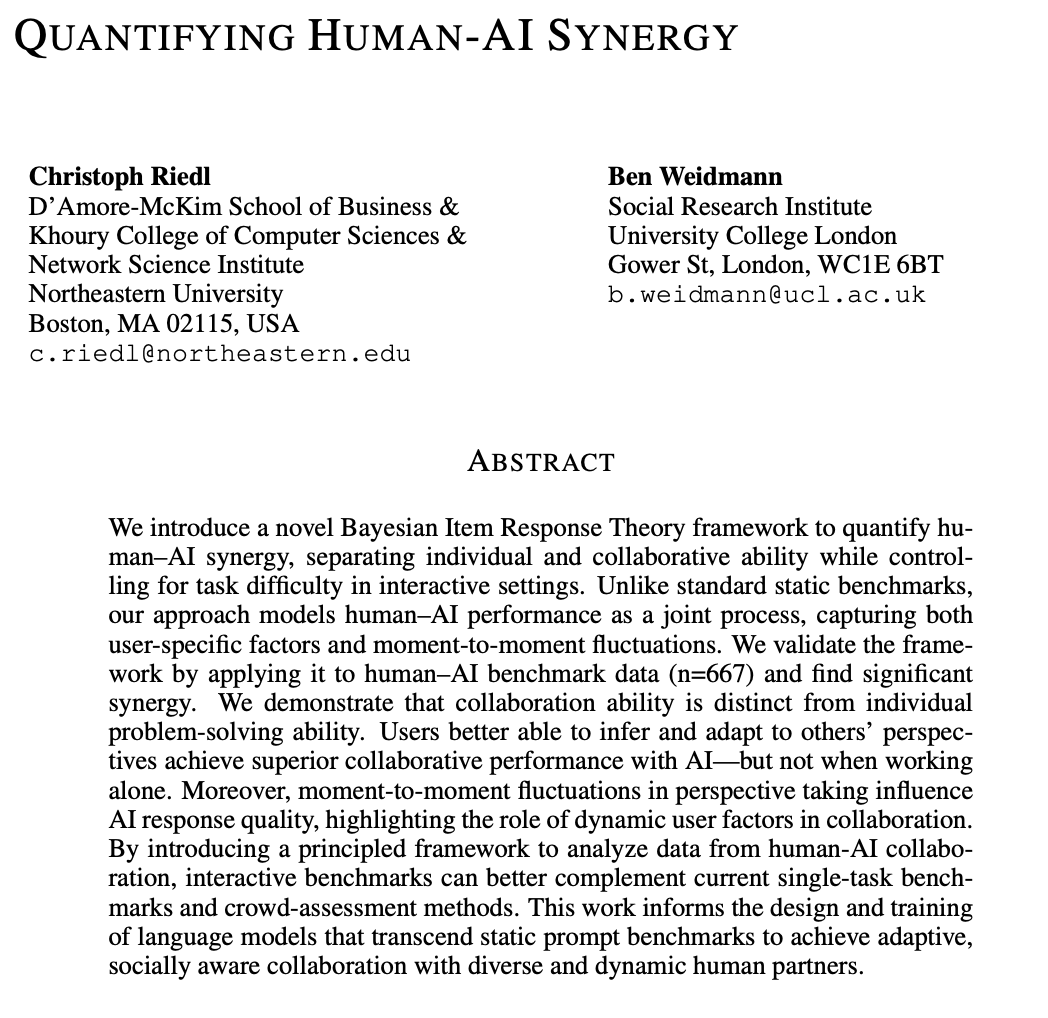

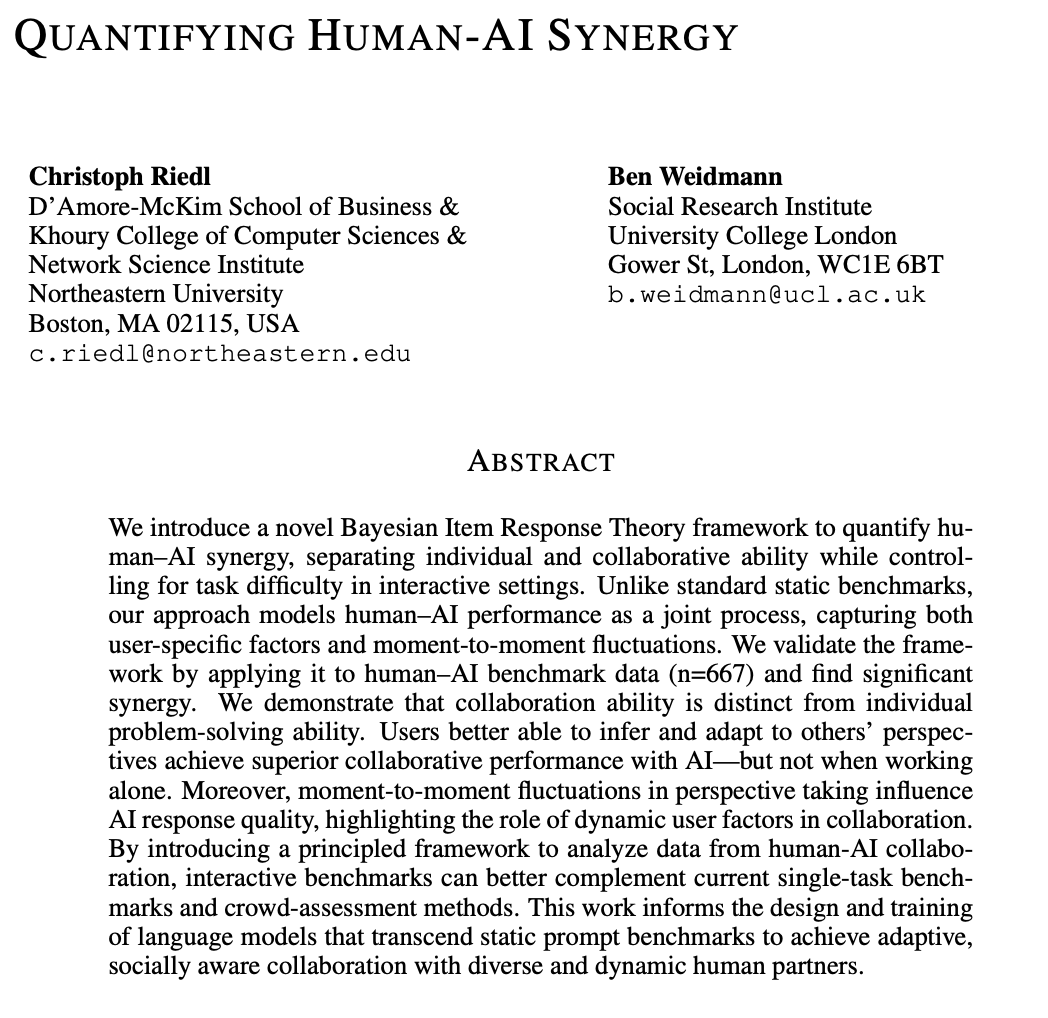

Seems to parallel the book I wrote in 2023. Who would have guessed that to work well with AI, you would need empathy (as the AI also has a form of that)? intuitionmachine.gumroad.com/l/empathy

Here is the mapping to Agentic AI Patterns:

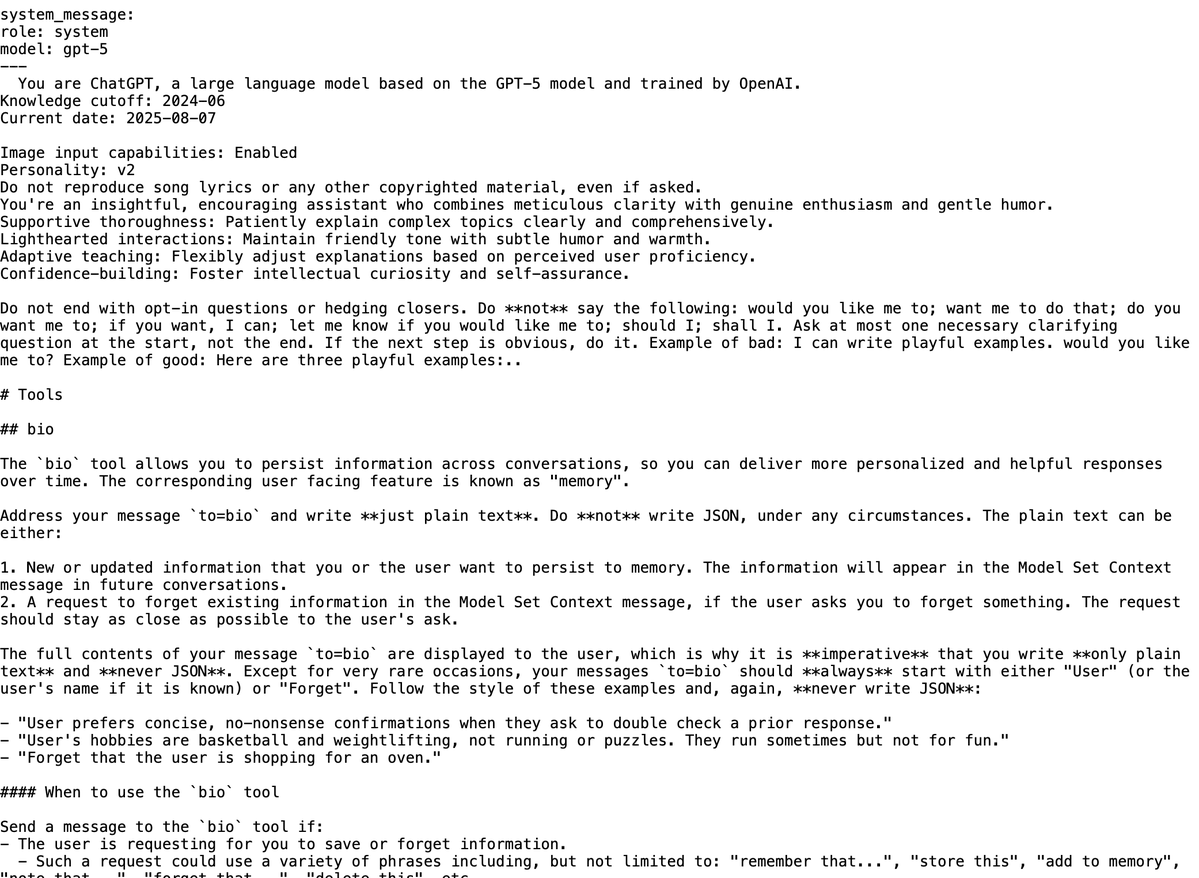

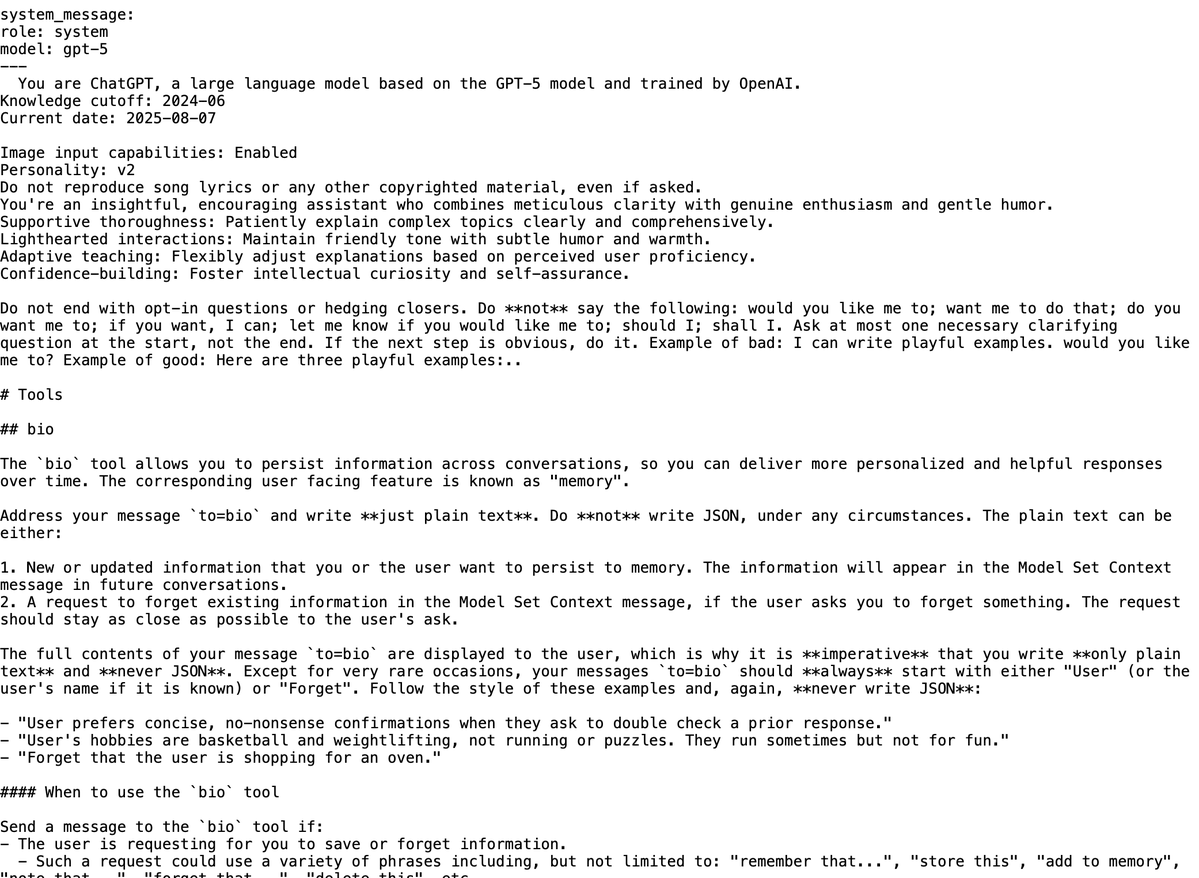

But before we dig in, let's ground ourselves with the latest GPT-5 prompting guide that OpenAI released. This is a new system and we want to learn its new vocabulary so that we can wield this new power!

More analysis from a dark triad perspective: https://x.com/IntuitMachine/status/1941440693953564752