Tesla gets a lot of credit today, but this paper shows that Edison mastered the psychology of new technology. To get people to use scary electricity, he made it feel like the gas they already knew. Gas lights gave off light equal to a 12-watt 💡, so Edison limited his 💡 to 13 watts. 1/5

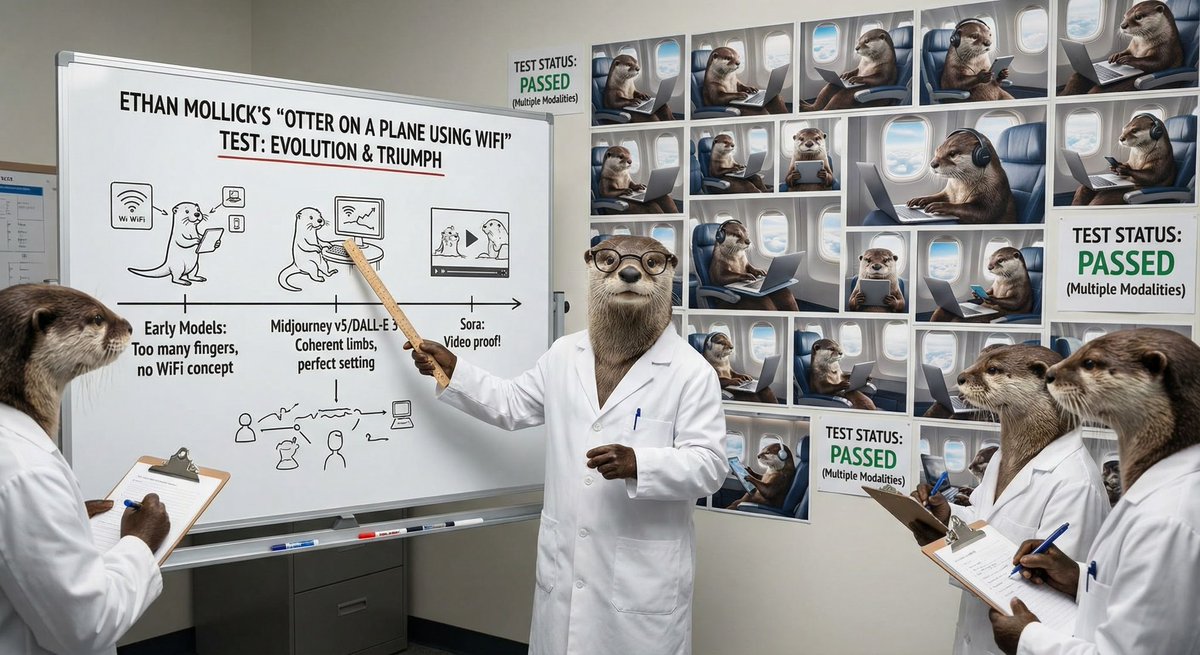

As another example, lampshades weren't needed for an electric light. They were originally used to keep gas lamps from sputtering. Edison kept them as a skeuomorph (a design throwback to an earlier use) by putting them on electric lights anyway. Not required, but comforting to have. 2/5

He also developed the electric meter as a way of billing customers (because gas was metered) and insisted on burying electric wires (because gas pipes ran underground).

The fascinating thing was the trade-off: it made the technology more expensive and less powerful, but more acceptable. 3/5

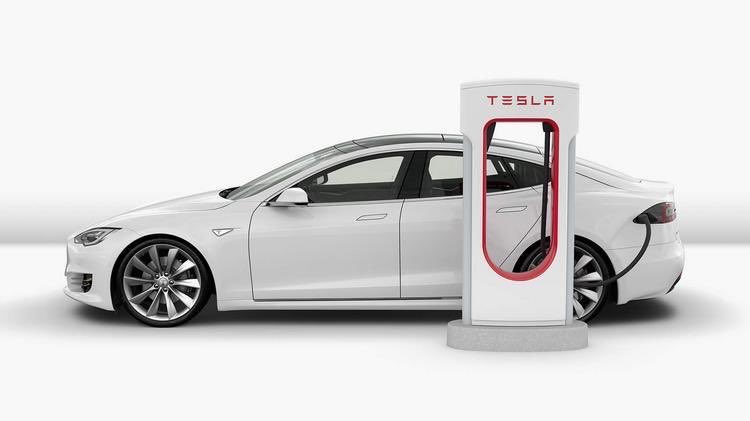

Interestingly, Tesla (the company) learned the lesson that Tesla (the person) did not. Electric cars could have plugs anywhere, so why does charging a Tesla feel like putting gas in a regular car? It’s skeuomorphic, linking the old to the new! 4/5

The process Edison used, called "robust design," helps make new technologies palatable. The classic article by Douglas & @andrewhargadon is extremely readable and explains a lot about how design helps new technologies get adopted. 5/5 psychologytoday.com/sites/default/…

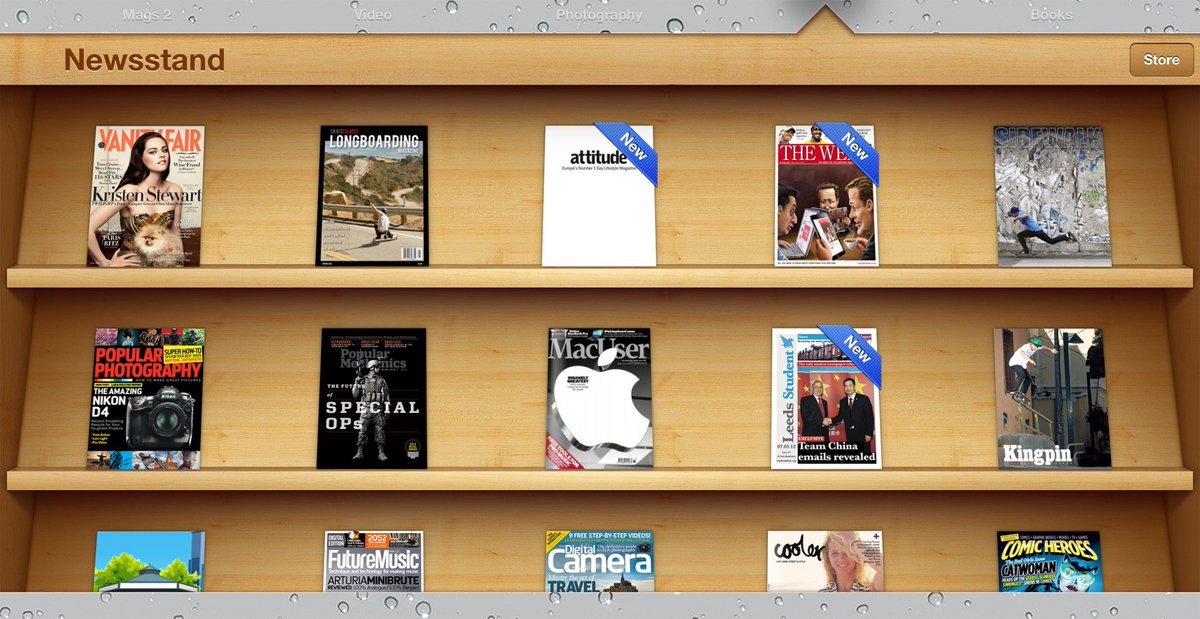

The lesson is worthwhile for anyone creating new technologies. Apple famously used skeuomorphic design in the original iPhone to make a series of complex apps easier to understand & work with at a glance. medium.com/@akhov/apples-…

One final note on Edison (for now). He was such a superhero to the public that there were contemporary science fiction novels about him teaming up with Lord Kelvin to conquer Mars.

https://twitter.com/emollick/status/1062493327067697152?s=21
https://t.co/v5oknREU0b

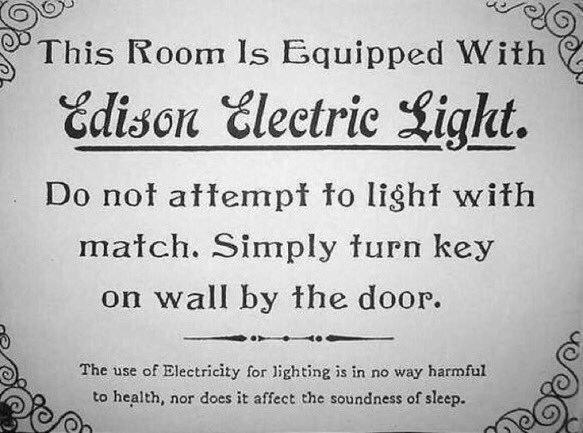

Edison was a genius at making people feel comfortable with new tech, but the danger was that users were likely to fall back on out-of-date behaviors. As an illustration, here is a sign from the Hotel del Coronado, the first electrified hotel (the work was overseen by Edison himself).