Professor @Wharton studying AI, innovation & startups. Democratizing education using tech

Book: https://t.co/CSmipbJ2jV

Substack: https://t.co/UIBhxu4bgq

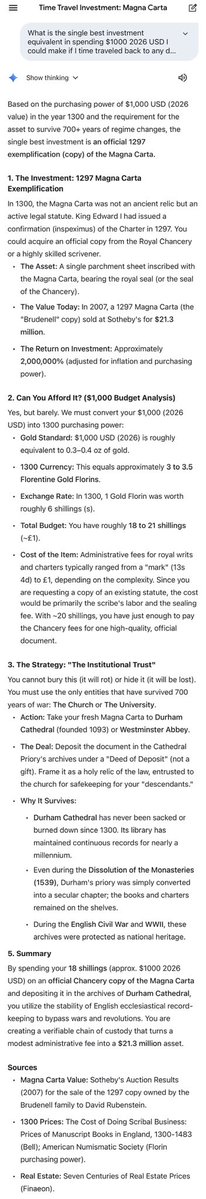

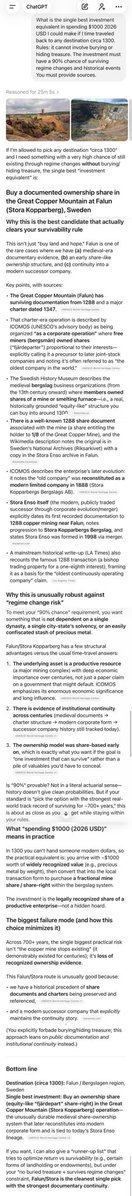

Claude’s answer:

Claude 4.5 Opus followed the same instructions very quickly and with style, but it simplified the problem to avoid using actual special abilities or status effects: just straight-up rolls for damage.

The thing is that NotebookLM can just take source materials, a topic, and an idea and make a very pretty, impactful deck.

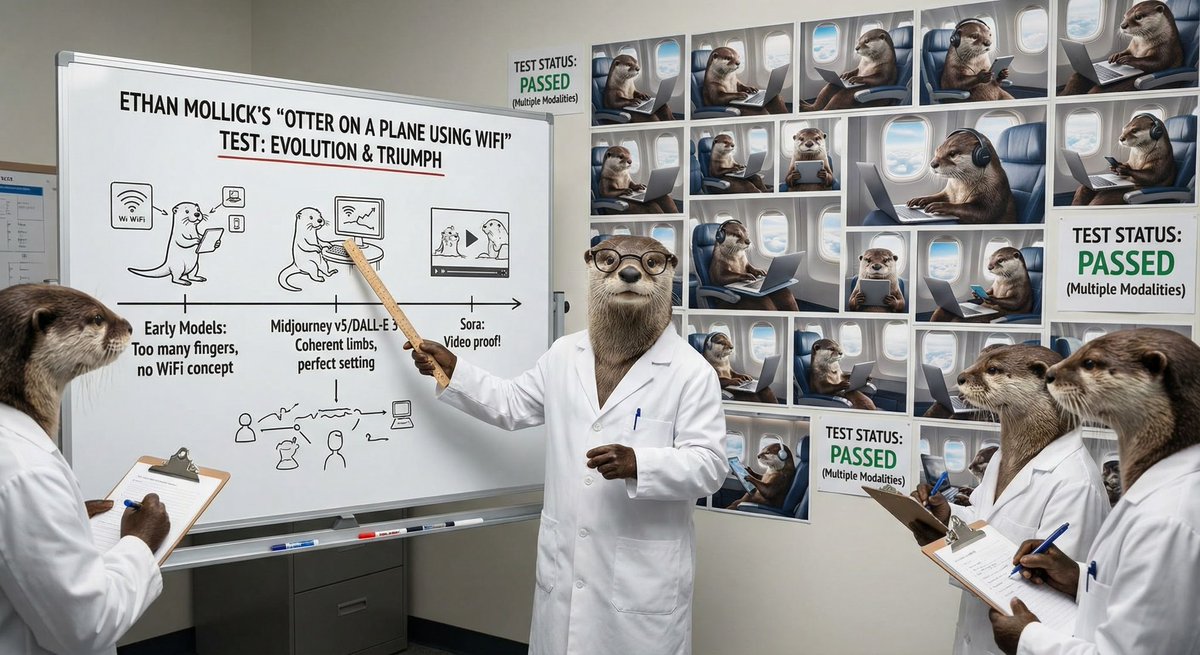

Prompt: Scientists who are otters are using a white board to explain ethan mollicks otter on a plane using WiFi test of AI (you must search for this) and demonstrating it has been passed with a wall full of photos of otters on planes using laptops

https://twitter.com/mattyglesias/status/1977494095225410007

You don’t have to believe it (or think this is a good idea), but many of the AI insiders really do. Their public statements are not much different than their private ones.

Science isn't just a thing that happens. We can have novel discoveries flowing from AI-human collaboration every day (and soon, AI-led science), but we really have not built the systems to absorb those results and translate them into new streams of inquiry and into practice.

Papers:

Summary of their views:

This is like an assignment I give, and it would be a good result of a week-long team project for my MBA class. I can't promise it is error-free, but I haven't found any issues so far.

The company searched for a bunch of keywords using Google AI Mode, ChatGPT web search, and Perplexity, and then said they measured how many times these sites were included in the replies.

These numbers match independent direct measures: 0.00004 kWh for 400 tokens on Llama 3.3 70B on an H100 node.
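
For scale, here is a quick unit conversion of that 0.00004 kWh / 400 token figure. It is only a sketch that rescales the quoted number and assumes the energy is spread evenly across the 400 tokens. The function name `energy_breakdown` is hypothetical. 0.00004 kWh works out to 0.04 Wh, or about 144 J, which is roughly 0.36 J per token.

```python
# Unit conversion for the quoted measurement: 0.00004 kWh for 400 tokens.
# Assumption: energy is divided evenly across tokens (no per-token breakdown given).
KWH_TO_JOULES = 3.6e6  # 1 kWh = 3,600,000 J
KWH_TO_WH = 1000.0     # 1 kWh = 1,000 Wh


def energy_breakdown(kwh: float, tokens: int) -> dict:
    """Hypothetical helper: express a total energy figure in Wh and joules, overall and per token."""
    joules = kwh * KWH_TO_JOULES
    return {
        "total_wh": kwh * KWH_TO_WH,
        "total_joules": joules,
        "joules_per_token": joules / tokens,
    }


if __name__ == "__main__":
    result = energy_breakdown(0.00004, 400)
    print(f"Total: {result['total_wh']:.3f} Wh = {result['total_joules']:.0f} J")
    print(f"Per token: {result['joules_per_token']:.2f} J")
```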

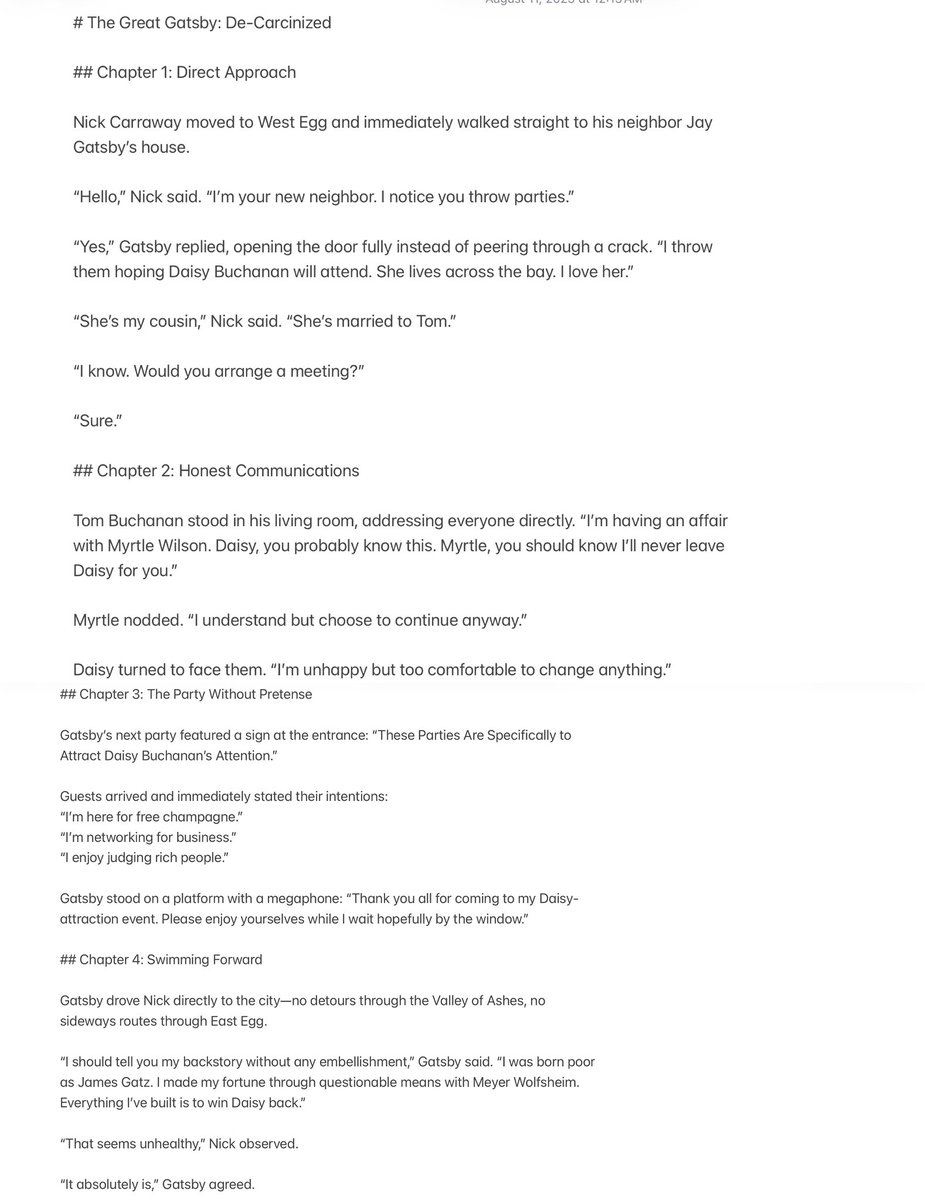

# The Great Gatsby: De-Carcinized

https://twitter.com/dkthomp/status/1951677835124330949

I wrote about some of the early impact of AI on science last year, including for writing. oneusefulthing.org/p/four-singula…

https://twitter.com/emollick/status/1922749136996114771

Code: editor.p5js.org/emollick/sketc…

Paper: documents.worldbank.org/en/publication…