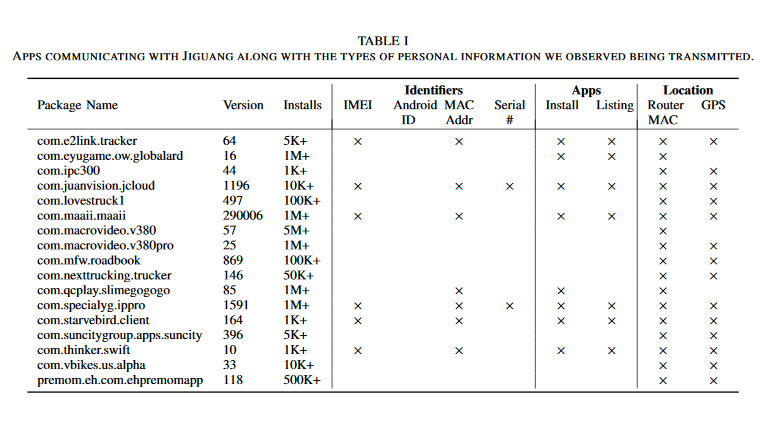

Android apps ranging from dating and fertility apps to selfie editors share personal data with the Chinese company Jiguang through its SDK embedded in the apps, including GPS locations, immutable device identifiers, and information on all apps installed on a phone.

Report: blog.appcensus.io/2020/09/15/rep…

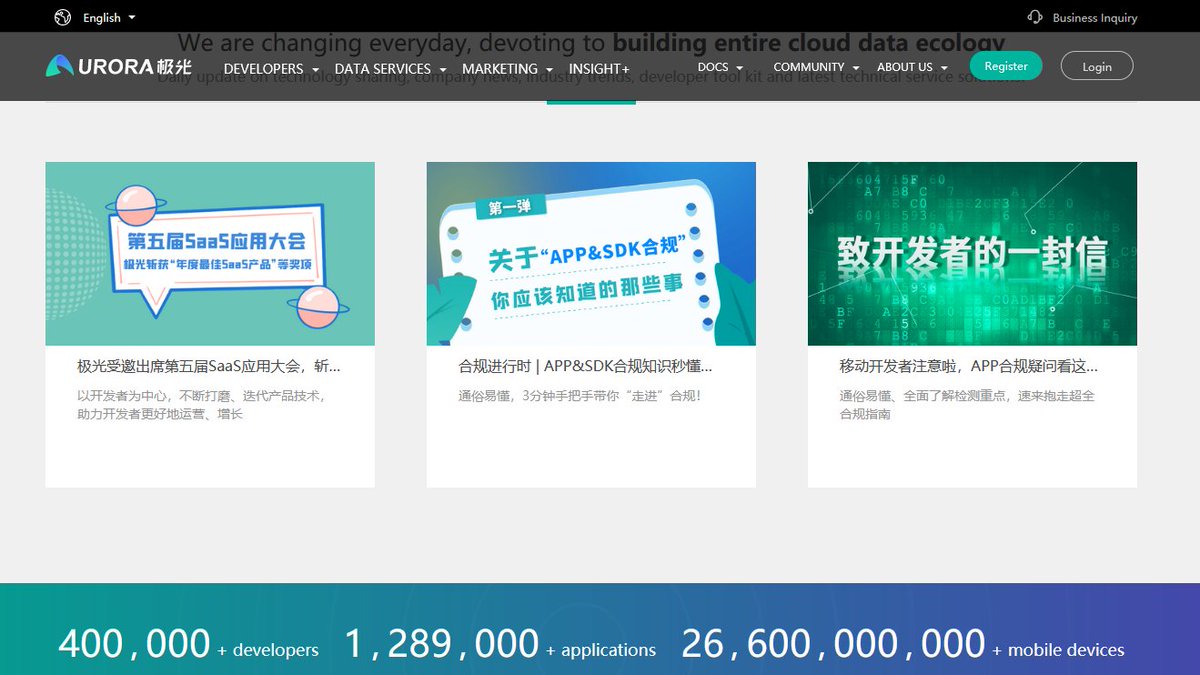

Jiguang, also known as Aurora Mobile, claims to be present in more than 1 million apps and on more than 26 billion mobile devices, which seems wildly exaggerated.

jiguang.cn/en/

Anyway, researchers found Jiguang's SDK in about 400 apps, some of them with hundreds of millions of installs.

According to the paper, Jiguang’s SDK is "particularly concerning because this code can run silently in the background without the consumer ever using the app in which it is embedded". Also, the SDK uses several methods to "obfuscate and hide" its "behavior and network activity".

While many "previous research efforts focused on SDKs specialized in analytics and advertising services, the results of our analysis call for the need of analyzing and regulating …the whole third-party SDK ecosystem due to their privacy and consumer protection implications".

Yes

Study is by @AppCensusInc and @IDACwatchdog. Here's the link to the PDF report:

icsi.berkeley.edu/pubs/privacy/T…

Please note that there are hundreds, if not thousands, of data companies based in the US, Europe, Russia, Singapore, India, and other countries that are doing similar things.

Nevertheless, Jiguang/Aurora is special in some ways.

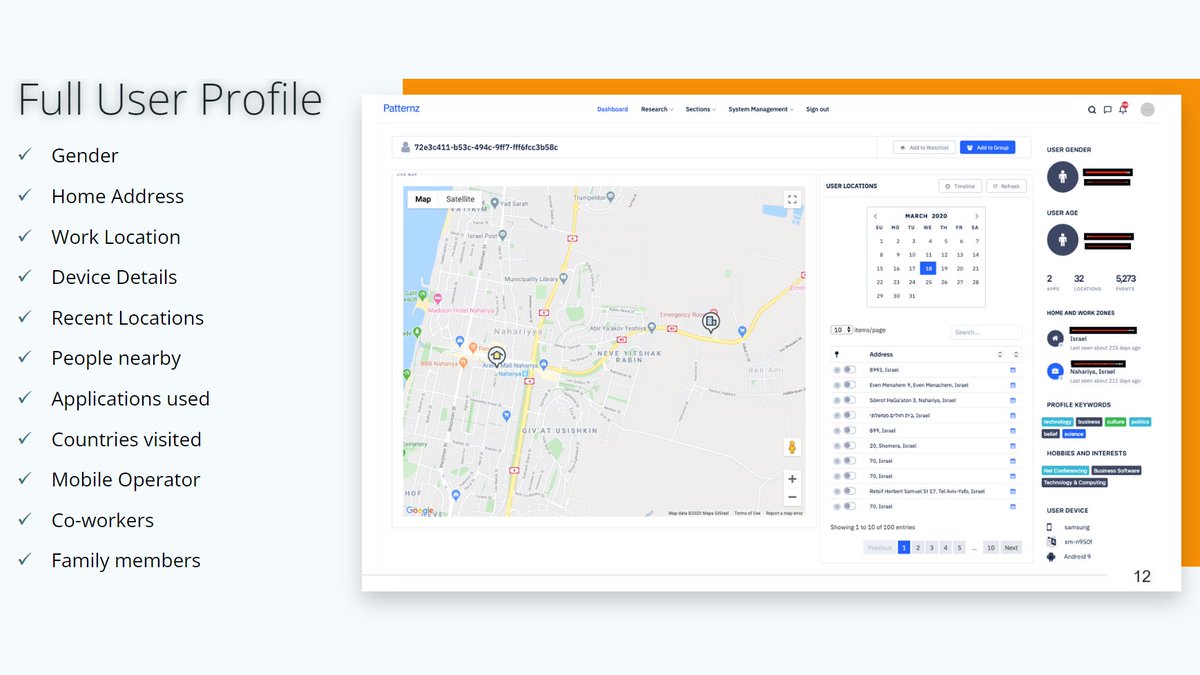

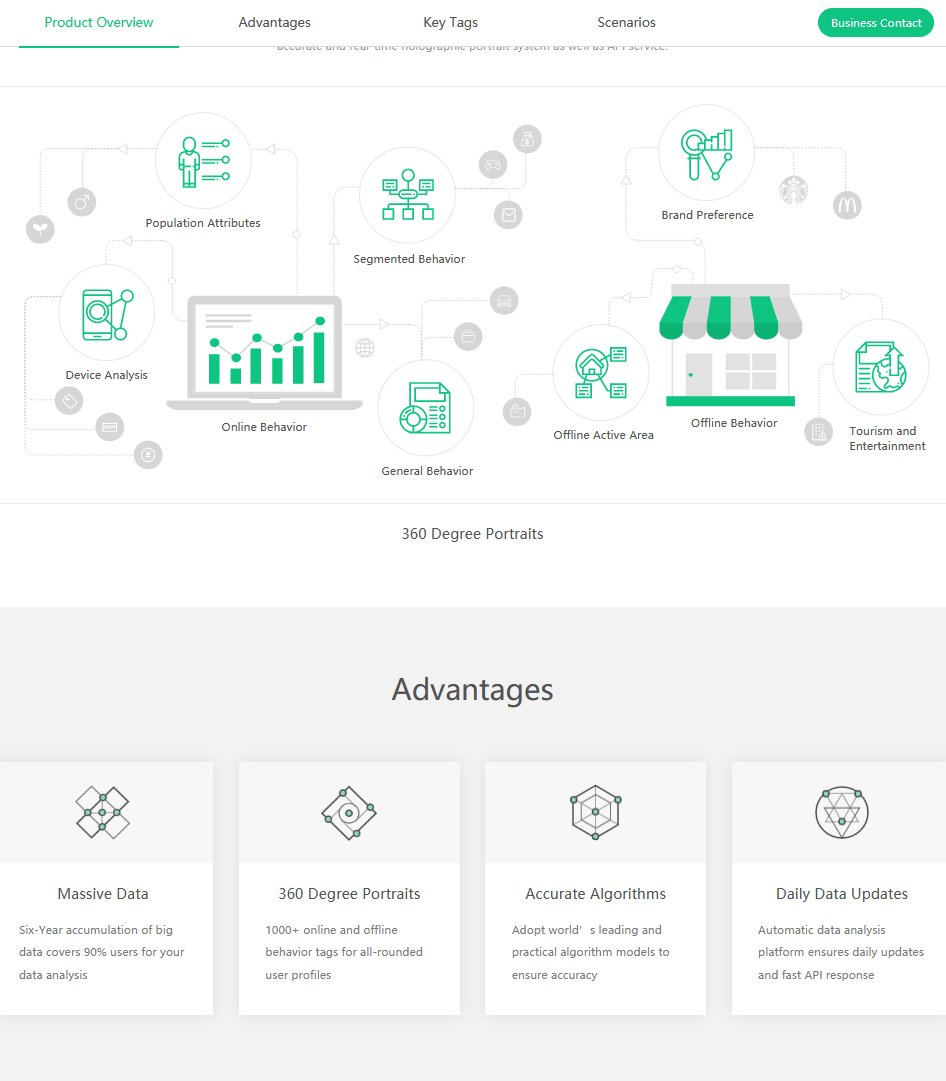

Like many other mobile data brokers all across the world, they sell so-called audience data, i.e. extensive user profiles with hundreds of attributes that can be used for surveillance-based advertising:

jiguang.cn/en/iaudience

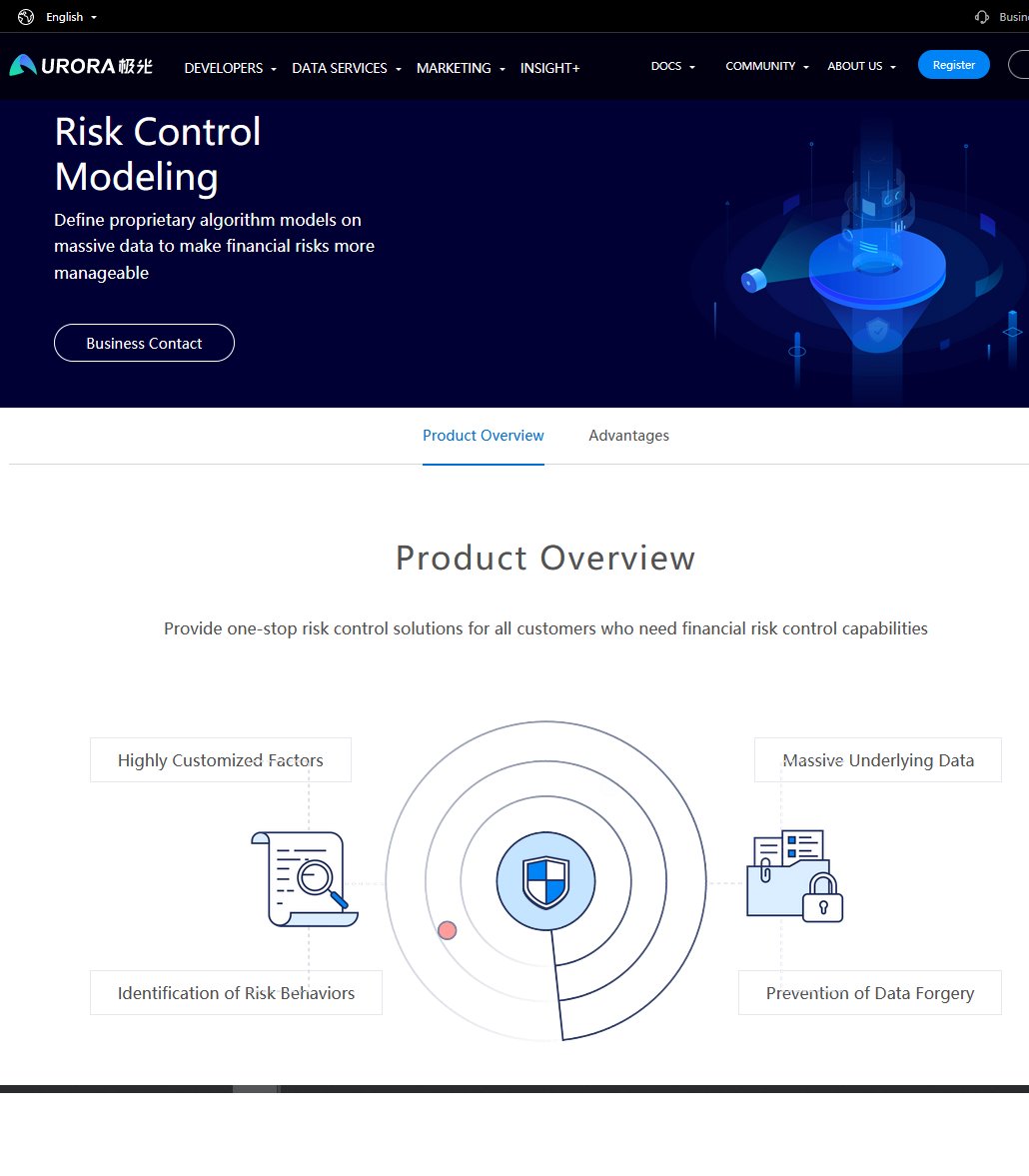

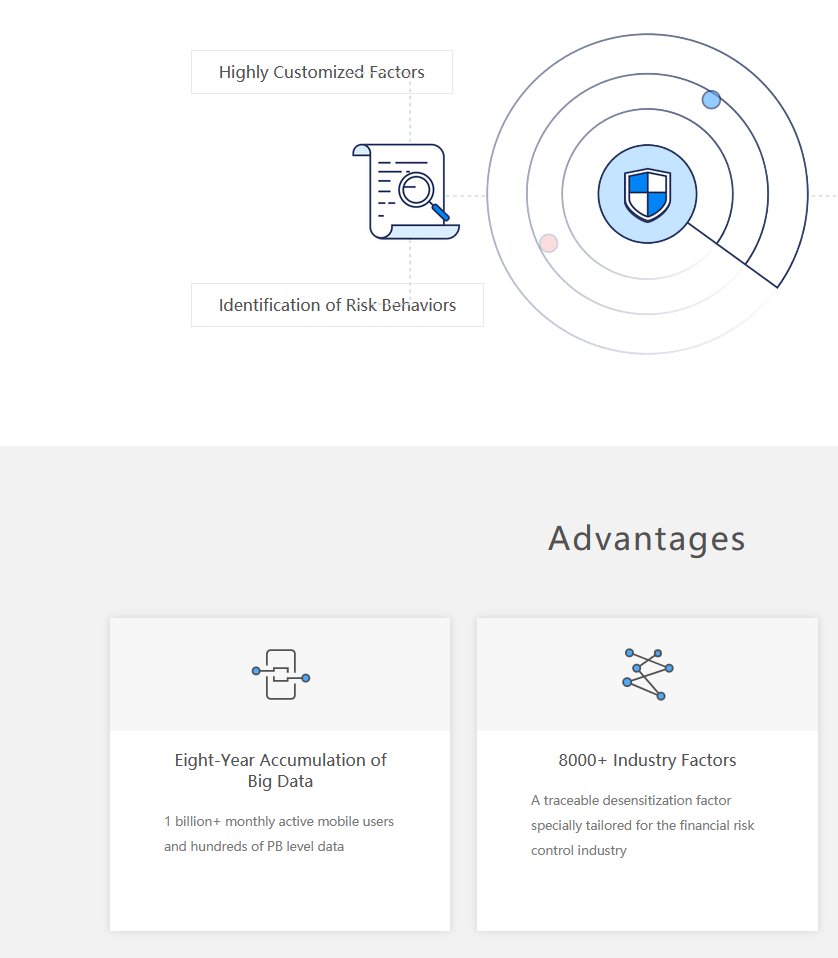

On the same website, they openly suggest that customers can also use eight years of accumulated data on '1 billion+ monthly active mobile users' for 'financial risk control':

jiguang.cn/en/fintech

They say they identify the 'user's risk level' for 'corporate lending', 'relying on years of data accumulation'.

'JIGUANG’s data service objectively reflects user’s repayment willingness' ... 'highly correlative to the overdue behavior of loan clients'

jiguang.cn/en/anti-fraud

They also sell data for other purposes not related to advertising.

jiguang.cn/en/izone

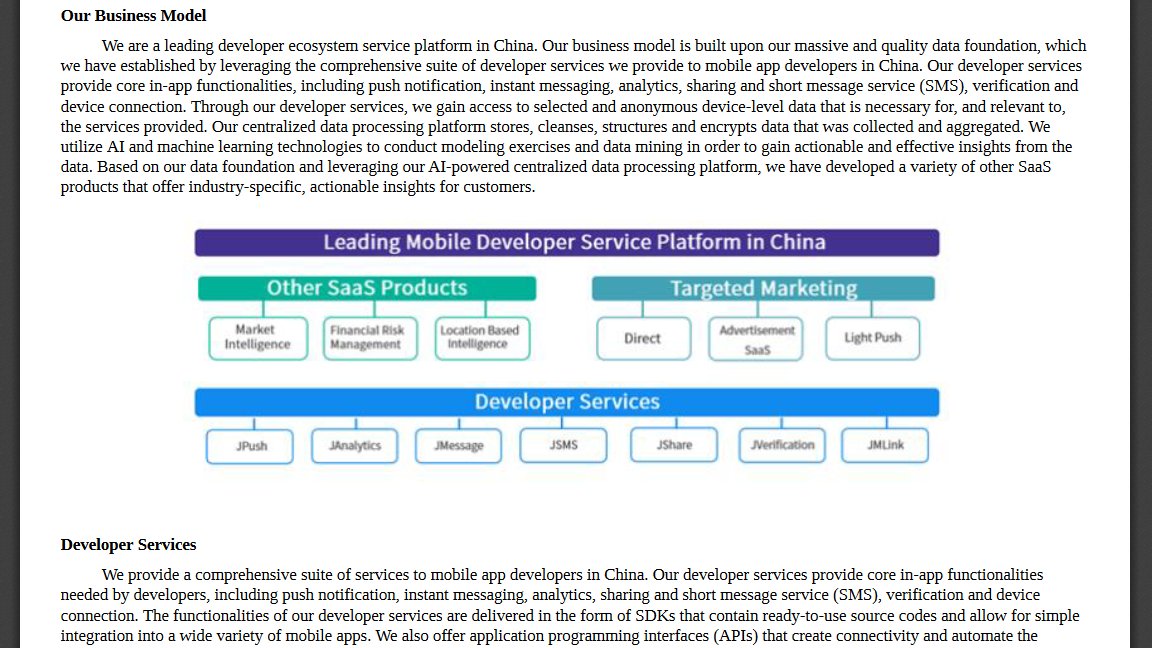

According to a press release, they provide data solutions for 'targeted marketing, financial risk management, market intelligence and location-based intelligence':

globenewswire.com/news-release/2…

Is this a purely Chinese enterprise? Not at all. Aurora Mobile is listed on Nasdaq.

According to an SEC filing, "Jiguang" is the brand, and Aurora consists of a network of companies based in China, the British Virgin Islands, the Cayman Islands, and Hong Kong: ir.jiguang.cn/static-files/c…

(like many of their 'western' counterparts)

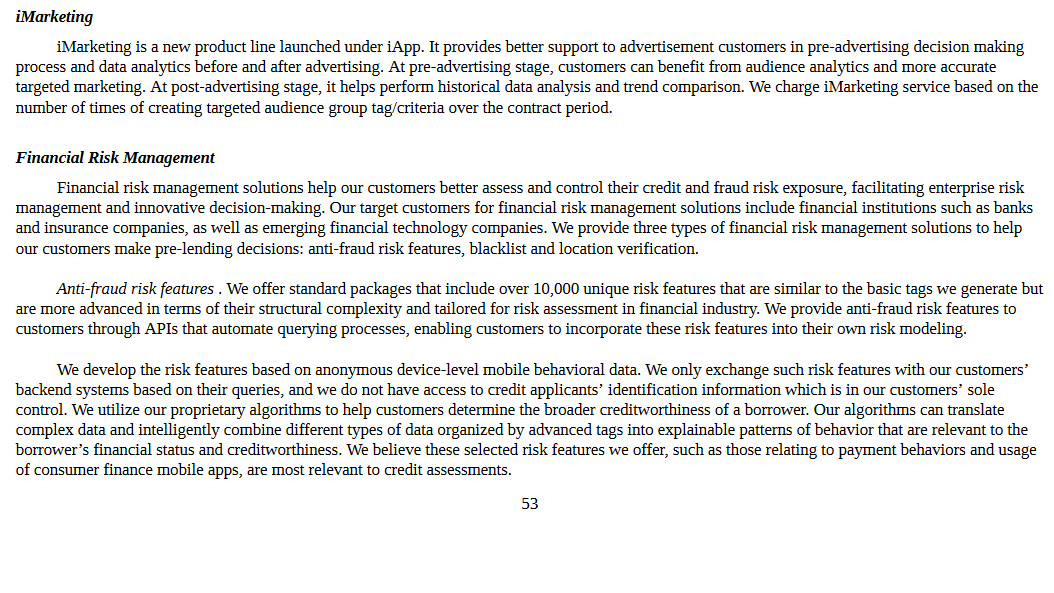

There's a lot of interesting info in the SEC filing. Aurora emphasizes its aim to 'assist financial institutions and financial technology companies in making informed lending and credit decisions' based on data from its 'developer services'.

"We develop the risk features based on anonymous* device-level mobile behavioral data… We believe [the] risk features we offer, such as those relating to payment behaviors and usage of consumer finance mobile apps, are most relevant to credit assessments"

*most likely not true

Listed companies must disclose all kinds of information including business risks.

Here they disclose to the SEC that they may have to 'obtain approval or license for personal credit reporting business' in China to 'continue offering its financial risk management solutions'.

"Due to the lack of further interpretations of the current regulations governing personal credit reporting businesses, the exact definition and scope of 'information related to credit standing' and 'personal credit reporting business' ... are unclear"

…same issues everywhere 🤖

One more SEC filing tidbit: Aurora states it has "accumulated data from over 33.6 billion installations of [its] software development kits (SDKs)". So they're counting every app install that contained their SDKs. And they claim to harvest data from 90% of Chinese mobile devices.

Enough for today.

TL;DR Chinese data companies provide cheap services to app vendors, harvest personal data on hundreds of millions of people without their knowledge, and exploit it for all kinds of business purposes, in many ways very similar to companies in the US and other regions.