Disappointed with this article. It just repeats what’s already known but with some added ambiguous wording -> Cognitive-Load Theory: Methods to Manage Working Memory Load in the Learning of Complex Tasks journals.sagepub.com/doi/full/10.11…

First the abstract. ‘Productive’ and ‘unproductive’ cognitive load seem to be the new favoured terms. Reminds me of ‘deliberate’ in ‘deliberate practice’. It’s tautological. Who would want ‘unproductive load’? When is it ‘unproductive’ anyway?

It reminds me of the ‘good load’ that used to be ‘germane load’. But of course ‘germane load’ was supposedly removed as an independent load type. Yet ‘germane’ still plays a role. The article mentions ‘germane processing’ and a distributive role between intrinsic and extraneous load.

This is why I previously found claims that ‘germane load has been removed’ unjustified. It has been repackaged and tucked away, but it is actually still there, with its strengths and weaknesses and all.

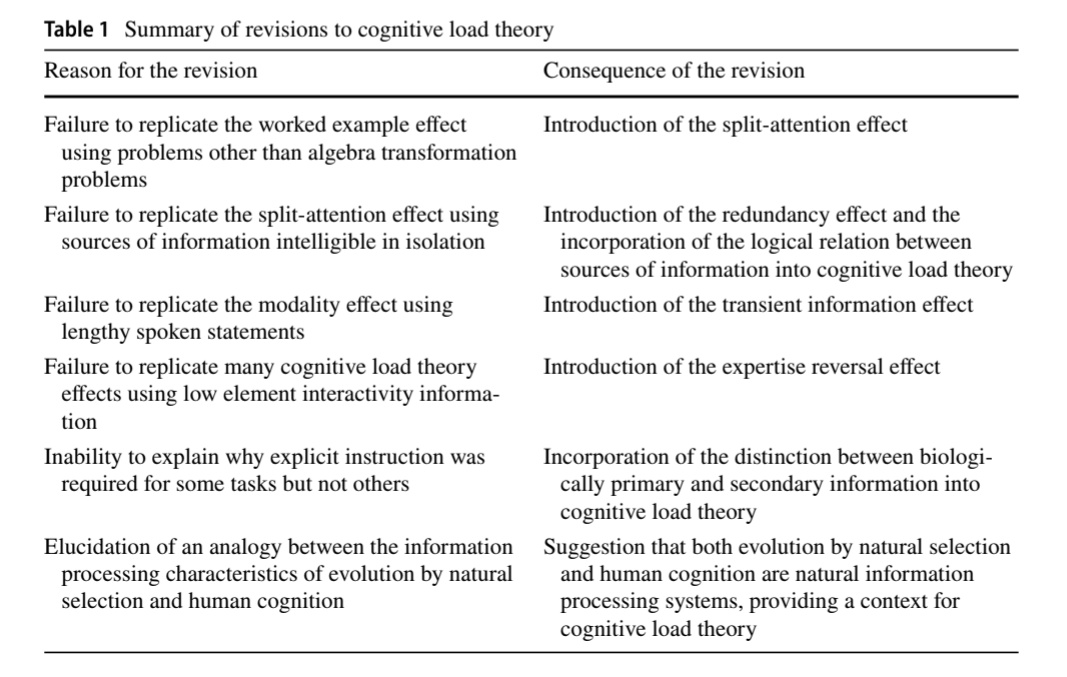

Like so many CLT articles, the first pages are devoted to summarising. For example, the newly ‘found’ evolutionary principles. I seldom read a justification for adopting them, to be honest, just that they have been adopted. It remains risky, in my opinion, and also not necessary for the theory.

After all, the authors reiterate that CLT’s goal is “to optimize learning of complex cognitive tasks by transforming contemporary scientific knowledge on the manner in which cognitive structures and processes are organized (i.e., cognitive architecture) into guidelines for instructional design”.

And in my opinion the evolutionary principles are not used to do that but to speculate ‘post hoc’ about the why of some of the findings. I still find it strange that they made it to a core underpinning layer. There is no progression there either (studies on biologically primary/secondary knowledge?).

Anyway, the paper then goes on to describe ‘exemplary methods to manage cognitive load’. Three categories: through the learning tasks, the learner, and the learning environment. Maybe that categorisation could have been something, but each example imo is woefully short.

Several sections simply refer to other articles and are therefore imo rather uninformative. Mind you, even with more information, most would just double up with other articles, in particular the ‘20 years on’ article link.springer.com/article/10.100…

For the learning tasks we only get short sections about the split-attention effect, worked examples effect, and guidance-fading effect. The latter is called a ‘compound effect’ and this is mentioned but hardly explained. While it is rather important, as it impacts other effects.

The ones for the learner are notable: collaboration, gesturing and motivational cues. I’m not sure you often see those mentioned by CLT adopters (outside of research, where these authors have mentioned them numerous times).

Good to mention motivation, especially if you have ‘imported’ Geary’s evolutionary work (not my choice). When you read Geary it’s quite clear it is a key element. In my opinion, best not to dismiss it as merely resulting from achievement.

The final category pertains to the ‘learning environment’ and gives short descriptions of attention-capturing stimuli reduction, eye closure and stress-suppressing activities. But we don’t get a lot of detail of the methods that can do that. Often just one study.

The conclusion then also gives quite an important disclaimer: “It is important to note that these characteristics interact and should always be considered by instructional designers as one system in which manipulating one aspect has consequences for the whole system”.
