One of the biggest challenges with influence ops is measuring their impact.

Here's a way to do it.

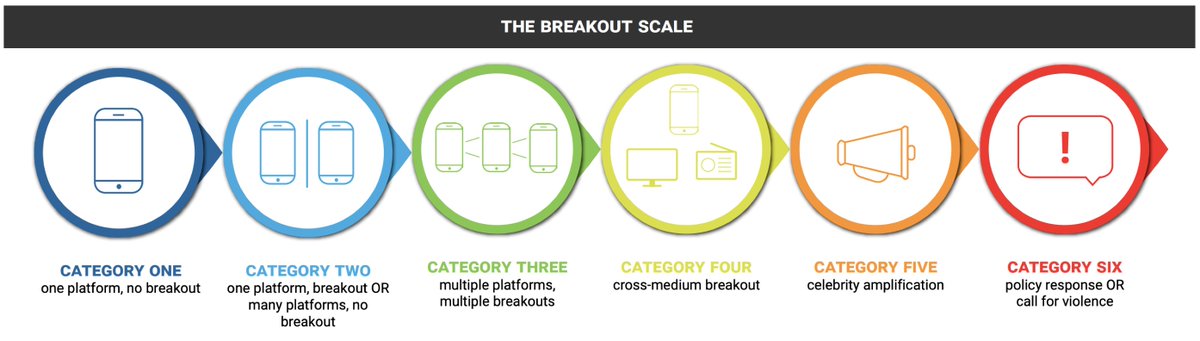

Six categories, based on how far an influence operation (IO) spreads through communities and across platforms.

Designed to assess and compare ops in real time.

H/t @BrookingsFP.

brookings.edu/research/the-b…

It assesses info ops according to two questions:

1. Did their content get picked up outside the community where it was originally posted?

2. Did it spread to other platforms or get picked up by mainstream media or high-profile amplifiers?

Category One ops stay on the platform where they were posted, and don't get picked up beyond the original community.

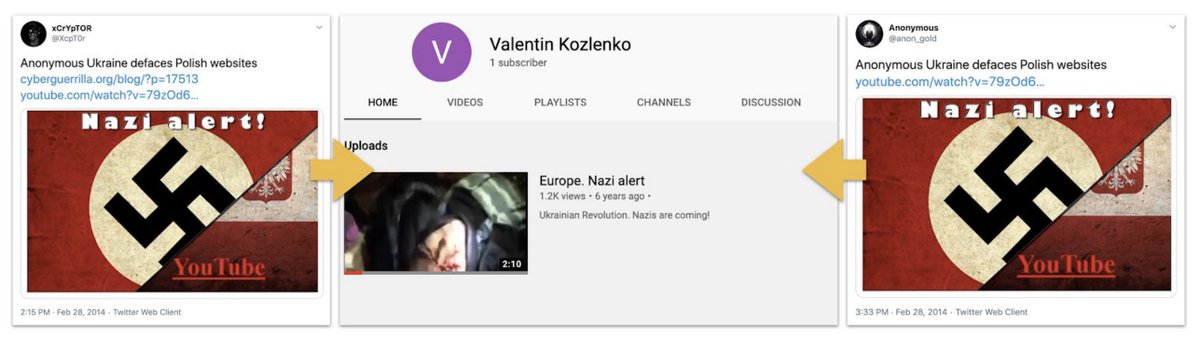

Most political spam and clickbait belong here. So does bot-driven astroturfing, like the Polish batch we found with @DFRLab.

medium.com/dfrlab/polish-…

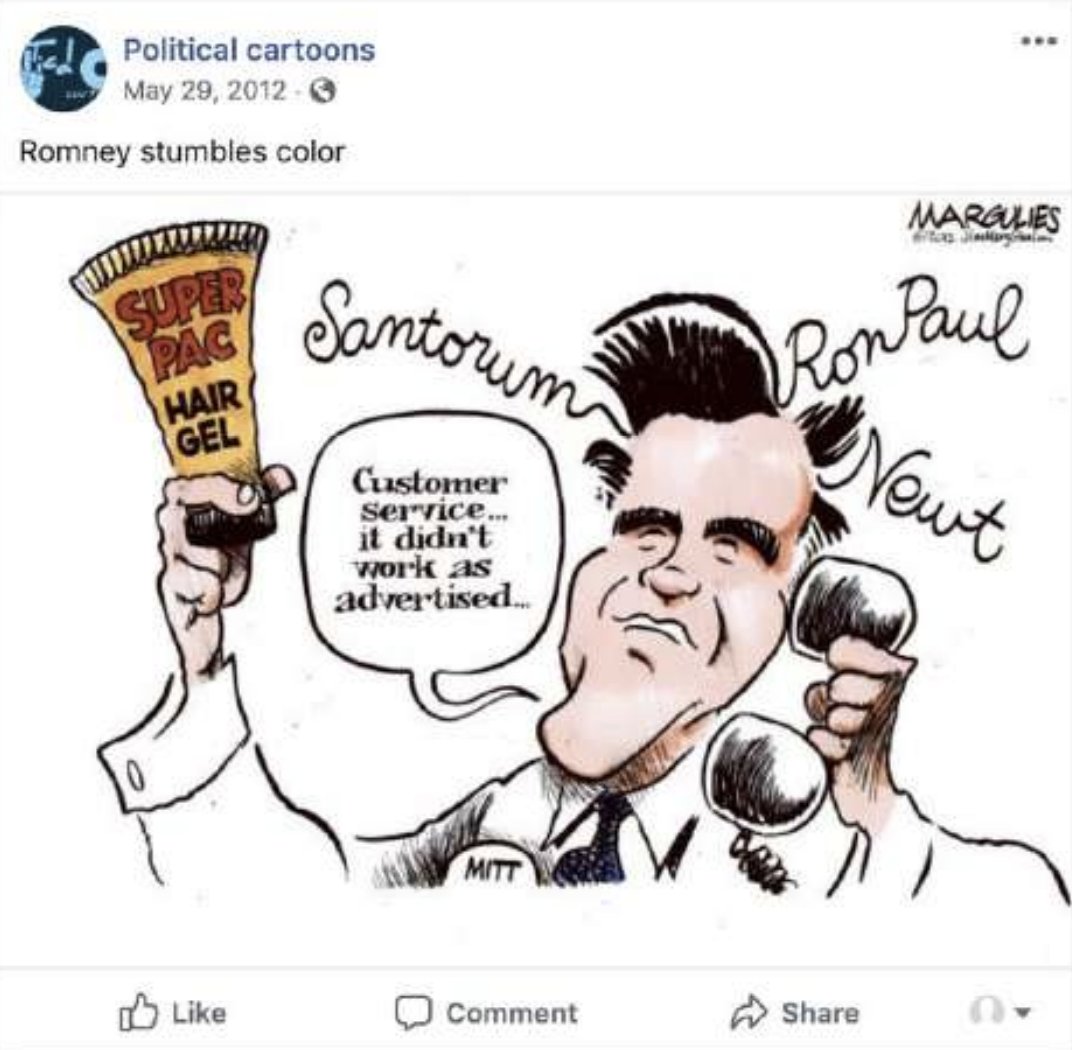

Iran's efforts to target the Republican primaries on Facebook in 2012 were Category One, too.

@Graphika_NYC reported that here. graphika.com/reports/irans-…

Category Two ops are either posted on multiple platforms but don't spread beyond the insertion point, or stay on one platform but get picked up by multiple communities.

The 2019 effort by the Russian Internet Research Agency (IRA) was a Category Two. It was only on Instagram, but it landed content in multiple communities at both ends of the political spectrum.

The pro-China operation Spamouflage Dragon was a Category Two as well.

It posted on YouTube, Facebook and Twitter, but we've not yet found it getting picked up by authentic users on any of them.

graphika.com/reports/spamou…

Category Three ops get picked up by multiple communities on multiple platforms, but don't make the jump to mainstream media.

It's a transient category, because ops that make it this far are likely to get picked up by media.

Journalists: be careful what you amplify.

There was a time when QAnon and Pizzagate were both Category Threes.

If researchers identify a Category Three, it's important to deal with it fast, before it gets worse.

Category Four ops break out of social media entirely, and get picked up by the mainstream media.

Many ops try to achieve this by reaching out directly to journalists by email or social media.

The Russian IRA hit Category Four many times. Its fake personas "Jenna Abrams" and "Crystal Johnson" were really good at getting mainstream pickup.

This piece in the @latimes quoted two alt-right tweets. Both were from IRA trolls.

latimes.com/nation/la-na-b…

Iranian operation Endless Mayfly hit Category Four when Reuters picked up a fake story it created.

H/t @citizenlab for their work in breaking this.

citizenlab.ca/2019/05/burned…

Category Five is when celebrities, politicians, candidates or other high-profile influencers share and amplify an influence operation.

This gives the op both much greater reach and much greater credibility.

Take care when you share.

When Sputnik ran an already-debunked theory about Google rigging its autocomplete suggestions to favour Hillary Clinton back in September 2016, Donald Trump ended up amplifying it.

Category Five.

nytimes.com/2016/09/29/us/…

When Jeremy Corbyn publicised leaks that had originally been posted online by Russian operation Secondary Infektion, that was a Category Five.

H/t @jc_stubbs for the great work on this.

(Most Secondary Infektion stories were Category Two.)

uk.reuters.com/article/uk-bri…

And then there's Category Six. That's when an influence operation either causes a real-world change of some kind, or else carries the risk of real-world harm.

There haven't been many. Let's keep it that way.

The Russian DCLeaks operation was a Category Six.

At one end, leaks. At the other end, Debbie Wasserman Schultz resigns.

nytimes.com/2016/07/25/us/…

The IRA's "Stop Islamization of Texas" effort in 2016 was a Category Six too.

Get two opposing groups. Organise them into simultaneous protests. Tell them to bring their guns.

Nobody got hurt, but the potential was there.

H/t @mrglenn.

chron.com/news/houston-t…

One operation can hit different categories at different times.

For example, Secondary Infektion was usually Category Two, but jumped to Category Five when Corbyn publicised its leaks.

By the same token, the 2016 IRA reached Category Six, but the 2019 IRA only reached Category Two.

I developed this scale so that operational researchers can assess, compare and prioritise info ops.

For example, how do Spamouflage Dragon (China), Endless Mayfly (Iran) and DCLeaks (Russia) compare?

Spamouflage: 2

Endless Mayfly: 4

DCLeaks: 6
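To make that comparison concrete, here's a minimal Python sketch of the scale as a decision rule. It's a simplified reading of the six categories above, not an official tool: it assumes each op has already been boiled down to a handful of observations, and the `OpObservations` fields and `breakout_category` function are hypothetical names. Each op gets the highest rung it reached.

```python
from dataclasses import dataclass


@dataclass
class OpObservations:
    # Hypothetical summary of what researchers observed about one op.
    platforms: int = 1                    # platforms the op posted on
    communities: int = 1                  # communities its content reached, incl. the original
    mainstream_media: bool = False        # picked up by mainstream media
    high_profile_amplifier: bool = False  # shared by a celebrity, politician, candidate, etc.
    real_world_effect: bool = False       # real-world change, or risk of real-world harm


def breakout_category(op: OpObservations) -> int:
    """Return the highest category (1-6) the op reached, checking top rungs first."""
    if op.real_world_effect:
        return 6
    if op.high_profile_amplifier:
        return 5
    if op.mainstream_media:
        return 4
    multi_platform = op.platforms > 1
    multi_community = op.communities > 1
    if multi_platform and multi_community:
        return 3
    if multi_platform or multi_community:
        return 2
    return 1


# Inputs use only what the thread states; fields not set are placeholder
# defaults that don't change the result once a higher rung is reached.
ops = {
    # YouTube, Facebook, Twitter; no pickup by authentic users found yet.
    "Spamouflage Dragon": OpObservations(platforms=3),
    # Reuters picked up a fake story it created.
    "Endless Mayfly": OpObservations(mainstream_media=True),
    # Leaks, then a resignation.
    "DCLeaks": OpObservations(real_world_effect=True),
}

# Prioritise: highest category first.
for name, obs in sorted(ops.items(), key=lambda kv: breakout_category(kv[1]), reverse=True):
    print(f"{name}: {breakout_category(obs)}")
# DCLeaks: 6
# Endless Mayfly: 4
# Spamouflage Dragon: 2
```

Because one op can hit different categories at different times, the function only scores a snapshot; re-run it as new pickup is observed.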

But it's also a reminder: info ops are not just on social-media platforms, and they *do* target journalists and influencers directly.

As I've said so often: stay calm, but stay watchful.