One of the hardest challenges in low-resource machine translation and natural language generation today is generating *into* low-resource languages, since it's hard to learn to generate fluent sentences from only a few training examples. Check out our new #EMNLP2020 Findings paper that tackles this. 1/3

https://twitter.com/luyu_gao/status/1313582132300845057

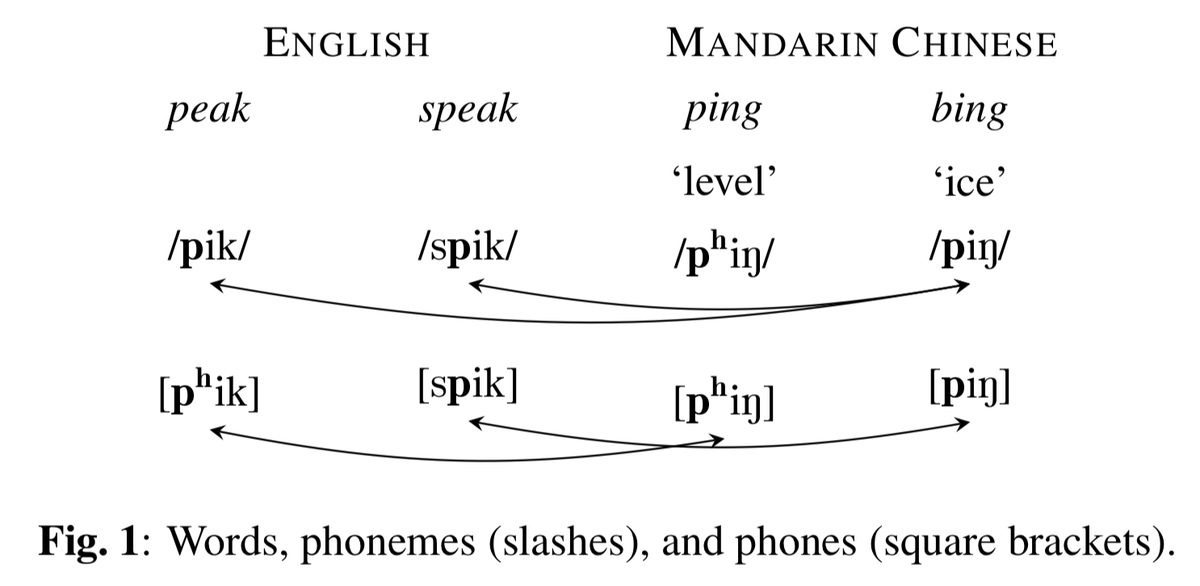

We argue that it is crucial to learn good target-side word representations that are shared across languages even when their spellings differ. To do so, we apply soft decoupled encoding (SDE, arxiv.org/pdf/1902.03499), a multilingual word embedding method designed to maximize cross-lingual sharing. 2/3
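For readers who want a feel for how an SDE-style embedding works, here is a minimal sketch in plain Python. It is not the paper's implementation. It assumes the general recipe from the SDE paper: a word's character n-grams are summed into a lexical vector, passed through a language-specific transform, and then combined with an attention-weighted mix of latent embeddings shared by all languages. The class name `SoftDecoupledEmbedding`, the hashing of n-grams into buckets, the dimensions, the random untrained weights, and the language codes are all assumptions made for illustration.

```python
import math
import random
import zlib


def char_ngrams(word, n_min=1, n_max=3):
    """Character n-grams of a word wrapped in boundary markers."""
    marked = f"<{word}>"
    return [marked[i:i + n]
            for n in range(n_min, n_max + 1)
            for i in range(len(marked) - n + 1)]


class SoftDecoupledEmbedding:
    """Toy SDE-style embedder (hypothetical; weights are random, not trained)."""

    def __init__(self, dim=8, n_latent=16, n_buckets=1000, seed=0):
        self.rng = random.Random(seed)
        self.dim = dim
        self.n_buckets = n_buckets
        # N-gram table shared by all languages. Hashing into buckets is a
        # simplification made here, not something taken from the paper.
        self.ngram_emb = self._matrix(n_buckets, dim)
        # Latent "semantic" embeddings, also shared by all languages.
        self.latent = self._matrix(n_latent, dim)
        # One projection matrix per language, created on first use.
        self.lang_proj = {}

    def _matrix(self, rows, cols):
        return [[self.rng.gauss(0.0, 0.5) for _ in range(cols)]
                for _ in range(rows)]

    def _lexical(self, word):
        # Sum the embeddings of all character n-grams, then squash with tanh.
        total = [0.0] * self.dim
        for gram in char_ngrams(word):
            bucket = zlib.crc32(gram.encode("utf-8")) % self.n_buckets
            total = [t + r for t, r in zip(total, self.ngram_emb[bucket])]
        return [math.tanh(t) for t in total]

    def embed(self, word, lang):
        if lang not in self.lang_proj:
            self.lang_proj[lang] = self._matrix(self.dim, self.dim)
        lex = self._lexical(word)
        # Language-specific transform of the lexical vector.
        spec = [math.tanh(sum(w * x for w, x in zip(row, lex)))
                for row in self.lang_proj[lang]]
        # Softmax attention over the shared latent embeddings.
        scores = [sum(s * x for s, x in zip(row, spec)) for row in self.latent]
        top = max(scores)
        exps = [math.exp(s - top) for s in scores]
        norm = sum(exps)
        attn = [e / norm for e in exps]
        mixed = [sum(a * row[j] for a, row in zip(attn, self.latent))
                 for j in range(self.dim)]
        # Residual connection: shared latent mix plus language-specific vector.
        return [m + s for m, s in zip(mixed, spec)]
```

Calling `SoftDecoupledEmbedding().embed("water", "tur")` returns a vector of length `dim`. In this sketch, similarly spelled words reuse the same n-gram rows and every language attends over the same latent table, which is where the cross-lingual sharing would come from once the weights are trained.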

Results are encouraging, with consistent improvements across 4 languages! Also, the method plays well with subword segmentation like BPE, and has no test-time overhead, keeping inference speed fast. Check it out! 3/3
