I have literally no idea what Facebook's new policy is on QAnon or what it will apply to in future, and so I would like you to please read this post but replace "Twitter" with "Facebook"

lawfareblog.com/twitter-brings…

It seems pretty sui generis, which makes sense because no one is really sure what QAnon is. Not even many of its adherents.

wired.com/story/qanon-su…

Hard not to think that the House condemnation played a role here, given timing. I hope so: that seems a more accountable and democratic way for this to work. I wish that had been made explicit.

washingtonpost.com/powerpost/elec…

Facebook itself literally doesn't know what the limits of its policy are!

theguardian.com/technology/202…

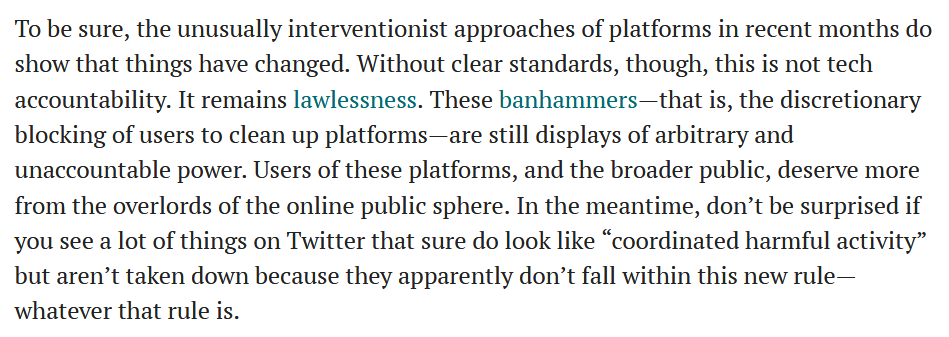

Applaud the outcome if you like, but this is not tech accountability. This may have temporarily solved fb's problem, but it has not solved ours, either with fb or (I bet) QAnon. It's kicking the can down the road.

Oh, sub in "Facebook" for Twitter again plz ☝️

Btw, Twitter did end up releasing what is the most comprehensive policy so far on this front. I like it, but bc I'm a pain, I'm waiting to see if they enforce it consistently & transparently (or at all)

help.twitter.com/en/rules-and-p…

*How* platforms moderate matters.

And this isn't a CoMo point, but on the human side: this is a blunt way of dealing with a mental health crisis wrapped in conspiracy theories. We will need bigger imaginations than just bans.
