I had a breakthrough that turned a Deep Learning problem on its head!

Here is the story.

Here is the lesson I learned.

🧵👇

No, I did not cure cancer.

This story is about a classification problem, specifically in computer vision.

I get images, and I need to determine the objects represented by them.

I have a ton of training data. I'm doing Deep Learning.

Life is good so far.

👇

I'm using transfer learning.

In this context, transfer learning consists of taking a model that was trained to identify other types of objects and leveraging everything it learned to make my problem easier.

This way I don't have to teach a model from scratch!
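
A minimal sketch of that idea in Keras, under assumptions: `build_classifier`, `num_classes`, and the input size are illustrative names and values, and ResNet50 stands in for whichever base model is used. It is not the actual project code.

```python
from tensorflow import keras
from tensorflow.keras import layers


def build_classifier(num_classes, input_shape=(224, 224, 3), weights="imagenet"):
    """Put a small trainable head on top of a frozen, pre-trained base."""
    # Pre-trained convolutional base, without its original ImageNet classifier.
    base = keras.applications.ResNet50(
        include_top=False, weights=weights, input_shape=input_shape, pooling="avg"
    )
    base.trainable = False  # keep everything the base already learned

    # Images are assumed to be preprocessed beforehand with
    # keras.applications.resnet50.preprocess_input.
    inputs = keras.Input(shape=input_shape)
    features = base(inputs, training=False)

    # Only this new layer is trained on the new problem.
    outputs = layers.Dense(num_classes, activation="softmax")(features)
    return keras.Model(inputs, outputs)
```

Freezing the base means only the new head's weights are updated, which is what saves training from scratch.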

👇

I experimented with three different base models (all of them pre-trained on ImageNet, which is a huge image dataset):

▫️ResNet50

▫️InceptionV3

▫️NASNetLarge

I settled on NASNetLarge.

(Now I'm doing everything I can to move to either of the other two, but that's another story.)

👇

I also tried different architectures to extend that base model.

A couple of layers and a few dozen experiments later, the model started providing decent results.

Decent results aren't perfect results. But they were good enough.

👇

If you have tried this before, nothing in this story so far is surprising or different.

I should have stopped at this point. (But then I wouldn't have this tweet to write!)

I didn't, because sometimes you don't want to be known as the "good enough" guy.

👇

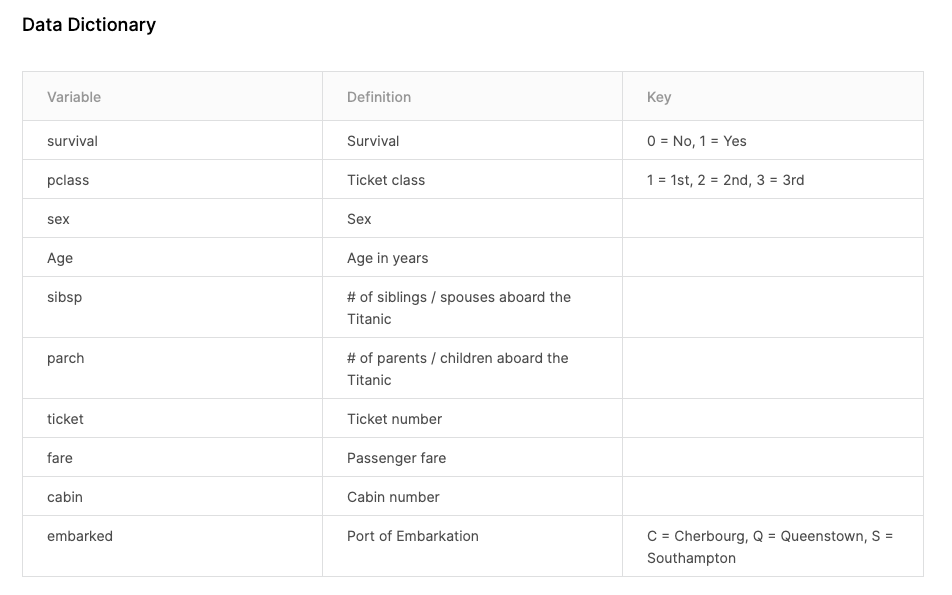

After a lot of tuning, I wasn't getting any substantial performance improvements, so I decided to step back and look at the problem as a whole.

Users have a web interface to upload the images. These come as a set representing different angles of the object.

👇

It turns out that the web interface requests images in a specific order:

1. Front of the object

2. Back of the object

3. Other

Users aren't forced to upload images that way, but 99% of them do.

A front picture of the object is usually very useful. A side picture is not.

👇

Could I feed the order in which these pictures were submitted to the model as another feature?

Would my Deep Learning model be able to pick up and use this information for better predictions?
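
As data, "the order as a feature" could be as simple as the sketch below. The encoding (0 = front, 1 = back, 2 = everything after) and the file names are assumptions made for illustration.

```python
def upload_position(index_in_set):
    """Map a photo's 0-based position in the uploaded set to an order label.

    Assumed encoding: 0 = front, 1 = back, 2 = other (every later photo).
    """
    if index_in_set < 0:
        raise ValueError("index_in_set must be non-negative")
    return min(index_in_set, 2)


# One uploaded set of four photos, in the order the user submitted them
# (hypothetical file names).
photos = ["a.jpg", "b.jpg", "c.jpg", "d.jpg"]
examples = [(name, upload_position(i)) for i, name in enumerate(photos)]
```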

👇

I changed the model to add a second input. Now, besides the photo, I have an integer value representing the order.
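
One way a two-input model like this could look in Keras. This is a sketch under assumptions, not the production model: `build_two_input_model`, the layer sizes, ResNet50 as the base, and the small embedding used to consume the integer are all illustrative choices.

```python
from tensorflow import keras
from tensorflow.keras import layers


def build_two_input_model(num_classes, num_positions=3,
                          input_shape=(224, 224, 3), weights="imagenet"):
    """Image classifier that also sees the photo's upload position."""
    # Input 1: the photo, passed through a frozen pre-trained base.
    image = keras.Input(shape=input_shape, name="image")
    base = keras.applications.ResNet50(
        include_top=False, weights=weights, input_shape=input_shape, pooling="avg"
    )
    base.trainable = False
    image_features = base(image, training=False)

    # Input 2: the upload position as an integer
    # (assumed encoding: 0 = front, 1 = back, 2 = other).
    position = keras.Input(shape=(1,), dtype="int32", name="position")
    # The embedding treats the position as a category, not a magnitude.
    position_features = layers.Flatten()(
        layers.Embedding(input_dim=num_positions, output_dim=4)(position)
    )

    # Merge both feature vectors and classify.
    merged = layers.Concatenate()([image_features, position_features])
    hidden = layers.Dense(128, activation="relu")(merged)
    outputs = layers.Dense(num_classes, activation="softmax")(hidden)
    return keras.Model(inputs=[image, position], outputs=outputs)
```

Each training example is then a pair: the image tensor and the integer for where it sat in the upload.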

I retrained the whole thing.

And... yes, it worked!

The improvement was phenomenal: 10%+ higher accuracy!

👇

This is the end of a story that taught me an important lesson:

Open your mind. Look around. Think different.

More specifically:

The magic is not in the architecture, the algorithm, or the parameters. The real magic is in you and your creativity.

Stay hungry!
