@AmazingThew Something life changing I learned early at NVIDIA is that "putting something in hardware" (creating an application-specific integrated circuit, ASIC) usually does not make a program faster. (thread):

@AmazingThew At a very high level, processor design is limited by three main factors:

1. Physical area; there's a limit on how large the die/mask can be

2. Power/heat dissipation ("Thermal Design Power" TDP). If you can't get power in or heat out, the chip won't work

3. Clock speed/voltage

@AmazingThew Microprocessor architects are, generally speaking, some of the smartest engineers I've met, at every company. They've already optimized these constraints for the chip. No slack.

So, when someone proposes a new hardware feature, it has to REPLACE existing functionality.

@AmazingThew That usually means taking away general purpose computation (or cache, on a CPU) to get back area for special purpose computation.

If you know that your chip executes 95% FFTs, it *might* be worthwhile to replace the general math and logic circuits with FFT circuits.

@AmazingThew I say "might" because a GPU is already amortizing the dispatch and other shared logic area over 32 ALUs and sharing memory interfaces. So, you might only save about 1/33, i.e., get a 3% improvement. If you weren't using, say, division, then yes, you can redeploy the DIV area, etc.
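
A rough sketch of where a figure like 1/33 could come from. It assumes, purely for illustration, that the dispatch and other shared logic of one group costs about as much area as a single ALU; the function name and the "ALU units" area model are hypothetical, not anything NVIDIA has published.

```python
def shared_logic_fraction(alus_per_group=32, shared_area_in_alus=1.0):
    """Fraction of a group's area spent on dispatch/shared logic.

    Assumption for illustration only: area is measured in "ALU units" and
    the shared logic of one group costs `shared_area_in_alus` of them.
    """
    total_area = alus_per_group + shared_area_in_alus
    return shared_area_in_alus / total_area


if __name__ == "__main__":
    frac = shared_logic_fraction()
    print(f"shared logic is {frac:.1%} of the group's area")
```

Under that assumption, removing the shared logic entirely frees only about 3% of the area, which is the ceiling on what a fixed-function replacement could reclaim from it.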

@AmazingThew And if your FFT is memory bound, then special purpose compute doesn't help at all.
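
The memory-bound case can be sketched with a simple roofline-style estimate. The machine numbers below are made up to show the shape of the argument, and `attainable_flops` is a hypothetical helper, not a real API.

```python
def attainable_flops(peak_flops, mem_bandwidth, flops_per_byte):
    """Roofline estimate: performance is capped by compute or by memory traffic."""
    return min(peak_flops, mem_bandwidth * flops_per_byte)


if __name__ == "__main__":
    # Hypothetical machine and kernel, chosen only to make the point.
    bandwidth = 1e12   # bytes/s
    intensity = 2.0    # FLOPs performed per byte moved
    general = attainable_flops(10e12, bandwidth, intensity)
    special = attainable_flops(100e12, bandwidth, intensity)  # 10x more compute
    print(general, special)  # both are limited to bandwidth * intensity
```

In this sketch the 10x larger compute peak changes nothing, because the kernel never reaches either peak.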

Now, if your program spends 30% of its cycles on FFT and 70% on something else, then taking away general purpose computation to get special purpose computation is not a good strategy.
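
The 95% and 30% cases can be compared with an Amdahl's-law style calculation. This is only a sketch: the 10x FFT speedup and the 1.5x slowdown of everything else are hypothetical numbers, and `overall_speedup` is an illustrative helper.

```python
def overall_speedup(fft_fraction, fft_speedup, other_slowdown):
    """Whole-program speedup when FFT gets faster and everything else gets slower.

    fft_fraction:   share of original run time spent in FFT (0..1)
    fft_speedup:    how many times faster the FFT part becomes
    other_slowdown: how many times slower the rest becomes (>= 1)
    """
    new_time = fft_fraction / fft_speedup + (1.0 - fft_fraction) * other_slowdown
    return 1.0 / new_time


if __name__ == "__main__":
    # Hypothetical trade: FFT circuits give 10x on FFT, but losing general
    # compute area makes all other work 1.5x slower.
    for fraction in (0.95, 0.30):
        print(fraction, round(overall_speedup(fraction, 10.0, 1.5), 2))
```

With these made-up numbers the 95% program comes out several times faster, while the 30% program ends up slower than it was before the change.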

@AmazingThew It turns out that for most of the operations you'd (I'd!) want to magically make faster on a GPU with new circuits, you either aren't willing to pay the price of what you'd make slower as a tradeoff, or wouldn't get a big enough speedup to matter.

@AmazingThew If you want GPU acceleration of FFT, I recommend:

docs.nvidia.com/cuda/cufft/ind…

If you want FFT to be 10x faster than *that*; well, I'd really like that too, but I don't know a chip design where a real program would be faster if the FFT was an ASIC that replaced general compute area.

@AmazingThew For fast CPU FFT, I've previously used fftw.org

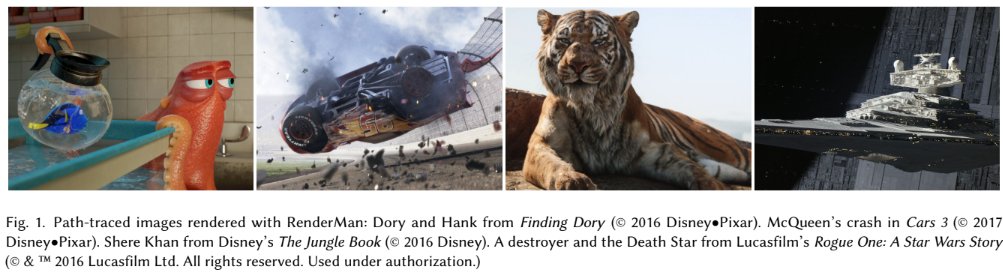

@AmazingThew Taking the RTX GPUs as an example, you can look at the history of published designs from NVIDIA and other institutions at High Performance Graphics and other venues.

Nobody directly translated their favorite ray tracing software into a circuit and shipped it.

@AmazingThew Hardware ray tracing (which has come and gone many times in recent history, including designs from Utah, Caustic, and Imagination) was a challenge of finding the design that would minimize area and bandwidth...and provide a powerful programming model.

@AmazingThew So were the rasterizer, the texture unit, tensor cores, the previous dedicated pixel and vertex shaders, and a lot of processing units that aren't visible in the high-level programming model.

@AmazingThew I'm so impressed with what hardware designers are able to accomplish in each generation. They squeeze existing functionality into ever more efficient implementations, and then find a narrow slice of a new feature that accelerates the critical step, to slip into the empty space!

@AmazingThew In general, to help make something faster on a GPU, there are two things that you can do:

1. Reduce the memory demand until it is compute bound. For FFT: can you get away with 8-bit? 16F?

@AmazingThew 2. Publish clever algorithms or designs for the compute part. The last, engineering step is usually proprietary, but industry relies on researchers across the field to get them into the right ballpark. Modern GPUs are based on decades of SIGGRAPH papers, not created from thin air.

@AmazingThew (Quite often, the value of research papers is ruling out not-quite-practical approaches so architects know where not to focus. Every research paper presents itself as a success: "5x!".

But knowing design A is a 5x max speedup is enough to tell others to look for 10x elsewhere😀)
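
Returning to item 1 above (reducing memory demand), here is a rough sketch of how precision changes the FLOPs-per-byte ratio of an FFT. It assumes the conventional 5 n log2(n) operation count for a length-n complex FFT and an idealized single read plus single write of every sample; real implementations will differ, and the names below are hypothetical.

```python
import math

# Bytes per complex sample (real + imaginary part) at each precision.
BYTES_PER_COMPLEX = {"32F": 8, "16F": 4, "8-bit": 2}


def fft_flops_per_byte(n, precision):
    """Rough arithmetic intensity of a length-n complex FFT.

    Assumptions for illustration: the conventional 5*n*log2(n) FLOP estimate,
    and one read plus one write of every sample to memory.
    """
    flops = 5 * n * math.log2(n)
    bytes_moved = 2 * n * BYTES_PER_COMPLEX[precision]
    return flops / bytes_moved


if __name__ == "__main__":
    for precision in BYTES_PER_COMPLEX:
        print(precision, fft_flops_per_byte(1 << 20, precision))
```

Each halving of the sample size doubles the estimated FLOPs per byte. Whether that is enough to become compute bound depends on the particular GPU, and on whether the application can tolerate the reduced accuracy.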
