Friday morning tweet thread: some more depth and detail on AWS Nitro Enclaves, the trusted execution environment / confidential computing platform which we launched last week. aws.amazon.com/ec2/nitro/nitr… . Let's dive in!

If you're reading this thread, you're almost certainly familiar with Amazon EC2. The basics: EC2 customers can launch Instances, which are virtual servers in the cloud. "Virtual" means we make one physical machine seem like many machines. It's powered by our virtual machine tech.

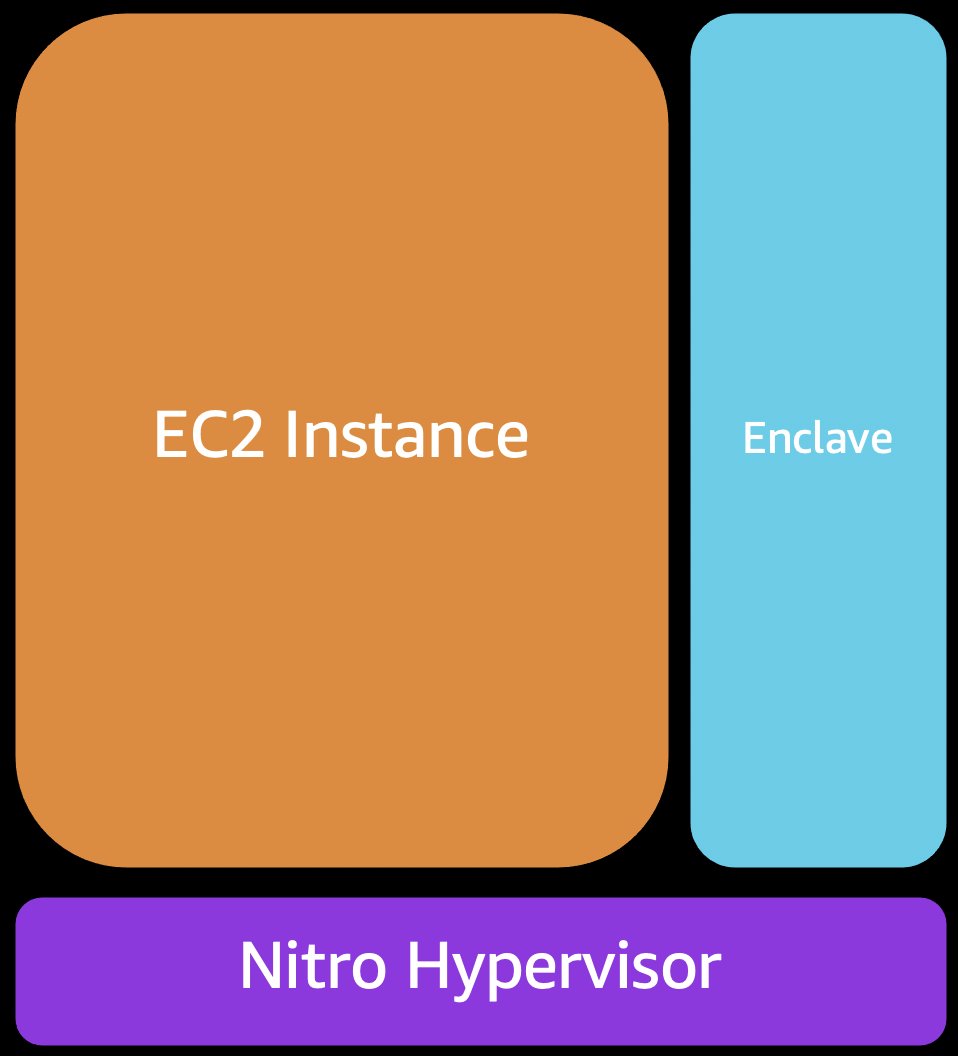

With AWS Nitro Enclaves you can also create and run additional, highly isolated virtual servers that are attached directly, and only, to your EC2 instance. Think of it like having another server, but with no connectivity at all except a cable plugged in to your Instance.

AWS Nitro Enclaves also get cryptographic attestation, and integration with AWS KMS and ACM, so that you can be certain what's running in an enclave, and so that you can get sensitive material into an enclave for processing.

To use another metaphor, it's like a safe ... but for performing computation and running secure applications, and the locks on the safe are modern cryptography. O.k., let's get into how all of this works!

AWS Nitro Enclaves is built on the security and isolation of the AWS Nitro System; it's in the name! So we have to get into how that works. Ironically the best way is first to look at what came before, and what other cloud providers still use ... traditional virtualization.

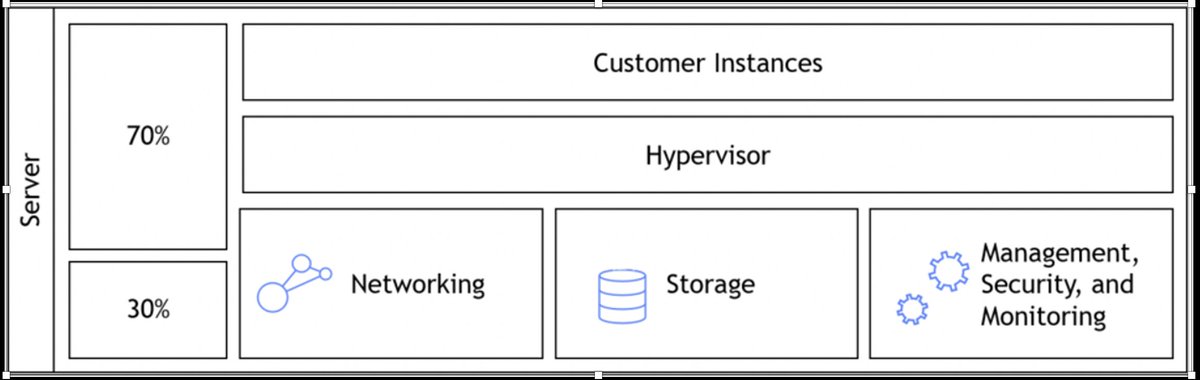

With traditional virtualization, like Xen, which we still love and support, all of the "magic" of emulating many machines happens on one physical server. That means it takes up some capacity, but it also has implications for security.

With traditional virtualization, security is based on hardening and operational security. We're very good at this, but with Nitro we can do better. Also, when you want to audit a machine, there's an almost philosophical challenge of "how can a machine verify itself?".

Solving that "how can a machine verify itself" problem gets you into some deep holes with trusted boot processes ... but then what if that has a bug in it, and so on. We do a lot of work here, but it's head-spinning to think about!

With the AWS Nitro System, we introduced a whole new way to do it. We have a second physical system ... the Nitro Card attached to every server, and we do the vast majority of the virtualization and security on that physically isolated dedicated hardware.

That's good for performance; the magic no longer needs to steal capacity from the server the customers are on. It's also great for security. We have physical isolation! The software running on the Nitro card is on a different CPU, different memory.

It also makes the verification problem easier too. One machine can verify another. We have a custom Nitro Security chip on the card, to bootstrap everything from. The Nitro System is also highly compartmentalized and isolated.

I work on AWS Nitro and there's no way for me to log in to it. It doesn't work like that. It exposes only secure, authenticated, vetted APIs that only perform safe and secure actions. It's also on its own highly isolated network.

What's left running on the server that customers use is a very thin hypervisor. It's coordinating with the CPU to enforce things like "This Instance can only access its own memory" ... this uses the simple robust hardware virtualization extensions built into modern CPUs.

O.k. so back to Nitro Enclaves. With Enclaves you first launch an EC2 instance as normal, though you do have to pick a Nitro capable instance that has more than 2 vCPUs.

Then, running on the Instance, you launch and run an enclave image using our nitro command line interface. You specify the image, how many vCPUs, how much memory you'd like for the enclave.
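A minimal sketch of that launch step, written as a Python helper that only assembles the command line and does not run it. The `run-enclave` subcommand and the `--eif-path`, `--cpu-count`, and `--memory` (MiB) flags are stated from memory of the CLI, so check `nitro-cli run-enclave --help` before relying on them; the file name `app.eif` and the helper name are hypothetical.

```python
import shlex
from typing import List


def run_enclave_command(eif_path: str, cpu_count: int, memory_mib: int) -> List[str]:
    """Build (but do not execute) a nitro-cli invocation for launching an enclave."""
    if cpu_count < 1:
        raise ValueError("an enclave needs at least one vCPU")
    if memory_mib < 1:
        raise ValueError("memory must be a positive number of MiB")
    return [
        "nitro-cli", "run-enclave",
        "--eif-path", eif_path,          # the enclave image to boot
        "--cpu-count", str(cpu_count),   # vCPUs carved out of the parent Instance
        "--memory", str(memory_mib),     # memory carved out of the parent Instance
    ]


# "app.eif" is a hypothetical image name used only for illustration.
cmd = run_enclave_command("app.eif", cpu_count=2, memory_mib=512)
print(shlex.join(cmd))
```

On a real Instance the resulting list could be handed to `subprocess.run`; it is kept as plain data here so the sketch runs anywhere.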

The AWS Nitro System will then create a new enclave virtual machine, using those resources, and boot that image in that virtual machine. That VM will have no networking, no durable storage, no DNS, no NTP, no Instance Metadata service. It is very very isolated.

I want to emphasize something here: this is not nested virtualization. The isolation between the enclave VM and the Instance VM is provided by the Nitro system and is as strong as between two customer EC2 instances.

Your instance simply has no access to the enclave's memory or CPU. It's like it's not there. We provide an open source kernel module that coordinates the "carving" of resources from the Instance kernel, but it plays no role in the security or isolation.

I also want to emphasize here, Nitro Enclaves don't rely on encryption for memory protection. There's just no visibility of the memory in the first place. Though we do encrypt all memory on Graviton2 instances, that's for defense in depth.

As a cryptography engineer this comforts me, because memory encryption is not a great control for isolation. With only encrypted memory as a defense, things like access patterns and changes are still visible, and it's hard not to make the memory malleable.

As a developer, memory encryption as the only defense is just an awful experience; having to think about encrypted address ranges, transactional memory frameworks, and so on. I *like* programming in assembly and I don't want to have to think about that stuff.

Just give me a securely isolated machine! O.k. so when Nitro launches the virtual machine and image it also checksums it as it's booting. We'll come back to that in a bit. First: How do you build these images, what are they?

You can think of enclave images as very small AMIs (Ah-mee). Because it's 2020, we've provided tooling to build these images from docker container definitions. You can put anything you want in an enclave image, and you can use container tooling to manage and build them!

This doesn't mean you need to run docker, or use containers to use Nitro Enclaves. It's a modern and convenient way to build the image. So if you're not in on containers yet, don't think you need to retool.

When you build the enclave image, the tooling will also tell you several "PCRs". Short for Platform Configuration Registers. I *hate* this confusing terminology, but it's consistent with how Trusted Platform Modules (TPMs) work, and they got to the naming party first!

Basically the PCRs are checksums. A checksum of the overall image, a checksum of the application, and so on. You can also sign the enclave image with a code-signing key, and Nitro will refuse to run an image that doesn't match its signature.
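To make "PCRs are checksums" concrete, here is a small sketch of the TPM-style "extend" idea: a register starts at all zeros and each measured chunk is folded in by hashing. This illustrates the general technique only; the exact inputs and layout the Nitro tooling measures are not described in this thread, and SHA-384 plus the chunk contents below are assumptions for the example.

```python
import hashlib
from typing import Iterable

PCR_SIZE = 48  # SHA-384 digest length in bytes (assumed hash for this sketch)


def extend(pcr: bytes, data: bytes) -> bytes:
    """Fold one measurement into a register: new = H(old || H(data))."""
    return hashlib.sha384(pcr + hashlib.sha384(data).digest()).digest()


def measure(chunks: Iterable[bytes]) -> str:
    """Measure a sequence of chunks, starting from an all-zero register."""
    pcr = bytes(PCR_SIZE)
    for chunk in chunks:
        pcr = extend(pcr, chunk)
    return pcr.hex()


# Hypothetical image contents, purely for illustration.
print(measure([b"kernel", b"ramdisk", b"application"]))
```

Because each step hashes the previous value, changing any chunk or reordering the chunks produces a different final value, which is what lets a single register stand in for "exactly this image".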

O.k. so now we know how to build an image, and how to run one. How do you actually use it? How do you talk to an enclave? How does it get any information to process? What is attestation? I have answers!

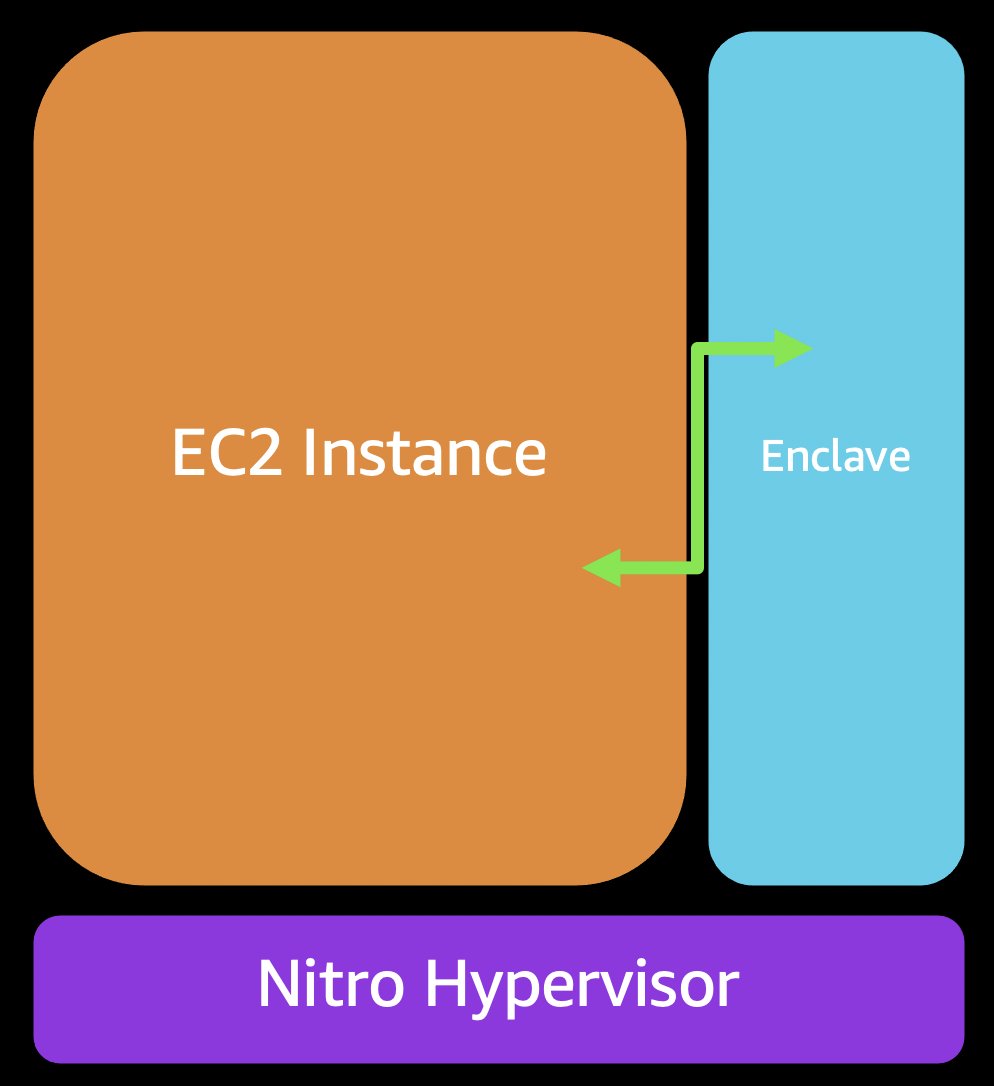

Although there is no networking, there is a "virtual socket" or "vsock" between the instance and the enclave. Think of it like a local-only version of TCP. But unlike TCP/IP, vsock communication can't be leaked, spoofed, or intercepted.

All communication between the Instance and the Enclave goes in and out over this vsock. This gives you a very small and very controlled surface area to the enclave. This is what you want for security!
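As a rough sketch of what talking over the vsock can look like, the helpers below send and receive length-prefixed messages over any stream socket. Python exposes vsock as `socket.AF_VSOCK` on Linux; the 4-byte length prefix, the function names, and the CID and port arguments are choices made for this example, not part of any Nitro protocol.

```python
import socket
import struct


def send_msg(sock: socket.socket, payload: bytes) -> None:
    """Send one message as a 4-byte big-endian length followed by the payload."""
    sock.sendall(struct.pack(">I", len(payload)) + payload)


def _recv_exact(sock: socket.socket, n: int) -> bytes:
    """Read exactly n bytes, or raise if the peer closes early."""
    buf = bytearray()
    while len(buf) < n:
        chunk = sock.recv(n - len(buf))
        if not chunk:
            raise ConnectionError("peer closed the connection mid-message")
        buf.extend(chunk)
    return bytes(buf)


def recv_msg(sock: socket.socket) -> bytes:
    """Receive one length-prefixed message."""
    (length,) = struct.unpack(">I", _recv_exact(sock, 4))
    return _recv_exact(sock, length)


def connect_to_enclave(cid: int, port: int) -> socket.socket:
    """Open a vsock stream to an enclave (Linux only; cid and port are yours to choose)."""
    sock = socket.socket(socket.AF_VSOCK, socket.SOCK_STREAM)
    sock.connect((cid, port))
    return sock
```

The framing helpers work on any stream socket, so they can be exercised locally with `socket.socketpair()` before being pointed at a real enclave via `connect_to_enclave`.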

This pattern also makes development far easier than other TEE or confidential computing models. You program and architect using your choice of RPC, message-passing, REST, microservices, or whatever else we are calling intercommunicating processes this trend cycle.

In just a week since launching, I've seen several customers get up and running very quickly! Nitro Enclaves is not a science project, you can actually build to it and use it productively.

So how do we get data into an enclave to process in the first place? This is where attestation and KMS come in. On the enclave we have two more facilities that the Nitro System provides.

Nitro Enclaves are provided with an entropy service, where random data is provided directly from the Nitro system to the running enclave. This is useful because an enclave is so highly isolated that traditional kernel techniques for collecting randomness don't work.

Of course the Enclave application can still choose to use CPU-based randomness, like the RDRAND and RDSEED instructions. These can even be securely mixed with the Nitro provided randomness. O.k., that's one facility.
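One common way to combine two randomness sources is to hash them together, so the output stays unpredictable as long as at least one input is. The sketch below shows that idea only; `os.urandom` stands in for both the Nitro-provided entropy and the CPU instructions, and a production application would normally lean on a vetted random number generator rather than hand-rolled mixing.

```python
import hashlib
import os


def mix_entropy(*sources: bytes) -> bytes:
    """Hash several entropy inputs into one 32-byte seed.

    Each input is length-prefixed so that different splits of the same
    bytes cannot collide (b"ab" + b"c" versus b"a" + b"bc").
    """
    h = hashlib.sha256()
    for source in sources:
        h.update(len(source).to_bytes(8, "big"))
        h.update(source)
    return h.digest()


# Stand-ins: in an enclave these would come from the two facilities described above.
platform_bytes = os.urandom(32)
cpu_bytes = os.urandom(32)
seed = mix_entropy(platform_bytes, cpu_bytes)
print(len(seed))
```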

The Nitro System also provides the enclave with an attestation service. Here's how it works. First, the enclave application creates a cryptographic public-private key pair. These live and die with that running enclave, and the private key never leaves.

The enclave then provides the public key, and optionally a nonce ("number used once", for uniqueness), to the attestation service. That service then replies with a signed attestation document.

The signed attestation document includes the public key provided, the nonce, the enclave image's PCRs (checksums!), the key that signed the enclave image, the instance it's attached to, the instance's IAM role, the time it was launched, the current time, and more.

We've published the root key needed to validate an attestation document, so any party or service can verify this and determine that an attestation document had to have come from a particular running enclave application.
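A sketch of the checks a verifier might run after it has already validated the document's signature against the published root and decoded it. Signature and certificate-chain validation are deliberately omitted. The dictionary keys `nonce`, `pcrs`, and `timestamp` (milliseconds) reflect an understanding of the document layout and should be treated as assumptions, as should the 60-second freshness window.

```python
import hmac
from typing import Dict


def check_attestation(doc: Dict, expected_nonce: bytes, expected_pcr0: bytes,
                      now_ms: int, max_age_ms: int = 60_000) -> bool:
    """Check nonce, image checksum, and freshness of an already-verified document."""
    # The nonce ties this document to our specific request (prevents replay).
    if not hmac.compare_digest(doc.get("nonce") or b"", expected_nonce):
        return False
    # PCR index 0 is assumed here to be the checksum of the overall image.
    pcr0 = doc.get("pcrs", {}).get(0, b"")
    if not hmac.compare_digest(pcr0, expected_pcr0):
        return False
    # Reject documents that are stale or claim to come from the future.
    age_ms = now_ms - doc.get("timestamp", 0)
    return 0 <= age_ms <= max_age_ms


# Made-up values, for illustration only.
example = {"nonce": b"n-123", "pcrs": {0: b"\x01" * 48}, "timestamp": 1_000_000}
print(check_attestation(example, b"n-123", b"\x01" * 48, now_ms=1_010_000))
```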

KMS now supports policy based on these attestation documents. So you can create a key in KMS and write a policy that says only a valid running enclave application can access or use that key. This is a very powerful primitive!

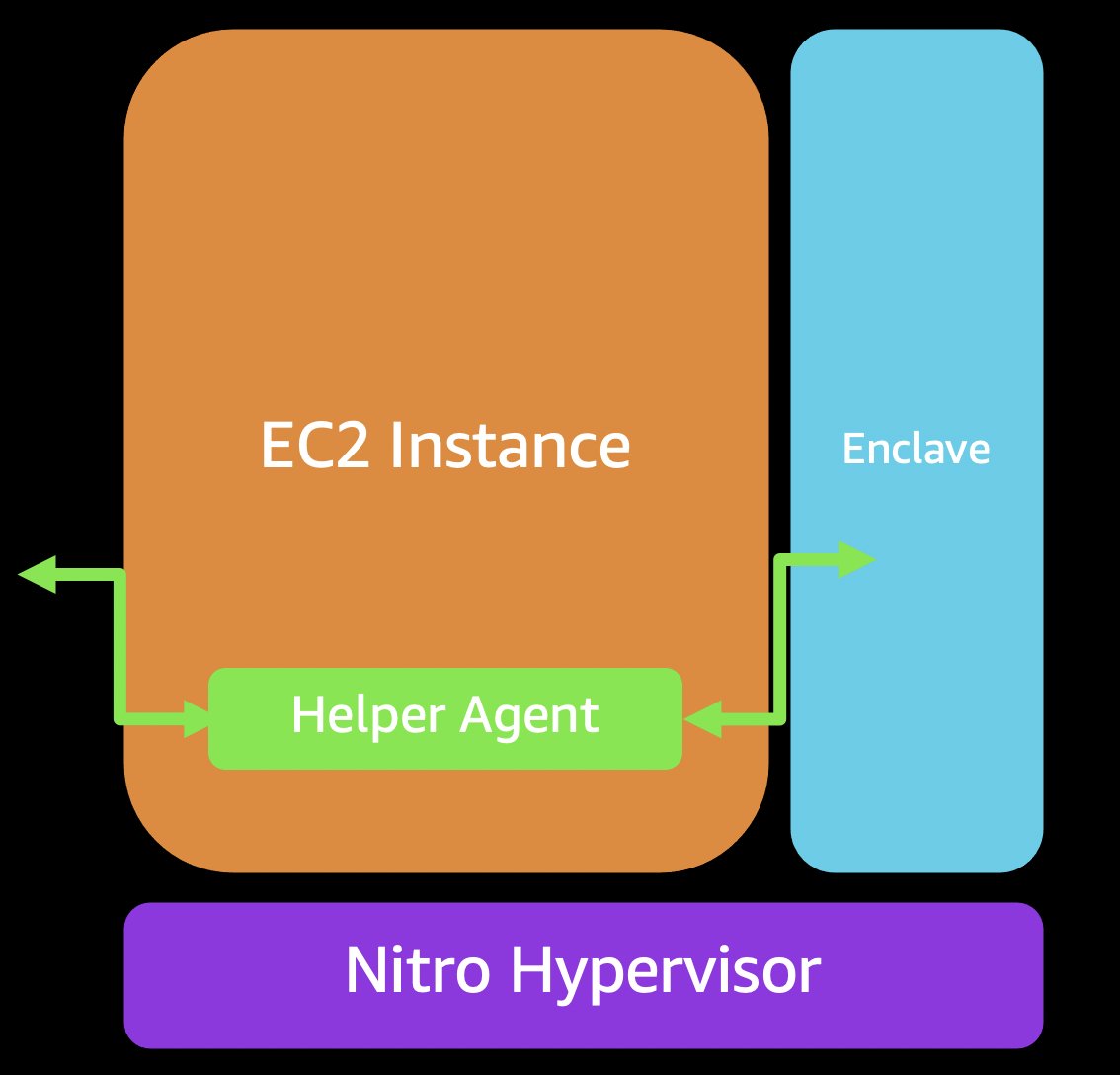

But how does the enclave application reach KMS? It has no networking! This is where our helper agent comes in. It relays end-to-end encrypted communication between the enclave and KMS. That can also be adapted and used for communication to services such as S3, DynamoDB, etc.

Now end-to-end encryption here means that even though the instance is relaying some information, it can't see inside it. Unlike memory encryption which is still being iterated on, this kind of network encryption is very mature. It was always designed for adversarial environments.

Using this pattern, you can get any kind of sensitive data into the enclave for processing. After that, security is as good as whatever the application enforces. With the shared responsibility model, AWS owns the isolation, but the customer owns the security of the application.

Let's go through an example: "COVID counter", an application that gets raw COVID patient data and allows only a few privacy-preserving queries such as "How many people in this zip-code have COVID?"

First, the developer would write an application that does this. Say it takes a CSV file of patient data, and then lets you run the query. You need ordinary code reviews and security testing to check that that's all it will let you do.
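A toy version of such an application might look like the sketch below. The column names `zip` and `covid_positive`, the `yes`/`no` encoding, and the sample rows are all invented for illustration; the point is that the only thing exposed is a count.

```python
import csv
import io


def count_positive_by_zip(csv_text: str, zip_code: str) -> int:
    """Return how many rows in the given zip code are marked positive.

    Only the aggregate count leaves this function; no row is returned.
    """
    reader = csv.DictReader(io.StringIO(csv_text))
    return sum(
        1
        for row in reader
        if row["zip"] == zip_code and row["covid_positive"] == "yes"
    )


# Synthetic sample data, not real patient records.
SAMPLE = """patient_id,zip,covid_positive
1,98101,yes
2,98101,no
3,98101,yes
4,10001,yes
"""

print(count_positive_by_zip(SAMPLE, "98101"))
```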

Then you bundle this application into an enclave image and sign it. You can now choose to release encrypted CSV files only to that exact image (use its checksum) ... or to any images signed with that code-signing key. Just a matter of how you write the policy for the KMS key.

The former approach is hyper-paranoid and vigilant, but a bit brittle. Update the image and you have to update your KMS policies to match! That's why we support the signing key approach too, for flexibility. You get to choose.
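The two policy styles can be sketched as a helper that builds one key-policy statement. The condition keys `kms:RecipientAttestation:ImageSha384` (exact image) and `kms:RecipientAttestation:PCR8` (signing certificate) are given from memory of the KMS documentation and should be verified there; the role ARN and the all-zero checksum are placeholders.

```python
import json
from typing import Dict, Optional


def enclave_decrypt_statement(role_arn: str, *,
                              image_sha384: Optional[str] = None,
                              signing_pcr8: Optional[str] = None) -> Dict:
    """Build a key-policy statement that allows Decrypt only from an attested enclave.

    Pass exactly one of image_sha384 (pin one image) or signing_pcr8
    (accept any image signed with one code-signing certificate).
    """
    if (image_sha384 is None) == (signing_pcr8 is None):
        raise ValueError("pass exactly one of image_sha384 or signing_pcr8")
    if image_sha384 is not None:
        key, value = "kms:RecipientAttestation:ImageSha384", image_sha384
    else:
        key, value = "kms:RecipientAttestation:PCR8", signing_pcr8
    return {
        "Sid": "AllowDecryptFromAttestedEnclave",
        "Effect": "Allow",
        "Principal": {"AWS": role_arn},
        "Action": "kms:Decrypt",
        "Resource": "*",
        "Condition": {"StringEqualsIgnoreCase": {key: value}},
    }


# Placeholder ARN and checksum, for illustration only.
ROLE = "arn:aws:iam::111122223333:role/ExampleEnclaveInstanceRole"
print(json.dumps(enclave_decrypt_statement(ROLE, image_sha384="0" * 96), indent=2))
```

Pinning the image checksum means every rebuild needs a policy update, while pinning the signing certificate lets new images through as long as they are signed with the same key.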

So the enclave launches, retrieves the encrypted CSV file, gets the key from KMS, and now it can run the query. At no point did the instance ever have access to the raw unencrypted CSV file. It stays safely locked away.

You can extend this pattern very easily to multi-party computation too. Two parties with their own data sets each encrypt with their own keys, and release only to an enclave application they mutually trust. It's very powerful!

We also have our own open source reference enclave application. It's easy to use and get started with, and it does something very very useful ... even if you don't want to use enclaves for any other reason. It gives you free ACM certificates on EC2 instances!

The ACM for Nitro Enclaves application lets you associate a free ACM certificate with an EC2 IAM role. The enclave application can then access your certificate and private key, and it provides a TLS offload (it's PKCS11, for the crypto people) capability.

For no additional cost, you can run nginx on your EC2 instance, terminating TLS/SSL with an ACM certificate ... and the key is securely locked away in a nitro enclave attached to your instance. Even if the instance were compromised, the key would be protected from theft.

O.k. that might be it for now. But if you take nothing else away, take this ... AWS Nitro Enclaves are very powerful, but also very usable. They're designed so that mortals like us can build and ship secure highly-compartmentalized applications.
