We really should be in the middle of a golden age of productivity. Within living memory, computers did not exist. Photocopiers did not exist. *Backspace* did not exist. You had to type it all by hand.

It wasn't that long ago that you couldn't search all your documents. Sort them. Back them up. Look things up. Copy/paste things. Email things. Change fonts of things. Undo things.

Instead, you had to type it all on a typewriter!

If you're doing information work, relative to your ancestors who worked with papyrus, paper, or typewriter, you are a golden god surfing on a sea of electrons. You can make things happen in seconds that would have taken them weeks, if they could do them at all.

We should also be super productive in the physical world. After all, our predecessors built railroads, skyscrapers, airplanes, and automobiles without computers or the internet. And built them fast. Using just typewriters, slide rules, & safety margins. patrickcollison.com/fast

This is a corollary to the @tylercowen / Thiel concept of the Great Stagnation. Where has all that extra productivity gone? It doesn't appear manifest in the physical world, for sure, though you can argue it *is* there in the internet world. There are a few possible theses...

Theses

1) The Great Distraction. All the productivity we gained has been frittered away on equal-and-opposite distractions like social media, games, etc.

2) The Great Dissipation. The productivity has been dissipated on things like forms, compliance, process, etc.

3) The Great Divergence. The productivity is here, it's just only harnessed by the indistractable few.

4) The Great Dilemma. The productivity has been burned in bizarre ways that require line-by-line "profiling" of everything, like this tunnel study. tunnelingonline.com/why-tunnels-in…

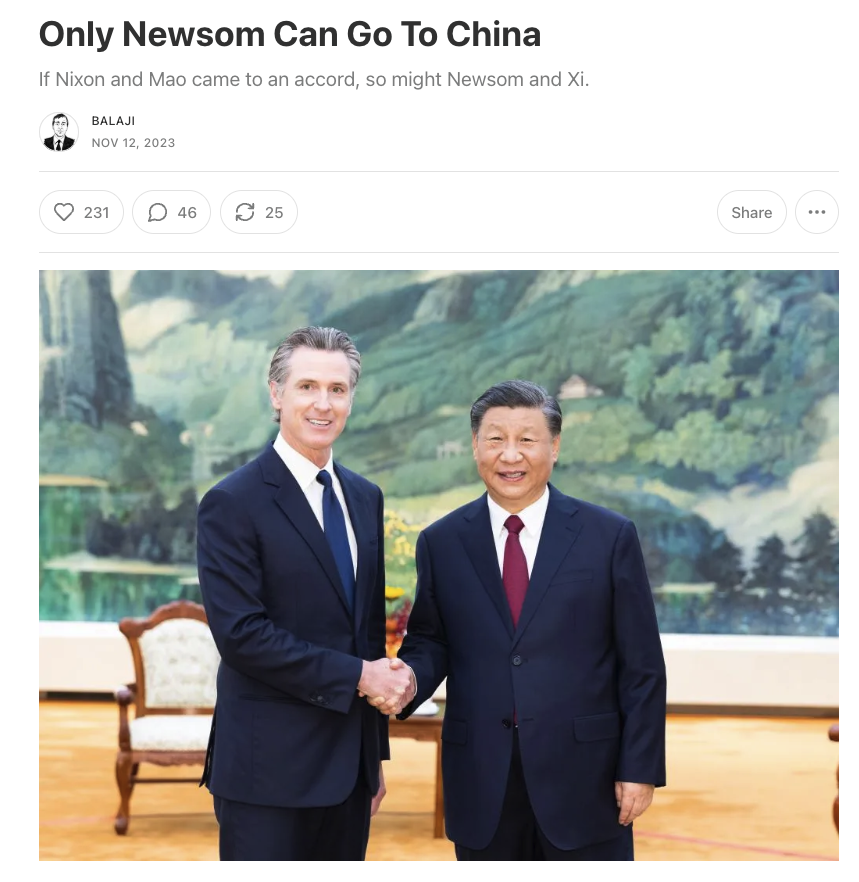

5) The Great Dumbness. The productivity is here, we've just made dumb decisions in the West while others have harnessed it. See for example China building a train station in nine hours vs taking 100-1000X that long to upgrade a Caltrain stop.

https://twitter.com/balajis/status/1143621827186454528

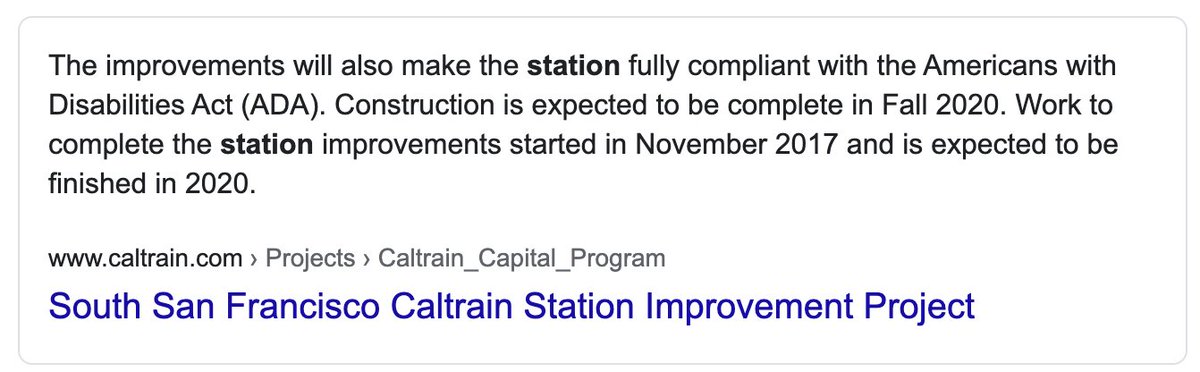

Btw when I say 100-1000X, I'm not kidding. November 2017 to Fall 2020 is ~3 years.

Three years vs nine hours is (3 * 365 * 24)/9 = 2920, which means the US needs almost 3000X as long to upgrade a train station as China does to build one from scratch.

caltrain.com/projectsplans/…
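To make the arithmetic above easy to check, here's a minimal sketch. The function name `slowdown_ratio` is made up for illustration, and it assumes a 365-day year (no leap days) and that "~3 years" is exactly 3.

```python
HOURS_PER_DAY = 24
DAYS_PER_YEAR = 365  # assumption: ignore leap days


def slowdown_ratio(slow_years: float, fast_hours: float) -> float:
    """How many times longer the slow project takes than the fast one."""
    slow_hours = slow_years * DAYS_PER_YEAR * HOURS_PER_DAY
    return slow_hours / fast_hours


# ~3 years for the station upgrade vs 9 hours for the station build
ratio = slowdown_ratio(3, 9)
print(f"{ratio:.0f}X")  # 2920X
```

Swap in other durations to see how sensitive the ratio is to the inputs: even if the upgrade took one year instead of three, the gap would still be close to 1000X.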

Now, yes, I'm sure not every train station in China is built in nine hours, and I wouldn't be surprised if some regions in the US (or the West more broadly) do better than SFBA. But it feels likely that a systematic study would find a large speed gap, 10-100X or more.

Back to main thread. I don't know the answer. But I think the line-by-line profiling approach used on the tunnels is the slow way to find out exactly what went wrong, while the look-at-other-countries-and-time-periods approach is the fast way of figuring out what might be right.

Theory: for things we can do completely on the computer, productivity has measurably accelerated. It is 100X faster to email something than to mail it.

The problem may be in the analog/digital interface. Which makes robotics the limiting factor. Actuate as fast as we compute?

Essentially, representing a complex project on disk may not be the productivity win we think it is. Humans still need to comprehend all those electronic documents to build the thing in real life.

Perhaps robotics is the true productivity unlock. We haven’t gone full digital yet.
