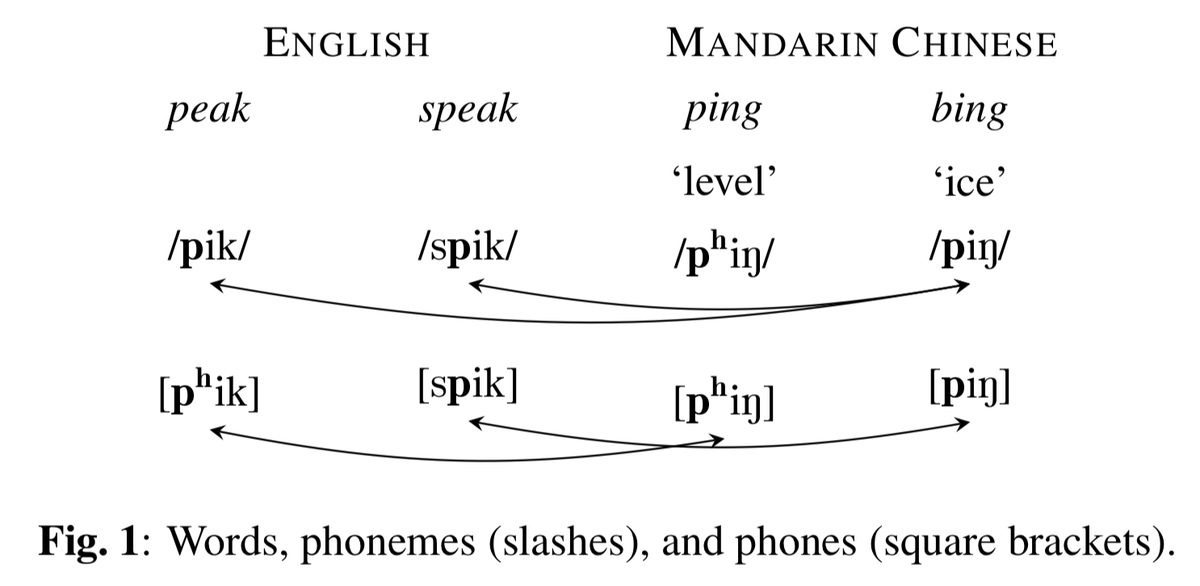

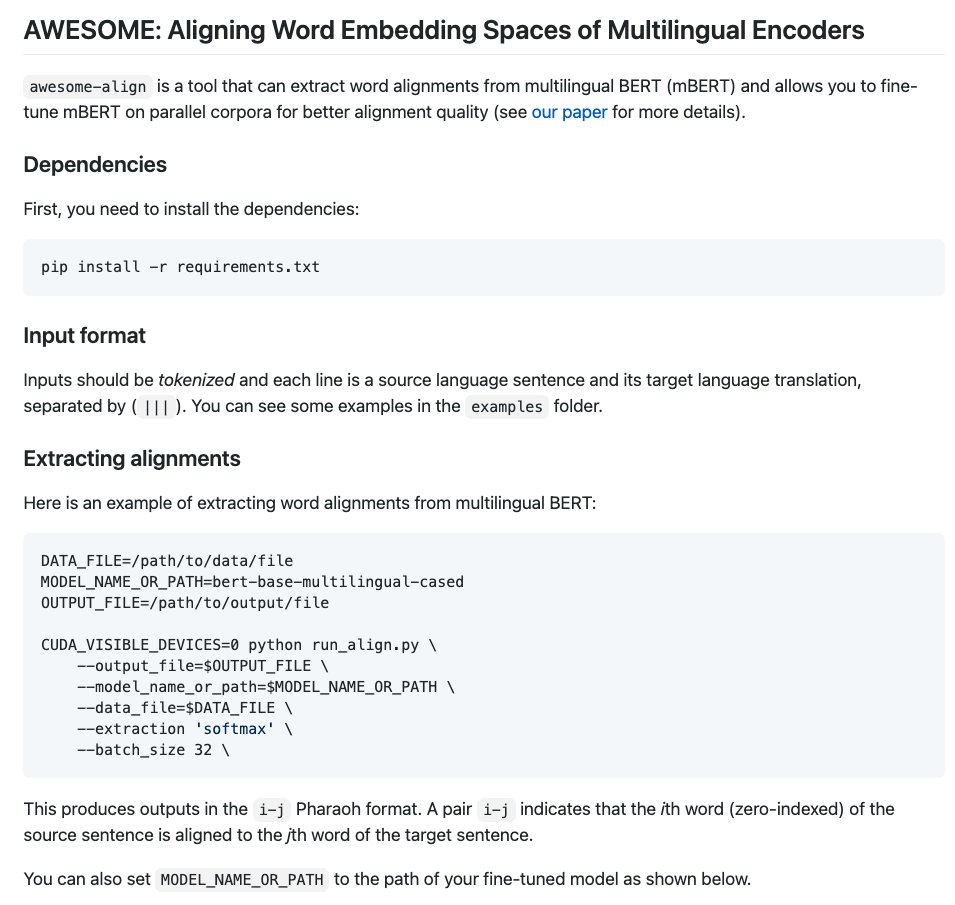

Check out our new awesome word aligner, AWESOME aligner by @ZiYiDou 😀: github.com/neulab/awesome…

* Uses multilingual BERT and can align sentences in all included languages

* No additional training needed, so you can align even a single sentence pair!

* Excellent accuracy

1/3

* Uses multilingual BERT and can align sentences in all included languages

* No additional training needed, so you can align even a single sentence pair!

* Excellent accuracy

1/3

A paper describing the methodology will appear at #EACL2021: arxiv.org/abs/2101.08231

The model is trained on parallel data using contrastive and self-training losses. But it generalizes zero-shot to new language pairs without any training data! 2/3

The model is trained on parallel data using contrastive and self-training losses. But it generalizes zero-shot to new language pairs without any training data! 2/3

Why do we need word alignments in the first place? We use them for lexicon learning, model analysis, and cross-lingual learning. For example, AWESOME aligner results in better cross-lingual results for annotation projection in NER. Check it out and we welcome comments/issues! 3/3

• • •

Missing some Tweet in this thread? You can try to

force a refresh