THREAD: Can you start learning cutting-edge deep learning without specialized hardware? 🤖

In this thread, we will train an advanced computer vision model on a challenging dataset. 🐕🐈 Training completes in 25 minutes on my 3-year-old Ryzen 5 CPU.

Let me show you how...

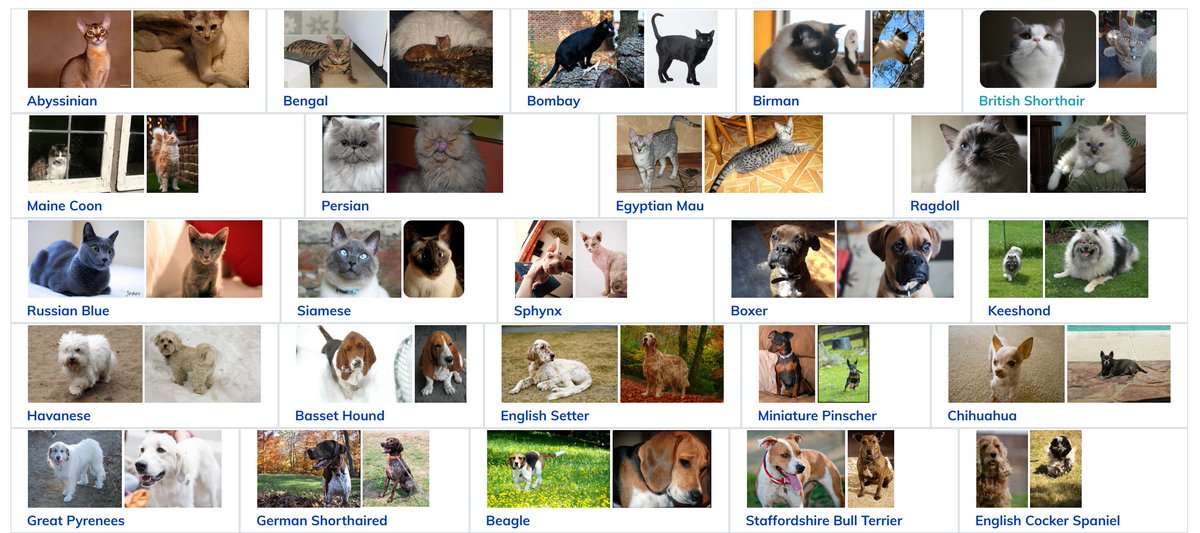

2/ We will train on the challenging Oxford-IIIT Pet Dataset.

It consists of 37 classes, with very few examples (around 200) per class. These breeds are hard to tell apart for machines and humans alike!

Such problems are called fine-grained image classification tasks.

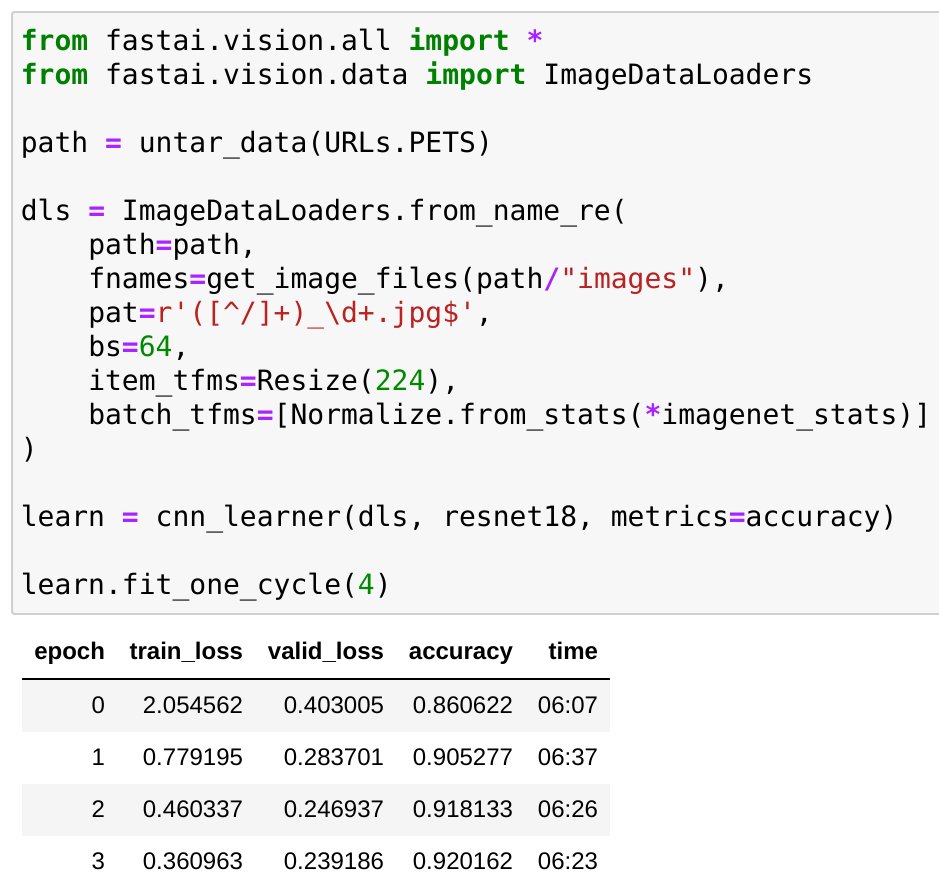

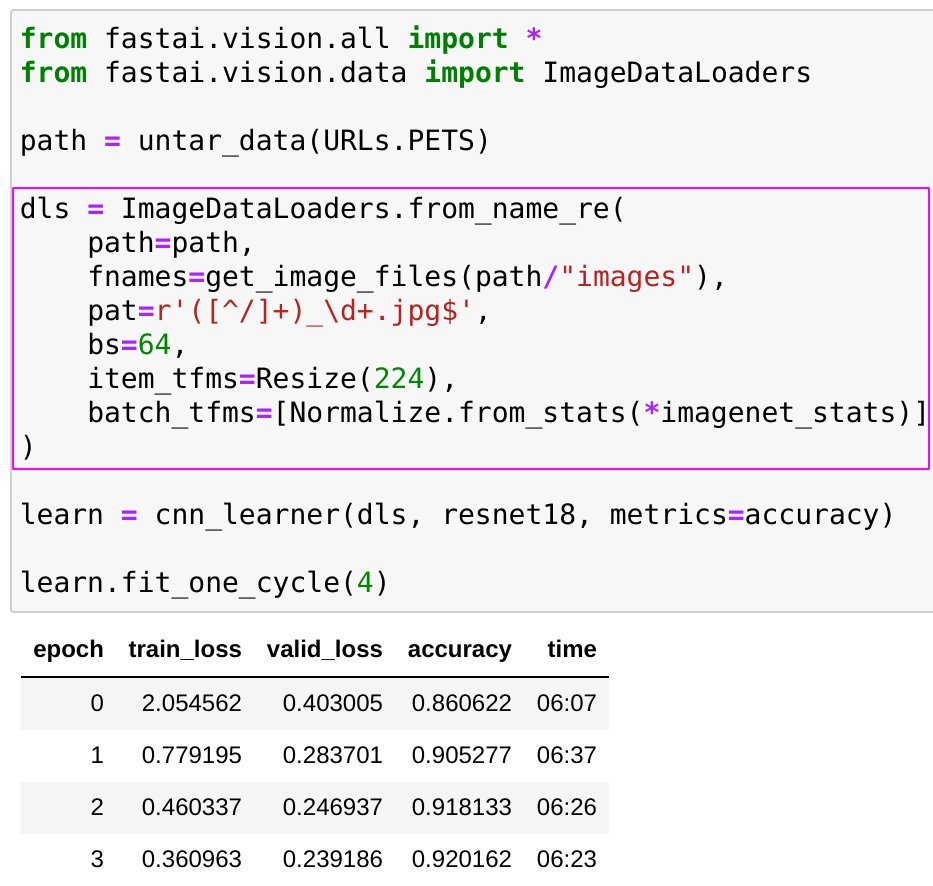

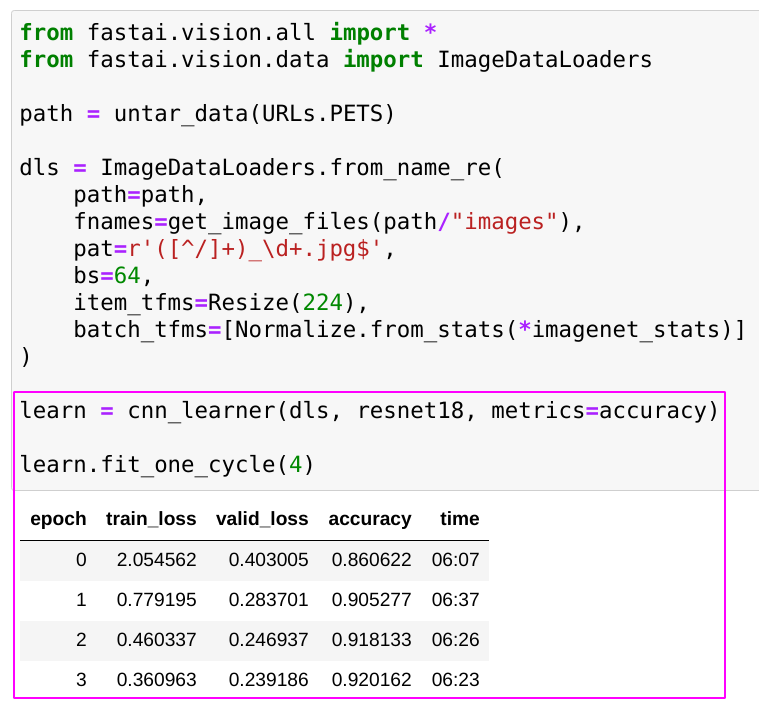

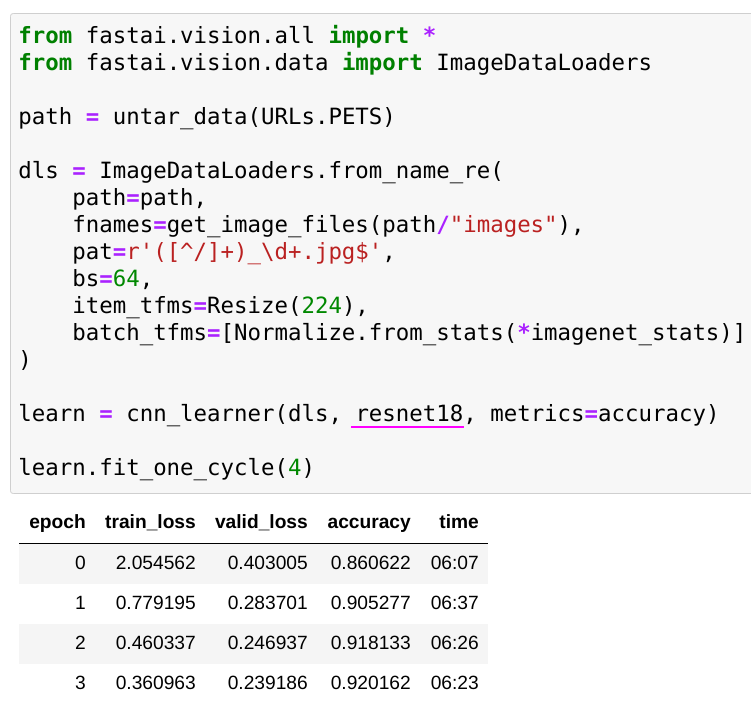

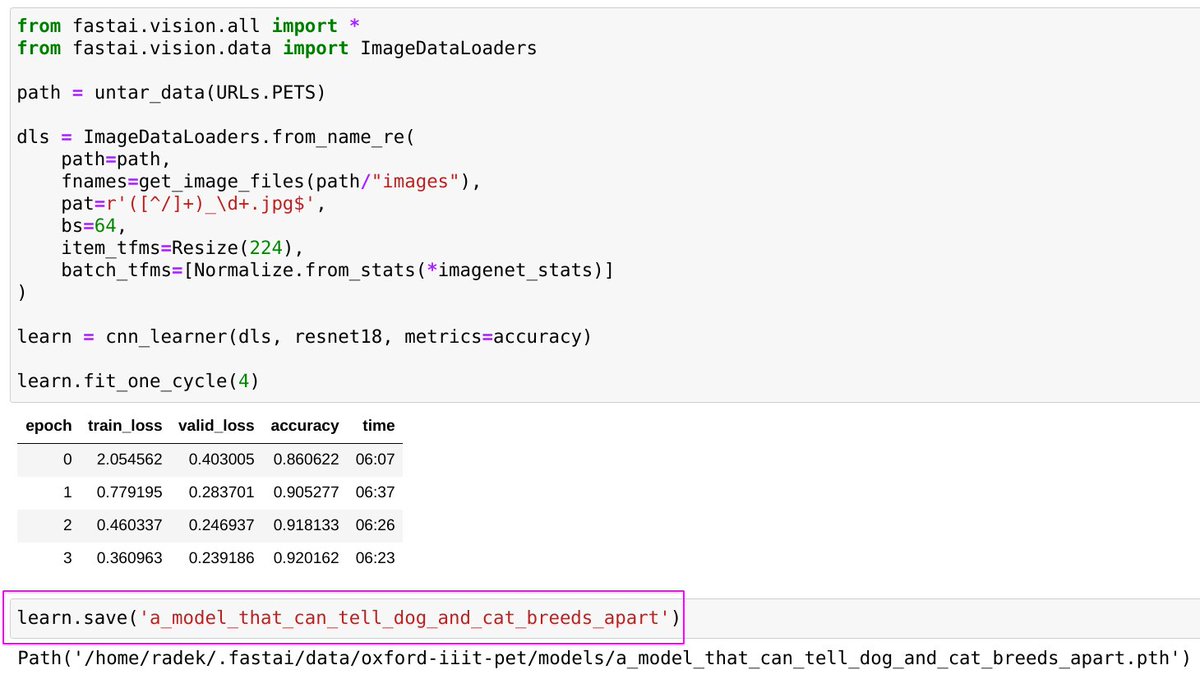

3/ These are all the lines of code that we will need!

Our model trains to a very good accuracy of 92%! This is across 37 classes!

How many people do you know who would be as good at telling dog and cat breeds apart?

Let's reconstruct how we did it...

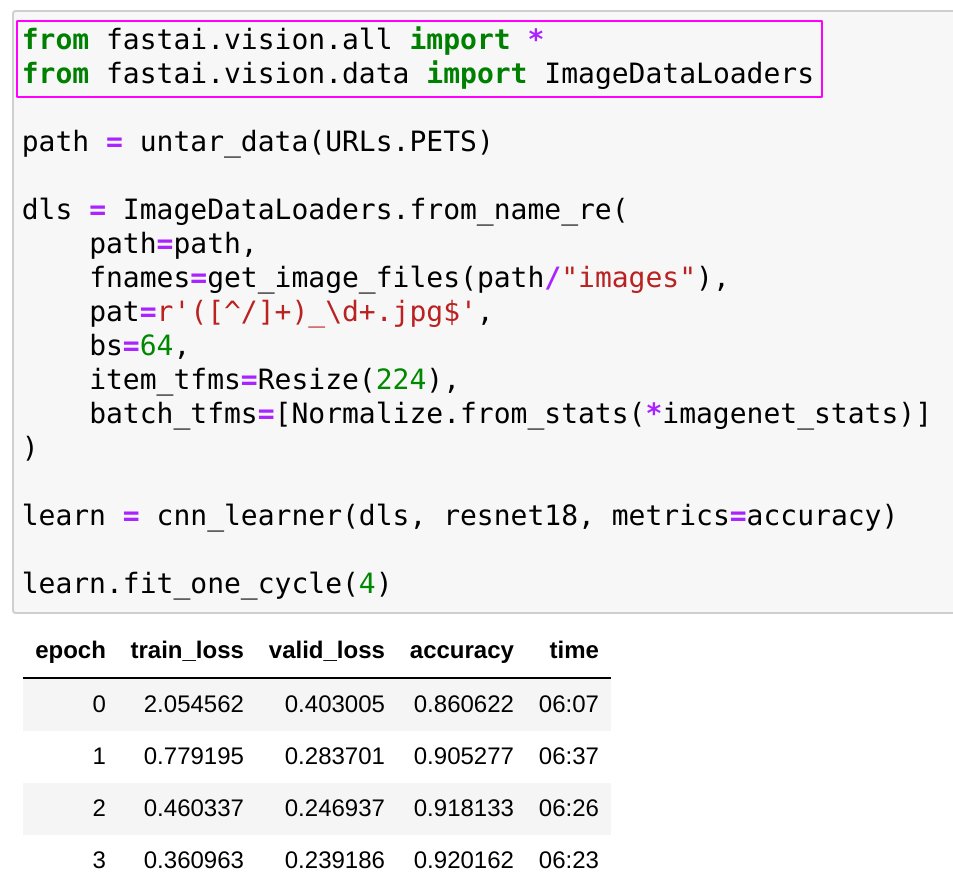

4/ We first import all the necessary functionality from the @fastdotai library.

This is the best deep learning library for learning and for doing research.

You can find it, along with installation instructions, here: github.com/fastai/fastai

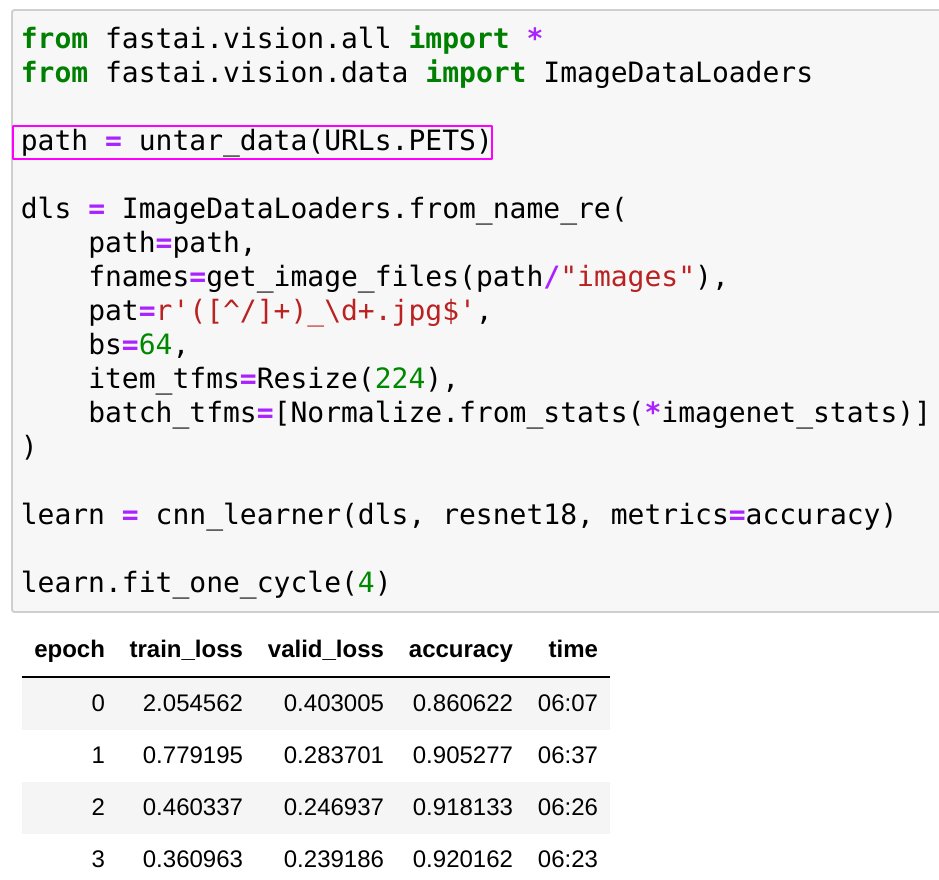

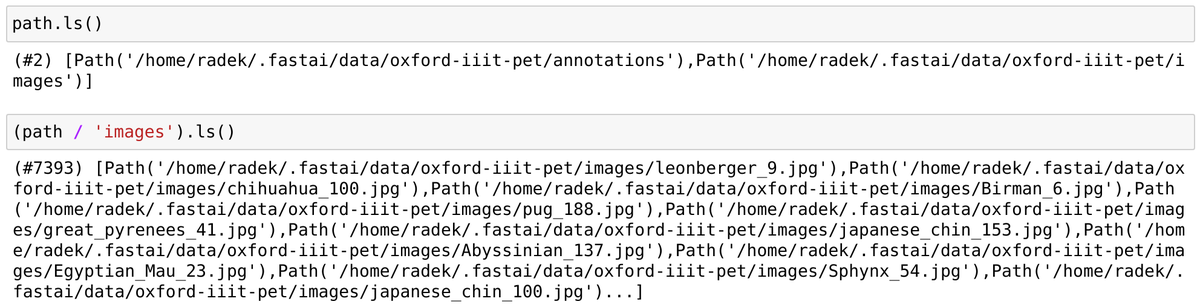

5/ Next, we download the data.

𝚙𝚊𝚝𝚑 points to the location where the data has been downloaded.

It contains an 𝚒𝚖𝚊𝚐𝚎𝚜 directory with all the pictures in the dataset.

6/ Let's now create our 𝙳𝚊𝚝𝚊𝙻𝚘𝚊𝚍𝚎𝚛𝚜

We tell 𝙳𝚊𝚝𝚊𝙻𝚘𝚊𝚍𝚎𝚛𝚜 where to find our data and how to process it.

We pass the location of our data (𝚙𝚊𝚝𝚑 / 'images') and ask for the images to be resized to 224 x 224 pixels.

This is important!

7/ Many advanced DL models require that all examples are of the same shape. Since the pictures in the dataset have different dimensions, we need to resize them before we can train on them.

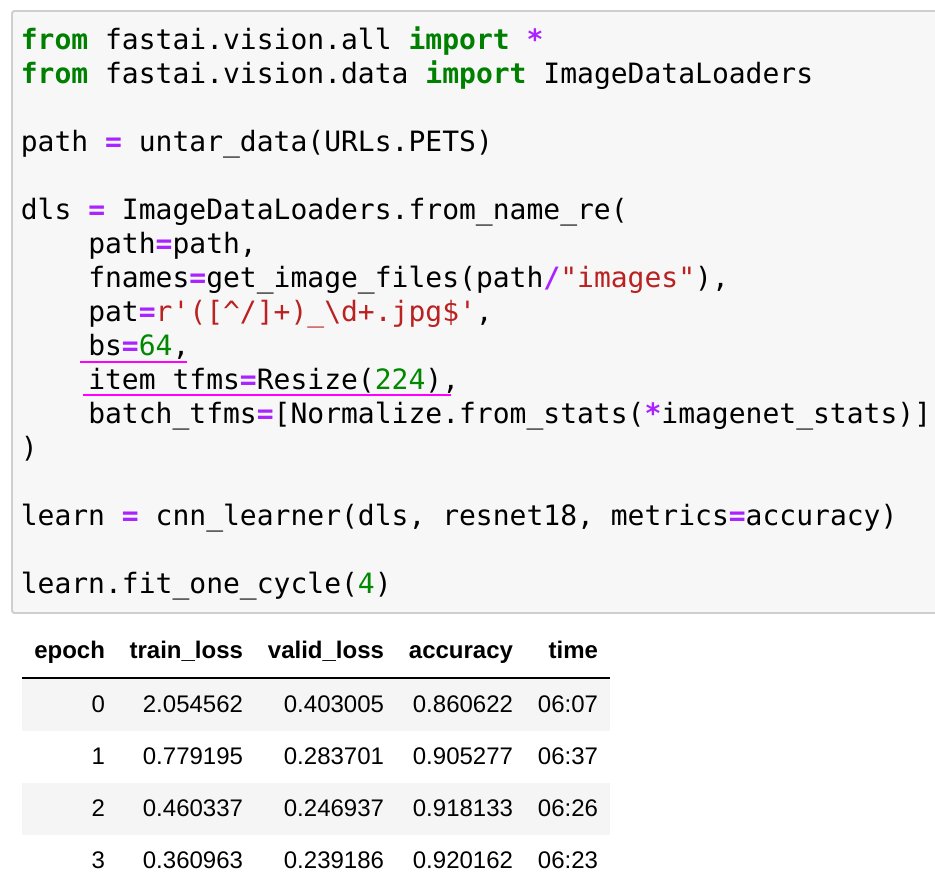

𝚋𝚜 (batch size) is how many images our model will be shown at a time.
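
To see why resizing and batching go together, here is a toy sketch in plain Python (no fastai). The helper names `resize_nearest` and `batches` are made up for illustration, and the nearest-neighbour resize is only a stand-in: it is not necessarily how the library resizes images.

```python
def resize_nearest(img, size):
    """Resize a 2D grid (a list of rows) to size x size by nearest-neighbour sampling."""
    h, w = len(img), len(img[0])
    return [[img[r * h // size][c * w // size] for c in range(size)]
            for r in range(size)]


def batches(items, bs):
    """Split a list into consecutive groups of at most bs items."""
    return [items[i:i + bs] for i in range(0, len(items), bs)]


# Three toy "images" with different shapes, just like the pictures in a real dataset.
images = [
    [[1, 2], [3, 4]],        # 2 x 2
    [[1, 2, 3], [4, 5, 6]],  # 2 x 3
    [[1], [2], [3], [4]],    # 4 x 1
]

resized = [resize_nearest(img, 4) for img in images]
shapes = {(len(img), len(img[0])) for img in resized}  # every image is now 4 x 4
groups = batches(resized, bs=2)                        # 3 images -> batches of 2 and 1

print(shapes, [len(g) for g in groups])
```

Once every image has the same shape, a batch can be stacked into a single array and shown to the model in one go.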

𝚋𝚜 (batch size) is how many images our model will be shown at a time.

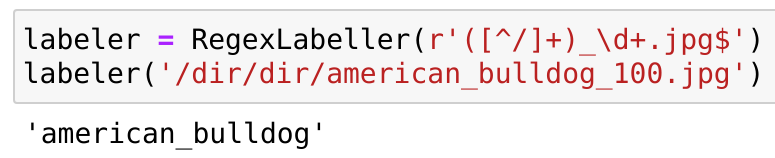

8/ We also need to tell our 𝙳𝚊𝚝𝚊𝙻𝚘𝚊𝚍𝚎𝚛𝚜 how to assign labels.

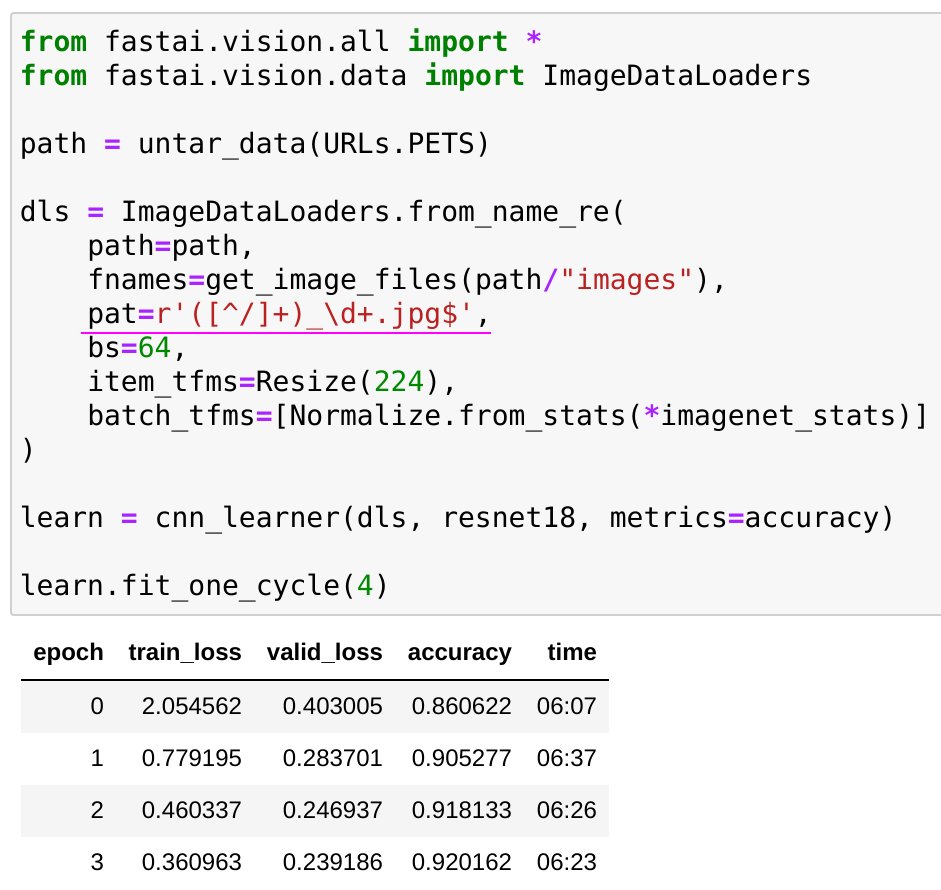

We are passing in a regex that tells them how to look for the class within a file name.

9/ The regex says:

✅ start from the end of the file name, skip .jpg, then skip 1 or more digits followed by an underscore

✅ now take everything back to the nearest forward slash; this will be our class name
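
The two steps above can be tried out with Python's built-in `re` module. The pattern below is my reconstruction from the description (the real one is only visible in the screenshot), and the file paths are made-up examples in the style of the dataset's file names.

```python
import re

# Hypothetical pattern matching the description: a forward slash, then the class
# name (anything without a slash), then an underscore, digits and ".jpg" at the end.
PATTERN = re.compile(r"/([^/]+)_\d+\.jpg$")


def label_from_path(path):
    """Return the class name encoded in an image path."""
    match = PATTERN.search(path)
    if match is None:
        raise ValueError(f"no class name found in {path!r}")
    return match.group(1)


# Made-up paths for illustration.
examples = ["data/images/great_pyrenees_173.jpg", "data/images/Abyssinian_1.jpg"]
labels = [label_from_path(p) for p in examples]
print(labels)
```

Note that underscores inside a breed name are kept, because only the final underscore followed by digits is stripped.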

10/ Regex patterns can be intimidating, but they don't have to be!

The next issue of my newsletter will demystify them once and for all 🙂

You can sign up for it here 👇 learnersdigest.radekosmulski.com

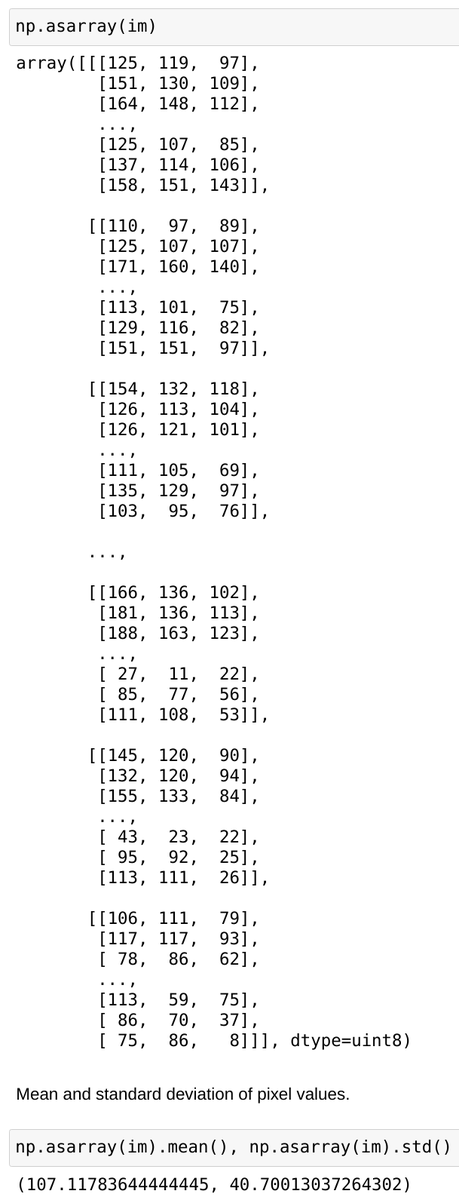

11/ Last but not least, we tell our 𝙳𝚊𝚝𝚊𝙻𝚘𝚊𝚍𝚎𝚛𝚜 to normalize the data!

Normalization is a big name for subtracting the mean of the pixel values of the images the model was originally trained on, and then dividing by their standard deviation. 🤷

This helps the model train.
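
Here is what that looks like for a single pixel, as a plain-Python sketch. I am assuming the model was pretrained on ImageNet and using the commonly quoted ImageNet per-channel statistics; `normalize_pixel` is a made-up helper, not a library function.

```python
# Commonly used ImageNet per-channel statistics (R, G, B), for values scaled to [0, 1].
# Assumption: the pretrained model was trained on ImageNet.
IMAGENET_MEAN = (0.485, 0.456, 0.406)
IMAGENET_STD = (0.229, 0.224, 0.225)


def normalize_pixel(rgb, mean=IMAGENET_MEAN, std=IMAGENET_STD):
    """Scale an 8-bit RGB pixel to [0, 1], subtract the mean, divide by the std."""
    return tuple((value / 255 - m) / s for value, m, s in zip(rgb, mean, std))


white = normalize_pixel((255, 255, 255))
average = normalize_pixel(tuple(m * 255 for m in IMAGENET_MEAN))

print(white)    # bright pixels end up well above zero
print(average)  # a pixel equal to the mean ends up at (about) zero
```

After this step, the pixel values the model sees are centered around zero with a similar spread in each color channel.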

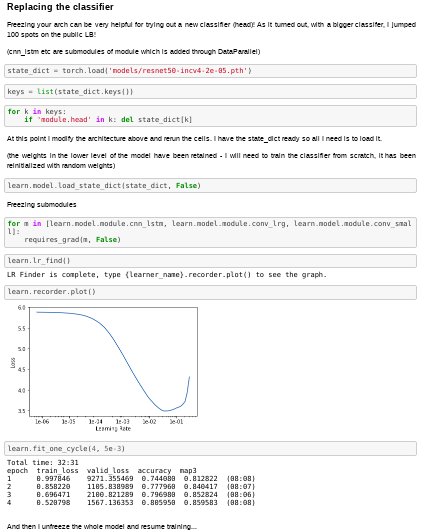

12/ It's time to train our model!

We create a convolutional neural network (CNN), we pass in the architecture - 𝚛𝚎𝚜𝚗𝚎𝚝𝟷𝟾, and we train the model for four epochs.

An epoch is one full pass through our dataset, so the model will see each example 4 times.
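
To get a feel for the numbers, here is some back-of-the-envelope arithmetic in plain Python. The 20% validation split and the batch size of 64 are my assumptions for illustration, and the image count is the rough 37 x 200 estimate from earlier in the thread.

```python
import math


def training_steps(n_images, valid_pct, bs, epochs):
    """Return (training images, batches per epoch, total batches over all epochs)."""
    n_train = n_images - int(n_images * valid_pct)
    # Counts a final, smaller batch; some libraries drop it instead.
    batches_per_epoch = math.ceil(n_train / bs)
    return n_train, batches_per_epoch, batches_per_epoch * epochs


# Assumed numbers: ~200 images for each of 37 classes, 20% held out, batch size 64.
n_train, per_epoch, total = training_steps(
    n_images=37 * 200, valid_pct=0.2, bs=64, epochs=4
)
print(n_train, per_epoch, total)
```

So under these assumptions the model takes a few hundred batch-sized steps in total, which is part of why this can finish on a CPU.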

13/ An architecture tells the model how to perform the computation.

For CNNs, it's mostly tiny windows sliding across the image and looking for patterns!

There are many architectures that are widely used and we'll look more closely at them in my tweets to follow.
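
The "tiny window sliding across the image" idea fits in a few lines of plain Python. This is a toy sketch, not how a deep learning library implements it: `slide_window` is a made-up name, and the window values are hand-picked here, whereas a CNN learns them during training.

```python
def slide_window(image, kernel):
    """Slide a small kernel over a 2D image and record its response at each position."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [
        [
            sum(image[r + i][c + j] * kernel[i][j]
                for i in range(kh) for j in range(kw))
            for c in range(out_w)
        ]
        for r in range(out_h)
    ]


# A 4 x 4 "image": dark on the left, bright on the right.
image = [
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 0, 1, 1],
]

# A 2 x 2 window that responds to dark-to-bright vertical edges.
kernel = [[-1, 1],
          [-1, 1]]

response = slide_window(image, kernel)
print(response)
```

The response is strongest exactly where the window sits on the edge between the dark and the bright half, and zero elsewhere.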

14/ After 25 minutes of training, the results are in!

Let us save the trained model for later use (a trained model is a fully-fledged computer program that can do interesting things!).

But wouldn't it be great to see where our model makes mistakes?

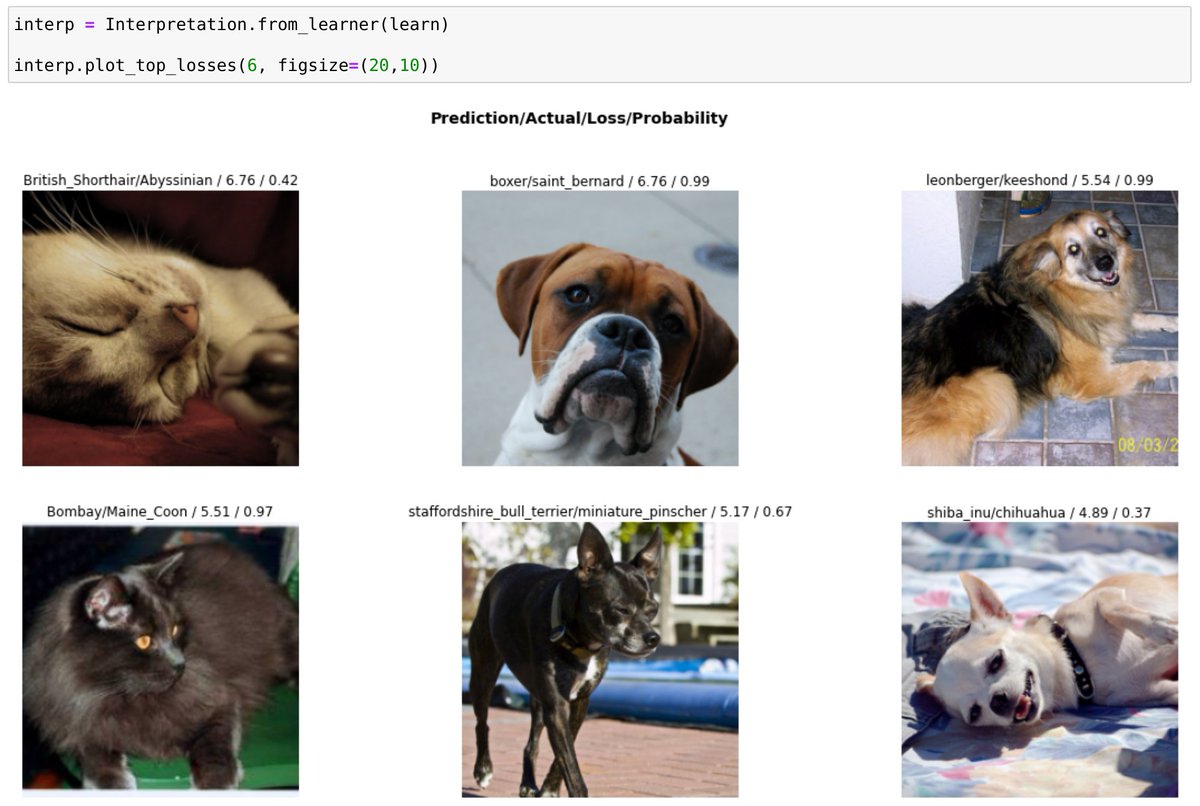

15/ The @fastdotai library makes this super easy.

These are some of the images that our model got wrong. Not bad at all!
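
For readers who cannot see the screenshots, here is a rough sketch of what the whole pipeline from this thread could look like with fastai v2. Treat it as an approximation under assumptions rather than the exact code from the images: the regex, the batch size of 64, the choice of `from_path_re` and `fit_one_cycle`, and the saved model name `pets-resnet18` are all my guesses. The function name `train_pets_classifier` is made up.

```python
def train_pets_classifier(epochs=4, bs=64, size=224):
    """Download the pets data, build DataLoaders, train, save, and plot mistakes."""
    # Imported inside the function so that defining it does not require fastai.
    from fastai.vision.all import (
        URLs, untar_data, get_image_files, ImageDataLoaders, Resize,
        Normalize, imagenet_stats, cnn_learner, resnet18, accuracy,
        ClassificationInterpretation,
    )

    # Download the Oxford-IIIT Pet Dataset (cached after the first call).
    path = untar_data(URLs.PETS)

    # Tell the DataLoaders where the images are, how to label them (assumed regex),
    # how to resize them, the batch size, and how to normalize them.
    dls = ImageDataLoaders.from_path_re(
        path,
        get_image_files(path / "images"),
        pat=r"/([^/]+)_\d+\.jpg$",
        item_tfms=Resize(size),
        bs=bs,
        batch_tfms=Normalize.from_stats(*imagenet_stats),
    )

    # Create a CNN with the resnet18 architecture and train it.
    # (Newer fastai releases call this function vision_learner.)
    learn = cnn_learner(dls, resnet18, metrics=accuracy)
    learn.fit_one_cycle(epochs)

    # Save the trained weights (hypothetical name) and show the worst mistakes.
    learn.save("pets-resnet18")
    interp = ClassificationInterpretation.from_learner(learn)
    interp.plot_top_losses(9)
    return learn
```

Calling `train_pets_classifier()` downloads the data and starts training, so expect it to take a while on a CPU.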

16/ I hope you enjoyed this thread, as it sure took me quite a while to write 😂

I plan on doing more threads like this in the near future - please share with others so that I can reach more people 🙂

Thank you for reading and let me know what you think!

Ah I nearly forgot...

17/ Do you have any suggestions on how we could improve the results of our model?

Please share them with me in the comments below!

A thread on how we can improve our results, pulling all your suggestions together, coming soon...
