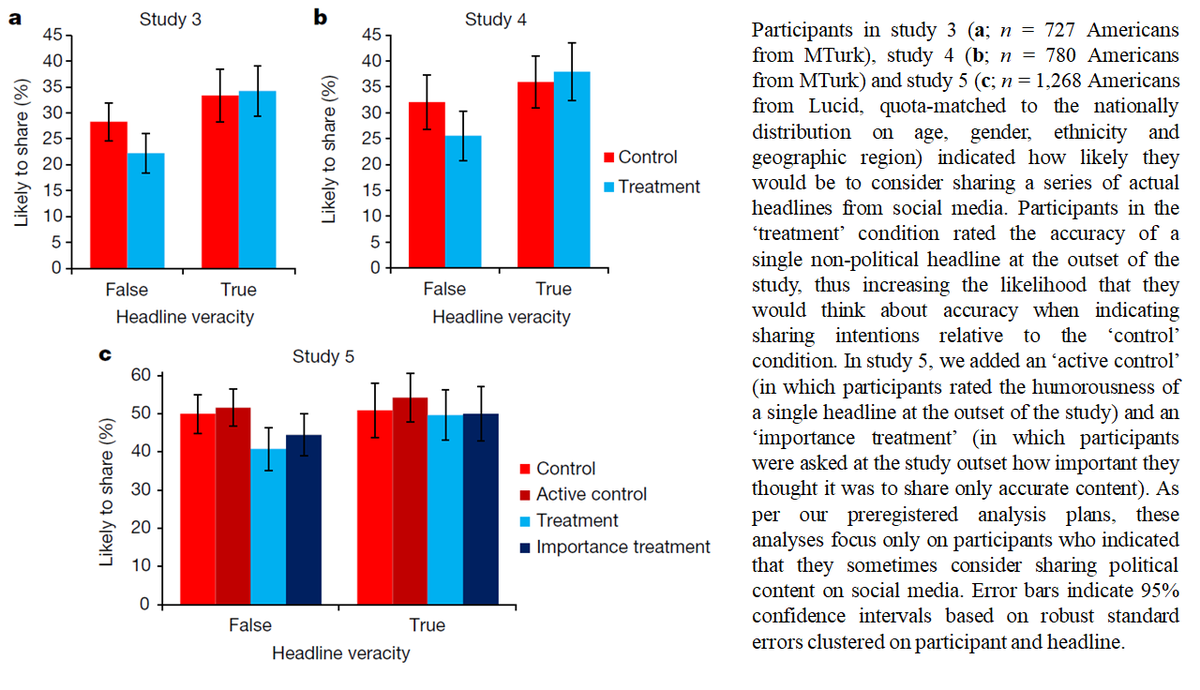

🚨Out now in Nature!🚨

A fundamentally new way of fighting misinfo online:

Surveys+field exp w >5k Twitter users show that gently nudging users to think about accuracy increases quality of news shared, bc most users don't share misinfo on purpose

nature.com/articles/s4158…

1/

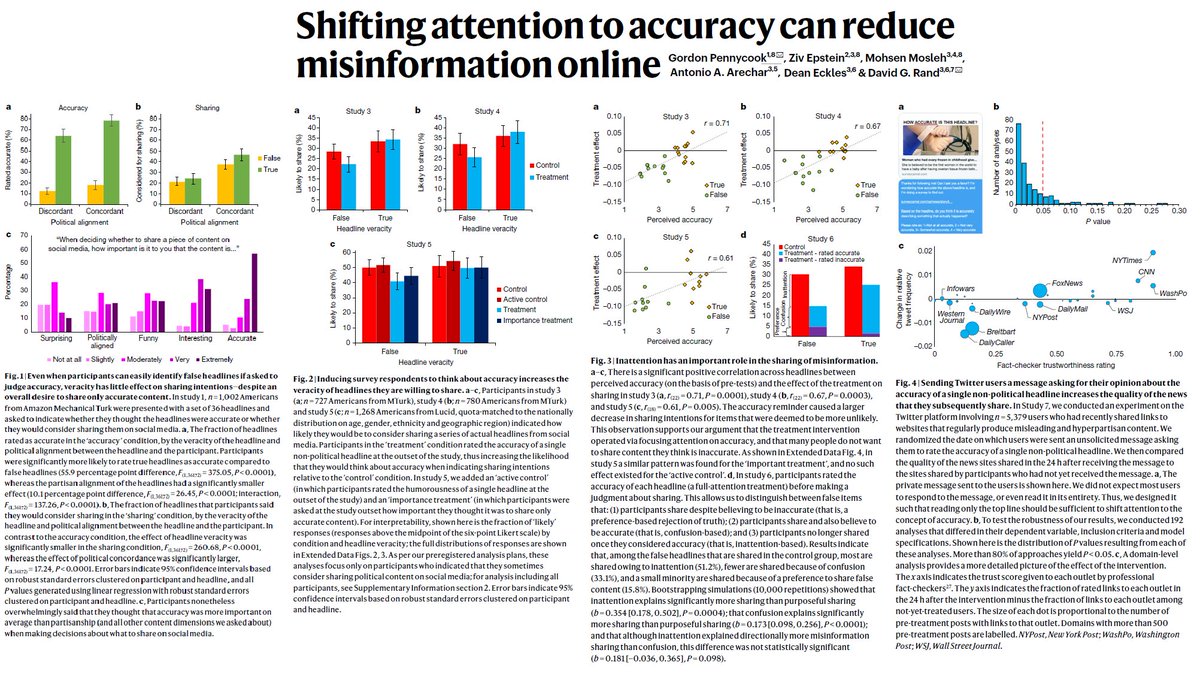

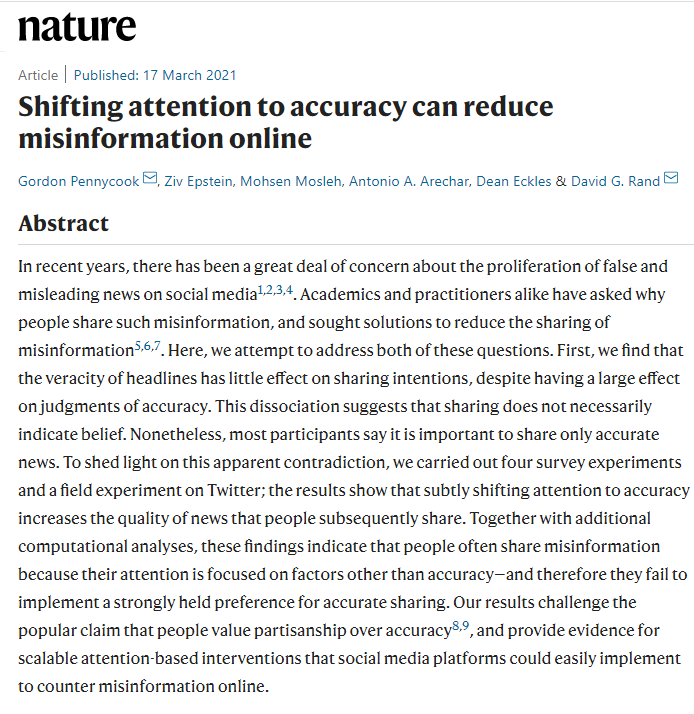

Why do people share misinfo? Are they just confused and can't tell what's true?

Probably not!

When asked about accuracy of news, subjects rated true posts much higher than false. But when asked if they'd *share* online, veracity had little impact; instead it was mostly about politics

So why this disconnect between accuracy judgments and sharing intentions? Is it that we are in a "post-truth world" and people no longer *care* much about accuracy?

Probably not!

Participants overwhelmingly say that accuracy is very important when deciding what to share

We argue the answer is *inattention*: accuracy motives are often overshadowed bc social media focuses attention on other factors, eg desire to attract/please followers

This lines up w past finding that more intuitive Twitter users share lower quality news

https://twitter.com/_mohsen_m/status/1359772108499345409?s=19

We test these competing views by shifting attention towards accuracy in 4 exps (total N=3485) w MTurkers & ~representative sample. If people don’t care much about accuracy, this should have no effect. But if problem is inattention, this should make sharing more discerning.

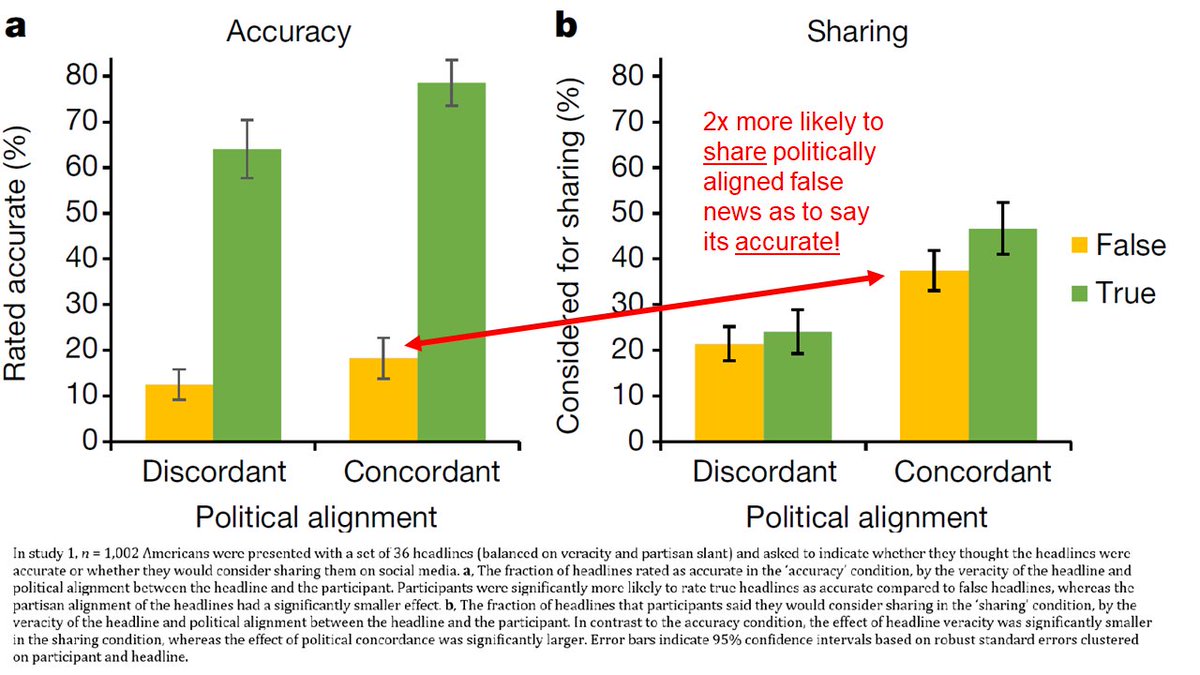

In one exp, Treatment participants rate accuracy of every news post before indicating how likely they'd be to share it. In Control they just indicate sharing intentions

Treatment reduces sharing of false news by 50%! Most of remaining sharing of false news explained by confusion

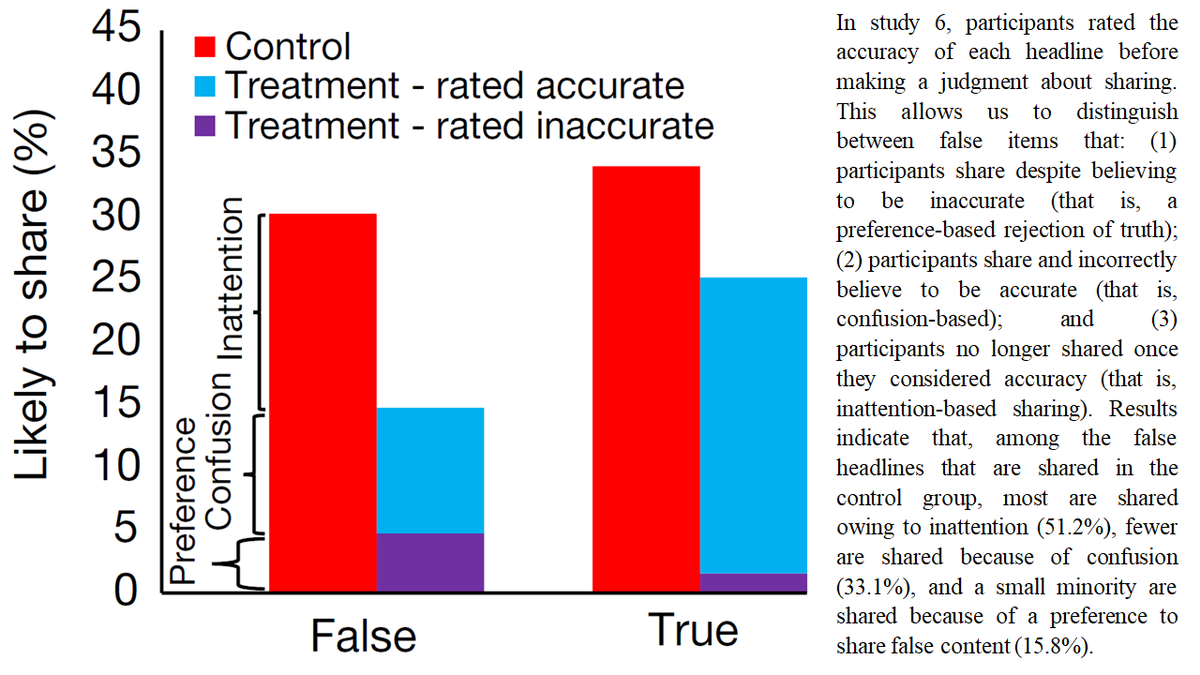

How about a light-weight prompt?

Treatment=subjects rate accuracy of 1 nonpolitical headline at start of study, subtly priming concept of accuracy

Significantly increases quality of subsequent sharing intentions (reduces sharing of false but not true news) relative to control

Next, we test our intervention "in the wild" on Twitter. We build up follower-base of users who retweet Breitbart or Infowars. We then send N=5379 users a DM asking them to judge the accuracy of a nonpolitical headline (w DM date randomly assigned to allow causal inference)

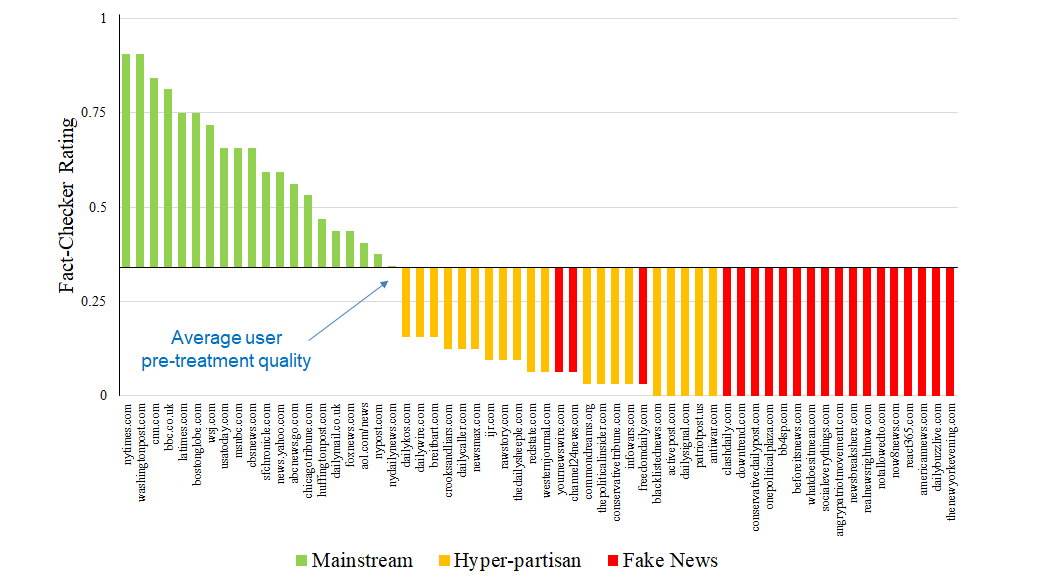

We quantify quality of news tweeted using fact-checker trust ratings of 60 news sites (pnas.org/content/116/7/…). At baseline, our users share links to quite low-quality sites

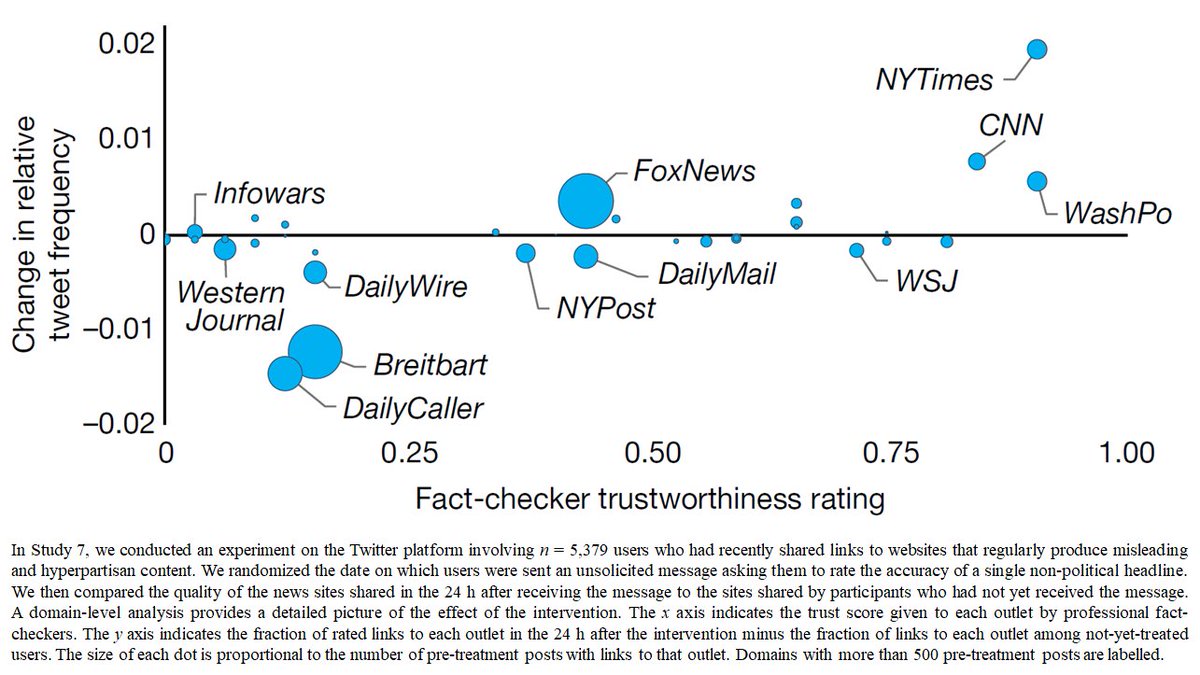

We assess intervention by comparing links in 24 hrs after receiving DM to links from users not yet DMed

We find increase in quality of news retweeted after receiving accuracy-prompt DM! 4.8% increase in avg quality, 9.0% increase in summed quality, 3x increase in discernment. Fraction of RTs to DailyCaller/Breitbart 🡳, to NYTimes 🡱

Sig effect in >80% of 192 model specifications
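To make the average vs summed quality metrics above concrete, here's a minimal sketch of how they could be computed for one user's links. This is not the paper's code: the domains and trust scores in `TRUST` are made-up placeholders, and `quality_summary` is a hypothetical helper.

```python
# Hypothetical trust scores in [0, 1]; placeholder domains, not the 60 sites used in the study.
TRUST = {
    "lowtrust.example": 0.1,
    "midtrust.example": 0.5,
    "hightrust.example": 0.9,
}

def quality_summary(domains):
    """Return (average quality, summed quality) over links to rated domains."""
    scores = [TRUST[d] for d in domains if d in TRUST]  # unrated domains are skipped
    total = sum(scores)
    average = total / len(scores) if scores else None
    return average, total

# Made-up link lists for one user, before and after a prompt.
before = quality_summary(["lowtrust.example", "lowtrust.example", "midtrust.example"])
after = quality_summary(["lowtrust.example", "midtrust.example", "hightrust.example"])
```

In this sketch, average quality depends only on the mix of sites, while summed quality also grows with the number of rated links shared.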

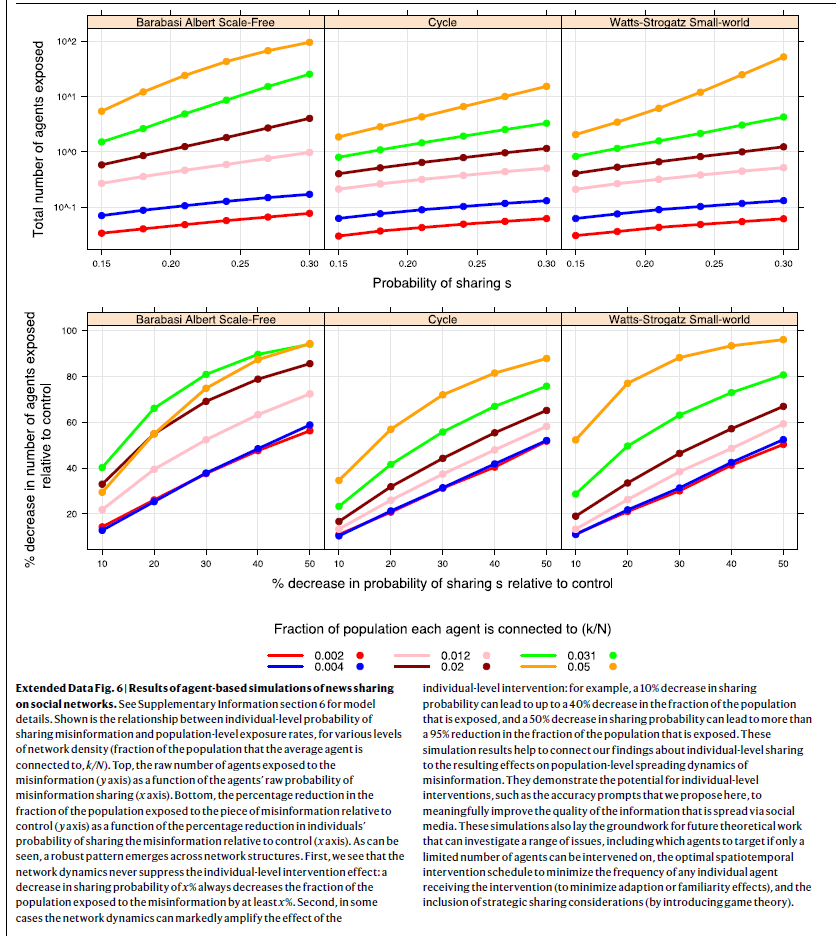

Agent-based simulations show how this positive impact can be amplified by network effects. If I don't RT, my followers don't see it and won't RT, so none of their followers will see it etc. Plus, effect sizes observed in our exp could certainly be increased through optimization
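A toy illustration of the amplification logic above. It is not the agent-based simulation from the paper: it assumes a tree-shaped network with no overlapping followers, and the function name, follower count, and retweet probabilities are all hypothetical.

```python
def expected_downstream_views(followers, p_retweet, depth):
    """Expected views generated by one retweet, following the cascade `depth` levels down."""
    views = 0.0
    retweeters = 1.0  # start from a single user who retweets
    for _ in range(depth):
        exposed = retweeters * followers   # followers who now see the post
        views += exposed
        retweeters = exposed * p_retweet   # expected number who pass it on
    return views

# Hypothetical numbers: 100 followers each, 3 levels deep.
baseline = expected_downstream_views(followers=100, p_retweet=0.02, depth=3)
prompted = expected_downstream_views(followers=100, p_retweet=0.015, depth=3)
reduction = 1 - prompted / baseline
```

With these made-up numbers, cutting each user's retweet probability by 25% cuts expected views by about 32%, because the smaller probability compounds at every level of the cascade.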

We also formalize our inattention account using utility theory. Due to attention constraints, agents can only attend to a subset of terms in their utility fn. So even if you have a strong pref for accuracy, accuracy won't impact sharing choice when attention is directed elsewhere!
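A minimal sketch of the limited-attention idea above, not the paper's actual model. Utility is a weighted sum of terms, but only the terms currently attended to count. The function name, weights, and feature values are all hypothetical.

```python
def share_utility(weights, features, attended):
    """Utility of sharing a post, counting only the terms the agent attends to."""
    return sum(weights[k] * features[k] for k in attended)

# Hypothetical preferences: this user cares about accuracy more than partisan fit.
weights = {"accuracy": 2.0, "partisan_fit": 1.0}

# Hypothetical false but politically congenial headline.
false_congenial = {"accuracy": -1.0, "partisan_fit": 1.0}

# Attention on politics only: the accuracy preference never enters the choice.
u_distracted = share_utility(weights, false_congenial, attended={"partisan_fit"})

# Attention also on accuracy (eg after a prompt): the same user declines to share.
u_prompted = share_utility(weights, false_congenial, attended={"accuracy", "partisan_fit"})

shares_when_distracted = u_distracted > 0
shares_when_prompted = u_prompted > 0
```

The preferences are identical in both cases; only the attended set changes, which is enough to flip the sharing decision in this sketch.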

Mechanism?

Fitting model to the experimental data shows avg participant cares about accuracy as much as or more than partisanship (confirming survey results), but attention is often directed away from accuracy

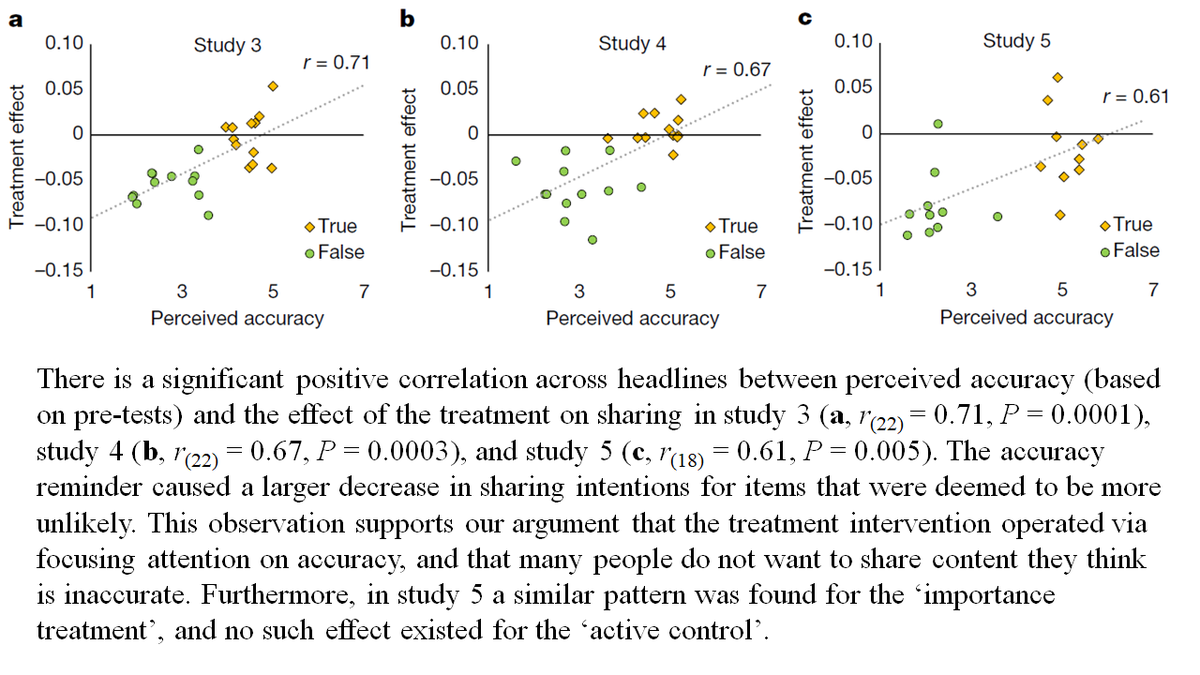

Plus, treatment specifically reduces sharing of more implausible news

These studies help us see past the illusion that everyday citizens on the other side must be either stupid or evil- instead, we are often simply distracted from accuracy when online. Another implication of our results is that widely-RTed claims are not necessarily widely BELIEVED

Our treatment could be easily implemented by platforms, eg periodically asking users to rate the accuracy of random posts. This primes accuracy (+generates useful crowd ratings to identify misinformation)

Scalable+doesn't make platforms arbiters of truth!

https://twitter.com/DG_Rand/status/1314212731826794499?s=20

Here we focused on political news, but in follow-up studies we showed that the results generalize to COVID-19 misinformation as well (eg in this paper that we frantically pulled together in the first few days of the pandemic)

https://twitter.com/GordPennycook/status/1270050765113929730?s=20

We hope that tech companies will investigate how they can leverage accuracy prompts to improve the quality of the news people share online

To that end, we're really excited about an ongoing collaboration we have with researchers at @Google's @Jigsaw; see psyarxiv.com/sjfbn

We were also really excited to see @tiktok_us, in collaboration with @IrrationalLabs, develop, assess, and implement an intervention based in part on our accuracy-prompt work

Hoping that @jack @Twitter @Facebook and others will be similarly interested

https://twitter.com/IrrationalLabs/status/1357033901311451140?s=20

This study is the latest in our research group's efforts to understand why people believe and share misinformation, and what can be done to combat it. For a full list of our papers, with links to PDFs and tweet threads, see docs.google.com/document/d/1k2…

Finally, if you made it this far into the thread and want to know how this work connects to broader psychological and cognitive science theory, check out this recent review "The Psychology of Fake News" that @GordPennycook and I published in @TrendsCognSci authors.elsevier.com/sd/article/S13…

I'm extremely excited about this project, which took years & was led by @GordPennycook @_ziv_e @MohsenMosleh w invaluable input from coauthors @AaArechar @deaneckles

Please let us know your comments, critiques, suggestions etc. Thanks!!

Ungated PDF: psyarxiv.com/3n9u8

I also wanted to share this @sciam piece that @GordPennycook and I wrote summarizing the paper and related work scientificamerican.com/article/most-p…

Taking a page from @STWorg, ICYMI: @olbeun

@SciBeh @lombardi_learn @kostas_exarhia

@stefanmherzog @commscholar @johnfocook @Briony_Swire @Kendeou @dlholf @ProfSunitaSah @HendirkB @emmapsychology @ThomsonAngus

@UMDCollegeofEd @gavaruzzi @katytapper @orspaca @adamhfinn @HPRU_BSE

@JoshCompton2011

@WlliamCrozierIV

@SeifertColleen

@d_j_flynn

@M_B_Petersen

@tinaeliassi

@JaneSuit

@DanLarhammar

@mariabaghramian

@PieroBianucci

@a_b_powell

@ProfDFreeman

@andrew_chadwick

@ipanalysis

@SineadPLambe

@SJVanders

@MarieJuanchich

@JulieLeask

@ProfRapp

@roozenbot

@PhilippMSchmid

@GaleSinatra

@JasonReifler

@philipplenz6

@jayvanbavel

@AndyPerfors

@MicahGoldwater

@M_B_Petersen

@Karen_Douglas

@CorneliaBetsch

@ira_hyman

@lingtax

@annaklas_

@DerynStrange
