Prof @cornell - I'm more active on BlueSky at @dgrand.bsky.social

“@grok is this true?” might be the most important new fact-checking behavior on social media—and we finally have population-scale data on it.

Accusations of bias against Reps (eg Trump+others suspensions) drove @elonmusk to buy Twitter & gut fact-checking in favor of @CommunityNotes. @finkd did same at @Meta. But greater sanctioning of Reps could just be the result of Reps sharing more misinfo https://x.com/DG_Rand/status/1841519775026987078

After 2016, tech cos were under intense pressure to combat misinfo. Now, that pressure has shifted to avoiding claims of political bias, mostly from the right. This is stifling action against misinfo (and unfairly punishing misinfo researchers like Renee DiResta @katestarbird)

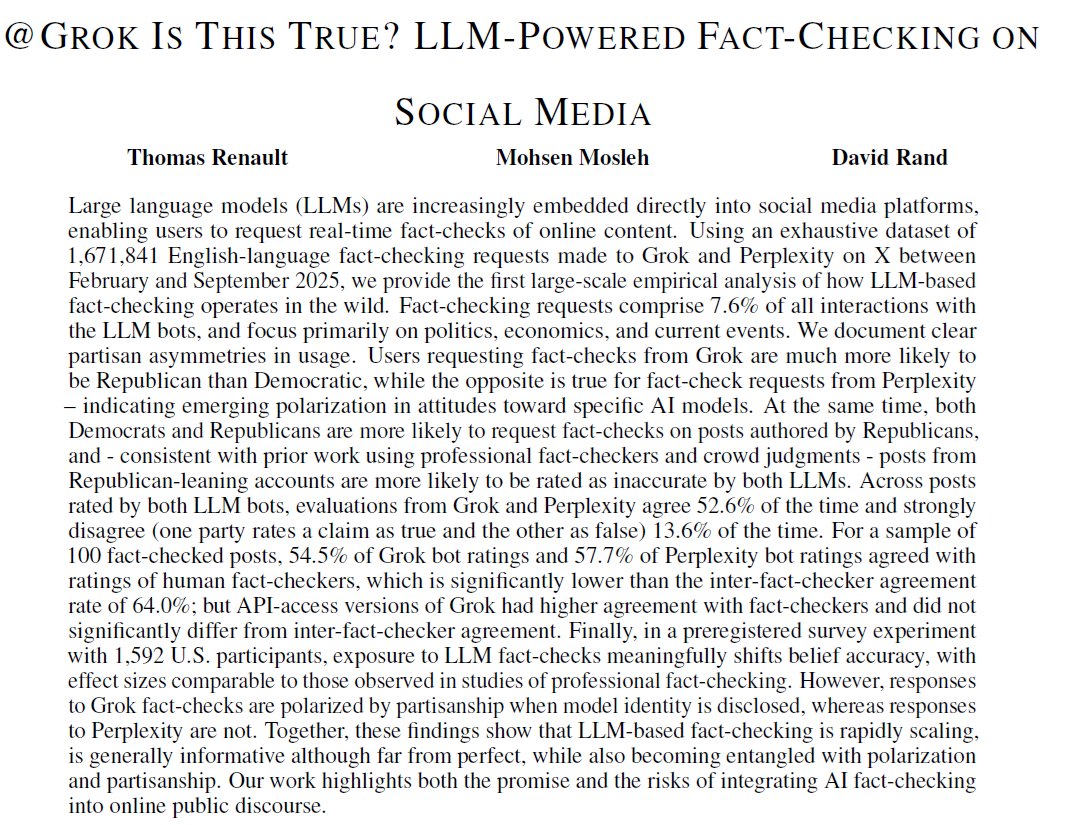

Attempts to debunk conspiracies are often futile, leading many to conclude that psychological needs/motivations blind pple & make them resistant to evidence. But maybe past attempts just didn't deliver sufficiently specific/compelling evidence+arguments?

Attempts to debunk conspiracies are often futile, leading many to conclude that consp beliefs are driven by needs/motivations & thus resistant to evidence. But maybe past attempts just didn't deliver sufficiently specific/compelling evidence+arguments?

Belief in misinfo has been linked to components of the digital media environment that increase reliance on intuitions. But why do our intuitions support belief in falsehoods?

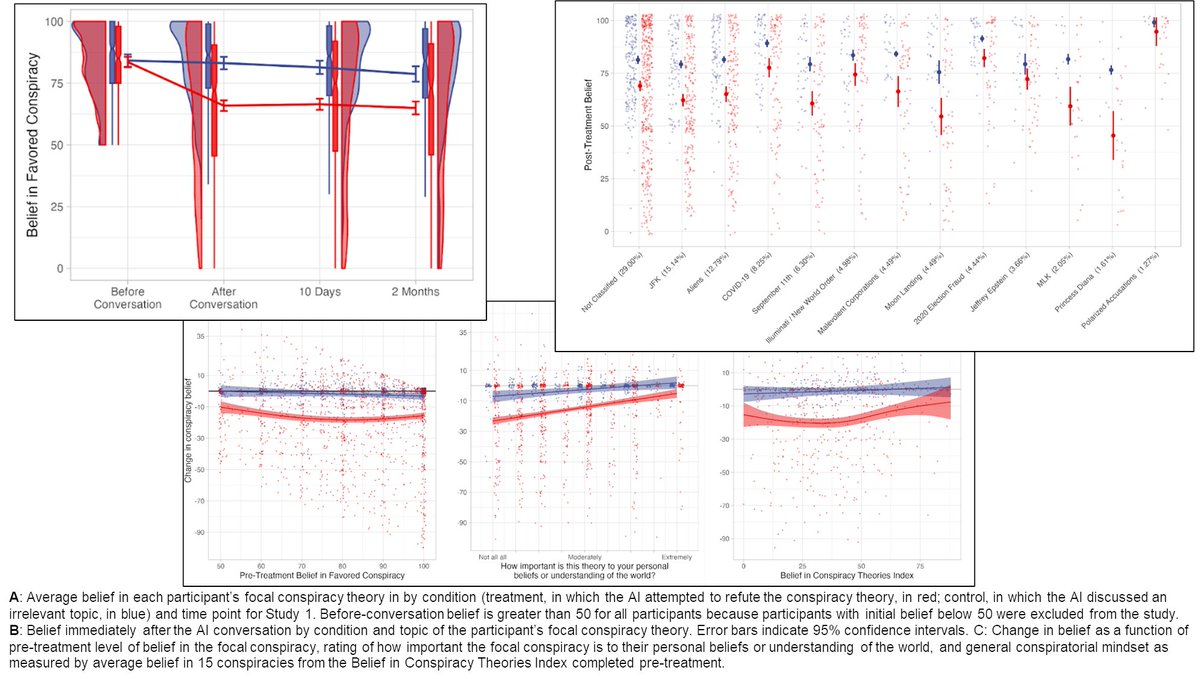

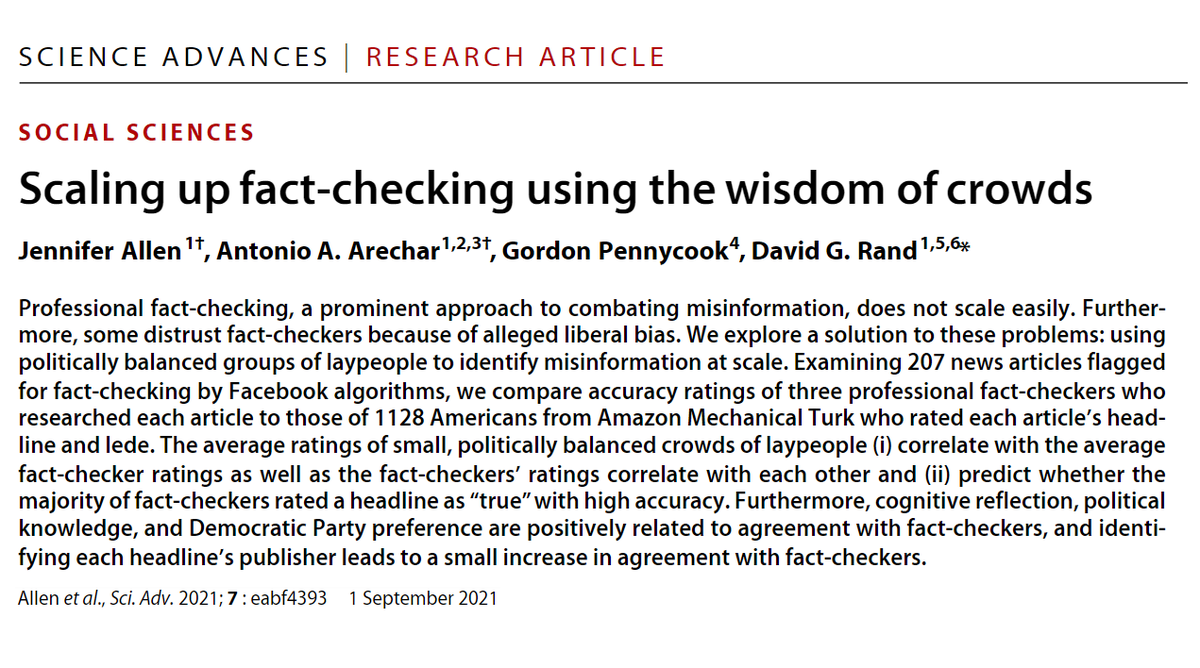

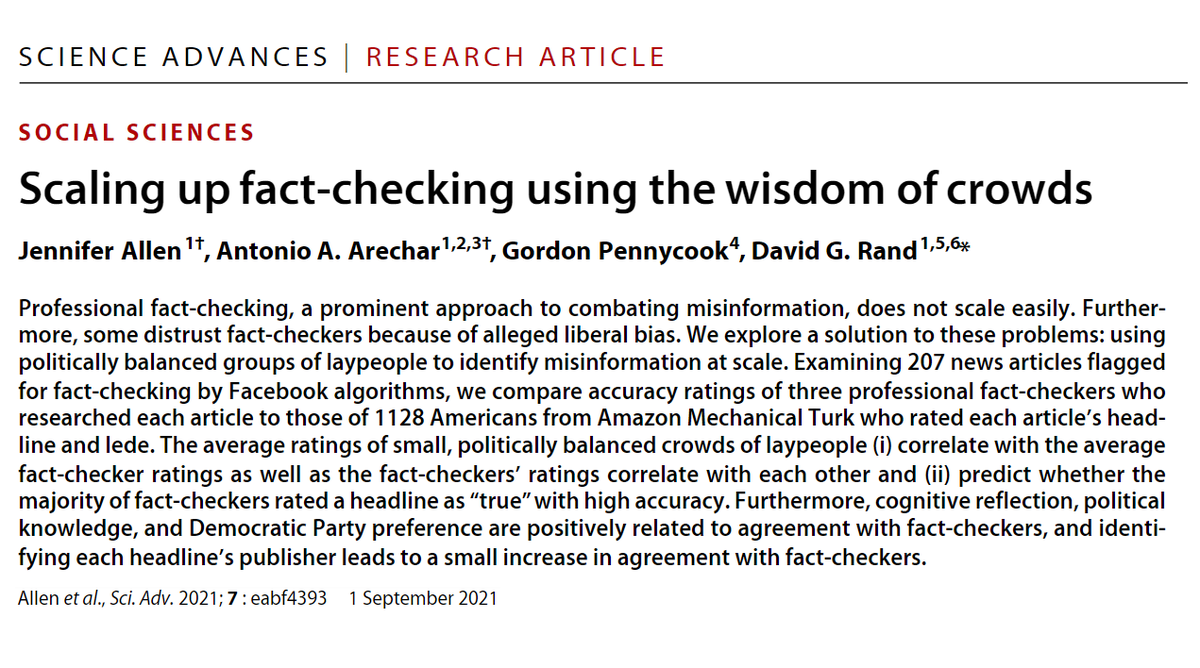

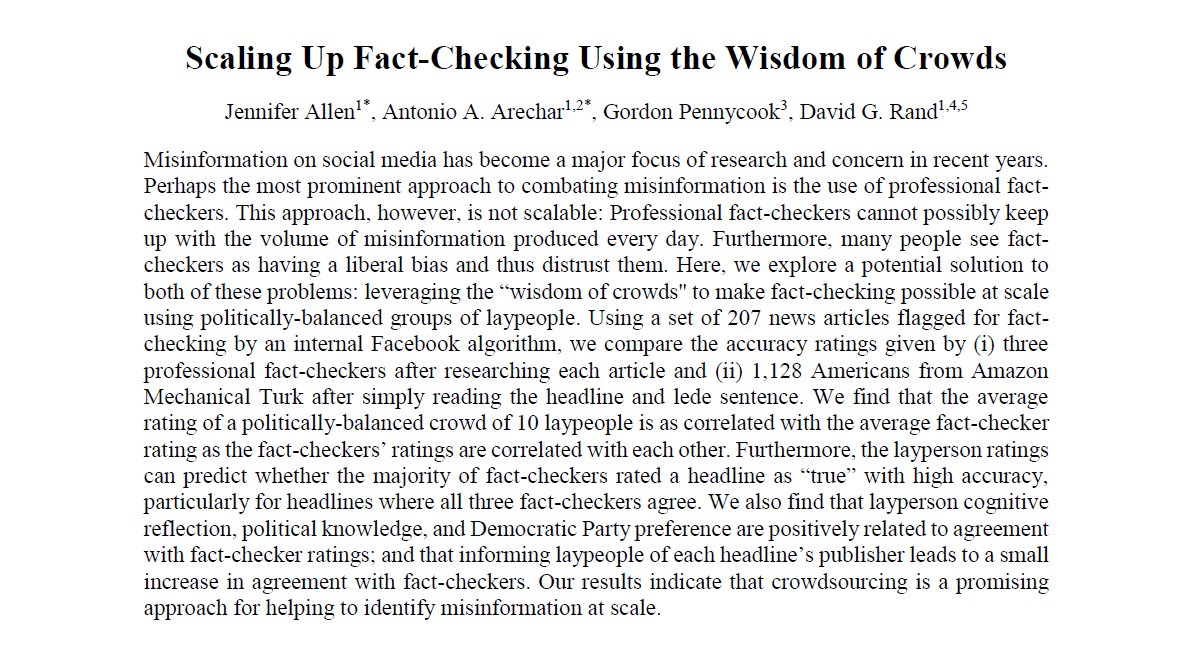

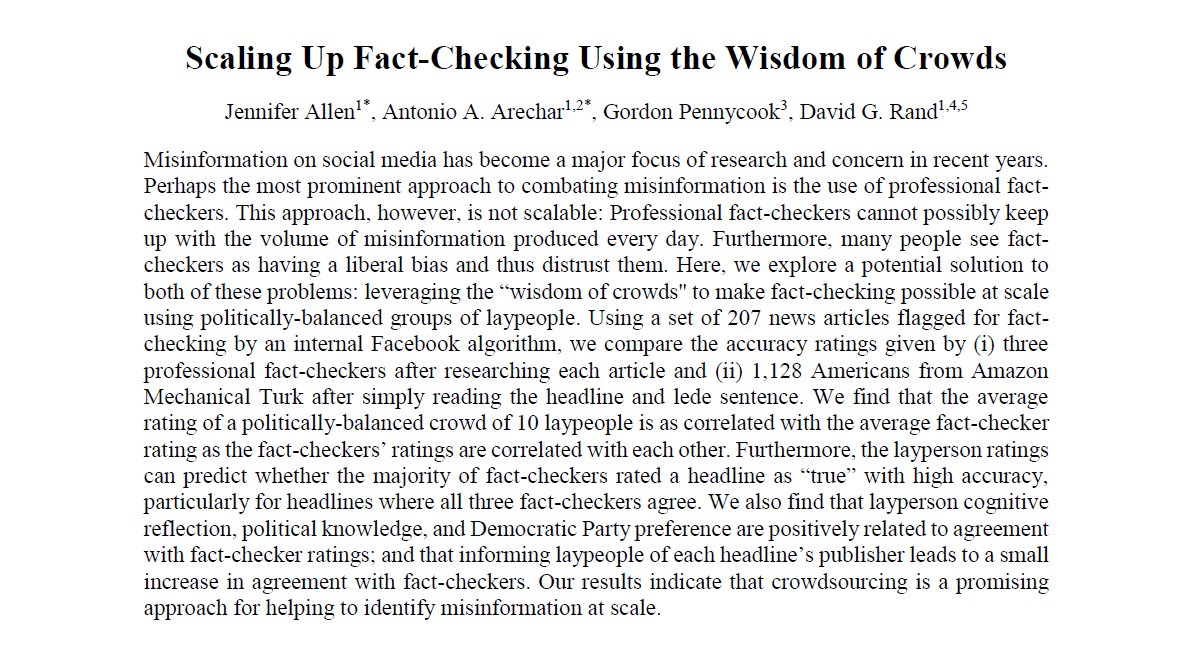

Professional fact-checking can help reduce misinfo:

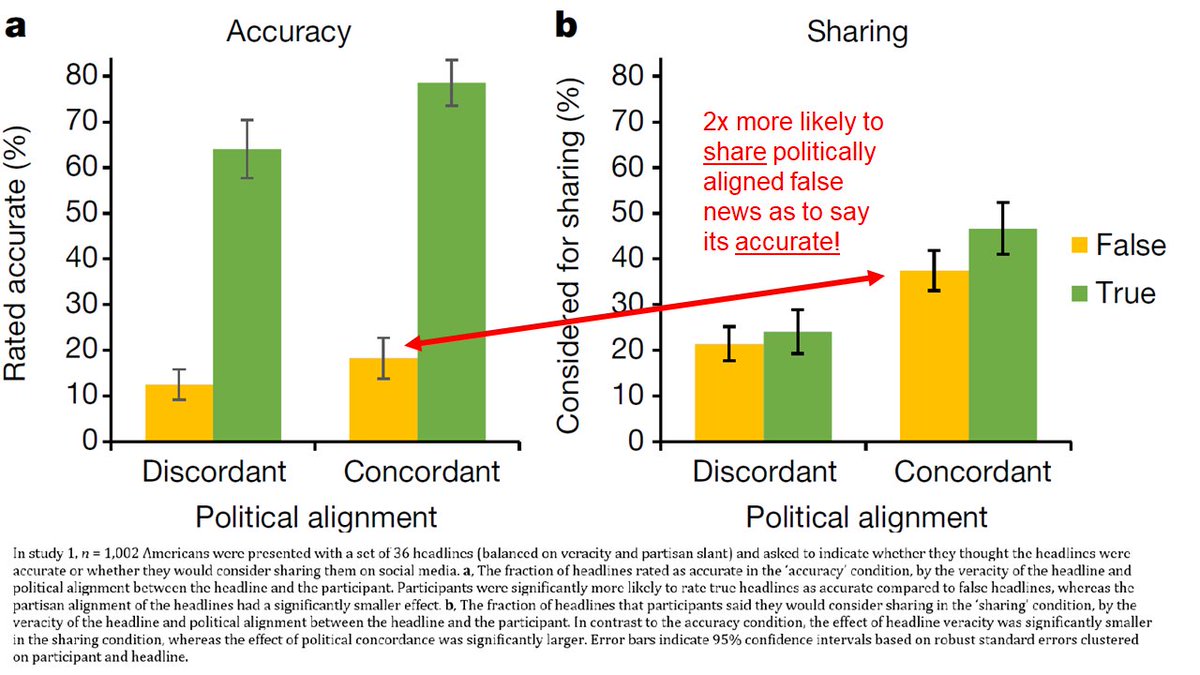

A lot has been learned about psychology of misinformation/fake news, and what interventions may work. For overview, see @GordPennycook and my TICS review: https://twitter.com/GordPennycook/status/1379513315542495235?s=20

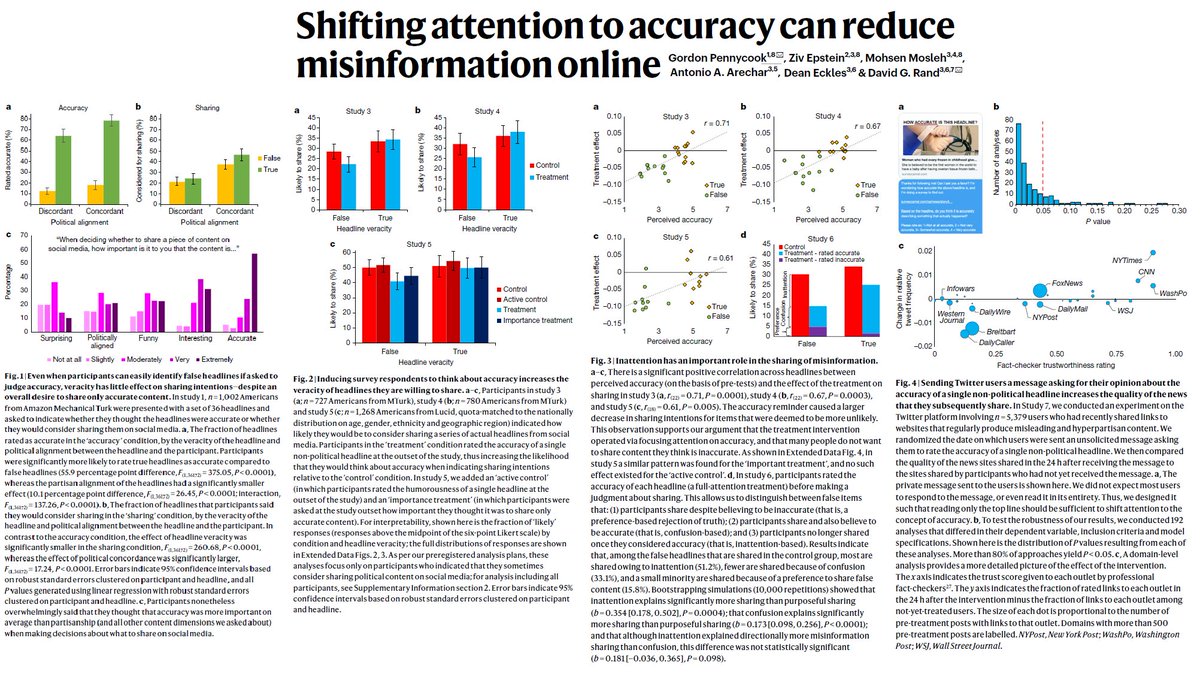

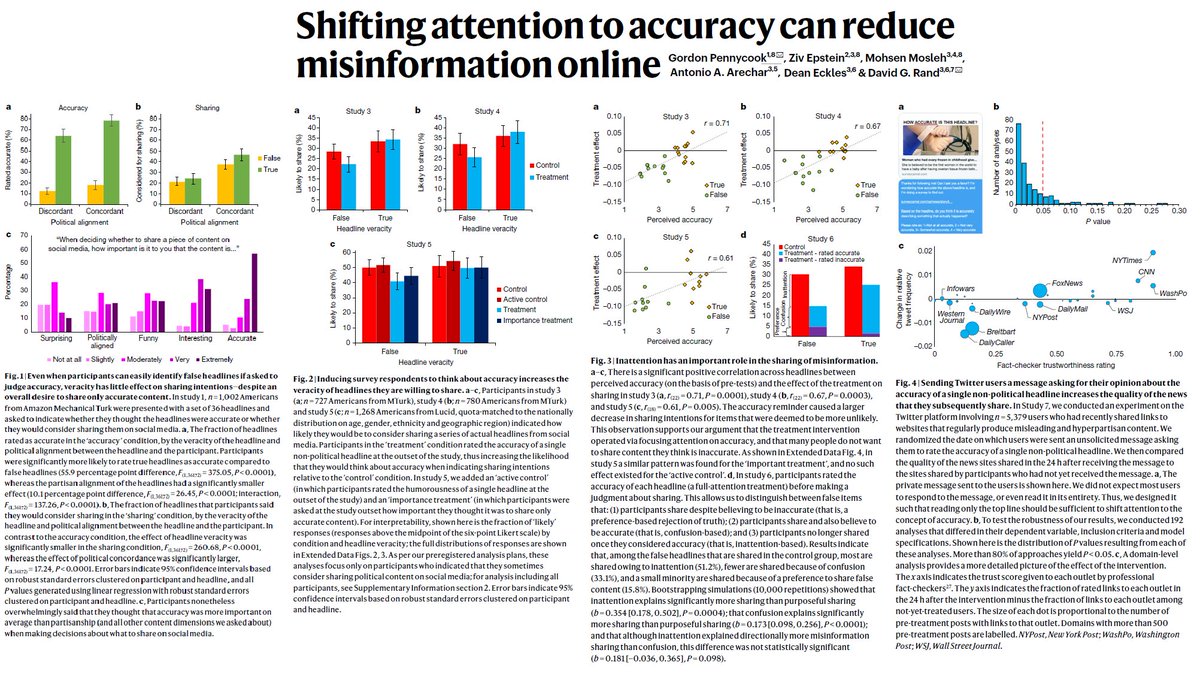

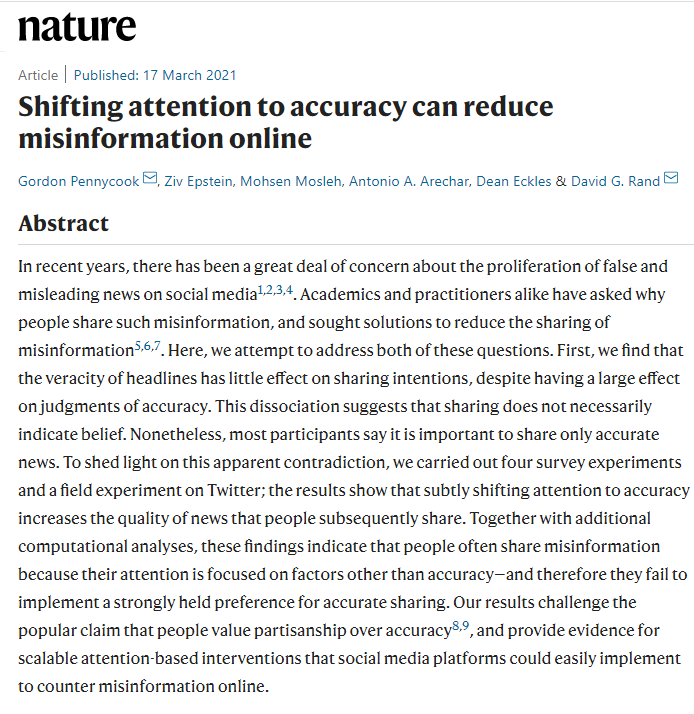

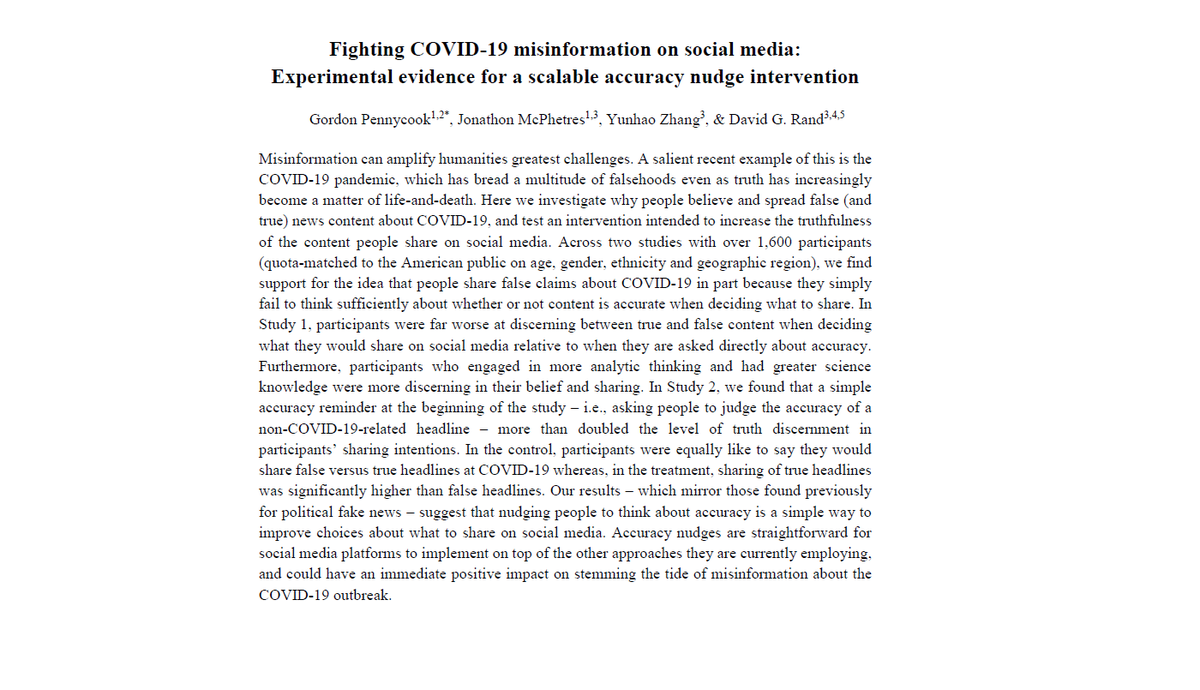

Previously we found a disconnect between what people judge as accurate and what they say they'd share, despite not wanting to share things they realize are false. Why? Largely bc people simply forget to consider accuracy when deciding what to share https://twitter.com/DG_Rand/status/1372217700626411527?s=20

The root of the challenge when inferring political bias is that Republicans/conservatives are substantially more likely to share misinformation/fake news, as shown eg by @andyguess @j_a_tucker @grinbergnir @davidlazer et al science.org/doi/10.1126/sc… science.org/doi/abs/10.112…

A lot has been learned about psychology of misinformation/fake news, and what interventions may work - for overview, see @GordPennycook and my TICS review below: https://twitter.com/GordPennycook/status/1379513315542495235?s=20

Lack of digital literacy is a favorite explanation in both public & academy for the spread of fake news/misinformation. But there's surprisingly little data investigating this, and results that do exist are mixed. One issue is that dig lit is operationalized in various diff ways

Fact-checking could reduce misinformation

Why do people share misinfo? Are they just confused and can't tell what's true?

We are more likely to be friends with co-partisans offline & online

Fact-checking could help fight misinformation online:

Previously we found people share political misinfo b/c social media distracts them from accuracy - NOT b/c they can't tell true v false, NOT b/c they don't care about accuracy https://twitter.com/DG_Rand/status/1196171145227251712

We first ask why people share misinformation. Is it because they simply can't assess the accuracy of information?