1/ Do you feel hopeless about political polarization on social media? Introducing a new suite of apps, bots, and other tools from our Duke Polarization Lab that you can use to make this place less polarizing: polarizationlab.com/our-tools

One of the biggest problems with social media is that it amplifies extremists and mutes moderates, leaving us all feeling more polarized than we really are. Our tools can help you avoid extremists and identify moderates with whom you might engage in more productive conversations.

The Duke Polarization Lab’s Bipartisanship Leaderboard identifies politicians, celebrities, activists, journalists, media outlets, and advocacy groups whose posts get likes from people in both parties: polarizationlab.com/bipartisanship…

Meet Polly, a bot who retweets messages from the opinion leaders on our bipartisanship leaderboard every few hours to help you identify people on the other side with whom you might find compromise: polarizationlab.com/our-bots

What are the issues on social media where there is room for compromise? Our issue tracker follows terms that both Republicans and Democrats are discussing on Twitter, and analyzes the text of these posts to see whether the two parties share the same sentiment about them: polarizationlab.com/issue-tracker

What about the trolls? Use our Troll-o-meter to learn how to identify the characteristics of online extremists and monitor the types of language that they use: polarizationlab.com/troll-o-meter.

Finally, what about you? Learn what your posts say about your politics and how your offline views compare to your online behavior using these tools: polarizationlab.com/tweet-ideology & polarizationlab.com/ideologyquiz

You can also use our tools to identify whether you are in an echo chamber. But make sure to read our research that suggests stepping outside your echo chamber can also be counter-productive if not done properly: pnas.org/content/pnas/1… (ungated)

We’re also developing a Polarization Pen Pal Network to connect real social media users with opposing views, since research shows brief conversations among non-elite people can have strong depolarizing effects:

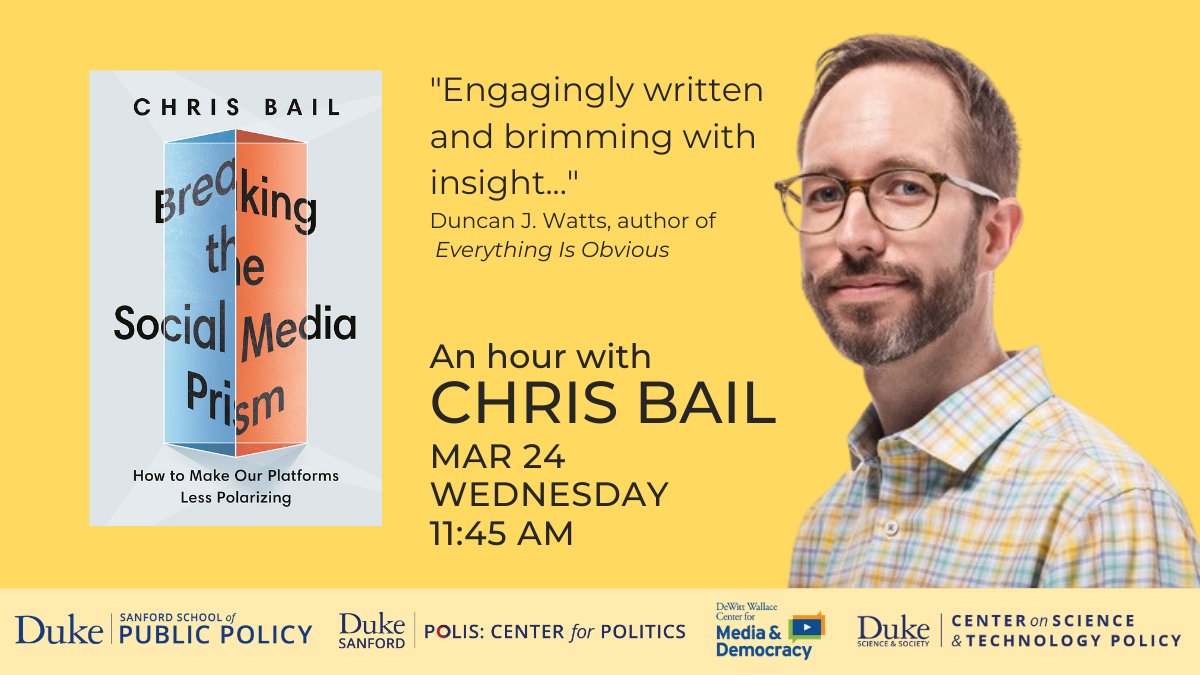

To learn more about these apps and how we can create a bottom-up movement to fight polarization, read the first chapter of my new book, Breaking the Social Media Prism: bit.ly/38Ozzrt. If you like it, support the indie booksellers linked here: bit.ly/2OBlsip.

Or join me at one of my upcoming public lectures about the book and the new technology described above, listed here: bit.ly/3qUQONX. They include a free event at @DukeU tomorrow [registration required].

If you want to get updates about the new technology we create to improve political discussions on social media, subscribe to our mailing list here: polarizationlab.com/subscribe

If you have ideas about how to make these tools better, reach out: polarizationlab.com/contact-us. We try hard to translate insights from research into actionable tools, but this is easier said than done. We want to make academia more open and transparent, for the benefit of all.
