Duke professor directing https://t.co/U2L73v1dpE, https://t.co/fQ330fVoR7 & https://t.co/tVyZCAm6A9; author of Breaking the Social Media Prism.



https://twitter.com/AlecStapp/status/1523270604891123713

2/ People who answered this question about their confidence in the scientific community had only four choices: "1) A great deal; 2) Only some; 3) Hardly any; 4) Don't know." People who had significant trust in science, but not a great deal, had no valid response category.

https://twitter.com/DG_Rand/status/1514613743388278793

https://twitter.com/elonmusk/status/1516483038242385928

pewresearch.org/politics/2019/…

https://twitter.com/emilymbender/status/1518580576768327680

Perhaps she is right that my thread was too "hype-y." I was mostly excited because I have seen so few examples of ML applied to human tasks that work so well. In any case, I encourage folks to read her thread (and @elicitorg's response as well).

https://twitter.com/Nature/status/1508387600595308548

2/ I’m worried we’ve simply accepted the status quo, especially because most of our current platforms were never designed to be democracy’s public square.

2/4 Though many 2022 #SICSS locations hope to run in person, some will be virtual institutes at a variety of exciting institutions as well. For a full list of sites, see sicss.io, where details about each institute will be posted in the very near future.

There is more research/work to be done (especially with experimental designs and on other platforms), but this is the most comprehensive and careful analysis I've yet seen, by @homahmrd @aaronclauset @duncanjwatts @markusmobius @DavMicRot and Amir Ghasemian.

Back in 2017 our Polarization Lab surveyed 1,220 people about their political views and paid half of them to follow bots that exposed them to opposing political views for one month. Unfortunately, stepping outside the echo chamber made people more polarized, not less.

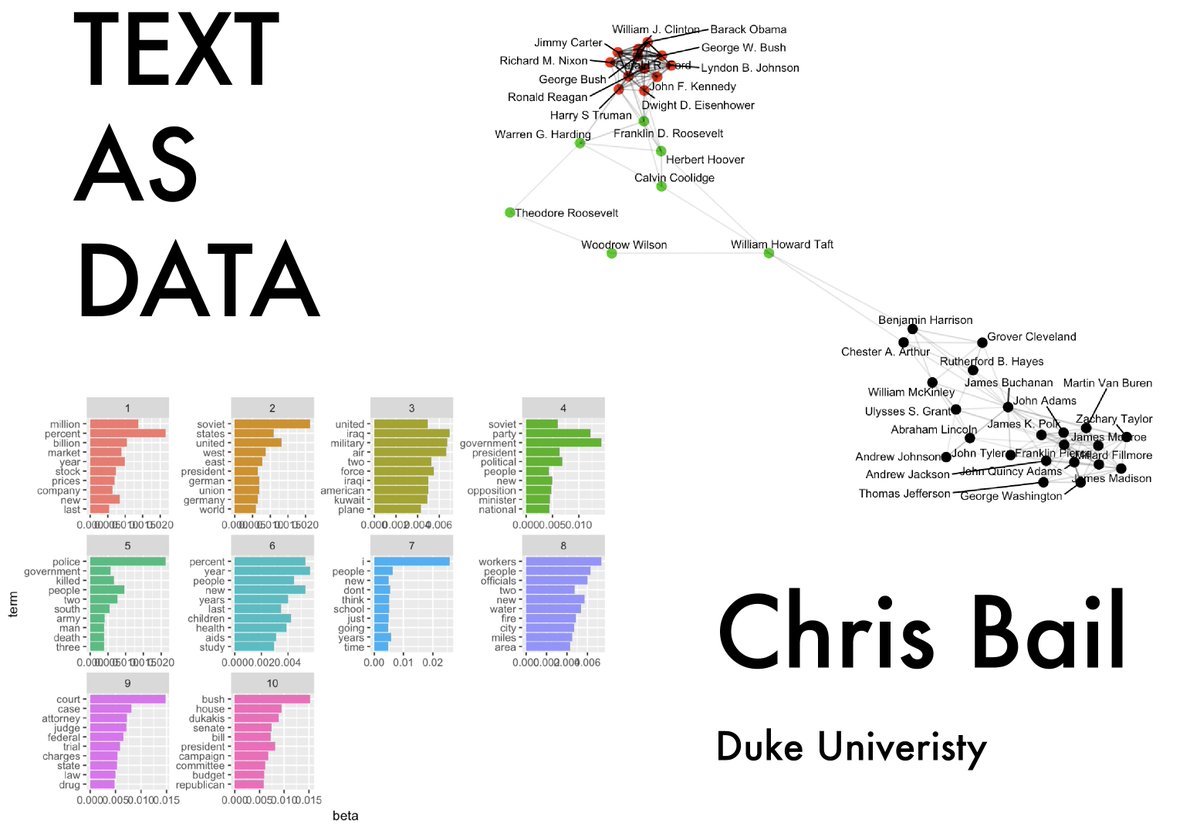

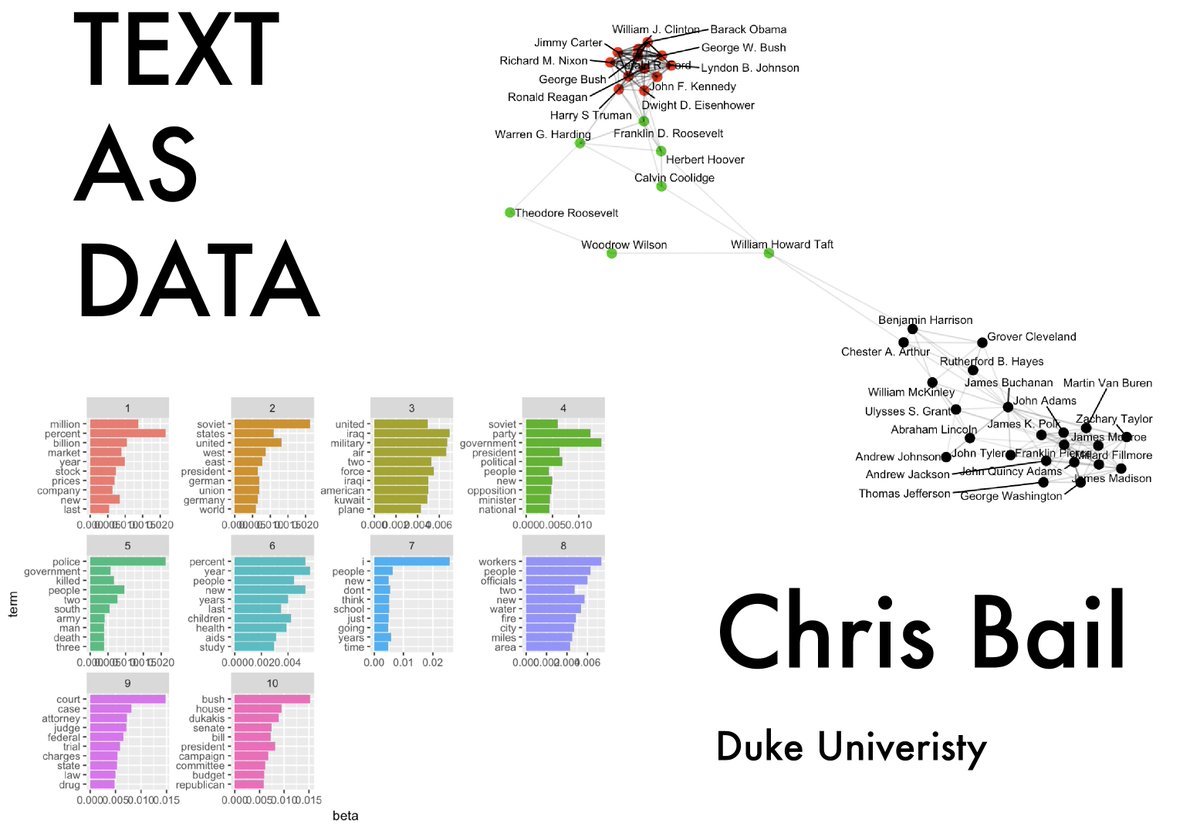

2/n These videos cover a range of topics, including ethics, text analysis, digital field experiments, and mass collaboration. Only the first few days of material are up now, but more will be added soon.

2/5 The course website (above) includes tutorials on a range of subjects with annotated R code. The class assumes basic knowledge of R and describes the techniques we use in the @polarization lab to run studies like these: pnas.org/content/115/37… and pnas.org/content/113/42…